Code LLMs Explained

CodeBERT

CodeBERT is a pre-trained model developed by Microsoft Research, designed to understand code in multiple programming languages as well as natural language text, and to support generation tasks such as code documentation. It is based on the BERT (Bidirectional Encoder Representations from Transformers) architecture, a transformer-based encoder known for its success in natural language understanding tasks.


An Overview of CodeBERT


Achieved 0.428 MAP

On a dataset of 100K Java methods, CodeBERT achieved a mean average precision (MAP) of 0.428, significantly higher than the previous state-of-the-art model.

SOTA performance

According to the reported results, CodeBERT achieves state-of-the-art (SOTA) performance in both natural language code search and code documentation generation.

6 programming languages

CodeBERT is the first large NL-PL pre-trained model covering multiple programming languages (six in total). On NL-PL probing, the results show that CodeBERT outperforms previous pre-trained models.


About Model

CodeBERT is a model created by Microsoft Research and pre-trained on both natural language text and source code in several programming languages. It builds on the BERT architecture, a transformer-based encoder with strong performance on natural language processing tasks, so it produces contextual representations of code in much the same way BERT does for text. Once fine-tuned, these representations support tasks such as code retrieval and code summarization; generation tasks additionally require pairing the encoder with a decoder. Because it handles several programming languages, CodeBERT is a useful tool for software developers, researchers, and practitioners who work with code in different languages.

Model Highlights

CodeBERT is a pre-trained model based on the successful transformer-based BERT architecture, adapted for programming and natural language tasks.

- CodeBERT is a bimodal pre-trained model for natural language (NL) and programming language (PL).

- It is useful for downstream NL-PL applications such as natural language code search and code documentation generation.

- CodeBERT employs a Transformer-based neural architecture and a hybrid objective function that incorporates the replaced token detection pre-training task.

- The hybrid objective lets training use both "bimodal" data of NL-PL pairs, which supply input tokens for model training, and "unimodal" data, which helps learn better generators.

- CodeBERT achieves state-of-the-art performance in natural language code search and documentation generation.

- On NL-PL probing, the results show that CodeBERT outperforms previous pre-trained models.

Training Details

Training data

CodeBERT is trained on a large-scale dataset of 2.1 million bimodal data points (code paired with natural language documentation) and 6.4 million unimodal code samples across six programming languages (Python, Java, JavaScript, PHP, Ruby, and Go). The data is derived from publicly accessible open-source GitHub repositories.

Training Procedure

CodeBERT is pre-trained across modalities: it uses both bimodal NL-PL pairs and unimodal data (code without paired text, or text without paired code). Pre-training covers six programming languages and adds a learning objective based on replaced token detection.
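To make the bimodal versus unimodal distinction concrete, here is a minimal sketch of how the two kinds of input could be laid out as a single token sequence. The special-token names and the `build_input` helper are illustrative assumptions, not the project's actual preprocessing code; a real tokenizer defines and inserts its own special tokens.

```python
from typing import List, Optional

# Placeholder special tokens, assumed for illustration only.
CLS, SEP, EOS = "[CLS]", "[SEP]", "[EOS]"


def build_input(code_tokens: List[str],
                nl_tokens: Optional[List[str]] = None) -> List[str]:
    """Concatenate an optional NL segment and a code segment into one sequence."""
    nl_part = nl_tokens or []  # unimodal code has no paired text
    return [CLS, *nl_part, SEP, *code_tokens, EOS]


code = ["def", "add", "(", "a", ",", "b", ")"]

# Bimodal data point: documentation paired with code.
bimodal = build_input(code, ["add", "two", "numbers"])

# Unimodal data point: code only (hypothetical layout with an empty NL segment).
unimodal = build_input(code)

print(bimodal)
print(unimodal)
```

The only difference between the two cases in this sketch is whether the natural language segment is populated.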

Training hyperparameters and hardware

CodeBERT was trained with a batch size of 2048 and a learning rate of 5e-4. Training used FP16 on a single NVIDIA DGX-2 machine, which combines 16 interconnected NVIDIA Tesla V100 GPUs with 32GB of memory each.

Training time and resources

Training 1,000 batches of data takes 600 minutes with the MLM objective and 120 minutes with the RTD objective. The maximum sequence length is set to 512 tokens, and the maximum number of training steps is set to 100K.
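As a rough back-of-the-envelope illustration, the figures above can be turned into an upper-bound wall-clock estimate. This sketch assumes, hypothetically, that all 100K steps run at the quoted per-1,000-batch rate for a single objective; the source does not state how the steps were actually split between objectives, so treat the numbers as an estimate only.

```python
# Rates quoted in the text: minutes needed per 1,000 batches.
MINUTES_PER_1000_BATCHES = {"MLM": 600, "RTD": 120}
MAX_STEPS = 100_000  # maximum training steps quoted in the text


def estimated_days(minutes_per_1000: float, steps: int = MAX_STEPS) -> float:
    """Convert a per-1,000-batch rate into total days for `steps` batches."""
    total_minutes = minutes_per_1000 * steps / 1000
    return total_minutes / (60 * 24)


for objective, minutes in MINUTES_PER_1000_BATCHES.items():
    print(f"{objective}: ~{estimated_days(minutes):.1f} days")
```

Under that assumption, MLM works out to 60,000 minutes (about 41.7 days) and RTD to 12,000 minutes (about 8.3 days).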

Model Types

CodeBERT variants differ in the pre-training objective used to learn representations of source code and natural language. The table below lists the three variants and their objectives.

| Model | Pre-training objective | Highlight |
| --- | --- | --- |
| CodeBERT (RTD) | Replaced Token Detection (RTD) | Replaces some tokens with plausible alternatives and trains the model to detect which tokens were replaced. |
| CodeBERT (MLM) | Masked Language Modeling (MLM) | Randomly masks some tokens and predicts them from the context of the remaining unmasked tokens. |
| CodeBERT (MLM+RTD) | Both MLM and RTD | Combines the MLM and RTD objectives for more effective learning and better performance on downstream tasks. |

Key Results

The researchers constructed a dataset for NL-PL probing to investigate the knowledge learned by CodeBERT. The results show that CodeBERT performs better than previous pre-trained models on NL-PL probing.

| Task | Dataset or metric | Score |
| --- | --- | --- |
| Natural language code retrieval | CodeSearchNet | 76 |
| PL probing | CodeSearchNet | 85.66 |
| PL probing (preceding context only) | CodeSearchNet | 59.12 |
| NL probing | CodeSearchNet | 74.53 |
| Code-to-documentation generation | BLEU-4 | 17.83 |
| Code-to-NL generation | BLEU | 22.36 |

Model Features

CodeBERT is a bimodal pre-trained model for natural language (NL) and programming language (PL). It learns general-purpose representations that support downstream NL-PL applications such as natural language code search and code documentation generation.

Contextual representations

CodeBERT has been pre-trained on a large corpus of source code and natural language text, allowing it to learn contextual representations that capture the meaning and syntax of both. As a result, the model can perform well on downstream tasks without needing a large amount of task-specific training data.

Masked Language Modeling

As one of its pre-training tasks, CodeBERT is trained to recover tokens that have been masked in an input sequence. The model is given a sequence in which a random subset of the tokens has been replaced with [MASK] tokens, and it must predict the original tokens. This task helps the model learn context-specific representations of code and natural language.
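The masking step can be sketched in a few lines. This is a simplified illustration, not CodeBERT's training code: the `mask_tokens` helper, the whitespace tokenization, and the 15% masking rate (the usual BERT convention) are assumptions made for the example.

```python
import random
from typing import List, Tuple

MASK = "[MASK]"


def mask_tokens(tokens: List[str], mask_prob: float = 0.15,
                seed: int = 0) -> Tuple[List[str], List[int]]:
    """Replace a random subset of tokens with [MASK]; return the result and positions."""
    rng = random.Random(seed)
    masked = list(tokens)
    positions = [i for i in range(len(tokens)) if rng.random() < mask_prob]
    if not positions:
        # Guarantee at least one masked position in this toy example.
        positions = [rng.randrange(len(tokens))]
    for i in positions:
        masked[i] = MASK
    return masked, positions


tokens = "def max ( a , b ) : return a if a > b else b".split()
masked, positions = mask_tokens(tokens)

# The training targets are the original tokens at the masked positions.
targets = {i: tokens[i] for i in positions}

print(masked)
print(targets)
```

During pre-training the model sees `masked` as input and is scored on how well it predicts `targets`.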

Replaced Token Detection (RTD)

CodeBERT uses RTD as another pre-training task to learn contextual representations. Some tokens in the input sequence are replaced with plausible alternatives produced by generator models, and CodeBERT is trained to decide, for every token, whether it is the original or a replacement. Because the model must judge each position rather than only the masked ones, this task helps it reason about code and natural language in a more fine-grained way.
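A minimal sketch of how per-token RTD training labels can be derived from an original sequence and a corrupted copy. The `rtd_labels` helper and the hand-written corrupted sequence are illustrative assumptions; in actual pre-training the replacements come from learned generators rather than being written by hand.

```python
from typing import List


def rtd_labels(original: List[str], corrupted: List[str]) -> List[int]:
    """Label each position 1 if the token is the original, 0 if it was replaced."""
    if len(original) != len(corrupted):
        raise ValueError("sequences must have the same length")
    return [int(o == c) for o, c in zip(original, corrupted)]


original = ["return", "a", "if", "a", ">", "b", "else", "b"]
# Hand-made corruption: positions 3 and 4 were swapped for plausible alternatives.
corrupted = ["return", "a", "if", "b", "<", "b", "else", "b"]

labels = rtd_labels(original, corrupted)
print(labels)  # [1, 1, 1, 0, 0, 1, 1, 1]
```

The model receives `corrupted` as input and is trained as a binary classifier over every position, which is why RTD yields a training signal for all tokens rather than only the masked ones.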

Model Tasks

CodeBERT is an advanced pre-trained model developed by Microsoft Research that can perform a wide range of natural language processing (NLP) tasks related to source code.

Natural Language Code Retrieval

CodeBERT can be fine-tuned to retrieve relevant code snippets for a natural language query. Fine-tuning teaches the model to score how well a query matches a candidate snippet, so that the most relevant snippets can be ranked first in the search results.
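The ranking step of code retrieval can be sketched with plain cosine similarity over embedding vectors. Note the assumptions: this swaps in a simple embedding-similarity approach for illustration rather than reproducing the fine-tuning setup, and the three-dimensional vectors below are made-up stand-ins for real encoder outputs. The helper names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rank_snippets(query_vec: Sequence[float],
                  snippet_vecs: Dict[str, Sequence[float]]) -> List[Tuple[str, float]]:
    """Return (snippet name, score) pairs sorted from most to least similar."""
    scored = [(name, cosine(query_vec, vec)) for name, vec in snippet_vecs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy embeddings; in practice these would come from the encoder.
snippet_vecs = {
    "read_file": [0.9, 0.1, 0.0],
    "sort_list": [0.1, 0.8, 0.3],
    "http_get": [0.0, 0.2, 0.9],
}
query_vec = [0.2, 0.9, 0.2]  # stands in for the query "sort a list of numbers"

ranking = rank_snippets(query_vec, snippet_vecs)
print(ranking[0][0])  # sort_list
```

Precomputing snippet embeddings once and comparing them to each query vector keeps search cheap, at the cost of not letting the model attend jointly over the query and the code.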

PL Probing

PL probing tests what CodeBERT has learned about programming languages without any fine-tuning. It is framed as a multiple-choice cloze task: a token in a code snippet is masked, and the model, with its pre-trained parameters fixed, must pick the correct token from a small set of candidates. Accuracy on this task indicates how well the learned representations capture the syntactic and semantic structure of code.

NL Probing

NL probing applies the same zero-shot, multiple-choice setup to the natural language side: a word in the documentation that accompanies a code snippet is masked, and the model must select the correct word from a set of candidates. Accuracy on this task indicates how well the learned representations capture the meaning of natural language text in the context of code.

Code-to-Documentation Generation

CodeBERT can be fine-tuned to generate comments or documentation for a code snippet. Because CodeBERT itself is an encoder, this involves pairing it with a decoder and training the combined model to produce natural language descriptions of code, which are useful for developers working with that code.

Code-to-NL generation

CodeBERT can also be fine-tuned to generate natural language descriptions of a given code snippet more generally. This task teaches the model to translate programming language code into natural language, which is useful for tasks such as code summarization.

Fine-tuning

CodeBERT was evaluated on two NL-PL applications by fine-tuning its model parameters. The results show that CodeBERT achieves state-of-the-art performance in both natural language code search and code documentation generation. Additionally, to investigate the type of knowledge learned by CodeBERT, a dataset for NL-PL probing was created, and the model was evaluated in a zero-shot setting with fixed pre-trained model parameters. The results indicate that CodeBERT outperforms previous pre-trained models on NL-PL probing.

Benchmark Results

Benchmarking is an important step in evaluating any language model, including CodeBERT. The key results are:

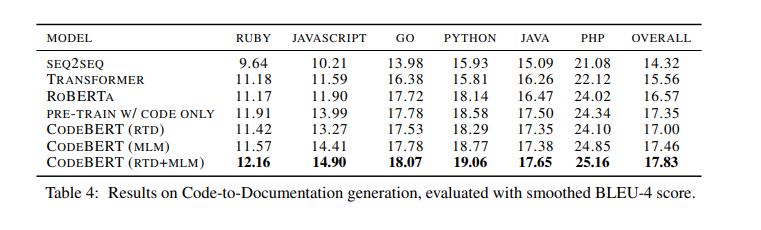

Table 4 shows the results of different models on the code-to-documentation generation task. Models pre-trained on programming languages outperform RoBERTa, which indicates that pre-training on programming languages improves code-to-NL generation. In addition, CodeBERT pre-trained with both the RTD and MLM objectives gains 1.3 BLEU points over RoBERTa overall and achieves state-of-the-art performance.

Sample Codes

Running the model on a CPU

# Import necessary libraries
import torch
from transformers import AutoTokenizer, AutoModel

# Model name on the Hugging Face Hub. CodeBERT is an encoder, so this example
# extracts an embedding; producing summaries would need an added, fine-tuned decoder.
model_name = "microsoft/codebert-base"

# Initialize the tokenizer and model
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModel.from_pretrained(model_name).cpu()  # Ensure the model runs on CPU
model.eval()

# Input code snippet (Python code)
code_snippet = """
def factorial(n):
    if n == 0:
        return 1
    else:
        return n * factorial(n - 1)
"""

# Tokenize the snippet, truncating to the 512-token limit
inputs = tokenizer(code_snippet, return_tensors="pt", truncation=True, max_length=512)

# Run the encoder without tracking gradients
with torch.no_grad():
    outputs = model(**inputs)

# Use the first token's hidden state as an embedding of the whole snippet
embedding = outputs.last_hidden_state[:, 0, :]

# Print the shape of the embedding
print(embedding.shape)

Running the model on GPU

# Import necessary libraries
import torch
from transformers import AutoTokenizer, AutoModel

# Check for GPU availability and set device
if torch.cuda.is_available():
    device = torch.device("cuda")
else:
    raise RuntimeError("No GPU found. Please ensure you have a compatible GPU and CUDA libraries installed.")

# Model name on the Hugging Face Hub. CodeBERT is an encoder, so this example
# extracts an embedding; producing summaries would need an added, fine-tuned decoder.
model_name = "microsoft/codebert-base"

# Initialize the tokenizer and model
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModel.from_pretrained(model_name).to(device)  # Ensure the model runs on GPU
model.eval()

# Input code snippet (Python code)
code_snippet = """
def factorial(n):
    if n == 0:
        return 1
    else:
        return n * factorial(n - 1)
"""

# Tokenize the snippet, truncate to the 512-token limit, and move tensors to the GPU
inputs = tokenizer(code_snippet, return_tensors="pt", truncation=True, max_length=512).to(device)

# Run the encoder without tracking gradients
with torch.no_grad():
    outputs = model(**inputs)

# Use the first token's hidden state as an embedding, moved back to the CPU
embedding = outputs.last_hidden_state[:, 0, :].cpu()

# Print the shape of the embedding
print(embedding.shape)

Model Limitations

- CodeBERT, like many other transformer-based language models, has a limit on the number of tokens it can process in one pass. The default maximum length of input sequences for CodeBERT is 512 tokens. Any input sequence longer than 512 tokens must be truncated or split into multiple sequences for processing.
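One common way to handle inputs longer than the limit is to split the token sequence into overlapping windows and process each window separately. The sketch below is a generic illustration; the `split_into_windows` helper and the overlap of 64 tokens are assumptions, not part of CodeBERT itself.

```python
from typing import List


def split_into_windows(token_ids: List[int], max_len: int = 512,
                       overlap: int = 64) -> List[List[int]]:
    """Split a long token sequence into windows of at most max_len tokens.

    Consecutive windows share `overlap` tokens so that context at the
    window boundaries is not lost entirely.
    """
    if not 0 <= overlap < max_len:
        raise ValueError("overlap must be in [0, max_len)")
    step = max_len - overlap
    windows = []
    for start in range(0, len(token_ids), step):
        windows.append(token_ids[start:start + max_len])
        if start + max_len >= len(token_ids):
            break  # the last window already reaches the end of the input
    return windows


token_ids = list(range(1200))  # stand-in for a 1,200-token input
windows = split_into_windows(token_ids)
print([len(w) for w in windows])  # [512, 512, 304]
```

In practice the special tokens added by the tokenizer also count toward the 512-token budget, so the usable window size is slightly smaller than `max_len`.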
