
PolyCoder

PolyCoder is an open-source language model of code. It is a GPT-2-style, decoder-only transformer trained on source code in 12 programming languages to complete and generate code.


An Overview of PolyCoder


PolyCoder was trained on 249 GB of code from 12 programming languages, from which it learns the patterns it uses to complete and generate code.

The largest PolyCoder model has 2.7 billion parameters; smaller versions with 160 million and 400 million parameters are also available.

PolyCoder's training code was collected from GitHub repositories with at least 50 stars.

About Model

PolyCoder is an open-source language model of code introduced in the paper "A Systematic Evaluation of Large Language Models of Code". It is a decoder-only transformer based on the GPT-2 architecture, trained with a self-supervised next-token prediction objective on 249 GB of source code in 12 programming languages. A single model covers all 12 languages, so patterns learned in one language can carry over to the others. In the authors' experiments, PolyCoder reaches lower perplexity in C than all the models they compared, including Codex, while trailing Codex on the HumanEval Python benchmark.

Model Highlights

The main points about PolyCoder are:

- PolyCoder is a transformer-based language model of source code, intended for code completion and generation. It is trained on 249GB of code across 12 programming languages.

- The paper systematically evaluates PolyCoder alongside other large language models of code, measuring perplexity in each of the 12 languages (C, C#, C++, Go, Java, JavaScript, PHP, Python, Ruby, Rust, Scala, and TypeScript) and functional correctness on the HumanEval benchmark.

- PolyCoder is built on the GPT-2 architecture, and its largest version has 2.7 billion parameters. The authors use a single model to handle all programming languages, enabling the model to learn shared patterns and structures across different languages.

- PolyCoder's trained models are open-source and publicly available, enabling future research and applications in AI-assisted programming and code generation.
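The multi-language comparison relies on perplexity: the exponential of the average negative log-likelihood the model assigns to each actual next token of held-out code (lower is better). A minimal sketch, assuming the per-token natural-log probabilities have already been obtained from a causal language model; `perplexity` is an illustrative helper, not part of any PolyCoder tooling.

```python
import math
from typing import Sequence


def perplexity(token_logprobs: Sequence[float]) -> float:
    """Perplexity = exp(mean negative log-likelihood) over the scored tokens.

    `token_logprobs` holds natural-log probabilities log p(x_t | x_<t) that a
    causal language model assigned to each actual next token.
    """
    if not token_logprobs:
        raise ValueError("need at least one scored token")
    mean_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(mean_nll)


# A model that gives every true next token probability 0.5 has perplexity 2.
print(perplexity([math.log(0.5)] * 4))
```

Because perplexity depends on the tokenizer, it is most meaningful when comparing models that share a tokenization or when normalized carefully.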

Training Details

Training data

The authors collected publicly available source code from GitHub in 12 programming languages, taking repositories with at least 50 stars and stopping at about 25K repositories per language to avoid skewing the data toward the most popular languages. From each project they kept the files written in the project's majority language, giving an unfiltered training set of 38.9 million files.

Training Procedure

The authors chose the GPT-2 architecture to fit their limited compute budget and trained three models of different sizes, varying the number of parameters, layers, and hidden dimensions. The largest model, with 2.7 billion parameters, was trained for 150K steps with a batch size of 128 sequences.
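To see how layers and hidden dimension drive model size, the sketch below counts the parameters of a GPT-2-style decoder with tied input/output embeddings and learned position embeddings. It is an estimate under those assumptions only: PolyCoder was trained with GPT-NeoX, whose details (such as positional encoding or embedding tying) may differ, and the exact PolyCoder configurations are in the paper. `approx_gpt2_params` is an illustrative helper.

```python
def approx_gpt2_params(n_layers: int, d_model: int, vocab_size: int, n_ctx: int) -> int:
    """Parameter count of a GPT-2-style decoder (tied embeddings, learned positions)."""
    attn = 4 * d_model * d_model + 4 * d_model   # Q, K, V and output projections + biases
    mlp = 8 * d_model * d_model + 5 * d_model    # d -> 4d -> d feed-forward + biases
    norms = 4 * d_model                          # two LayerNorms per block (scale + shift)
    per_layer = attn + mlp + norms               # = 12*d^2 + 13*d
    embeddings = vocab_size * d_model + n_ctx * d_model  # token + position embeddings
    final_norm = 2 * d_model                     # LayerNorm after the last block
    return n_layers * per_layer + embeddings + final_norm


# Sanity check against the published GPT-2 small configuration (12 layers, width 768).
print(approx_gpt2_params(12, 768, 50257, 1024))  # 124439808
```

The per-layer cost grows with the square of the hidden dimension, so widening the model inflates the parameter count much faster than adding layers.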

Training dataset size

The authors filtered out very large and very short files from their dataset, reducing its size by 33%. Deduplication further reduced the number of files by almost 30% and the dataset size by 29%, leaving 24.1M files and 254GB of data.
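The two cleaning steps above can be sketched as a size filter followed by exact-match deduplication on a hash of each file's contents. This is a minimal illustration, not the authors' pipeline: the thresholds `MAX_BYTES` and `MIN_CHARS` are hypothetical, and hashing whole file contents is one common way to find exact duplicates.

```python
import hashlib
from typing import Iterable, List, Set, Tuple

# Hypothetical thresholds for illustration only; not the paper's values.
MAX_BYTES = 1_000_000
MIN_CHARS = 100


def filter_and_dedup(files: Iterable[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Drop very large or very short files, then keep one copy of each exact duplicate."""
    seen: Set[str] = set()
    kept: List[Tuple[str, str]] = []
    for path, content in files:
        data = content.encode("utf-8")
        if len(data) > MAX_BYTES or len(content) < MIN_CHARS:
            continue  # size filter
        digest = hashlib.sha256(data).hexdigest()
        if digest in seen:
            continue  # identical to a file we already kept
        seen.add(digest)
        kept.append((path, content))
    return kept


body = "x = 1\n" * 30
files = [("a.py", body), ("b.py", body), ("c.py", "x = 1\n"), ("d.py", body + "y = 2\n")]
print([path for path, _ in filter_and_dedup(files)])
```

Here `b.py` is dropped as a duplicate of `a.py`, `c.py` is too short, and `d.py` survives because one extra line changes its hash. Near-duplicate detection would need a fuzzier method.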

Training time and resources

The authors trained the models with the GPT-NeoX toolkit, which parallelized training efficiently across 8 NVIDIA RTX 8000 GPUs on a single machine. Training the largest, 2.7B model on this setup took about 6 weeks of wall-clock time.

Model Types

PolyCoder comes in three sizes that share the same GPT-2-based architecture and differ in depth and width.

| Model | Parameters | Highlight |
|---|---|---|
| PolyCoder (160M) | 160 million | Smallest variant; the fastest and cheapest to run |
| PolyCoder (400M) | 400 million | Mid-sized variant, between the other two in cost and capacity |
| PolyCoder (2.7B) | 2.7 billion | Largest and most capable variant; the HumanEval scores below are for this model |

Key results

The largest PolyCoder model (2.7B) reaches the following pass@k scores on HumanEval, a benchmark of Python programming problems checked by unit tests.

| Metric | Benchmark | Score (%) |
|---|---|---|
| pass@1 | HumanEval | 5.59 |
| pass@10 | HumanEval | 9.84 |
| pass@100 | HumanEval | 17.68 |
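pass@k is the probability that at least one of k generated samples passes a problem's unit tests. It is usually computed with the unbiased estimator from the Codex paper: generate n ≥ k samples per problem, count the c that pass, estimate 1 − C(n−c, k) / C(n, k), and average over problems. The sketch assumes that standard estimator; the number of samples n behind the scores above is not stated here, and the helper names are illustrative.

```python
from math import comb
from typing import Sequence, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased estimate of pass@k for one problem.

    n: samples generated, c: samples that passed the tests, 1 <= k <= n.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: Sequence[Tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, given one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Two problems, 10 samples each: one sample passes on the first, none on the second.
print(mean_pass_at_k([(10, 1), (10, 0)], k=1))
```

Using the combinatorial form instead of simply sampling k outputs reduces variance, which is why n is typically larger than k.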

Model Features

PolyCoder's design rests on the following features.

Decoder-only Transformer

PolyCoder has no separate encoder: it is a single stack of transformer decoder blocks that reads code left to right and predicts each token from the tokens before it.

GPT-2 architecture

PolyCoder is built on the GPT-2 architecture, a transformer trained to predict the next token. Applied to source code instead of natural-language text, this objective lets the model learn the patterns and long-range dependencies of programs, which it uses for code autocompletion.
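GPT-2-style decoders generate left to right, which is enforced by a causal mask in self-attention: the token at position i may attend only to positions 0..i. The pure-Python sketch below shows just the masking and softmax step for one attention head, assuming a square matrix of raw query-key scores; real implementations do this with tensors, and `causal_attention_weights` is an illustrative name.

```python
import math
from typing import List


def causal_attention_weights(scores: List[List[float]]) -> List[List[float]]:
    """Apply a causal mask to square attention scores, then softmax each row.

    Row i is the query at position i; entries j > i (future tokens) are masked
    out, so position i attends only to positions 0..i.
    """
    weights = []
    for i, row in enumerate(scores):
        visible = row[: i + 1]            # keys at positions 0..i
        m = max(visible)                  # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in visible]
        total = sum(exps)
        weights.append([e / total for e in exps] + [0.0] * (len(row) - i - 1))
    return weights


# With equal scores, position 2 spreads its attention evenly over positions 0, 1, 2.
w = causal_attention_weights([[0.0] * 4 for _ in range(4)])
print(w[2])
```

Because future positions receive zero weight, the same forward pass can be trained on every prefix of a file at once.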

Multilingual Capacity

A single PolyCoder model is trained on all 12 programming languages at once, so it can complete and generate code in any of them instead of needing one model per language.

Model Tasks

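PolyCoder can be applied to several code-related tasks. The paper evaluates it on language modeling of code (perplexity) and on generating functions from natural-language descriptions (HumanEval); the other tasks below are possible applications that the paper does not benchmark.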

Code completion

This task involves predicting the code a programmer is most likely to type next, given the code written so far. PolyCoder predicts the most probable continuation token by token, which can be used to complete the current line or block.
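Token-by-token completion can be pictured as a loop that asks the model for next-token scores and appends a choice until an end token or a length limit. A minimal greedy-decoding sketch, assuming a hypothetical `next_token_logits` callable that stands in for the model; sampling and beam search are common alternatives to always taking the top token.

```python
from typing import Callable, List, Sequence


def greedy_complete(
    next_token_logits: Callable[[List[int]], Sequence[float]],
    prompt_ids: List[int],
    max_new_tokens: int,
    eos_id: int,
) -> List[int]:
    """Extend prompt_ids one token at a time, always taking the highest-scoring token."""
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):
        logits = next_token_logits(ids)
        next_id = max(range(len(logits)), key=lambda i: logits[i])  # argmax
        ids.append(next_id)
        if next_id == eos_id:
            break  # stop at the end-of-sequence token
    return ids


# Toy stand-in "model" over a 4-token vocabulary: it always prefers (last token + 1) mod 4.
def toy_logits(ids: List[int]) -> List[float]:
    scores = [0.0] * 4
    scores[(ids[-1] + 1) % 4] = 1.0
    return scores


print(greedy_complete(toy_logits, [0], max_new_tokens=10, eos_id=3))  # [0, 1, 2, 3]
```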

Synthesizing code from natural language descriptions

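This task involves generating code from a natural language description of what the code should do, such as a function signature followed by a docstring. PolyCoder was trained on code only, so it sees natural language mainly through comments and docstrings, and its output is not guaranteed to be syntactically valid or semantically correct; generated code should be checked, for example with unit tests.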
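HumanEval poses each problem as a Python function signature followed by a docstring, and the model writes the function body. A minimal sketch of building a prompt in that shape; `make_prompt` is a hypothetical helper, not part of any PolyCoder or HumanEval tooling.

```python
def make_prompt(signature: str, description: str) -> str:
    """Return a function signature followed by a docstring, ready for the model to complete."""
    lines = description.strip().splitlines()
    doc = "\n".join(("    " + line) if line.strip() else "" for line in lines)
    return f'{signature}\n    """\n{doc}\n    """\n'


prompt = make_prompt("def add(a: int, b: int) -> int:", "Return the sum of a and b.")
print(prompt)
```

The model's continuation is appended directly after the closing docstring quotes, so the prompt ends with a newline to start the body on its own line.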

Program generation

This task involves generating entire programs from scratch based on a high-level specification or natural language description of what the program should do. PolyCoder can attempt such longer generations, but the output is not guaranteed to be syntactically valid or semantically correct, so generated programs should be checked before use.
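A cheap first check on generated Python programs is to parse them. The sketch uses the standard-library `ast` module; it only detects syntax errors, and semantic correctness still has to be tested by running the code, as HumanEval does.

```python
import ast


def is_valid_python(source: str) -> bool:
    """Return True if `source` parses as Python; says nothing about runtime behavior."""
    try:
        ast.parse(source)
    except (SyntaxError, ValueError):  # ValueError covers e.g. null bytes on some versions
        return False
    return True


print(is_valid_python("def f(x):\n    return x * 2\n"))  # True
print(is_valid_python("def f(x:\n    return x\n"))      # False
```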

Code classification

This task involves classifying code into categories based on its functionality, purpose, or domain, such as data processing, machine learning, or web development. PolyCoder is a generative model rather than a classifier, so using it for this task would require adapting it, for example by fine-tuning on labeled snippets.

Code commenting

This task involves generating natural language comments that explain what the code does, its purpose, and how it works. Because comments are part of the code PolyCoder was trained on, it can be prompted to write them, although the paper does not measure the quality of such comments.

Fine-tuning

Unlike Codex, which offers paid access to its outputs only through black-box API calls and keeps its weights and training data unavailable, PolyCoder's trained models are publicly released. Researchers can therefore fine-tune and adapt PolyCoder to domains and tasks beyond code completion. Fine-tuning methods for PolyCoder on other tasks will be added to this section soon.

Benchmark Results

The paper benchmarks PolyCoder against other code language models on HumanEval. The figure below shows how performance scales with model size:

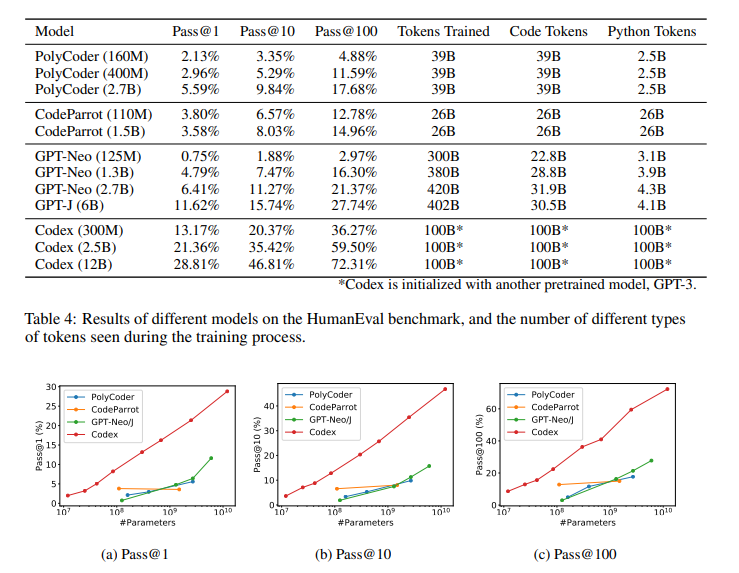

How HumanEval performance scales with model size across different models

Sample Codes

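Running the model on a CPU

import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

# Load the tokenizer and model. PolyCoder checkpoints (160M, 0.4B and 2.7B)
# are published on the Hugging Face Hub; verify the exact name there.
model_name = 'NinedayWang/PolyCoder-2.7B'
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name)

# Keep the model and inputs on the CPU
device = torch.device('cpu')
model.to(device)
model.eval()

# Encode a code prompt
prompt = 'def binary_search(arr, x):\n'
input_ids = tokenizer.encode(prompt, return_tensors='pt').to(device)

# Generate a completion (no gradients are needed for inference)
with torch.no_grad():
    output_ids = model.generate(input_ids, max_new_tokens=64, do_sample=False)

# Decode and print the prompt followed by the generated code
print(tokenizer.decode(output_ids[0], skip_special_tokens=True))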

Running the model on a GPU

import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

# Load the tokenizer and model (verify the checkpoint name on the Hugging Face Hub)
model_name = 'NinedayWang/PolyCoder-2.7B'
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name)

# Move the model to the GPU
device = torch.device('cuda')
model.to(device)
model.eval()

# Encode a code prompt and move it to the same device as the model
prompt = 'def binary_search(arr, x):\n'
input_ids = tokenizer.encode(prompt, return_tensors='pt').to(device)

# Generate a completion (no gradients are needed for inference)
with torch.no_grad():
    output_ids = model.generate(input_ids, max_new_tokens=64, do_sample=False)

# Move the generated token IDs back to the CPU, then decode and print them
output_ids = output_ids.cpu()
print(tokenizer.decode(output_ids[0], skip_special_tokens=True))

Model Limitations

- PolyCoder was not designed specifically for solving programming problems, and it scores lower than models such as Codex on benchmarks like HumanEval.

- Codex was initialized from GPT-3, which was pre-trained on a large corpus of natural language; this may help it interpret natural language prompts more effectively.

- PolyCoder has only seen the natural language that appears inside code, such as comments, which limits its exposure to natural language outside programming contexts.

- The model seems to have a tendency to generate a random new file once it reaches the end of the current one, possibly due to the end-of-document token not being properly included in the training data.
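A common workaround for generations that run past the intended code is to cut the output at the first stop sequence, such as a new top-level `def` or `class`. A minimal sketch; the stop list is an assumption modeled on common HumanEval practice for Python, not a PolyCoder setting, and other languages need their own stops.

```python
from typing import Sequence

# Assumed stop sequences for Python completions; not an official PolyCoder setting.
DEFAULT_STOPS = ("\ndef ", "\nclass ", "\nif __name__", "\nprint(", "\n#")


def truncate_at_stop(completion: str, stops: Sequence[str] = DEFAULT_STOPS) -> str:
    """Return the completion up to (not including) the earliest stop sequence."""
    cut = len(completion)
    for stop in stops:
        idx = completion.find(stop)
        if idx != -1:
            cut = min(cut, idx)
    return completion[:cut]


generated = "    return a + b\n\ndef unrelated():\n    pass\n"
print(repr(truncate_at_stop(generated)))
```

Here the function body is kept and the unrelated top-level definition that follows it is discarded.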
