Code LLMs Explained

SantaCoder

SantaCoder is a 1.1B-parameter program synthesis model pre-trained on Python, Java, and JavaScript. The main model uses Multi Query Attention and was trained with the Fill-in-the-Middle objective on data filtered by near-deduplication and comment-to-code ratio.

An Overview of SantaCoder

The SantaCoder model was trained on GitHub code. It is not an instruction model, so users should either phrase commands the way they occur in source code, or write a function signature and docstring and let the model complete the function body.
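As a minimal sketch of the second prompting style, the helper below assembles a function signature and docstring into a prompt that stops where the function body should begin. The helper name `build_completion_prompt` and the example function are hypothetical, chosen only for illustration.

```python
def build_completion_prompt(signature: str, docstring: str) -> str:
    """Return a prompt that ends right before the function body."""
    # The docstring is indented as the first statement of the function,
    # so a code model is likely to continue with the body.
    return f'{signature}\n    """{docstring}"""\n'


prompt = build_completion_prompt(
    "def is_palindrome(text: str) -> bool:",
    "Return True if text reads the same forwards and backwards.",
)
print(prompt)
```

The resulting string can be passed to the tokenizer in place of a natural-language instruction.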

The model has 1.1 billion parameters and was trained on Java, JavaScript, and Python.

1.1 Billion Parameters

The base training dataset for the model contains 268 GB of Python, Java, and JavaScript files. The data was prepared by honoring opt-out requests, near-deduplication, and PII redaction.

SantaCoder is trained with Multi Query Attention and Fill-in-the-Middle.

Multi Query Attention

Multi Query Attention can significantly speed up inference for larger batch sizes, while fill-in-the-middle enables code models to do infilling tasks.

The model is trained on 236 billion tokens.

236 Billion tokens

The model was trained with Multi Query Attention and Fill-in-the-Middle for a total of 600,000 iterations, processing 236 billion tokens.

About Model

The SantaCoder family consists of several models with 1.1 billion parameters each, trained on a subset of The Stack (version 1.1) containing Python, Java, and JavaScript code. The main model uses Multi Query Attention and was trained with the Fill-in-the-Middle objective on data filtered by near-deduplication and comment-to-code ratio. Additional models were trained with variations in architecture and objective, and with different filter parameters on different datasets. Despite being substantially smaller, the main model obtains comparable or stronger performance than the open-source multilingual models InCoder-6.7B and CodeGen-Multi-2.7B on code generation and infilling tasks on the MultiPL-E benchmark for the three languages.

Model Highlights

The model research reports multiple experiments to de-risk the model architecture and the data processing pipeline. All models are released under an OpenRAIL license.

- SantaCoder is a 1.1B-parameter model trained on Python, JavaScript, and Java. It obtains comparable or stronger performance than previous open-source models, InCoder-6.7B and CodeGen-Multi-2.7B, on code generation and infilling tasks on the MultiPL-E benchmark for these three languages.

- The primary SantaCoder model employs Multi Query Attention, which shares key and value embeddings across attention heads. This lowers memory requirements and speeds up inference, particularly at larger batch sizes.

- Near-deduplication and comment-to-code ratio were used as filtering criteria on the training data, removing redundant files before the model was trained.

- The Fill-in-the-Middle objective was also used in training the main model, which lets it predict a missing code fragment from the code on both sides of the gap.

- Apart from the main model, multiple SantaCoder models were trained on various datasets with different filter parameters, architecture, and objective variations, so that the effect of each choice could be compared.

- The tokenizer is built with the Hugging Face Tokenizers library using the Byte-Pair Encoding (BPE) algorithm on raw bytes, with a vocabulary size of 49,152 tokens. It was trained on 600,000 rows (around 2.6 GB) of data, 200,000 for each language, which were pre-tokenized using a digit splitter and the default GPT-2 pre-tokenizer regex before being converted to bytes.
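To make the digit-splitting step of the tokenizer concrete, here is a simplified standalone sketch. It only isolates individual digits with a regular expression; it does not reproduce the GPT-2 pre-tokenizer regex or the BPE merges, and the function name `split_digits` is hypothetical.

```python
import re


def split_digits(text: str) -> list[str]:
    """Split text so that every digit becomes its own piece.

    Runs of non-digit characters are kept together. This mimics only the
    digit-splitting idea, not the full pre-tokenization pipeline.
    """
    return re.findall(r"\d|\D+", text)


pieces = split_digits("x = 2048 + y1")
print(pieces)
```

Splitting numbers into single digits keeps the vocabulary from filling up with arbitrary multi-digit tokens.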

Training Details

Training data

The training dataset consists of Python, Java, and JavaScript files totaling 268 GB, sourced from The Stack v1.1. The main model was trained with Multi Query Attention and Fill-in-the-Middle for 600,000 iterations, processing 236 billion tokens.

Training Procedure

The model is a 1.1B-parameter decoder-only transformer with FIM and MQA, trained in float16. It has 24 layers, 16 heads, and a hidden size of 2048. Each experimental run trains for 300K iterations with a global batch size of 192 using Adam with β1 = 0.9, β2 = 0.95, ε = 10⁻⁸, and a weight decay of 0.1; the final model's 600,000 iterations are twice that length.
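The stated dimensions can be checked against the 1.1B figure with some rough arithmetic. The sketch below counts weight matrices only and relies on several assumptions that are not stated in the text: a feed-forward width of four times the hidden size, learned position embeddings for a 2048-token context, and tied input and output embeddings.

```python
# Figures stated in the text
n_layers = 24
hidden = 2048
n_heads = 16
vocab = 49_152

# Assumptions (not stated in the text)
mlp_ratio = 4        # feed-forward width = 4 * hidden
context_len = 2048   # learned position embeddings
head_dim = hidden // n_heads  # 128

# Multi Query Attention: full query and output projections,
# plus a single shared key head and a single shared value head.
attn = 2 * hidden * hidden + 2 * hidden * head_dim
mlp = 2 * hidden * (mlp_ratio * hidden)
per_layer = attn + mlp

# Token embeddings (assumed tied with the output layer) + position embeddings
embeddings = vocab * hidden + context_len * hidden

total = n_layers * per_layer + embeddings
print(f"{total / 1e9:.2f}B parameters (biases and layer norms ignored)")
```

Under these assumptions the estimate lands at roughly 1.12 billion weights, consistent with the 1.1B figure.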

Data Preprocessing

The research evaluated the impact of four preprocessing methods on the training data: filtering files from repositories with 5+ GitHub stars, filtering files with a high comments-to-code ratio, more aggressive filtering of near-duplicates, and filtering files with a low character-to-token ratio.
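As an illustration of one of these filters, the sketch below estimates a comments-to-code ratio for a Python file using the standard `tokenize` module. It counts only `#` comments (not docstrings), and the threshold `MIN_RATIO` is a hypothetical value for demonstration; the actual definition and cut-offs used for SantaCoder are not given in the text.

```python
import io
import tokenize

MIN_RATIO = 0.01  # hypothetical threshold, for illustration only


def comment_to_code_ratio(source: str) -> float:
    """Fraction of the file's characters that belong to '#' comments."""
    comment_chars = 0
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type == tokenize.COMMENT:
            comment_chars += len(tok.string)
    return comment_chars / max(len(source), 1)


sample = "# add two numbers\nx = 1 + 2\n"
ratio = comment_to_code_ratio(sample)
print(f"ratio={ratio:.3f}, keep={ratio >= MIN_RATIO}")
```

A real pipeline would need a language-specific comment parser for Java and JavaScript as well.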

Training Time and Resources

A total of 118B tokens are seen in training. The learning rate was set to 2 × 10⁻⁴ and followed a cosine decay after warming up for 2% of the training steps. Each training run takes 3.1 days to complete on 96 Tesla V100 GPUs, for a total of 1.05 × 10²¹ FLOPs.
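The schedule and token count described here can be sketched in a few lines. The linear shape of the warmup, the final learning rate of zero, and the sequence length of 2048 tokens are assumptions; the peak rate, the 2% warmup, the 300K iterations, and the batch size of 192 come from the text.

```python
import math

PEAK_LR = 2e-4
TOTAL_STEPS = 300_000
WARMUP_STEPS = TOTAL_STEPS * 2 // 100  # 2% of the training steps
GLOBAL_BATCH = 192
SEQ_LEN = 2048  # assumption: not stated in the text


def learning_rate(step: int, min_lr: float = 0.0) -> float:
    """Linear warmup (assumed) followed by cosine decay to min_lr (assumed 0)."""
    if step < WARMUP_STEPS:
        return PEAK_LR * step / WARMUP_STEPS
    progress = (step - WARMUP_STEPS) / (TOTAL_STEPS - WARMUP_STEPS)
    return min_lr + 0.5 * (PEAK_LR - min_lr) * (1 + math.cos(math.pi * progress))


tokens_seen = TOTAL_STEPS * GLOBAL_BATCH * SEQ_LEN
print(f"warmup steps: {WARMUP_STEPS}")
print(f"tokens seen:  {tokens_seen / 1e9:.0f}B")
```

With a 2048-token sequence length, 300K iterations at batch size 192 come to about 118 billion tokens, which matches the figure quoted above.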

Key Results

SantaCoder's best model outperforms InCoder-6.7B and CodeGen-Multi-2.7B in both left-to-right generation and infilling on the Java, JavaScript, and Python portions of MultiPL-E.

| Task | Dataset / Metric | Score |
|---|---|---|
| Multi Query Attention (FIM) | HumanEval | 0.34 |
| Multi Head Attention | HumanEval | 0.37 |
| Multi Query Attention | HumanEval | 0.37 |
| Multi Query Attention (FIM) | MBPP | 0.61 |
| Multi Head Attention | MBPP | 0.64 |
| Multi Query Attention | MBPP | 0.62 |
| left-to-right (pass@100) | HumanEval | 0.45 |
| fill-in-the-middle (line filling, exact match) | HumanEval | 0.55 |
| Python docstring generation | BLEU score | 18.13 |
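The table reports pass@100 but the text does not say how it is computed. A commonly used approach is the unbiased estimator from Chen et al. (2021): generate n samples per problem, count the c samples that pass the tests, and estimate the chance that at least one of k drawn samples passes. The sketch below assumes that estimator; whether the figures above were produced exactly this way is not confirmed by the text.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k from n generated samples, c of which are correct.

    Computes 1 - C(n - c, k) / C(n, k): one minus the probability that
    k samples drawn without replacement are all incorrect.
    """
    if n - c < k:
        return 1.0  # fewer than k incorrect samples: some draw must pass
    return 1.0 - comb(n - c, k) / comb(n, k)


# Hypothetical counts, for illustration only
print(pass_at_k(n=200, c=3, k=100))
```

The per-problem estimates are then averaged over the benchmark to give a single score.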

Model Features

To cater to the unique needs of developers and to provide optimal performance across various tasks and domains, the SantaCoder family includes several model types, each with distinct characteristics in terms of dataset filtering, architecture, and objectives.

Large-scale models

SantaCoder models have 1.1 billion parameters, making them capable of understanding complex code patterns and generating high-quality code completions.

Multi-language support

The models are trained on Python, Java, and JavaScript code, allowing them to cater to the needs of developers working with these popular programming languages.

Multi Query Attention

The main SantaCoder model uses Multi Query Attention, a variant of the transformer attention layer in which key and value embeddings are shared across attention heads. This results in lower memory requirements and faster inference in large-batch settings.
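The memory saving from sharing keys and values can be estimated with simple arithmetic on the key/value cache kept during generation. The layer count, head count, head size (2048 / 16 = 128), and float16 width follow the figures given elsewhere in this article; the batch size and sequence length are hypothetical.

```python
def kv_cache_bytes(batch: int, seq_len: int, n_kv_heads: int,
                   n_layers: int = 24, head_dim: int = 128,
                   bytes_per_value: int = 2) -> int:
    """Size of the key/value cache for one batch of sequences.

    The leading factor 2 accounts for one key tensor and one value
    tensor per layer; bytes_per_value=2 corresponds to float16.
    """
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch * bytes_per_value


# Hypothetical serving scenario: 32 sequences of 2048 tokens each
mha = kv_cache_bytes(batch=32, seq_len=2048, n_kv_heads=16)  # one K/V pair per head
mqa = kv_cache_bytes(batch=32, seq_len=2048, n_kv_heads=1)   # one shared K/V pair

print(f"multi-head:  {mha / 2**30:.2f} GiB")
print(f"multi-query: {mqa / 2**30:.2f} GiB")
```

With 16 heads, the multi-query cache is 16 times smaller, which is where the large-batch benefit comes from.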

Near-deduplication and comment-to-code ratio filtering

The training data is filtered using near-deduplication and comment-to-code ratio, which reduces redundancy and helps keep the dataset diverse and representative. The dataset was also decontaminated by removing files that contained test samples from HumanEval, APPS, MBPP, and MultiPL-E.
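Near-deduplication can be illustrated with exact Jaccard similarity over token shingles, as in the sketch below. This is a stand-in for illustration: large-scale pipelines typically approximate the same similarity with MinHash and locality-sensitive hashing, and the shingle size and threshold used here are hypothetical rather than SantaCoder's actual settings.

```python
def shingles(text: str, n: int = 3) -> set:
    """Set of n-token windows over whitespace-separated tokens."""
    tokens = text.split()
    return {tuple(tokens[i:i + n]) for i in range(max(len(tokens) - n + 1, 1))}


def jaccard(a: str, b: str, n: int = 3) -> float:
    """Jaccard similarity between the shingle sets of two files."""
    sa, sb = shingles(a, n), shingles(b, n)
    return len(sa & sb) / len(sa | sb)


THRESHOLD = 0.7  # hypothetical cut-off for "near-duplicate"

file_a = "def add(a, b):\n    return a + b  # sum"
file_b = "def add(a, b):\n    return a + b  # total"
similarity = jaccard(file_a, file_b)
print(f"similarity={similarity:.2f}, near_duplicate={similarity >= THRESHOLD}")
```

Files whose similarity exceeds the threshold would be grouped, and only one file from each group kept.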

Fill-in-the-Middle objective

The main SantaCoder model is trained using the Fill-in-the-Middle objective, which teaches the model to generate a missing span of code given both the code before it and the code after it.
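A minimal sketch of how a training document can be turned into a Fill-in-the-Middle example is shown below. It splits at two random character positions and moves the middle span to the end, so the model still predicts it left to right. The sentinel strings match those used in the sample code later in this article; splitting at the character level and the function name `to_fim_example` are assumptions for illustration.

```python
import random

FIM_PREFIX = "<fim-prefix>"
FIM_MIDDLE = "<fim-middle>"
FIM_SUFFIX = "<fim-suffix>"


def to_fim_example(document: str, rng: random.Random) -> str:
    """Rearrange a document into prefix / suffix / middle order."""
    # Two random cut points divide the document into three spans.
    lo, hi = sorted(rng.randint(0, len(document)) for _ in range(2))
    prefix, middle, suffix = document[:lo], document[lo:hi], document[hi:]
    # The middle goes last, so it is generated after seeing both sides.
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}{middle}"


doc = "def print_hello_world():\n    print('Hello world!')\n"
example = to_fim_example(doc, random.Random(0))
print(example)
```

At inference time the same layout is used, with the prompt ending at the middle sentinel so the model fills in the gap.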

Model Tasks

SantaCoder models are designed to assist developers in various aspects of the software development process, offering valuable support in writing efficient and high-quality code.

Code infilling

This task involves completing or adding missing code segments in a given code snippet. SantaCoder predicts the missing segment from the code that surrounds it, using the patterns and syntax it learned during training.

Code generation

This task involves creating a new code snippet based on given requirements or specifications. SantaCoder generates code by continuing a prompt, such as a function signature and docstring, with code that matches the intended functionality.

Causal masking mechanism

Causal masking is less a task than the mechanism behind the two tasks above: each token may attend only to the tokens that precede it, so the model generates code strictly left to right. Infilling remains compatible with this mechanism because the Fill-in-the-Middle layout places the missing span after the surrounding code.
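In decoder-only transformers, causal masking is usually implemented as a lower-triangular attention mask. The following sketch builds such a mask in plain Python for a short sequence; it illustrates the general idea and is not taken from the SantaCoder code.

```python
def causal_mask(n: int) -> list[list[bool]]:
    """mask[i][j] is True when position i may attend to position j."""
    return [[j <= i for j in range(n)] for i in range(n)]


mask = causal_mask(4)
for row in mask:
    # 'x' marks visible positions, '.' marks masked (future) positions
    print("".join("x" if allowed else "." for allowed in row))
```

Each row gains one more visible position than the row before it, so no token can see what comes after it.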

Clone detection

This task involves identifying code segments that are similar or identical to each other, which can indicate code duplication or plagiarism. SantaCoder can be adapted to this task, for example through fine-tuning, to flag clones and suggest changes that eliminate redundancy.

Code repair

This task involves identifying and fixing errors or bugs in the code. SantaCoder can be adapted to propose fixes, for example by regenerating a faulty span from its surrounding context.

Code translation

This task involves converting code from one programming language to another. SantaCoder can be adapted to generate equivalent code in a target language, most plausibly among the three languages it was trained on.

Fine-tuning

It is advised to fine-tune SantaCoder only on programming languages that are close to Python, Java, and JavaScript; otherwise, the model might not converge well.

Setup

This repo provides code to fine-tune the pre-trained SantaCoder model on code/text datasets such as The Stack dataset. You can use this Google Colab for fine-tuning. You can use the train.py script to train on a local machine. It allows you to launch training using the command line on multiple GPUs. Detailed steps are explained here.

Tips & Tricks

- If the model still doesn't fit in your memory, use batch_size 1 and reduce seq_length to 1024, for example.

- If you want to use streaming and avoid downloading the entire dataset, add the flag streaming.

- If you want to train your model with Fill-In-The-Middle, use a tokenizer that includes FIM tokens, like SantaCoder's, and specify the FIM rate arguments fim_rate and fim_spm_rate (by default they are 0; for SantaCoder we use 0.5 for both).
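The two FIM rate arguments can be read as a pair of per-sample probabilities. The sketch below assumes the interpretation common in FIM training code: fim_rate is the chance that a sample is transformed at all, and fim_spm_rate is the share of transformed samples that use the suffix-prefix-middle ordering instead of prefix-suffix-middle. The function `choose_fim_mode` is hypothetical and is not part of the fine-tuning script.

```python
import random
from collections import Counter


def choose_fim_mode(rng: random.Random,
                    fim_rate: float = 0.5,
                    fim_spm_rate: float = 0.5) -> str:
    """Pick how a single training sample is formatted (assumed semantics)."""
    if rng.random() >= fim_rate:
        return "left-to-right"  # sample is left untouched
    return "spm" if rng.random() < fim_spm_rate else "psm"


rng = random.Random(0)
counts = Counter(choose_fim_mode(rng) for _ in range(10_000))
print(counts)
```

With both rates at 0.5, roughly half the samples stay as ordinary left-to-right text and the rest are split about evenly between the two FIM orderings.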

Benchmark Results

Benchmarking is an important process for evaluating the performance of any code LLM, including SantaCoder. The key results are:

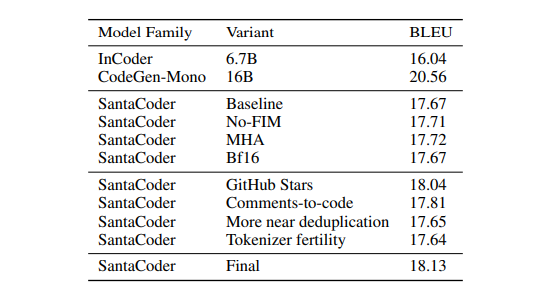

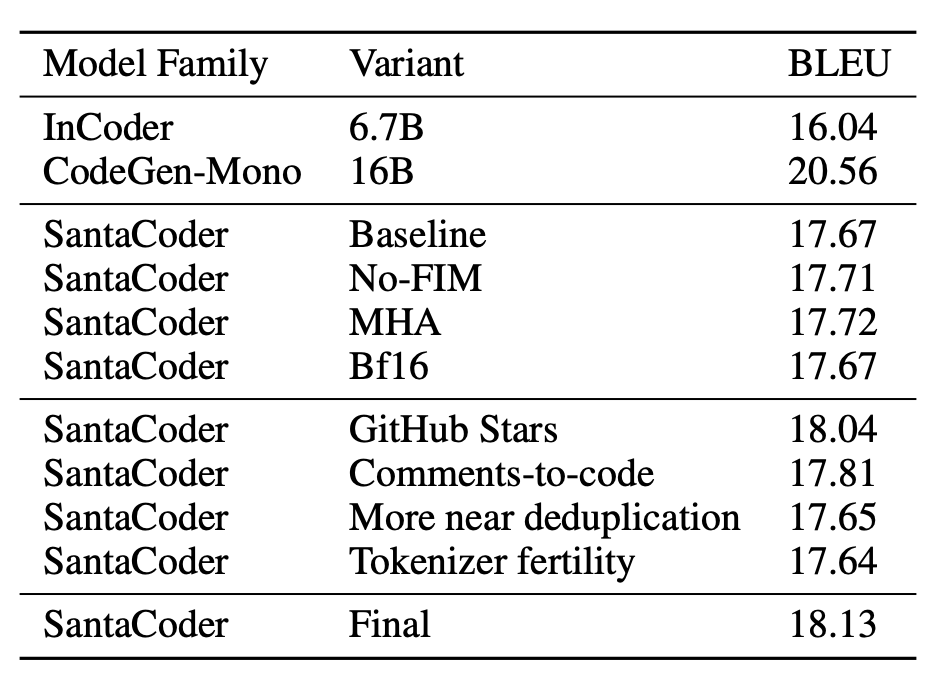

CodeXGLUE (Lu et al., 2021) Python Docstring generation smoothed 4-gram BLEU scores using the same methodology as Fried et al. (2022) (L-R single). Models are evaluated zeroshot, greedily and with a maximum generation length of 128.

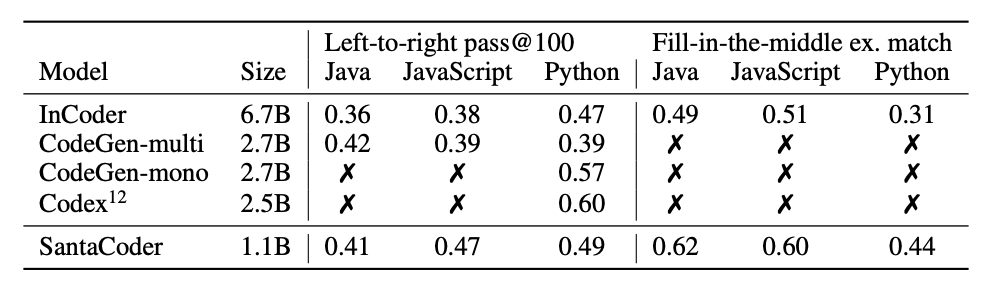

Comparing the performance of the final version of SantaCoder with InCoder (Fried et al., 2022), CodeGen (Nijkamp et al., 2022), and Codex (Chen et al., 2021) on left-to-right (HumanEval pass@100) and fill-in-the-middle benchmarks (HumanEval line filling, exact match).

Sample Codes

Generation

# pip install -q transformers

from transformers import AutoModelForCausalLM, AutoTokenizer

checkpoint = "bigcode/santacoder"

device = "cuda" # for GPU usage or "cpu" for CPU usage

tokenizer = AutoTokenizer.from_pretrained(checkpoint)

model = AutoModelForCausalLM.from_pretrained(checkpoint, trust_remote_code=True).to(device)

inputs = tokenizer.encode("def print_hello_world():", return_tensors="pt").to(device)

outputs = model.generate(inputs)

print(tokenizer.decode(outputs[0]))

Fill-in-the-middle

input_text = "<fim-prefix>def print_hello_world():\n    <fim-suffix>\n    print('Hello world!')<fim-middle>"

inputs = tokenizer.encode(input_text, return_tensors="pt").to(device)

outputs = model.generate(inputs)

print(tokenizer.decode(outputs[0]))
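The decoded output of a fill-in-the-middle call contains the whole prompt followed by the generated span, so the infill usually has to be cut out afterwards. The sketch below does this with plain string handling. The end-of-text marker `<|endoftext|>` and the sample decoded string are assumptions for illustration, not captured model output.

```python
FIM_MIDDLE = "<fim-middle>"
END_OF_TEXT = "<|endoftext|>"  # assumed end-of-sequence marker


def extract_infill(decoded: str) -> str:
    """Return the text generated after the middle sentinel."""
    if FIM_MIDDLE not in decoded:
        return ""
    middle = decoded.split(FIM_MIDDLE, 1)[1]
    # Drop the end-of-text marker and anything after it, if present.
    return middle.split(END_OF_TEXT, 1)[0]


# Hypothetical decoded string, shaped like the prompt above plus a completion
decoded = (
    "<fim-prefix>def print_hello_world():\n    <fim-suffix>\n"
    "    print('Hello world!')<fim-middle># say hello<|endoftext|>"
)
print(extract_infill(decoded))
```

The extracted span can then be spliced between the original prefix and suffix to reconstruct the completed file.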

Load other checkpoints

model = AutoModelForCausalLM.from_pretrained(
    "bigcode/santacoder",
    revision="no-fim",  # name of branch or commit hash
    trust_remote_code=True,
)

Model Limitations

- The SantaCoder model was trained on source code in Python, Java, and JavaScript, and the predominant natural language in that source is English. It can generate code snippets given some context, but the generated code is not guaranteed to be functional or bug-free.

- Generated code may also be inefficient or insecure, especially for languages outside the model's training domain.
