
PFGM++

PFGM++ is a family of physics-inspired generative models that embeds trajectories for N-dimensional data in N+D dimensional space and controls their progression with a simple scalar norm of the D additional variables. It unifies diffusion models and Poisson Flow Generative Models (PFGM), and the choice of D provides a trade-off between robustness and rigidity.


An Overview of PFGM++

- SOTA FID scores: In the class-conditional setting, PFGM++ with D=2048 achieved a state-of-the-art FID score of 1.74 on CIFAR-10.

- Superior finite D: Models with finite D outperformed previous state-of-the-art diffusion models on the CIFAR-10 and FFHQ datasets.

- Improved robustness: Models with smaller D are more robust against modeling errors, as demonstrated in the study.


About Model

PFGM++ is a family of physics-inspired generative models that embeds trajectories for N-dimensional data in N+D dimensional space and controls their progression with a simple scalar norm of the D additional variables. It unifies diffusion models and Poisson Flow Generative Models (PFGM), and the choice of D provides a trade-off between robustness and rigidity. PFGM++ uses an unbiased perturbation-based objective and allows hyperparameters to be transferred from diffusion models. Experiments on the CIFAR-10 and FFHQ datasets show that models with smaller D are more robust and that models with finite D can outperform diffusion models.

Key highlights

Highlights of the PFGM++ model are:

- PFGM++ is a family of physics-inspired generative models that unifies diffusion and Poisson Flow Generative Models (PFGM).

- PFGM++ embeds generative trajectories for N-dimensional data in N+D dimensional space, where D is the number of additional variables. A simple scalar norm of these additional variables controls the progression.

- The choice of D allows for a trade-off between robustness and rigidity.

- PFGM++ uses an unbiased perturbation-based objective, similar to diffusion models, instead of biased large batch field targets used in PFGM.

- PFGM++ provides a direct alignment method for transferring hyperparameters from diffusion models (D→∞) to any finite D values.

- PFGM++ models with smaller D exhibit improved robustness against modeling errors.

Training Details

Training data

The authors used the CIFAR-10 and FFHQ 64x64 datasets to train and evaluate the PFGM++ models.

Training dataset size

The CIFAR-10 training set consists of 50,000 32x32 color images in 10 classes, and the FFHQ dataset consists of 70,000 high-quality face images, used here at 64x64 resolution.

Training Procedure

The models were trained using stochastic gradient descent (SGD) with a learning rate of 0.0002, batch size of 64, and 100,000 training steps.

Training time and resources

The authors report that training on the CIFAR-10 dataset took about 5 days on a single Nvidia A100 GPU, and training on the FFHQ dataset took about 10 days on a single Nvidia V100 GPU.

Key Results

FID scores (lower is better) for PFGM++ on CIFAR-10 and FFHQ are listed below. The number in parentheses is D.

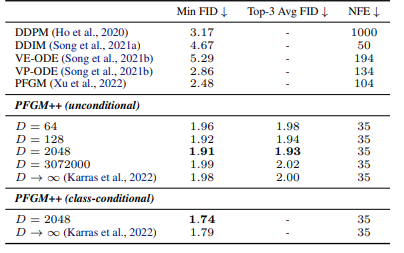

| Task | Dataset | FID |
|---|---|---|
| Image Generation, PFGM++ (64) | CIFAR-10 | 1.96 |
| Image Generation, PFGM++ (128) | CIFAR-10 | 1.92 |
| Image Generation, PFGM++ (2048) | CIFAR-10 | 1.91 |
| Image Generation, PFGM++ (3072000) | CIFAR-10 | 1.99 |
| Image Generation, PFGM++ (2048), class-conditional | CIFAR-10 | 1.74 |

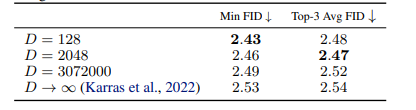

| Task | Dataset | FID |
|---|---|---|
| Image Generation, PFGM++ (128) | FFHQ | 2.43 |
| Image Generation, PFGM++ (2048) | FFHQ | 2.46 |
| Image Generation, PFGM++ (3072000) | FFHQ | 2.49 |
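For readers unfamiliar with the metric, FID (Fréchet Inception Distance) compares Gaussian fits to the Inception-network features of real and generated images, and lower values indicate closer distributions. The standard definition is sketched below. The symbols are introduced here for illustration: \mu_r, \Sigma_r are the feature mean and covariance of real images, and \mu_g, \Sigma_g those of generated images.

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)
```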

Business Applications

This table gives a quick overview of how PFGM++ can support business operations that involve image generation.

| Task | Business Use Case | Example |
|---|---|---|
| Image Generation | Advertising | Creation of visual content for social media ads |
| Image Generation | Fashion | Generating clothing designs for online catalogs |
| Image Generation | Real Estate | Creation of virtual tours of properties for marketing |
| Image Generation | Gaming | Creating game assets such as characters and landscapes |
| Image Generation | Film and TV | Generating special effects and CGI elements |

Model Features

Unification of diffusion models and Poisson flow generative models (PFGM)

PFGM++ unifies these two families of generative models, allowing for more flexibility and control over the generative trajectories.

Embedding of trajectories in N+D dimensional space

PFGM++ embeds the generative trajectories for N-dimensional data in N+D dimensional space, where D is the number of additional variables.
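A minimal sketch of this embedding, assuming plain Python lists for the vectors. The function name `embed` and the variable names are hypothetical. Since the progression is controlled by the scalar norm of the additional variables, a point (x, z) in the N+D dimensional space can be summarized as (x, r) with r = ||z||.

```python
import math

def embed(x, z):
    """Summarize an (N+D)-dimensional point (x, z) as (x, r), where r = ||z||_2."""
    r = math.sqrt(sum(v * v for v in z))
    return list(x) + [r]

# N = 2 data dimensions, D = 2 additional variables
print(embed([1.0, 2.0], [3.0, 4.0]))  # [1.0, 2.0, 5.0]
```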

Unbiased perturbation-based objective

PFGM++ uses an unbiased perturbation-based objective, which is similar to the one used in diffusion models.
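As a sketch of what such an objective can look like, the perturbation kernel and regression loss are written out below. The notation is assumed here and is not quoted from the text above: y is a clean data point, x its perturbed version, r the norm of the additional variables, p(r) a training distribution over r, and f_\theta the network.

```latex
p_r(\mathbf{x} \mid \mathbf{y}) \;\propto\;
  \frac{1}{\left( \lVert \mathbf{x} - \mathbf{y} \rVert_2^2 + r^2 \right)^{(N+D)/2}}

\mathcal{L}(\theta) =
  \mathbb{E}_{r \sim p(r)}\,
  \mathbb{E}_{\mathbf{y} \sim p(\mathbf{y})}\,
  \mathbb{E}_{\mathbf{x} \sim p_r(\mathbf{x} \mid \mathbf{y})}
  \left[ \left\lVert f_\theta(\mathbf{x}, r)
    - \frac{\mathbf{x} - \mathbf{y}}{r / \sqrt{D}} \right\rVert_2^2 \right]
```

Each training target depends on a single pair (y, x), so no large batch is needed to estimate it.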

Direct alignment method

PFGM++ provides a direct alignment method for transferring well-tuned hyperparameters from diffusion models to any finite D values.
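The alignment can be sketched in a few lines, assuming the relation r = σ√D between a diffusion noise level σ and the norm r at a given D, and a perturbation kernel proportional to (||x − y||² + r²)^(−(N+D)/2). The function names below are hypothetical, and the code uses only the Python standard library rather than any PFGM++ package.

```python
import math
import random

def aligned_radius(sigma, D):
    """Assumed alignment r = sigma * sqrt(D) between a diffusion noise level and finite D."""
    return sigma * math.sqrt(D)

def sample_perturbation(y, sigma, D, rng):
    """Draw x ~ p_r(x | y), with p_r proportional to (||x - y||^2 + r^2)^(-(N+D)/2)."""
    N = len(y)
    r = aligned_radius(sigma, D)
    # ||x - y||^2 / r^2 follows a beta-prime(N/2, D/2) distribution,
    # which equals b / (1 - b) for b ~ Beta(N/2, D/2).
    b = rng.betavariate(N / 2, D / 2)
    radius = r * math.sqrt(b / (1.0 - b))
    # Uniform direction on the unit sphere from a normalized Gaussian vector.
    g = [rng.gauss(0.0, 1.0) for _ in range(N)]
    g_norm = math.sqrt(sum(v * v for v in g))
    return [yi + radius * gi / g_norm for yi, gi in zip(y, g)]

rng = random.Random(0)
y = [0.0] * 16
for D in (4, 128, 2048):
    sq = [sum(v * v for v in sample_perturbation(y, 1.0, D, rng)) for _ in range(2000)]
    # Per-dimension mean squared perturbation at sigma = 1
    print(D, sum(sq) / len(sq) / len(y))
```

For large D the per-dimension value approaches σ², matching a Gaussian perturbation of standard deviation σ. Small D gives a heavier-tailed kernel, so its sample average fluctuates more.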

Superior performance on image datasets

PFGM++ has been shown to achieve superior performance on image datasets such as CIFAR-10 and FFHQ 64x64, with FID scores of 1.91 and 2.43 at D=2048 and D=128, respectively.

Model Tasks

Image Generation

PFGM++ is a generative model that performs image-generation tasks. Given a dataset of images, PFGM++ learns the underlying distribution of the images and generates new images similar to those in the dataset. The generated images can be used for applications such as realistic imagery for computer graphics, new designs for fashion or product manufacturing, and visual content for social media and advertising.

Fine-tuning

Post-training quantization, proposed by Sung et al. (2015), compresses a neural network by reducing the precision of its weights and activations to a lower bit width, without retraining or fine-tuning. The reduced precision introduces quantization noise and approximation errors into the model's predictions, which can lower its accuracy.

Benchmark Results

Benchmarking is an important process for evaluating the performance of any generative model, including PFGM++. The key results are:

Table 1. CIFAR-10 sample quality (FID) and number of function evaluations (NFE).

Table 2. FFHQ sample quality (FID) with 79 NFE in an unconditional setting.
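NFE counts how many times the network is evaluated while generating one batch of samples. As an illustration only, assuming a second-order Heun sampler of the kind used in EDM (the sampler is not named in the text above, and `heun_nfe` is a hypothetical helper), a run with n steps costs 2n − 1 evaluations because the final step skips the correction.

```python
def heun_nfe(num_steps):
    """NFE of a second-order Heun sampler: two network calls per step, one on the last step."""
    return 2 * num_steps - 1

print(heun_nfe(40))  # 79
```

Under that assumption, the 79 NFE quoted for FFHQ corresponds to 40 sampling steps.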

Sample Codes

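Example code to run PFGM++ on a GPU using PyTorch. The `pfgmplusplus` module and the `PFGMPlusPlus` interface are assumed here, so adapt the import and method names to your installation:

    import torch
    from pfgmplusplus import PFGMPlusPlus  # assumed interface, not verified

    # Hyperparameters (illustrative values)
    num_dims = 3
    num_epochs = 10
    batch_size = 64
    print_interval = 1

    # Load the training data
    train_data = torch.load("train_data.pt")

    # Use the GPU when available, otherwise fall back to the CPU
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    # Initialize the PFGM++ model
    model = PFGMPlusPlus(num_dims=num_dims, num_add_dims=64, num_steps=20).to(device)

    # Set the optimizer and learning rate
    optimizer = torch.optim.Adam(model.parameters(), lr=0.001)

    # Train the model
    for epoch in range(num_epochs):
        # Iterate over the data in mini-batches
        for start in range(0, len(train_data), batch_size):
            batch = train_data[start:start + batch_size].to(device)

            # Update the model (the forward pass is assumed to return the loss)
            optimizer.zero_grad()
            loss = model(batch)
            loss.backward()
            optimizer.step()

        # Print the loss of the last batch in the epoch
        if epoch % print_interval == 0:
            print("Epoch {}: Loss={}".format(epoch, loss.item()))

    # Generate a batch of new images from noise
    with torch.no_grad():
        noise = torch.randn(batch_size, num_dims, device=device)
        generated_images = model.generate(noise)

    # Save the generated images
    torch.save(generated_images, "generated_images.pt")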

Model Limitations

- The paper suggests that further research is needed to explore the limitations of PFGM++ models, particularly concerning scaling to larger datasets and applications in different domains.

- The authors also mention that the performance of the models could be improved with more advanced optimization methods and architectural modifications.
