Genz 70B V3

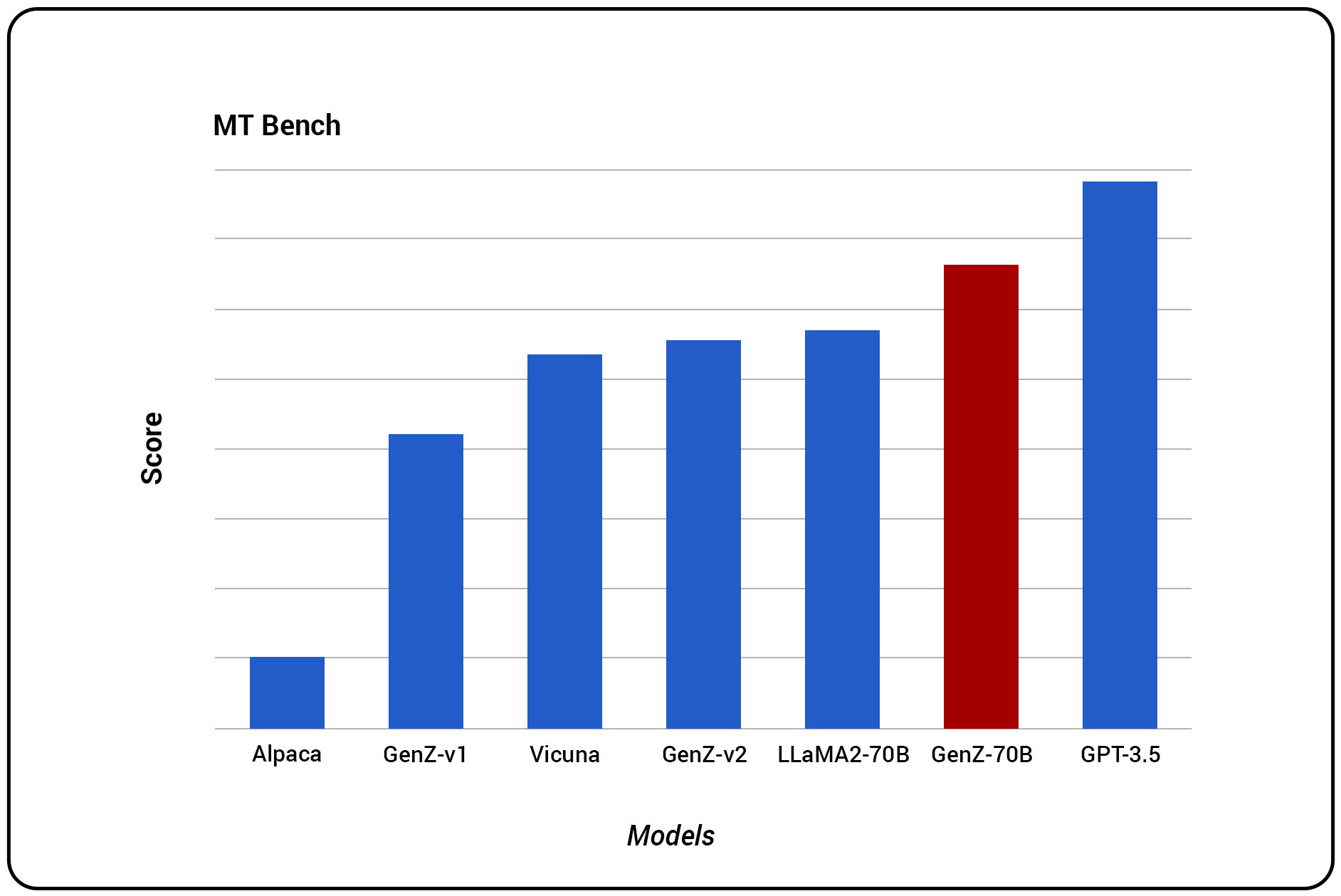

GenZ 70B V3 is an instruction-fine-tuned model offering a commercial license. It is primarily fine-tuned for enhanced reasoning, roleplaying, and writing. On the MMLU benchmark, the model scored 70.32, outperforming Llama 2 70B (69.83), and its MT-Bench score of 7.34 is the state-of-the-art (SOTA) result for open-source LLMs with commercial licenses.



About Model

Accubits Technologies, in collaboration with Bud Ecosystem, has open-sourced its fifth large language model (LLM), GenZ 70B: an instruction-fine-tuned model offering a commercial license. The model is primarily fine-tuned for enhanced reasoning, roleplaying, and writing. In our initial evaluations, it scored 70.32 on the MMLU benchmark, outperforming Llama 2 70B (69.83), and its MT-Bench score of 7.34 is the SOTA result for open-source LLMs with commercial licenses.

Model Highlights

GenZ 70B V3 is a large language model fine-tuned from Meta's open-source Llama 2 70B model. It is an auto-regressive language model with an optimized transformer architecture. We fine-tuned it on curated datasets using supervised fine-tuning (SFT): OpenAssistant's instruction fine-tuning dataset, and ThoughtSource for the chain-of-thought (CoT) approach. This fine-tuning gives the model skills and capabilities beyond those of the pretrained base model.
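Supervised fine-tuning on instruction data is commonly set up by concatenating a formatted prompt with the target response and masking the prompt tokens out of the loss, so the model is trained only to produce the response. The sketch below illustrates that preparation step under loudly stated assumptions: it reuses the `### User:` / `### Assistant:` template from the How To Use example, it substitutes a toy character-level tokenizer for the real Llama 2 tokenizer, and `build_sft_example` and `toy_tokenize` are hypothetical helpers. GenZ's actual training pipeline is not described on this page and may differ.

```python
from typing import Callable, List, Tuple

# PyTorch's CrossEntropyLoss skips targets equal to -100 by default (ignore_index=-100).
IGNORE_INDEX = -100


def build_sft_example(
    instruction: str,
    response: str,
    tokenize: Callable[[str], List[int]],
) -> Tuple[List[int], List[int]]:
    """Hypothetical helper: return (input_ids, labels) for one SFT training pair.

    Assumes the same prompt template as the inference example; the real
    GenZ training format may differ.
    """
    prompt = f"### User:\n{instruction}\n\n### Assistant:\n"
    prompt_ids = tokenize(prompt)
    response_ids = tokenize(response)
    # The model sees prompt + response as a single sequence...
    input_ids = prompt_ids + response_ids
    # ...but only the response positions carry real targets for the loss.
    labels = [IGNORE_INDEX] * len(prompt_ids) + response_ids
    return input_ids, labels


def toy_tokenize(text: str) -> List[int]:
    # Stand-in tokenizer (one id per character) so the sketch runs without downloads.
    return [ord(ch) for ch in text]


if __name__ == "__main__":
    ids, labels = build_sft_example("Say hi.", "Hi!", toy_tokenize)
    print(len(ids), labels[-3:])
```

With a real subword tokenizer, encoding the prompt and response separately can split tokens differently than encoding the joined string, so a production pipeline would typically tokenize once and compute the prompt boundary from offsets.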

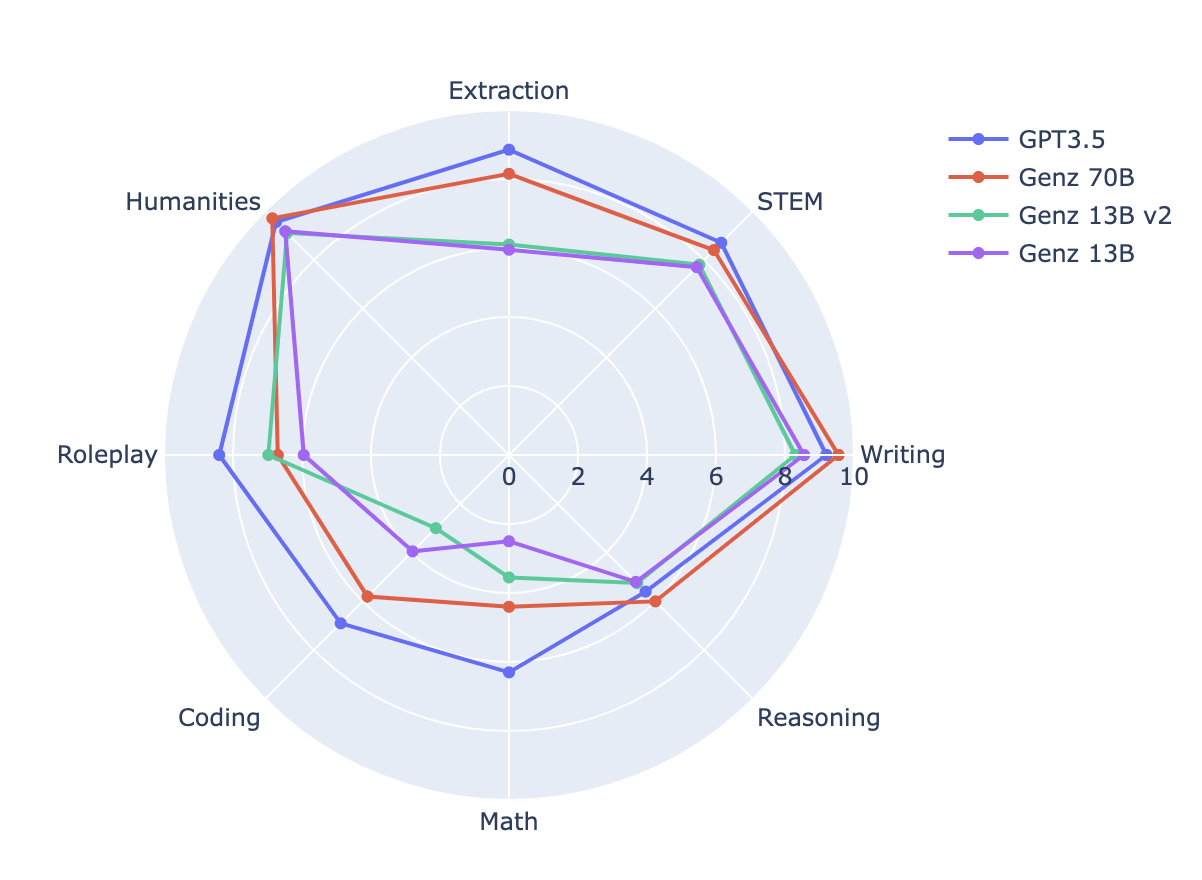

Benchmark

Preliminary evaluations of the model show strong results. Notably, on the MMLU benchmark the model scored 70.32, surpassing Llama 2 70B's score of 69.83.

| Model Name | MT-Bench | MMLU | HumanEval | HellaSwag | BBH |
|---|---|---|---|---|---|
| Genz 13B | 6.12 | 53.62 | 17.68 | 77.38 | 37.76 |
| Genz 13B v2 | 6.79 | 53.68 | 21.95 | 77.48 | 38.1 |
| Genz 70B | 7.34 | 70.32 | 37.8 | -- | 54.69 |

GenZ 70B scored 7.34 on MT-Bench, a new state-of-the-art result for open-source large language models (LLMs) with commercial licenses.
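The margins quoted in this section are plain differences between reported scores. As a quick arithmetic check, the sketch below recomputes them from the table values plus the Llama 2 70B MMLU score of 69.83 quoted in the text; `None` marks the HellaSwag entry that is not reported for GenZ 70B, and the `deltas` helper is illustrative only. The results are raw point differences on each benchmark's own scale.

```python
from typing import Dict, Optional

# Scores copied from the benchmark table above; None means "not reported".
SCORES: Dict[str, Dict[str, Optional[float]]] = {
    "Genz 13B": {"MT-Bench": 6.12, "MMLU": 53.62, "HumanEval": 17.68, "HellaSwag": 77.38, "BBH": 37.76},
    "Genz 13B v2": {"MT-Bench": 6.79, "MMLU": 53.68, "HumanEval": 21.95, "HellaSwag": 77.48, "BBH": 38.1},
    "Genz 70B": {"MT-Bench": 7.34, "MMLU": 70.32, "HumanEval": 37.8, "HellaSwag": None, "BBH": 54.69},
}
LLAMA2_70B_MMLU = 69.83  # quoted in the text, not part of the table


def deltas(new: str, old: str) -> Dict[str, Optional[float]]:
    """Per-benchmark difference (new - old), rounded to 2 decimals; None if either score is missing."""
    out: Dict[str, Optional[float]] = {}
    for bench, new_score in SCORES[new].items():
        old_score = SCORES[old][bench]
        if new_score is None or old_score is None:
            out[bench] = None
        else:
            # Rounding hides binary floating-point noise such as 0.6699999999999999.
            out[bench] = round(new_score - old_score, 2)
    return out


if __name__ == "__main__":
    print("13B v2 vs 13B:", deltas("Genz 13B v2", "Genz 13B"))
    margin = round(SCORES["Genz 70B"]["MMLU"] - LLAMA2_70B_MMLU, 2)
    print("70B MMLU margin over Llama 2 70B:", margin)
```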

How To Use

Start by importing `torch` and the required classes from the Hugging Face `transformers` library, then load the tokenizer and model, format a prompt, and generate a response.

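```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

# Load the tokenizer and the bfloat16 model weights from the Hugging Face Hub.
tokenizer = AutoTokenizer.from_pretrained("budecosystem/genz-70b", trust_remote_code=True)
model = AutoModelForCausalLM.from_pretrained(
    "budecosystem/genz-70b",
    torch_dtype=torch.bfloat16,
    rope_scaling={"type": "dynamic", "factor": 2},  # dynamic RoPE scaling, factor 2
    device_map="auto",  # spread the 70B weights across available devices (requires `accelerate`)
)

# Single-turn prompt in the "### User: / ### Assistant:" format.
prompt = "### User:\nWrite a python flask code for login management\n\n### Assistant:\n"
inputs = tokenizer(prompt, return_tensors="pt").to(model.device)

sample = model.generate(**inputs, max_new_tokens=128)  # cap on generated tokens, excluding the prompt
print(tokenizer.decode(sample[0], skip_special_tokens=True))
```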
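Because `tokenizer.decode` on the `generate` output returns the prompt followed by the generated text, it can help to wrap the template in two small helpers. This is a convenience sketch, not part of the GenZ release: `build_prompt` reproduces the single-turn `### User:` / `### Assistant:` template from the example above, and the hypothetical `extract_reply` keeps only the text after the last assistant marker. Multi-turn formatting is not documented on this page, so the sketch handles one turn only.

```python
ASSISTANT_TAG = "### Assistant:\n"


def build_prompt(user_message: str) -> str:
    """Single-turn prompt in the same format as the example above."""
    return f"### User:\n{user_message}\n\n{ASSISTANT_TAG}"


def extract_reply(decoded: str) -> str:
    """Hypothetical helper: return the text after the last assistant marker.

    str.rpartition yields ("", "", decoded) when the marker is absent,
    so text without a marker is returned whole (stripped).
    """
    return decoded.rpartition(ASSISTANT_TAG)[2].strip()


if __name__ == "__main__":
    prompt = build_prompt("Write a python flask code for login management")
    # Stand-in for tokenizer.decode(sample[0], skip_special_tokens=True).
    fake_output = prompt + "Here is a minimal Flask login example..."
    print(extract_reply(fake_output))
```

Decoding with `skip_special_tokens=True` keeps end-of-sequence tokens out of the extracted reply.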

Why Use GenZ?

While pretrained models are undeniably powerful, GenZ brings something extra to the table. We've fine-tuned it with curated datasets, which means it has additional skills and capabilities beyond what a pretrained model can offer. Whether you need it for a simple task or a complex project, GenZ is up for the challenge.

What's more, we are committed to continuously enhancing GenZ. We believe in the power of constant learning and improvement, which is why we will regularly fine-tune our models with various curated datasets to make them even better. Our goal is to reach the state of the art and beyond, and we're committed to staying the course until we get there.

But don't just take our word for it. We've provided detailed evaluation results in the Benchmark section above, so you can see the difference for yourself.

Choose GenZ and join us on this journey. Together, we can push the boundaries of what's possible with large language models.

Other LLMs

Genz-13B V1

GenZ-13B V1 is an instruction-fine-tuned model that handles complex instructions and supports a 4K input length.


Genz-13B V2

Genz-13B V2 is a newer instruction-fine-tuned model, built on the pretrained Llama 2 model, that can handle complex instructions.


Genz-70B V3

Genz-70B V3 is an instruction-fine-tuned model with a commercial license, optimized for advanced reasoning, immersive roleplaying, and writing tasks.
