T2I Models Explained

Stable Diffusion

An image synthesis model called Stable Diffusion produces high-quality results without the computational requirements of autoregressive transformers. It achieves state-of-the-art results in class-conditional image synthesis and super-resolution. Because it is built on diffusion models, which decompose image formation into a sequence of denoising autoencoder steps, it can model complex distributions of natural images without the billions of parameters that autoregressive models require.


An Overview of Stable Diffusion

Stable Diffusion is an image synthesis model that produces high-quality results in class-conditional image synthesis and super-resolution.

Stability AI has made Stable Diffusion, an image synthesis model, open source.

1.1 Billion Parameters

Stable Diffusion is a state-of-the-art image synthesis model built on a 1.1-billion-parameter latent diffusion model.

Stable Diffusion has been pretrained and fine-tuned on the LAION dataset.

5B Image Dataset

Stable Diffusion is pretrained and fine-tuned on the LAION-5B dataset of 5 billion images to generate high-quality images.

Fine-tuned variants adapt the existing model to new tasks.

Image Variations

Stable unCLIP 2.1, fine-tuned from Stable Diffusion 2.1, generates variations of an input image and supports image mixing, conditioned on CLIP image embeddings.


- Introduction
- Key Highlights
- Training Details
- Key Results
- Business Applications
- Model Features
- Model Tasks
- Fine-tuning
- Benchmarking
- Sample Codes
- Limitations
- Other Models

About Model

DAAM (Diffusion Attentive Attribution Maps) is a text-image attribution method that produces pixel-level attribution maps for Stable Diffusion, a text-to-image neural network, by upscaling and aggregating the model's cross-attention scores. The authors evaluated DAAM's semantic segmentation ability on nouns and its generalized attribution quality across all parts of speech. They then used it to study the role of syntax in the pixel space, characterizing head-dependent heat map interaction patterns, and to examine semantic phenomena such as feature entanglement, finding that cohyponyms (words that share a common category, such as two kinds of animal) degrade generation quality. This analysis offers a fresh perspective on large diffusion models from a visuolinguistic standpoint.
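The aggregation idea behind DAAM can be sketched in a few lines: collect a word's cross-attention maps across heads, layers, and timesteps, upsample them to one resolution, and sum them into a single heat map. This is a minimal NumPy sketch, assuming the attention maps are already available as square arrays; the function names, the nearest-neighbor upsampling, and the max-normalization are illustrative assumptions (the paper's own interpolation and normalization may differ), not the `daam` library's API.

```python
import numpy as np

def upsample_nearest(attn: np.ndarray, size: int) -> np.ndarray:
    """Nearest-neighbor upsampling of a square (h, h) map to (size, size).

    Assumes size is an integer multiple of h.
    """
    factor = size // attn.shape[0]
    return np.kron(attn, np.ones((factor, factor)))

def aggregate_word_heat_map(attn_maps, size: int = 64) -> np.ndarray:
    """Sum attention maps for one prompt word into a single heat map.

    attn_maps: iterable of square 2-D arrays (possibly at different
    resolutions), each holding one head/layer/timestep's attention
    weights for the word. Returns a (size, size) map scaled to [0, 1].
    """
    total = np.zeros((size, size))
    for attn in attn_maps:
        total += upsample_nearest(np.asarray(attn, dtype=float), size)
    peak = total.max()
    return total / peak if peak > 0 else total

# Toy usage: two maps for the same word at different resolutions.
maps = [np.eye(8), np.eye(16)]
print(aggregate_word_heat_map(maps).shape)  # (64, 64)
```

Summing before normalizing lets regions that attract attention consistently across layers and timesteps dominate the final map.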

Key highlights

The release of this model provides researchers and developers with access to a powerful tool for generating high-quality images while also reducing computational requirements. Here are a few key highlights of Stable Diffusion:

- Stable Diffusion is built on diffusion models (DMs), which decompose image formation into a sequential application of denoising autoencoders.

- Their formulation allows the image generation process to be guided without retraining, and they achieve state-of-the-art synthesis results.

- Conventional DMs operate directly in pixel space, which makes them expensive to train and run and demands substantial computational power.

- By training diffusion models in the latent space of powerful pretrained autoencoders, Stable Diffusion reaches a near-optimal balance between complexity reduction and detail preservation, greatly boosting visual fidelity.

- Cross-attention layers in the model architecture make it a powerful and flexible generator for general conditioning inputs such as text or bounding boxes.
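Cross-attention conditioning can be illustrated with a single-head NumPy sketch: queries come from the image (latent) features, while keys and values come from the conditioning tokens, such as text embeddings, so every spatial position gathers information from the prompt. The shapes and random projection matrices are illustrative assumptions; the real model uses learned multi-head projections inside its denoising network.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(latent_feats, cond_tokens, w_q, w_k, w_v):
    """Single-head cross-attention.

    latent_feats: (n_pixels, d_model) image/latent features -> queries
    cond_tokens:  (n_tokens, d_cond)  conditioning (e.g. text) -> keys/values
    Returns (output, attn) with shapes (n_pixels, d_head), (n_pixels, n_tokens).
    """
    q = latent_feats @ w_q
    k = cond_tokens @ w_k
    v = cond_tokens @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])  # scaled dot-product scores
    attn = softmax(scores, axis=-1)          # each pixel's weights over tokens sum to 1
    return attn @ v, attn

rng = np.random.default_rng(0)
n_pixels, n_tokens, d_model, d_cond, d_head = 16, 5, 8, 12, 4
latents = rng.standard_normal((n_pixels, d_model))
text = rng.standard_normal((n_tokens, d_cond))
w_q = rng.standard_normal((d_model, d_head))
w_k = rng.standard_normal((d_cond, d_head))
w_v = rng.standard_normal((d_cond, d_head))
out, attn = cross_attention(latents, text, w_q, w_k, w_v)
print(out.shape, attn.shape)  # (16, 4) (16, 5)
```

Each column of `attn` is a spatial map for one conditioning token, which is the kind of signal attribution methods such as DAAM aggregate.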

Training Details

Training data

To construct their word-image dataset, the DAAM authors randomly sampled 200 words from each of the 14 most common part-of-speech tags in COCO, as extracted with spaCy. This resulted in 2,800 word-prompt pairs.
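The sampling step above is stratified sampling by part-of-speech tag. This sketch assumes the (word, tag) pairs have already been extracted, for example with spaCy; the input format, the function name `sample_per_tag`, and the toy data are hypothetical.

```python
import random
from collections import defaultdict

def sample_per_tag(tagged_words, tags, k, seed=0):
    """Randomly sample k distinct words for each tag in `tags`.

    tagged_words: iterable of (word, pos_tag) pairs, e.g. from a POS tagger.
    Returns a dict mapping each tag to a list of k sampled words.
    """
    by_tag = defaultdict(set)
    for word, tag in tagged_words:
        by_tag[tag].add(word)
    rng = random.Random(seed)  # fixed seed for reproducibility
    # Sort before sampling so the result does not depend on set ordering.
    return {tag: rng.sample(sorted(by_tag[tag]), k) for tag in tags}

# Toy example: 2 tags x 2 words per tag = 4 sampled words.
pairs = [("dog", "NOUN"), ("cat", "NOUN"), ("field", "NOUN"),
         ("runs", "VERB"), ("jumps", "VERB"), ("sits", "VERB")]
samples = sample_per_tag(pairs, tags=["NOUN", "VERB"], k=2)
print(sum(len(v) for v in samples.values()))  # 4
```

With the 14 most common tags and `k=200`, the same procedure yields 14 × 200 = 2,800 words.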

Training dataset size

Stability AI open-sourced Stable Diffusion, a 1.1-billion-parameter latent diffusion model pretrained and fine-tuned on the LAION-5B dataset of 5 billion images.

Training Procedure

Images are generated with the Stable Diffusion 2.0 base model (512 × 512 pixels) using 30 inference steps, the default classifier-free guidance scale of 7.5, and the DPM-Solver sampler.
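Classifier-free guidance combines two noise predictions at every sampling step: one conditioned on the prompt and one unconditional. The guidance scale extrapolates from the unconditional prediction toward the conditional one; a scale of 1 recovers the purely conditional prediction, and larger values such as the 7.5 default push samples to follow the prompt more closely. This NumPy sketch shows only the combination rule; the arrays stand in for the denoiser's outputs and are illustrative.

```python
import numpy as np

def classifier_free_guidance(eps_uncond, eps_cond, scale=7.5):
    """Combine unconditional and text-conditional noise predictions."""
    return eps_uncond + scale * (eps_cond - eps_uncond)

eps_uncond = np.array([0.0, 1.0])
eps_cond = np.array([1.0, 1.0])
print(classifier_free_guidance(eps_uncond, eps_cond))  # [7.5 1. ]
```

Where the two predictions agree (the second element), guidance leaves the value unchanged; where they differ, the difference is amplified by the scale.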

Training time and resources

The authors do not provide detailed information on training time or compute resources.

Key Results

The table below lists reported scores on the synthesized COCO-Gen and Unreal-Gen datasets.

| Task | Dataset | Score |
|---|---|---|
| Image Generation | COCO-Gen | 64.7 |
| Image Generation | COCO-Gen | 59.1 |
| Image Generation | COCO-Gen | 60.7 |
| Image Generation | COCO-Gen | 59 |
| Image Generation | COCO-Gen | 55.4 |
| Image Generation | Unreal-Gen | 58.9 |
| Image Generation | Unreal-Gen | 60.8 |
| Image Generation | Unreal-Gen | 58.3 |
| Image Generation | Unreal-Gen | 57.9 |
| Image Generation | Unreal-Gen | 52.5 |

Business Applications

This table provides a quick overview of how Stable Diffusion can streamline various business operations relating to image generation.

| Tasks | Business Use Cases | Examples |
|---|---|---|
| Denoising | Improving the quality of noisy visual data | Cleaning up scanned documents, enhancing low-quality images and videos |
| Image Generation | Creating synthetic data for computer vision models | Generating new product images for e-commerce, creating realistic images of non-existent products or environments for marketing |
| Instance Segmentation | Object detection and segmentation in visual data | Identifying specific objects in satellite or drone imagery, detecting objects in medical scans, identifying individuals in CCTV footage |
| Semantic Segmentation | Identifying objects in images based on their semantic meaning | Self-driving cars identifying objects on the road, identifying parts of a medical image for diagnosis, classifying land use in satellite imagery |
| Text-to-Image Generation | Creating visual representations of text-based data | Generating images for social media posts or articles, creating visual aids for presentations or reports |
| Unsupervised Semantic Segmentation | Identifying regions of similar semantic meaning in unlabeled images | Grouping similar regions in satellite or medical imagery without predefined classes |

Model Features

Stability AI has released an open-source version of Stable Diffusion, a state-of-the-art image synthesis model built on a 1.1-billion-parameter latent diffusion model.

Autoencoder

To keep generation efficient, the model uses a pretrained autoencoder that compresses images into a lower-dimensional latent space. The diffusion (denoising) process runs in this latent space, and the decoder maps the result back to pixels.

Multi-Scale Structure

The denoising network has a multi-scale (U-Net) structure that processes features at several resolutions, from coarse to fine, enabling it to capture both the global and local features of the image.

Text Conditioning

The text-to-image architecture is a latent diffusion model that conditions image generation on a text encoder (a language model) through cross-attention. It is not a generative adversarial network (GAN); an adversarial objective is used only when training the autoencoder, not to generate images.

Model Tasks

Denoising

Denoising is the core operation of Stable Diffusion. The model is trained to predict the noise in a noisy input; at generation time it starts from random noise in the latent space and removes it step by step. Applied to an existing image that has been partially noised, the same process can be used to refine the image while preserving its overall structure.
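Learned denoising can be made concrete with the standard DDPM noise-prediction parameterization: a clean sample x0 is noised as x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * eps, and a network that predicts eps lets the clean sample be estimated back. This sketch assumes that parameterization (SD 2.0-base is a noise-prediction model; the SD 2.x "v" models use a different training target) and substitutes the true noise for a trained network, so recovery is exact.

```python
import numpy as np

def add_noise(x0, eps, alpha_bar):
    """Forward diffusion: mix the clean sample with Gaussian noise."""
    return np.sqrt(alpha_bar) * x0 + np.sqrt(1.0 - alpha_bar) * eps

def estimate_x0(x_t, eps_pred, alpha_bar):
    """Invert the forward step using a (predicted) noise estimate."""
    return (x_t - np.sqrt(1.0 - alpha_bar) * eps_pred) / np.sqrt(alpha_bar)

rng = np.random.default_rng(0)
x0 = rng.standard_normal((4, 4))   # stand-in for a small latent patch
eps = rng.standard_normal((4, 4))
alpha_bar = 0.3                    # cumulative signal level at some timestep t
x_t = add_noise(x0, eps, alpha_bar)
# With a perfect noise prediction, the clean sample is recovered exactly.
print(np.allclose(estimate_x0(x_t, eps, alpha_bar), x0))  # True
```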

Image Generation

Stable Diffusion generates new images by iteratively denoising random noise. Starting instead from a partially noised seed image produces a wide variety of variations of that image. This technique has been used for artistic purposes and for generating synthetic training data for machine learning algorithms.
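Iterative generation can be sketched with a deterministic DDIM-style sampler (eta = 0): at each step, estimate the clean sample from the current noise prediction, then re-noise it to the next, lower noise level. The `oracle_eps` predictor is a hypothetical stand-in that already knows the target; in Stable Diffusion a trained, text-conditioned U-Net fills that role. The schedule values are illustrative.

```python
import numpy as np

def ddim_step(x_t, eps_pred, alpha_bar_t, alpha_bar_next):
    """One deterministic DDIM update (eta = 0) to the next noise level."""
    x0_hat = (x_t - np.sqrt(1.0 - alpha_bar_t) * eps_pred) / np.sqrt(alpha_bar_t)
    return np.sqrt(alpha_bar_next) * x0_hat + np.sqrt(1.0 - alpha_bar_next) * eps_pred

def oracle_eps(x_t, alpha_bar_t, target):
    """Hypothetical perfect noise predictor that already knows the target."""
    return (x_t - np.sqrt(alpha_bar_t) * target) / np.sqrt(1.0 - alpha_bar_t)

rng = np.random.default_rng(0)
target = rng.standard_normal((4, 4))    # the "image" the predictor steers toward
x = rng.standard_normal((4, 4))         # start from (near-)pure random noise
schedule = [0.01, 0.1, 0.5, 0.9, 1.0]   # increasing signal level; 1.0 = clean
for a_t, a_next in zip(schedule[:-1], schedule[1:]):
    x = ddim_step(x, oracle_eps(x, a_t, target), a_t, a_next)
print(np.allclose(x, target))  # True
```

With a real, imperfect predictor each step only moves the sample partway toward a plausible image, which is why practical samplers use tens of steps.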

Instance Segmentation

Instance segmentation identifies and delineates individual objects within an image. Stable Diffusion does not perform this task directly, but it can support it, for example by generating synthetic training images for object detection and segmentation models.

Semantic Segmentation

Semantic segmentation involves assigning a label to each pixel in an image based on its semantic meaning (e.g., "car", "person", "tree"). Attribution maps derived from Stable Diffusion's cross-attention, such as DAAM heat maps, can be thresholded into per-word segmentation masks for generated images, and their quality can be measured with mIoU.
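One way to turn an attribution heat map into a segmentation mask and score it is to threshold the normalized map, then compute intersection over union (IoU) with a reference mask; averaging IoU over classes or prompts gives mIoU. The threshold of 0.4 and the toy arrays are illustrative assumptions.

```python
import numpy as np

def heat_map_to_mask(heat_map, threshold=0.4):
    """Binarize a heat map after scaling it to [0, 1]."""
    hm = np.asarray(heat_map, dtype=float)
    hm = hm / hm.max() if hm.max() > 0 else hm
    return hm >= threshold

def iou(pred_mask, true_mask):
    """Intersection over union of two boolean masks."""
    inter = np.logical_and(pred_mask, true_mask).sum()
    union = np.logical_or(pred_mask, true_mask).sum()
    return inter / union if union > 0 else 1.0

heat = np.array([[0.9, 0.8, 0.1],
                 [0.7, 0.2, 0.0],
                 [0.1, 0.0, 0.0]])
truth = np.array([[1, 1, 0],
                  [1, 1, 0],
                  [0, 0, 0]], dtype=bool)
mask = heat_map_to_mask(heat)
print(iou(mask, truth))  # 0.75
```

Lowering the threshold grows the mask, trading precision for recall, so the chosen value directly affects the reported mIoU.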

Text-to-Image Generation

Text-to-image generation is the task of generating an image from a textual description. Stable Diffusion starts from random latent noise and iteratively denoises it, with the text embedding steering each step through cross-attention so that the final image matches the description.

Unsupervised Semantic Segmentation

Unsupervised semantic segmentation involves identifying clusters of pixels in an image with similar semantic meanings without prior knowledge of the object classes. Internal features or attention maps of Stable Diffusion can be clustered to group pixels by similarity, which can aid unsupervised semantic segmentation.
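Grouping pixels by similarity can be sketched with plain k-means over per-pixel feature vectors. Here the features are toy vectors; in practice they might be derived from a diffusion model's internal features or attention maps, an assumption this sketch does not implement. The function name and parameters are illustrative.

```python
import numpy as np

def kmeans(features, k, n_iters=20, seed=0):
    """Plain k-means: features is (n_pixels, d); returns a label per pixel."""
    rng = np.random.default_rng(seed)
    centers = features[rng.choice(len(features), size=k, replace=False)]
    for _ in range(n_iters):
        # Assign each pixel to its nearest center (squared Euclidean distance).
        dists = ((features[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)
        labels = dists.argmin(axis=1)
        # Move each center to the mean of its assigned pixels (keep it if empty).
        for j in range(k):
            if np.any(labels == j):
                centers[j] = features[labels == j].mean(axis=0)
    return labels

# Toy 4x4 "image" whose left and right halves have different feature vectors.
h, w = 4, 4
feats = np.zeros((h, w, 2))
feats[:, :2] = [0.0, 0.0]
feats[:, 2:] = [5.0, 5.0]
feats += np.random.default_rng(1).normal(scale=0.1, size=feats.shape)
labels = kmeans(feats.reshape(-1, 2), k=2).reshape(h, w)
print(labels)
```

Cluster IDs are arbitrary; only the grouping matters, which is why unsupervised segments still need to be matched to class names afterward.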

Fine-tuning

Stable unCLIP 2.1 offers two variants, Stable unCLIP-L and Stable unCLIP-H, conditioned on CLIP ViT-L and ViT-H image embeddings, respectively, and based on SD2.1-768. The model supports image variations and mixing operations, can be combined with other models such as Karlo, and has a public demo at clipdrop.co/stable-diffusion-reimagine.

Stable Diffusion 2.1 comprises two models, Stable Diffusion 2.1-v (768x768 resolution) and Stable Diffusion 2.1-base (512x512 resolution). Both have the same number of parameters and the same architecture as 2.0, are fine-tuned from 2.0, and use less restrictive NSFW filtering of the LAION-5B dataset. By default, the attention operation is evaluated at full precision when xformers is not installed.

Stable Diffusion 2.0 includes Stable Diffusion 2.0-v (768x768 resolution), which has the same number of U-Net parameters as 1.5 but uses OpenCLIP-ViT/H as the text encoder and is trained from scratch. It is fine-tuned from SD 2.0-base, which was trained as a standard noise-prediction model on 512x512 images. The release also includes a x4 upscaling latent text-guided diffusion model, a new depth-guided stable diffusion model fine-tuned from SD 2.0-base and conditioned on monocular depth estimates from MiDaS, and a text-guided inpainting model, also fine-tuned from SD 2.0-base.

Benchmark Results

Benchmarking is an important part of evaluating any generative model, including Stable Diffusion. The key results are:

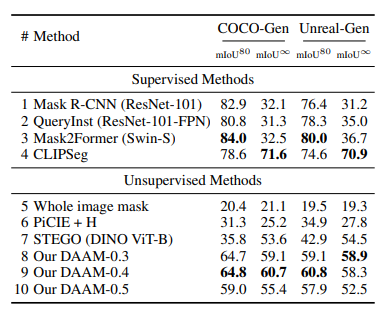

Table 1: mIoU of semantic segmentation methods on our synthesized datasets. Best in each section bolded.

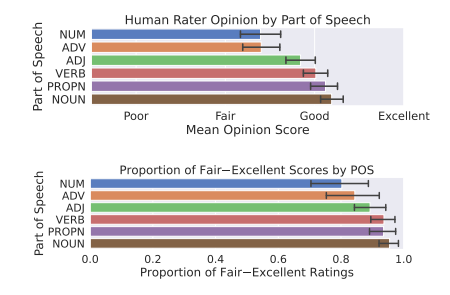

Figure 3: On the top, mean opinion scores grouped by part of speech, with 95% confidence interval bars; on the bottom, proportion of fair–excellent scores, grouped by part-of-speech.

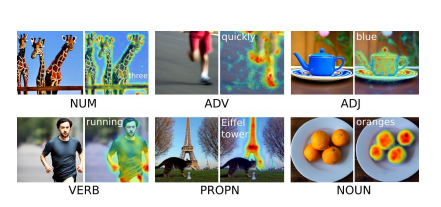

Figure 4: Example generations and DAAM heat maps from COCO for each interpretable part-of-speech.

Sample Codes

To use DAAM as a library, import and use it as follows:

```python
from daam import trace, set_seed
from diffusers import StableDiffusionPipeline
from matplotlib import pyplot as plt
import torch

model_id = 'stabilityai/stable-diffusion-2-base'
device = 'cuda'

pipe = StableDiffusionPipeline.from_pretrained(model_id)
pipe = pipe.to(device)

prompt = 'A dog runs across the field'
gen = set_seed(0)  # for reproducibility

# Run generation in mixed precision without tracking gradients.
with torch.autocast(device_type='cuda', dtype=torch.float16), torch.no_grad():
    # trace() hooks the pipeline so attention maps are recorded during generation.
    with trace(pipe) as tc:
        out = pipe(prompt, num_inference_steps=30, generator=gen)
        # Aggregate attention over the whole generation, then extract one word's map.
        heat_map = tc.compute_global_heat_map()
        heat_map = heat_map.compute_word_heat_map('dog')
        heat_map.plot_overlay(out.images[0])
        plt.show()
```

Model Limitations

- Although LDMs require far less computation than pixel-based methods, their sequential sampling process is still slower than that of GANs.

- The use of LDMs can be questionable when high precision is required.

- For tasks requiring fine-grained accuracy in pixel space, the reconstruction capacity of f = 4 autoencoding models may become a bottleneck.

- The super-resolution models may already be somewhat limited in this respect.
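The role of the downsampling factor f can be checked with simple arithmetic: an autoencoder with factor f maps an H × W × 3 image to an (H/f) × (W/f) × c latent. This sketch assumes c = 4 latent channels for both factors for simplicity; f = 8 with 4 channels is the setting used by Stable Diffusion's autoencoder, while the channel count for f = 4 models is an illustrative assumption.

```python
def latent_shape(height, width, f, channels=4):
    """Spatial downsampling by factor f; the channel count is an assumed parameter."""
    if height % f or width % f:
        raise ValueError("image size must be divisible by f")
    return (height // f, width // f, channels)

def compression_ratio(height, width, f, channels=4):
    """How many times fewer values the latent holds than the RGB image."""
    h, w, c = latent_shape(height, width, f, channels)
    return (height * width * 3) / (h * w * c)

for f in (4, 8):
    print(f, latent_shape(512, 512, f), compression_ratio(512, 512, f))
# 4 (128, 128, 4) 12.0
# 8 (64, 64, 4) 48.0
```

A larger f makes diffusion cheaper because there are fewer latent values, but it asks the decoder to reconstruct more detail, which is the trade-off behind the reconstruction-bottleneck limitation.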

Other Models

PFGM++

PFGM++ is a family of physics-inspired generative models that embeds trajectories for N-dimensional data in an N+D-dimensional space while controlling the progression with a simple scalar norm of the D additional variables.


MDT-XL/2

MDT (Masked Diffusion Transformer) proposes a mask latent modeling scheme for transformer-based diffusion probabilistic models (DPMs) to improve contextual relation learning among object semantics in an image.

