
StyleGAN-XL

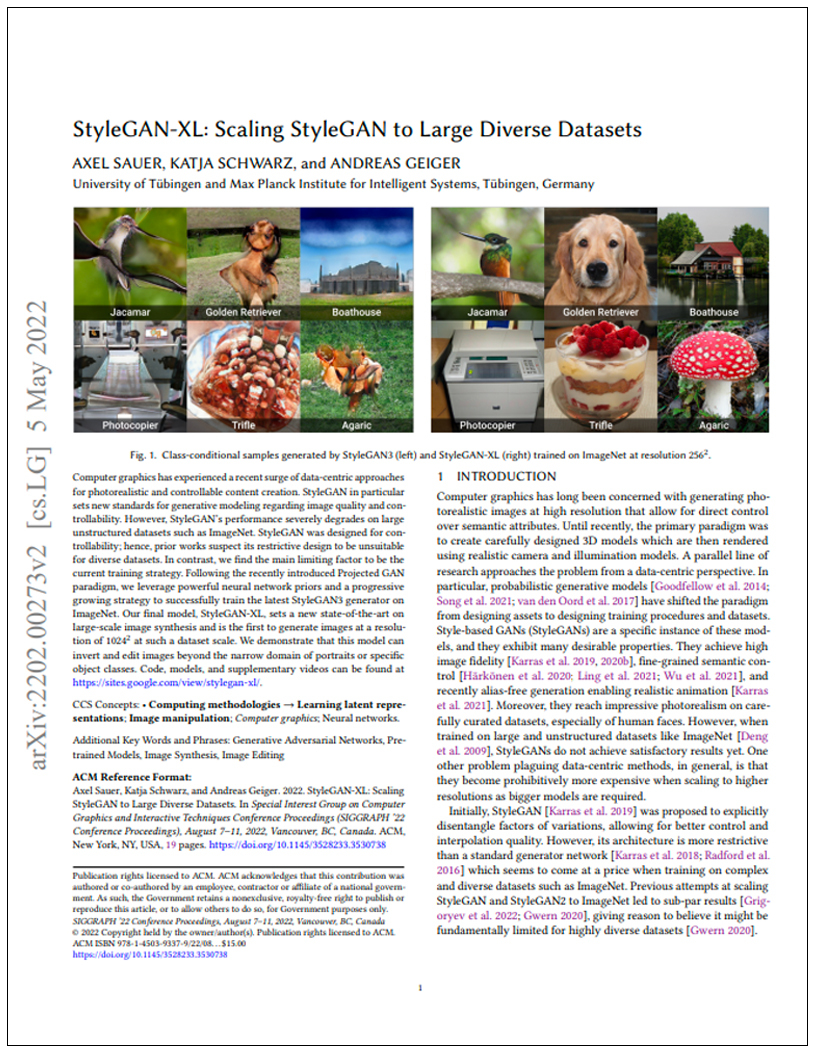

StyleGAN-XL is an image synthesis model that sets a new state of the art in large-scale image synthesis and is the first to generate images at 1024x1024 pixels at ImageNet scale. Its efficient training strategy makes it possible to train a larger model with less computation than prior approaches, and it can generate images beyond narrow domains such as portraits or specific objects. Although it is three times larger than a standard StyleGAN3, it matches the performance of prior state-of-the-art models with far less training time.


An Overview of StyleGAN-XL


StyleGAN-XL outperforms BigGAN on ImageNet

Average inversion PSNR of 13.5

Using basic latent optimization, StyleGAN-XL achieves an average PSNR of 13.5 when inverting images from the ImageNet validation set at 512x512, compared with 10.8 for BigGAN.

State-of-the-art performance at a higher resolution

512x512 pixels

Matching prior state-of-the-art performance at 512x512 resolution takes StyleGAN-XL 400 days of training on a single NVIDIA Tesla V100 GPU.

Model trained on a total of 220 million images

220 Million

The authors trained the model on a total of 220 million images, significantly larger than the datasets used in previous state-of-the-art models.



Key highlights

Highlights of StyleGAN-XL:

- Sets a new state of the art in large-scale image synthesis

- First model to generate images at a resolution of 1024x1024 pixels at ImageNet scale

- Can invert and edit images beyond narrow domains such as portraits or specific object classes

- Its efficient training strategy makes it possible to train a model three times larger in depth and parameter count than a standard StyleGAN3, using less computation than previously required

- Reaches prior state-of-the-art performance in a fraction of the training time

- Matching prior state-of-the-art performance at 512x512 pixels requires 400 days of training on a single NVIDIA Tesla V100

Training Details

Training data

The model is trained on a diverse set of datasets, including ImageNet, LSUN, and YFCC100M. The datasets are first preprocessed to improve consistency and remove low-quality images.


Training Procedure

The authors introduce an efficient progressive growing strategy that enables large-scale training while keeping it stable.
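At its core, progressive growing follows a resolution schedule: training starts at a low resolution, and each new stage adds generator layers that double the output size. The sketch below only enumerates such a schedule; the helper name `growth_schedule` and the 16x16 starting resolution are illustrative assumptions, and the actual layer addition and training loop are omitted.

```python
def growth_schedule(start_res=16, final_res=1024):
    """Return the output resolution trained at each progressive-growing stage.

    Hypothetical helper: every stage doubles the resolution of the previous
    one until final_res is reached.
    """
    if start_res <= 0 or final_res < start_res:
        raise ValueError("need 0 < start_res <= final_res")
    stages = [start_res]
    while stages[-1] < final_res:
        stages.append(stages[-1] * 2)
    if stages[-1] != final_res:
        raise ValueError("final_res must be start_res times a power of two")
    return stages


for stage, res in enumerate(growth_schedule()):
    print(f"stage {stage}: train at {res}x{res}")
```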

Training time and resources

The authors used a total of 512 NVIDIA V100 GPUs to train the model for approximately 9 days.

Key Results

The table below lists StyleGAN-XL's reported scores on image generation benchmarks. The metric is Fréchet Inception Distance (FID); lower is better.

| Task | Dataset | FID |
|---|---|---|
| Image Generation | CIFAR-10 | 1.85 |
| Image Generation | FFHQ 1024x1024 | 2.02 |
| Image Generation | FFHQ 256x256 | 2.19 |
| Image Generation | FFHQ 512x512 | 2.41 |
| Image Generation | ImageNet 128x128 | 1.81 |
| Image Generation | ImageNet 256x256 | 2.3 |
| Image Generation | ImageNet 32x32 | 1.1 |
| Image Generation | ImageNet 512x512 | 2.4 |
| Image Generation | ImageNet 64x64 | 1.51 |
| Image Generation | Pokemon 1024x1024 | 25.47 |
| Image Generation | Pokemon 256x256 | 23.97 |
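For reference, FID (Fréchet Inception Distance), the standard metric for these image generation benchmarks, fits a Gaussian to Inception-network features of real images (mean μ_r, covariance Σ_r) and of generated images (μ_g, Σ_g), and measures the Fréchet distance between the two:

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\left(\Sigma_r + \Sigma_g - 2\left(\Sigma_r \Sigma_g\right)^{1/2}\right)
$$

Identical feature distributions give an FID of 0, so smaller values indicate generated images whose feature statistics are closer to the real data.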

Business Applications

This table provides a quick overview of how StyleGAN-XL can streamline various business operations relating to image generation.

| Tasks | Business Use Cases | Examples |
|---|---|---|
| Image Synthesis | Marketing and advertising | Generate high-quality images of products and services for marketing campaigns. |
| | Entertainment and media | Create realistic images of characters and scenes for movies, TV shows, and video games. |
| | Architecture and design | Generate concept images and architectural visualizations for design and construction projects. |
| Human face synthesis | Fashion and beauty | Create realistic images of models wearing clothing and accessories for fashion campaigns. |
| | Entertainment and media | Generate lifelike images of characters for movies, TV shows, and video games. |
| | Law enforcement and security | Generate images of suspects based on eyewitness descriptions or composite sketches. |

Model Features

The technical features of StyleGAN-XL include:

Multi-resolution architecture

StyleGAN-XL uses a multi-resolution generator architecture trained with progressive growing, which makes it possible to generate high-quality images at resolutions of up to 1024x1024 pixels.

Adaptive instance normalization

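StyleGAN-XL inherits the style-based design that the original StyleGAN introduced with adaptive instance normalization (AdaIN), in which the mapped latent code adjusts the mean and variance of the features in each intermediate generator layer. This allows for fine-grained control over the style of the generated images. From StyleGAN2 onward, including the StyleGAN3 generator that StyleGAN-XL scales up, the same per-layer style control is implemented with weight modulation and demodulation (described below) rather than explicit AdaIN.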
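For reference, here is a minimal NumPy sketch of AdaIN as defined in the original StyleGAN: each channel of a feature map is normalized over its spatial positions and then rescaled and shifted by style parameters. In StyleGAN those parameters come from a learned affine transform of the latent w; here they are passed in directly, and the function name `adain` is our own.

```python
import numpy as np


def adain(x, style_scale, style_bias, eps=1e-5):
    """Adaptive instance normalization for a feature map of shape (C, H, W).

    Each channel is normalized to zero mean and unit variance over its
    spatial positions, then scaled and shifted by per-channel style
    parameters of shape (C,).
    """
    mean = x.mean(axis=(1, 2), keepdims=True)
    std = x.std(axis=(1, 2), keepdims=True)
    normalized = (x - mean) / (std + eps)
    return style_scale[:, None, None] * normalized + style_bias[:, None, None]


rng = np.random.default_rng(0)
features = rng.normal(loc=3.0, scale=2.0, size=(4, 8, 8))
scale = np.array([1.0, 0.5, 2.0, 1.5])
bias = np.array([0.0, 1.0, -1.0, 0.5])
out = adain(features, scale, bias)
print(out.mean(axis=(1, 2)))  # per-channel means now match `bias`
print(out.std(axis=(1, 2)))   # per-channel std devs now (almost exactly) match `scale`
```

The input statistics (mean 3, std 2) are discarded entirely, which is why the style parameters alone determine each channel's first- and second-order statistics.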

Noise injection

StyleGAN-XL uses a noise injection mechanism to introduce random variation in the features of the generator network. This helps to increase the diversity and randomness of the generated images.
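A minimal sketch of per-pixel noise injection in the style of the original StyleGAN, assuming a (C, H, W) feature map: a single-channel Gaussian noise image is shared across all channels and scaled by a per-channel strength, which would be a learned parameter in a real generator. The function `inject_noise` is hypothetical.

```python
import numpy as np


def inject_noise(x, per_channel_strength, rng):
    """Add per-pixel noise to a feature map x of shape (C, H, W).

    One (H, W) Gaussian noise image is shared by all channels and scaled
    by a per-channel strength of shape (C,).
    """
    _, h, w = x.shape
    noise = rng.standard_normal((h, w))
    return x + per_channel_strength[:, None, None] * noise[None, :, :]


rng = np.random.default_rng(0)
features = np.zeros((3, 4, 4))
strength = np.array([0.0, 1.0, 2.0])
out = inject_noise(features, strength, rng)
print(out[0])  # strength 0: channel left unchanged
```

Because the noise image is shared, channels differ only in how strongly they respond to it; a zero strength switches the noise off for that channel.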

Demodulation

StyleGAN-XL uses weight modulation and demodulation: each convolution's weights are scaled by the style vector and then renormalized so that the layer's outputs keep roughly unit variance. This keeps the features properly scaled without normalizing the activations directly.
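The modulation and demodulation step can be sketched in NumPy following the StyleGAN2 formulation: first `w'[j, i, k] = s[i] * w[j, i, k]` for input channel i, output channel j, and kernel position k, then each output channel j is divided by `sqrt(sum over i, k of w'[j, i, k]**2 + eps)`. The function name and toy shapes are illustrative assumptions; a real layer would then convolve its input with these per-sample weights.

```python
import numpy as np


def modulate_demodulate(weight, style, eps=1e-8):
    """StyleGAN2-style weight modulation followed by demodulation.

    weight: convolution weights of shape (out_ch, in_ch, kh, kw)
    style:  per-input-channel scales of shape (in_ch,)
    Returns weights whose L2 norm over (in_ch, kh, kw) is ~1 for every
    output channel, so i.i.d. unit-variance inputs give roughly
    unit-variance outputs.
    """
    modulated = weight * style[None, :, None, None]
    norm = np.sqrt((modulated ** 2).sum(axis=(1, 2, 3), keepdims=True) + eps)
    return modulated / norm


rng = np.random.default_rng(0)
w = rng.normal(size=(16, 8, 3, 3))
s = rng.uniform(0.5, 2.0, size=8)
w_demod = modulate_demodulate(w, s)
norms = np.sqrt((w_demod ** 2).sum(axis=(1, 2, 3)))
print(norms)  # ~1.0 for every output channel
```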

Extended network depth

StyleGAN-XL extends the depth of the generator network with a new architecture called the “bottleneck block,” which allows for deeper networks and better image quality.

Large-scale training

StyleGAN-XL uses a large-scale training process that involves distributed training on multiple GPUs. This allows for faster convergence and higher-quality images.

Model Tasks

Image synthesis

Create new images with varying styles, colors, and shapes within the given classes. StyleGAN-XL can generate high-quality 32x32 images for the 10 classes of the CIFAR-10 dataset, and class-conditional images of ImageNet classes at resolutions of up to 1024x1024.

Human face synthesis

StyleGAN-XL can generate high-quality human faces with high resolution (up to 1024x1024), diversity, and realism. Create new human faces with varying ages, gender, ethnicity, and other characteristics. Manipulate images by performing age progression/regression, gender-swapping, and expression transfer tasks.

Fine-tuning

The authors do not describe a dedicated fine-tuning procedure for StyleGAN-XL. The closest related experiment is GAN inversion: using the original latent space W and basic latent optimization, they find that StyleGAN-XL already achieves satisfactory inversion results. On the ImageNet validation set at 512x512 resolution, it yields an average PSNR of 13.5 and an FID of 21.7, improving over BigGAN's PSNR of 10.8 and FID of 47.5.
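Basic latent optimization keeps the generator fixed and runs gradient descent on the latent code until the generated image matches a target; PSNR, `10 * log10(MAX**2 / MSE)`, then measures how close the reconstruction is. The toy sketch below illustrates both steps under explicit assumptions: a tiny random sigmoid "generator" stands in for StyleGAN-XL, the loss is plain pixel-wise MSE, and all names are hypothetical. It does not reproduce the paper's actual inversion objective or hyperparameters.

```python
import numpy as np


def sigmoid(v):
    return 1.0 / (1.0 + np.exp(-v))


def psnr(reference, estimate, max_val=1.0):
    """Peak signal-to-noise ratio in dB for signals with values in [0, max_val]."""
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")
    return 10.0 * np.log10(max_val ** 2 / mse)


# Toy stand-in for a generator: maps an 8-dim latent to 64 "pixels" in (0, 1).
rng = np.random.default_rng(0)
A = rng.normal(size=(64, 8)) / np.sqrt(8)


def generate(w):
    return sigmoid(A @ w)


target = generate(rng.normal(size=8))  # the image we want to invert

# Basic latent optimization: gradient descent on the mean squared pixel error.
w = np.zeros(8)
psnr_before = psnr(target, generate(w))
lr = 2.0
for _ in range(1000):
    g = generate(w)
    # d/dw mean((g - target)^2), using sigmoid'(v) = g * (1 - g)
    grad = (2.0 / g.size) * (A.T @ ((g - target) * g * (1.0 - g)))
    w -= lr * grad
psnr_after = psnr(target, generate(w))
print(f"PSNR before: {psnr_before:.1f} dB, after: {psnr_after:.1f} dB")
```

A real inversion would backpropagate through the full generator instead of a hand-derived gradient, but the loop has the same shape: generate, compare with the target, and update only the latent.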

Benchmark Results

Benchmarking is an important way to evaluate the performance of any generative model, including StyleGAN-XL. The key results are:

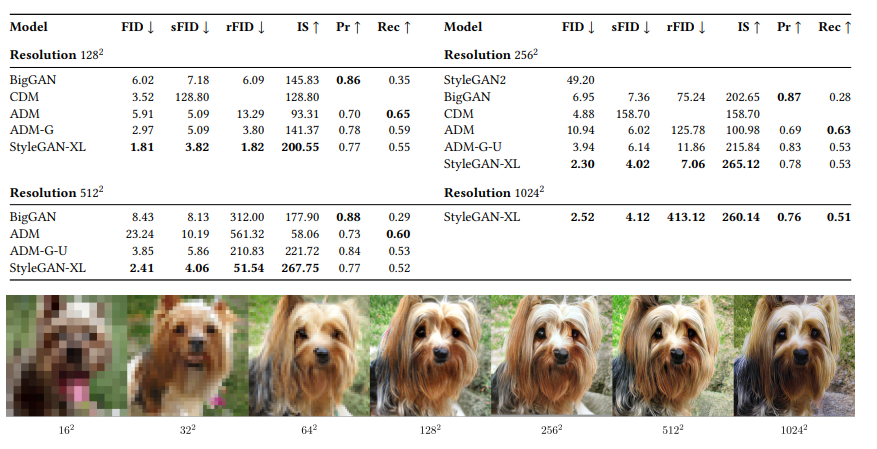

StyleGAN-XL Models

Table - Image Synthesis on ImageNet. Empty cells indicate that the model was unavailable, and the respective metric was not evaluated in the original work.

Figure - Samples at Different Resolutions Using the Same w. The samples are generated by the models obtained during progressive growing. We upsample all images to 1024x1024 using nearest-neighbor interpolation for visualization. Zooming in is recommended.
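Nearest-neighbor upsampling, as used for the visualization in the figure, simply repeats each pixel. A minimal NumPy sketch, assuming a square (H, W, C) image whose side evenly divides 1024; `upsample_nearest` is a hypothetical helper:

```python
import numpy as np


def upsample_nearest(img, target_size=1024):
    """Nearest-neighbor upsampling of an (H, W, C) image to target_size x target_size.

    Assumes a square input whose side divides target_size, so each pixel is
    repeated factor x factor times.
    """
    h, w = img.shape[:2]
    if h != w or target_size % h != 0:
        raise ValueError("expected a square image whose side divides target_size")
    factor = target_size // h
    return img.repeat(factor, axis=0).repeat(factor, axis=1)


low_res = (np.arange(16 * 16 * 3) % 256).astype(np.uint8).reshape(16, 16, 3)
big = upsample_nearest(low_res)
print(big.shape)  # (1024, 1024, 3)
```

Unlike bilinear or bicubic resizing, this adds no interpolated values, so low-resolution samples keep their blocky pixels and can be compared honestly against high-resolution ones.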

Sample Codes

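The following sample shows how to generate an image from a pretrained StyleGAN-family generator on a GPU with PyTorch. It relies on the `Generator` class from the community stylegan2-pytorch code (`model.py`) and a StyleGAN2 FFHQ checkpoint (`stylegan2-ffhq-config-f.pt`). The official StyleGAN-XL release distributes pickled networks loaded through its own utilities, so treat this as an illustration of the sampling workflow rather than StyleGAN-XL-specific code: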

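```python
import torch
from torchvision.utils import save_image

# Generator class from the community stylegan2-pytorch code (model.py)
from model import Generator

device = torch.device('cuda')

# Load a pretrained 1024x1024 generator (512-dim latent, 8-layer mapping network)
g_ema = Generator(1024, 512, 8)
checkpoint = torch.load('stylegan2-ffhq-config-f.pt', map_location='cpu')
g_ema.load_state_dict(checkpoint['g_ema'], strict=False)
g_ema.eval().to(device)

# Generate an image
with torch.no_grad():
    # Average latent: the point that truncation pulls samples toward
    mean_latent = g_ema.mean_latent(4096)
    z = torch.randn(1, g_ema.style_dim, device=device)
    # The FFHQ model is unconditional, so no class label is passed
    img, _ = g_ema([z], truncation=0.5, truncation_latent=mean_latent)

# Outputs lie in [-1, 1]; normalize=True with value_range maps them to [0, 1]
save_image(img, 'generated.png', normalize=True, value_range=(-1, 1))
```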
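The `truncation=0.5` argument in the sample applies the truncation trick, which pulls a latent toward the average latent to trade diversity for fidelity. A minimal NumPy sketch of that interpolation, using random vectors as an assumed stand-in for mapping-network outputs; `truncate` is a hypothetical helper:

```python
import numpy as np


def truncate(w, w_avg, psi):
    """Truncation trick: move latent w toward the average latent w_avg.

    psi = 1 leaves w unchanged; psi = 0 collapses every sample to w_avg.
    """
    return w_avg + psi * (w - w_avg)


rng = np.random.default_rng(0)
# Stand-in for mapped latents; in practice w_avg averages many mapping-network outputs.
ws = rng.normal(loc=0.3, size=(4096, 512))
w_avg = ws.mean(axis=0)
w = ws[0]
w_trunc = truncate(w, w_avg, psi=0.5)
ratio = np.linalg.norm(w_trunc - w_avg) / np.linalg.norm(w - w_avg)
print(ratio)  # approximately 0.5
```

With psi = 0.5, every sample's distance from the average latent is halved: outputs become more typical and cleaner, at the cost of variety.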

Model Limitations

- Despite the impressive results, the authors note that the generated images still suffer from certain artifacts, such as color fringing and over-smoothing.

- They also note that the current model is limited in its ability to generate high-quality images of specific object classes, such as animals or vehicles, due to the diversity of the training datasets.

Other Image Generation Models

PFGM++

PFGM++ is a family of physics-inspired generative models that embeds trajectories for N-dimensional data in (N+D)-dimensional space using a simple scalar norm of the additional variables.


MDT-XL2

MDT proposes a mask latent modeling scheme for transformer-based DPMs to improve contextual and relation learning among semantics in an image.


Stable Diffusion

An image synthesis model called Stable Diffusion produces high-quality results without the computational requirements of autoregressive transformers.
