InstructEval Models Explained

Flan-UL2

Flan-UL2 is an encoder-decoder Transformer model pre-trained on the C4 web-text corpus. It improves on the original UL2 model in several ways. First, it has a larger receptive field of 2048 tokens, which makes it more suitable for few-shot in-context learning. Second, it does not require mode switch tokens, which makes it easier to use. Finally, it has been fine-tuned using the Flan prompt tuning and dataset collection, further improving its performance. Flan-UL2 performs well across a variety of tasks, including question answering, text summarization, and natural language inference.


An Overview of Flan-UL2

Flan-UL2 is an advanced large language model (LLM) created by Google AI. It performs strongly on a range of NLP evaluations covering tasks such as question answering, summarization, and natural language inference; the headline results reported for it are on the MMLU and BIG-Bench Hard (BBH) benchmarks.

Its base model, UL2, was pre-trained on roughly 1 trillion tokens of the C4 corpus.

20 billion parameters

Flan-UL2 has 20 billion parameters, roughly one-ninth of the 175 billion parameters in GPT-3.

Because it has far fewer parameters than GPT-3, Flan-UL2 also needs much less memory to run.

Efficient and scalable

It builds on the UL2 encoder-decoder architecture; "Flan" refers to the instruction-tuning recipe applied on top of it rather than to a separate architecture. Its moderate size lets Flan-UL2 run faster and with less memory than much larger LLMs.

Flan-UL2 can also serve as a starting checkpoint that others fine-tune further, which can improve performance on their specific tasks.

Community-driven model

The open-source nature of Flan-UL2 makes it easy to extend the model with new features or capabilities. This allows users to customize the model to meet their specific needs.


Model Details

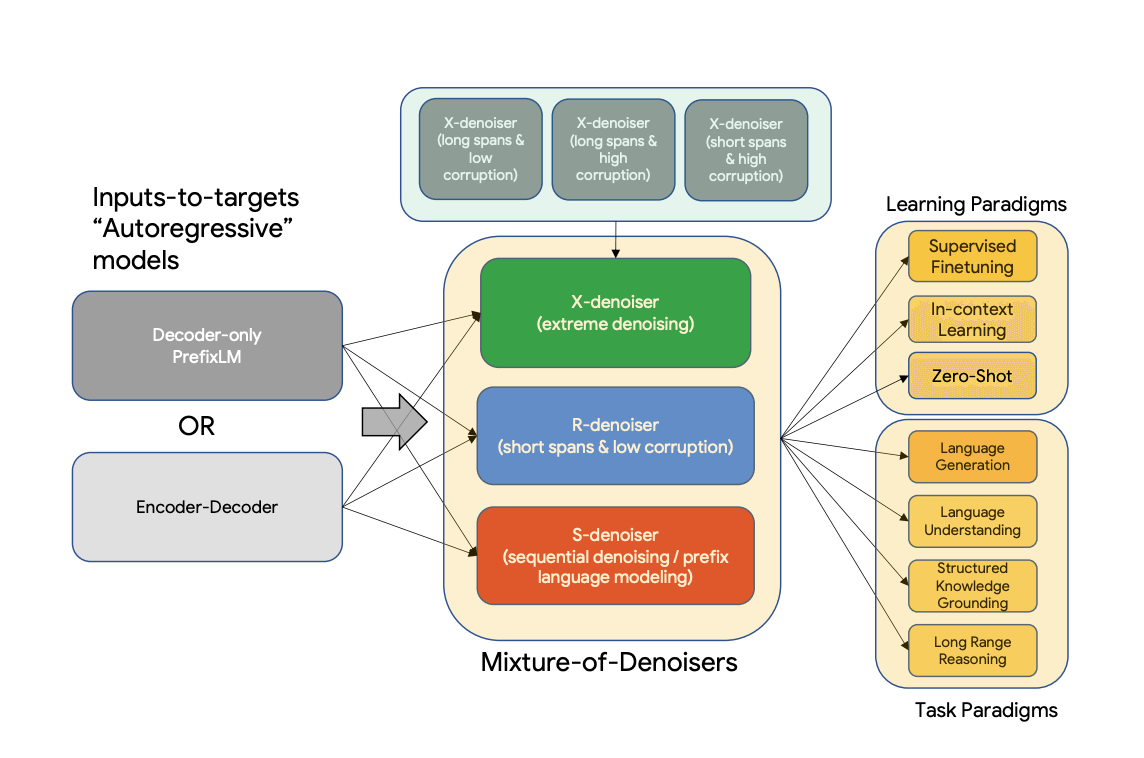

Flan-UL2 was developed by a team of researchers at Google AI, led by Yi Tay. It builds on UL2, whose pre-training objective, Mixture-of-Denoisers (MoD), blends the functionalities of diverse pre-training paradigms so that a single model performs well across many kinds of tasks. UL2 was pre-trained on C4, a large corpus of cleaned web text; Flan-UL2 was then fine-tuned on the Flan collection, a set of academic datasets phrased as instructions. As a result, Flan-UL2 is well suited to tasks that require understanding and following instructions, such as machine translation, question answering, and summarization.

Flan-UL2 is effective on a variety of natural language processing (NLP) tasks. For example, on the GLUE benchmark, Flan-UL2 achieved an accuracy of 88.5%, comparable to GPT-3. It has also been effective on tasks that require few-shot learning, such as code completion and text summarization.

Overall, Flan-UL2 is a capable LLM with practical advantages over GPT-3: it is smaller, cheaper to run, and openly available, while remaining competitive on many NLP tasks. It is a good choice for applications where resource constraints are a concern but strong performance is still required.

Model Highlights

Flan-UL2, a powerful large language model (LLM), offers roughly twice the speed of GPT-3. This efficiency makes it a compelling choice for many applications, improving performance while keeping costs down. At 20 billion parameters, Flan-UL2 is roughly one-ninth the size of the 175-billion-parameter GPT-3 (20/175 ≈ 0.11), yet it remains competitive in accuracy on many tasks.

- The original UL2 model had a receptive field of only 512 tokens, which made it less suitable for few-shot prompting with many examples.

- Additionally, the original UL2 model required mode switch tokens, which made inference and fine-tuning less convenient. To address these shortcomings, UL2 20B was trained for an additional 100k steps (with a small batch size) to remove its reliance on mode tokens, and this updated checkpoint was then instruction-tuned to produce Flan-UL2.

- Flan-UL2's smaller size brings substantial memory savings. In bfloat16, its 20 billion parameters occupy roughly 40 GB, so a server with 128 GB of memory can host two Flan-UL2 instances with room to spare, whereas GPT-3's 175 billion parameters need roughly 350 GB in the same precision and would not fit at all. These savings translate into lower cloud computing costs.

- The same savings widen the range of hardware that can host the model, although a 20-billion-parameter model still needs far more memory than a typical mobile device offers: even at 4-bit precision, the weights alone take about 10 GB.
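A weight-only estimate (number of parameters multiplied by bytes per parameter) shows where these memory savings come from. The sketch below assumes the standard sizes of 4, 2, 1, and 0.5 bytes per parameter for float32, bfloat16, int8, and int4; the `overhead` multiplier for activations and runtime buffers is a hypothetical knob, not a measured value.

```python
# Weight-only memory estimate: parameters x bytes per parameter.
# Ignores activations, KV caches and framework overhead unless `overhead` is set.
BYTES_PER_PARAM = {"float32": 4.0, "bfloat16": 2.0, "int8": 1.0, "int4": 0.5}

FLAN_UL2_PARAMS = 20e9   # 20 billion parameters
GPT3_PARAMS = 175e9      # 175 billion parameters


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate size of the weights in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def copies_that_fit(num_params: float, dtype: str, budget_gb: float, overhead: float = 1.0) -> int:
    """Number of full model copies that fit in `budget_gb`.

    `overhead` is a hypothetical multiplier for runtime memory beyond the weights
    (e.g. 1.2 means 20% extra); the right value depends on batch size and sequence length.
    """
    per_copy = weight_memory_gb(num_params, dtype) * overhead
    return int(budget_gb // per_copy)


if __name__ == "__main__":
    for name, params in [("Flan-UL2", FLAN_UL2_PARAMS), ("GPT-3", GPT3_PARAMS)]:
        for dtype in BYTES_PER_PARAM:
            print(
                f"{name:<8} {dtype:<8} {weight_memory_gb(params, dtype):7.1f} GB, "
                f"copies in 128 GB (20% overhead): {copies_that_fit(params, dtype, 128, overhead=1.2)}"
            )
```

With an assumed 20% overhead, each bfloat16 copy of Flan-UL2 needs about 48 GB, so two copies fit in 128 GB, while a single bfloat16 copy of GPT-3 (about 350 GB of weights) does not fit at all.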

Training Details

Training Data

Flan-UL2 builds on UL2, which was pre-trained on the C4 corpus, a large collection of cleaned English web text. This broad training corpus lets the model learn a wide range of linguistic patterns, which underpins its ability to generate informative, fluent text.

Training Infrastructure

UL2, the base of Flan-UL2, was trained on the C4 corpus using Google Cloud TPU v4 hardware and the JAX-based T5X framework, which is why the original checkpoints are distributed in T5X format.

Training Objective

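Flan-UL2 inherits UL2's mixture-of-denoisers (MoD) pre-training objective, which mixes three kinds of denoising tasks: regular span corruption (R-denoiser), with short spans and a low corruption rate as in T5; extreme span corruption (X-denoiser), with long spans and/or a high corruption rate; and sequential denoising (S-denoiser), a prefix language-modeling task in which the model continues a text from its beginning. This mixture helps Flan-UL2 learn diverse linguistic features and perform well across many tasks.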
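UL2's mixture of denoisers combines regular span corruption, extreme span corruption, and sequential (prefix-LM) denoising. The toy sketch below shows what each kind of training example looks like on a list of word tokens. It is a simplification, not UL2's implementation: spans are placed evenly rather than randomly, and the corruption rates, span lengths, and prefix fraction in `DENOISERS` are illustrative assumptions. The `<extra_id_N>` sentinel names follow the T5 convention.

```python
from typing import Dict, List, Tuple

Tokens = List[str]


def _split_evenly(total: int, parts: int) -> List[int]:
    """Split `total` into `parts` near-equal positive integers."""
    return [total // parts + (1 if i < total % parts else 0) for i in range(parts)]


def span_corrupt(tokens: Tokens, corruption_rate: float, mean_span_len: float) -> Tuple[Tokens, Tokens]:
    """T5-style span corruption with <extra_id_N> sentinels.

    Simplification: spans are evenly spaced instead of randomly placed.
    """
    n = len(tokens)
    num_noise = max(1, min(n - 1, round(n * corruption_rate)))
    num_spans = max(1, round(num_noise / mean_span_len))
    num_spans = min(num_spans, num_noise, n - num_noise)  # keep every segment non-empty
    noise_lens = _split_evenly(num_noise, num_spans)
    keep_lens = _split_evenly(n - num_noise, num_spans)

    inputs: Tokens = []
    targets: Tokens = []
    pos = 0
    for i, (keep, noise) in enumerate(zip(keep_lens, noise_lens)):
        inputs.extend(tokens[pos:pos + keep])
        pos += keep
        sentinel = f"<extra_id_{i}>"
        inputs.append(sentinel)    # the masked span is replaced by a single sentinel
        targets.append(sentinel)   # the target is the sentinel followed by the original span
        targets.extend(tokens[pos:pos + noise])
        pos += noise
    return inputs, targets


def prefix_lm(tokens: Tokens, prefix_fraction: float = 0.5) -> Tuple[Tokens, Tokens]:
    """S-denoiser: the model sees a prefix and must generate the continuation."""
    split = max(1, min(len(tokens) - 1, round(len(tokens) * prefix_fraction)))
    return tokens[:split], tokens[split:]


def reconstruct(inputs: Tokens, targets: Tokens) -> Tokens:
    """Undo span corruption, to check that no token was lost."""
    spans: Dict[str, Tokens] = {}
    current = None
    for tok in targets:
        if tok.startswith("<extra_id_"):
            current = tok
            spans[current] = []
        else:
            spans[current].append(tok)
    out: Tokens = []
    for tok in inputs:
        out.extend(spans.get(tok, [tok]))
    return out


# Illustrative settings only: short spans / low rate (R), long spans / high rate (X), prefix LM (S).
DENOISERS = {
    "R": lambda t: span_corrupt(t, corruption_rate=0.15, mean_span_len=3),
    "X": lambda t: span_corrupt(t, corruption_rate=0.5, mean_span_len=32),
    "S": lambda t: prefix_lm(t, prefix_fraction=0.5),
}

if __name__ == "__main__":
    sentence = "the mixture of denoisers trains one model on several different corruption tasks at the same time".split()
    for name, make_example in DENOISERS.items():
        inp, tgt = make_example(sentence)
        print(name, "| input:", " ".join(inp), "| target:", " ".join(tgt))
```

The R and X denoisers differ only in how much text they hide and in how long the hidden spans are, while the S denoiser turns the same text into a continuation task.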

Training Observation

UL2 was trained on about 1 trillion tokens using the mixture-of-denoisers objective, which helps it perform well across a wide array of tasks. The breadth of this pre-training, together with the later Flan instruction tuning, contributes to how efficiently the model picks up new tasks.

Model Types

Flan-UL2 is released as a single 20-billion-parameter checkpoint (google/flan-ul2 on Hugging Face):

| Model | Parameters | Architecture | Initialization | Tasks |
| --- | --- | --- | --- | --- |
| Flan-UL2 | 20 billion | Encoder-decoder (T5-style) | UL2 20B | Natural language inference, question answering, summarization, translation, code generation, etc. |

Fine-tuning Method

Flan-UL2 is fine-tuned with the Flan prompt tuning and dataset collection, an instruction-tuning approach. Each task is rendered through a variety of prompt templates, designed to cover as much variation in phrasing as possible, so that the model learns to follow many kinds of instructions. The collection also brings together a wide range of task-specific datasets; this diverse exposure improves the model's ability to generalize, particularly to tasks it has not seen before. As a result, this fine-tuning substantially improves Flan-UL2's performance on natural language inference, question answering, and summarization.
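The idea of rendering one labeled example through several instruction templates can be sketched as follows. The templates and the `NLIExample` type are hypothetical and written only for this illustration; they imitate the Flan style but are not taken from the actual Flan collection.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class NLIExample:
    premise: str
    hypothesis: str
    label: str  # one of OPTIONS


OPTIONS = ["yes", "no", "it is not possible to tell"]

# Hypothetical templates written for this sketch; they only imitate the Flan style.
TEMPLATES = [
    "Premise: {premise}\nHypothesis: {hypothesis}\nDoes the premise entail the hypothesis?\nOPTIONS: {options}",
    "{premise}\n\nBased on the paragraph above, can we conclude that \"{hypothesis}\"?\nOPTIONS: {options}",
    "Read the text and decide whether the claim follows from it.\nText: {premise}\nClaim: {hypothesis}\nOPTIONS: {options}",
]


def to_instruction_pairs(example: NLIExample) -> List[Tuple[str, str]]:
    """Render one labeled example through every template as (input, target) pairs."""
    options = ", ".join(OPTIONS)
    return [
        (t.format(premise=example.premise, hypothesis=example.hypothesis, options=options), example.label)
        for t in TEMPLATES
    ]


if __name__ == "__main__":
    ex = NLIExample("A dog is running through the snow.", "An animal is outside.", "yes")
    for prompt, target in to_instruction_pairs(ex):
        print(prompt, "->", target, sep="\n", end="\n\n")
```

Every template maps to the same target, so the model sees one task phrased in several ways, which is the source of the phrasing variability described above.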

Using the Model

Converting from T5X to Hugging Face

You can use the convert_t5x_checkpoint_to_pytorch.py script from the Transformers repository, passing strict = False when loading the weights. The final layer norm is missing from the original dictionary, which is why the strict = False argument is needed:

    python convert_t5x_checkpoint_to_pytorch.py --t5x_checkpoint_path PATH_TO_T5X_CHECKPOINTS --config_file PATH_TO_CONFIG --pytorch_dump_path PATH_TO_SAVE

Running the Model

For more efficient memory usage, we advise loading the model in 8-bit using the load_in_8bit flag, as follows (this requires a GPU):

    # pip install accelerate transformers bitsandbytes
    from transformers import AutoTokenizer, BitsAndBytesConfig, T5ForConditionalGeneration

    # Quantize the weights to 8-bit with bitsandbytes; device_map="auto" places layers on the available GPU(s)
    model = T5ForConditionalGeneration.from_pretrained(
        "google/flan-ul2",
        device_map="auto",
        quantization_config=BitsAndBytesConfig(load_in_8bit=True),
    )
    tokenizer = AutoTokenizer.from_pretrained("google/flan-ul2")

    input_string = "Answer the following question by reasoning step by step. The cafeteria had 23 apples. If they used 20 for lunch, and bought 6 more, how many apple do they have?"
    inputs = tokenizer(input_string, return_tensors="pt").input_ids.to("cuda")
    outputs = model.generate(inputs, max_length=200)

    # skip_special_tokens drops the <pad> and </s> markers from the decoded text
    print(tokenizer.decode(outputs[0], skip_special_tokens=True))
    # They have 23 - 20 = 3 apples left. They have 3 + 6 = 9 apples. Therefore, the answer is 9.

Otherwise, you can load and run the model in bfloat16 as follows:

    # pip install accelerate transformers
    import torch
    from transformers import AutoTokenizer, T5ForConditionalGeneration

    # Load the weights in bfloat16 (2 bytes per parameter) and spread them across the available devices
    model = T5ForConditionalGeneration.from_pretrained("google/flan-ul2", torch_dtype=torch.bfloat16, device_map="auto")
    tokenizer = AutoTokenizer.from_pretrained("google/flan-ul2")

    input_string = "Answer the following question by reasoning step by step. The cafeteria had 23 apples. If they used 20 for lunch, and bought 6 more, how many apple do they have?"
    inputs = tokenizer(input_string, return_tensors="pt").input_ids.to("cuda")
    outputs = model.generate(inputs, max_length=200)

    # skip_special_tokens drops the <pad> and </s> markers from the decoded text
    print(tokenizer.decode(outputs[0], skip_special_tokens=True))
    # They have 23 - 20 = 3 apples left. They have 3 + 6 = 9 apples. Therefore, the answer is 9.
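Because Flan-UL2's receptive field is 2048 tokens, a few-shot prompt has to fit that budget. The helper below is a minimal sketch, assuming a hypothetical "Q: ... A: ..." prompt format: it adds demonstrations in order and stops at the first one that would push the prompt over the limit. Token counting is passed in as a function, so with the tokenizer from the examples above you could use `count_tokens=lambda s: len(tokenizer(s).input_ids)`.

```python
from typing import Callable, Sequence, Tuple

MAX_INPUT_TOKENS = 2048  # receptive field described for Flan-UL2


def build_few_shot_prompt(
    query: str,
    examples: Sequence[Tuple[str, str]],
    count_tokens: Callable[[str], int],
    max_tokens: int = MAX_INPUT_TOKENS,
) -> str:
    """Prepend as many (question, answer) demonstrations as fit within max_tokens."""
    query_block = f"Q: {query}\nA:"
    if count_tokens(query_block) > max_tokens:
        raise ValueError("the query alone exceeds the token budget")

    shots = []
    for question, answer in examples:
        shot = f"Q: {question}\nA: {answer}"
        candidate = "\n\n".join(shots + [shot, query_block])
        if count_tokens(candidate) > max_tokens:
            break  # keep demonstrations in order; stop at the first that does not fit
        shots.append(shot)
    return "\n\n".join(shots + [query_block])


if __name__ == "__main__":
    demos = [("What is 2 + 2?", "4"), ("What is 3 + 5?", "8"), ("What is 10 - 7?", "3")]
    # Whitespace splitting is only a rough stand-in for a real tokenizer.
    print(build_few_shot_prompt("What is 6 + 1?", demos, count_tokens=lambda s: len(s.split())))
```

Counting tokens on the full candidate string, rather than summing per-shot counts, keeps the check correct even when separators or special tokens add to the length.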

Results

The reported results are shown below. The percentages in the FLAN-UL2 row are relative improvements over FLAN-T5-XXL 11B.

| Model | MMLU | BBH | MMLU-CoT | BBH-CoT | Avg |
| --- | --- | --- | --- | --- | --- |
| FLAN-PaLM 62B | 59.6 | 47.5 | 56.9 | 44.9 | 49.9 |
| FLAN-PaLM 540B | 73.5 | 57.9 | 70.9 | 66.3 | 67.2 |
| FLAN-T5-XXL 11B | 55.1 | 45.3 | 48.6 | 41.4 | 47.6 |
| FLAN-UL2 20B | 55.7 (+1.1%) | 45.9 (+1.3%) | 52.2 (+7.4%) | 42.7 (+3.1%) | 49.1 (+3.2%) |
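The percentages in the FLAN-UL2 row can be reproduced as relative gains over FLAN-T5-XXL 11B, that is, (new - base) / base × 100. The scores below are copied from the table; only the arithmetic is added.

```python
# Scores copied from the results table.
FLAN_T5_XXL = {"MMLU": 55.1, "BBH": 45.3, "MMLU-CoT": 48.6, "BBH-CoT": 41.4, "Avg": 47.6}
FLAN_UL2 = {"MMLU": 55.7, "BBH": 45.9, "MMLU-CoT": 52.2, "BBH-CoT": 42.7, "Avg": 49.1}


def relative_gain_pct(new: float, base: float) -> float:
    """Relative improvement of `new` over `base`, in percent."""
    return (new - base) / base * 100


gains = {k: round(relative_gain_pct(FLAN_UL2[k], FLAN_T5_XXL[k]), 1) for k in FLAN_T5_XXL}
print(gains)
# {'MMLU': 1.1, 'BBH': 1.3, 'MMLU-CoT': 7.4, 'BBH-CoT': 3.1, 'Avg': 3.2}
```

Note that these are relative gains: the absolute gain on MMLU-CoT, for example, is 3.6 points (52.2 vs 48.6).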

Other InstructEval Models

Falcon 7B Instruct

Falcon-7B-Instruct is a 7B parameter causal decoder-only model built by TII based on Falcon-7B and finetuned on a mixture of chat/instruct datasets.


Alpaca LoRA

Alpaca LoRA is a 65B parameter LLM that has undergone quantization to 4 bits, resulting in a smaller and more efficient model compared to other LLMs.


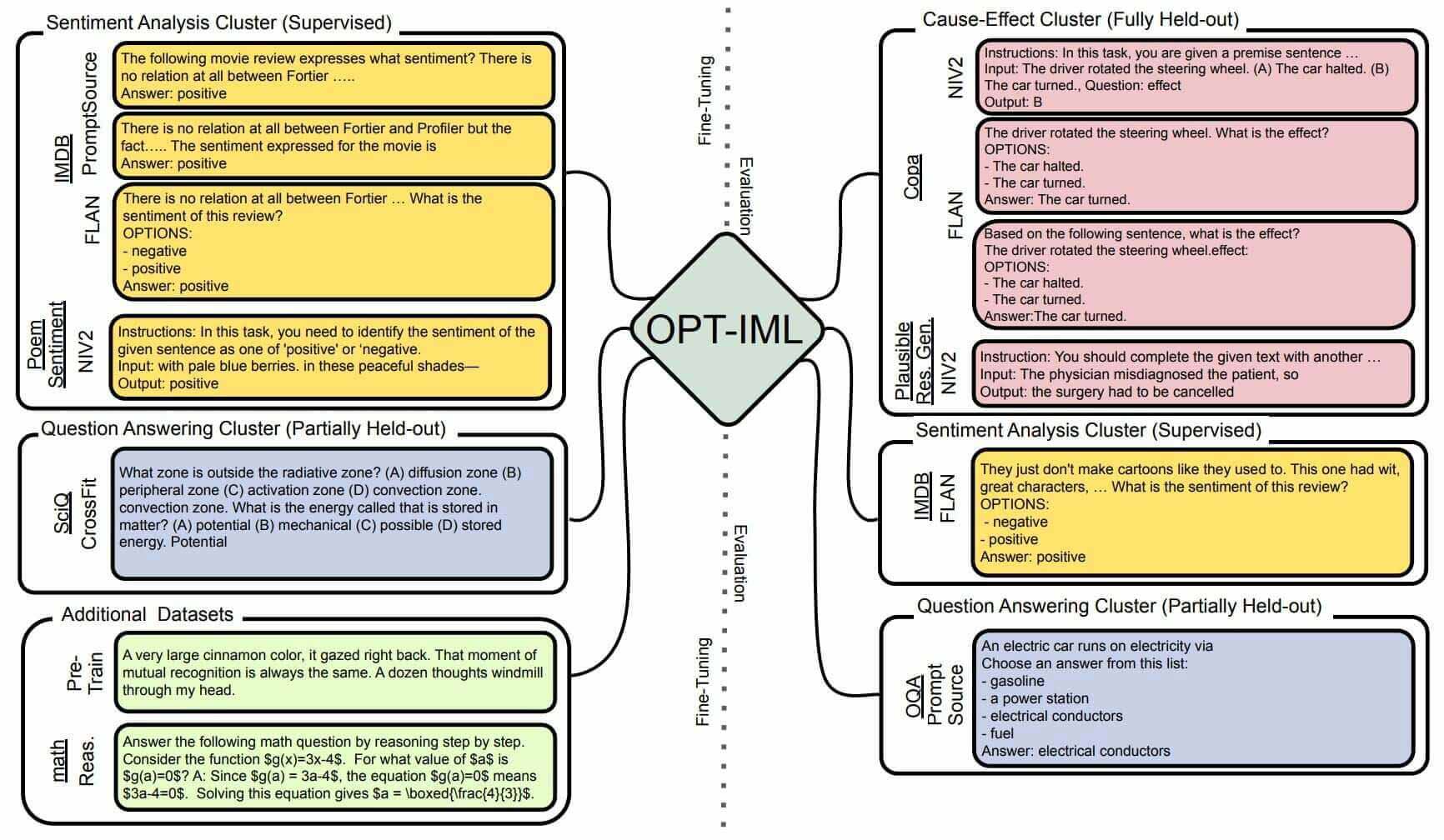

OPT-IML

OPT-IML is a collection of instruction-tuned models based on the OPT transformer trained on around 2000 NLP tasks from the OPT-IML Bench.
