GenZ 13B v2



About Model

GenZ 13B v2 is an instruction fine-tuned model built on the LLaMA 2 pre-trained model. It handles complex instructions and accepts inputs of up to 4K tokens. Because it follows detailed instructions and queries closely, it suits tasks that call for detailed and thorough responses.

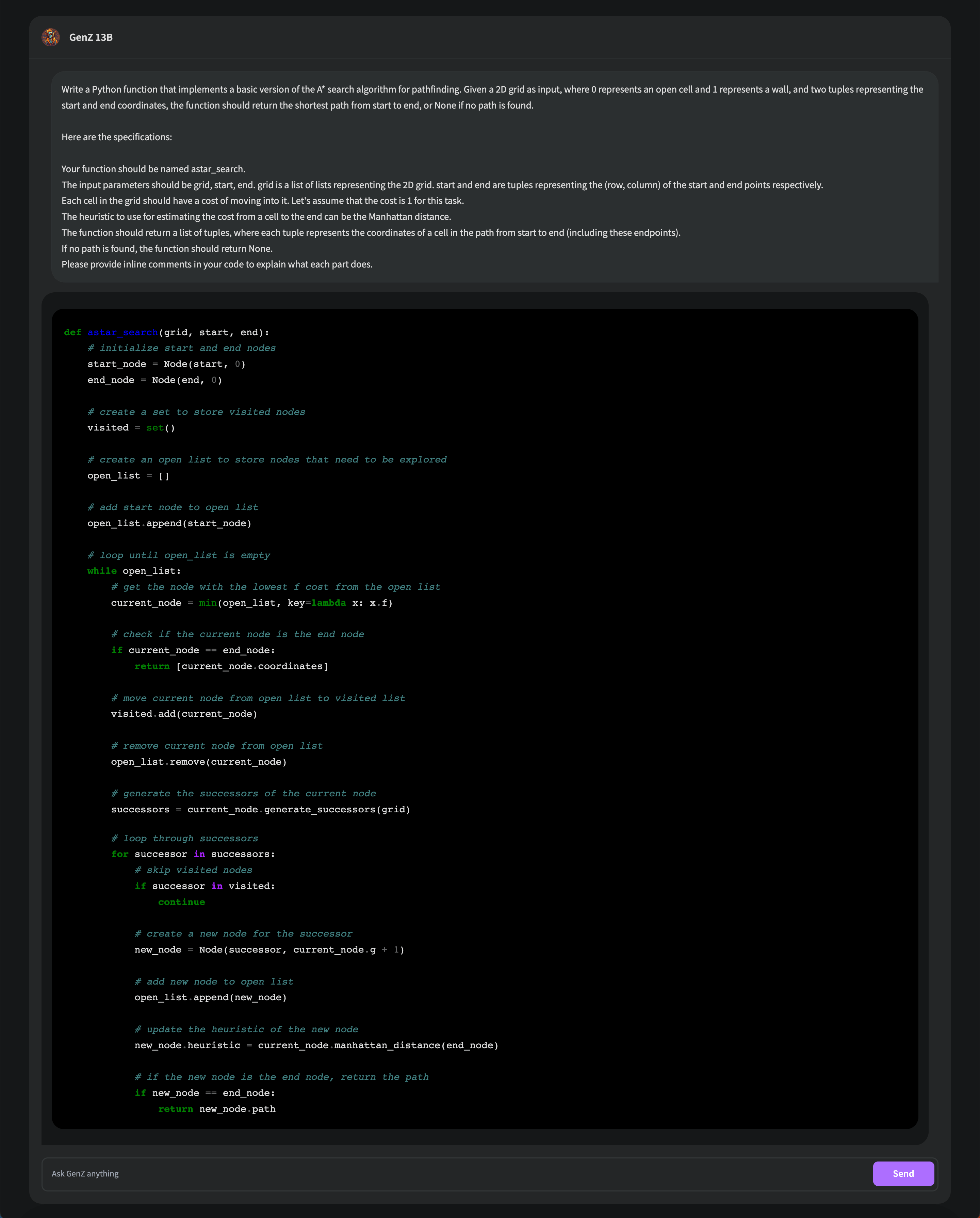

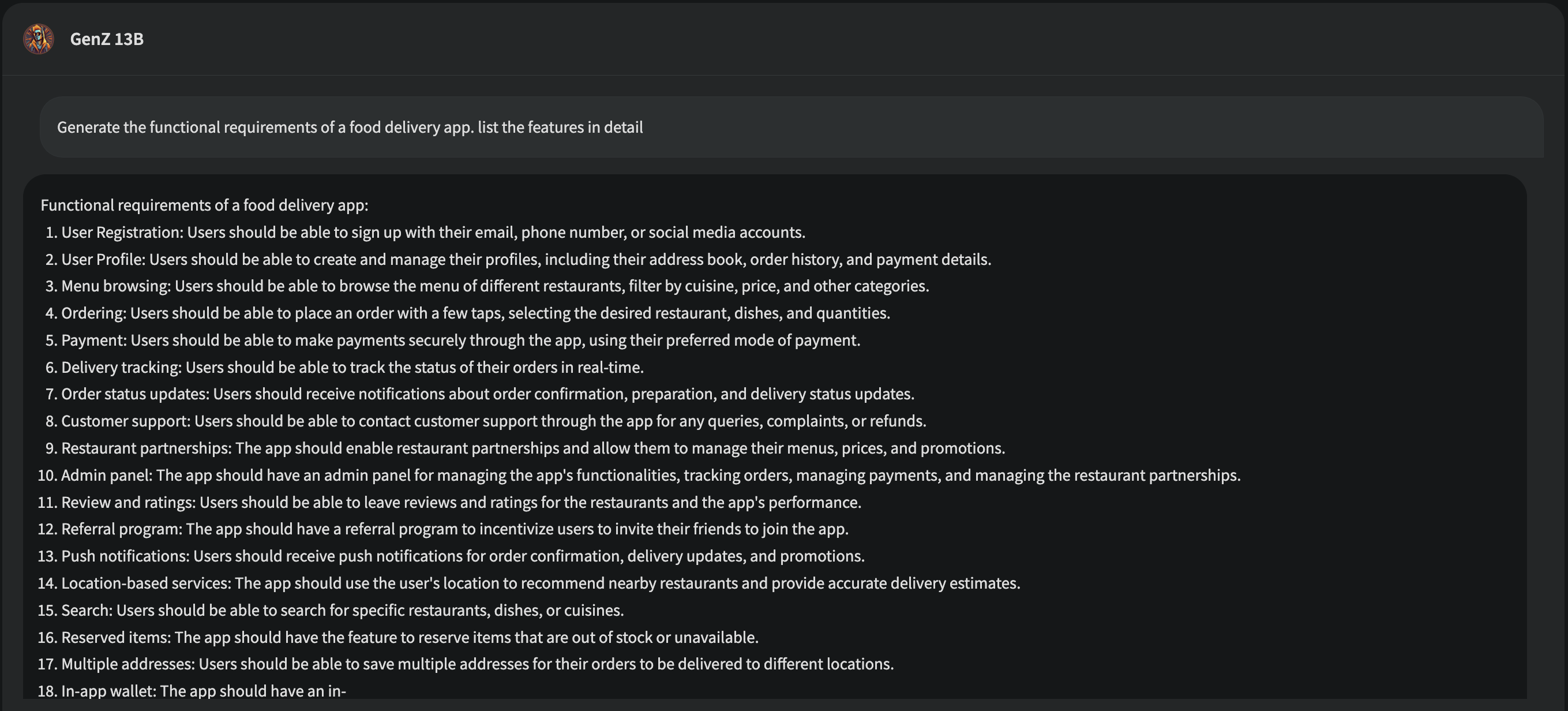

Results

GenZ-13B v2 is an instruction fine-tuned LLM, and it can manage complex instructions, providing a substantial 4K input length capacity. A notable feature of the model is its capability to understand detailed instructions and queries, making it suitable for tasks that require detailed and thorough responses.

How to use

Install dependencies

pip install -r requirements.txt

Inference

import torch

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("budecosystem/genz-13b-v2", trust_remote_code=True)

model = AutoModelForCausalLM.from_pretrained("budecosystem/genz-13b-v2", torch_dtype=torch.bfloat16)

inputs = tokenizer("The world is", return_tensors="pt")

sample = model.generate(**inputs, max_length=128)

print(tokenizer.decode(sample[0]))
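In `generate`, `max_length` counts the prompt tokens plus the generated tokens, while `max_new_tokens` counts only the generated ones. The sketch below shows one way to keep a request inside the model's input capacity. It assumes that "4K" means 4096 tokens; `CONTEXT_WINDOW` and `new_token_budget` are hypothetical names introduced for illustration and are not part of the model's repository.

```python
CONTEXT_WINDOW = 4096  # assumption: "4K input length" means 4096 tokens


def new_token_budget(prompt_tokens: int, requested: int,
                     context_window: int = CONTEXT_WINDOW) -> int:
    """Return how many tokens may be generated without exceeding the window.

    prompt_tokens: number of tokens in the encoded prompt
    requested:     number of new tokens the caller would like to generate
    """
    if prompt_tokens >= context_window:
        raise ValueError("prompt already fills the context window")
    # Cap the request at the space left after the prompt.
    return min(requested, context_window - prompt_tokens)
```

With the snippet above, the result could be passed as `max_new_tokens=new_token_budget(inputs["input_ids"].shape[1], 128)` in place of `max_length=128`.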

Use the following prompt template

A chat between a curious user and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the user's questions. USER: Hi, how are you? ASSISTANT:
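A minimal sketch of applying this template in code, reusing the model ID and the `transformers` calls from the inference snippet. The helper names `build_prompt` and `generate_reply` are hypothetical, and the single-turn layout is taken directly from the template above; how multi-turn conversations should be formatted is not stated in the source.

```python
SYSTEM_PROMPT = (
    "A chat between a curious user and an artificial intelligence assistant. "
    "The assistant gives helpful, detailed, and polite answers to the user's questions."
)


def build_prompt(user_message: str) -> str:
    """Wrap a single user message in the documented prompt template."""
    return f"{SYSTEM_PROMPT} USER: {user_message.strip()} ASSISTANT:"


def generate_reply(user_message: str, max_new_tokens: int = 128) -> str:
    """Generate a reply for one user message (downloads the model on first use)."""
    # Imported lazily so build_prompt can be used without torch installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    model_id = "budecosystem/genz-13b-v2"
    tokenizer = AutoTokenizer.from_pretrained(model_id, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype=torch.bfloat16)

    inputs = tokenizer(build_prompt(user_message), return_tensors="pt")
    output = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Drop the prompt tokens so only the assistant's continuation is returned.
    prompt_len = inputs["input_ids"].shape[1]
    return tokenizer.decode(output[0][prompt_len:], skip_special_tokens=True)
```

Calling `build_prompt("Hi, how are you?")` reproduces the template text shown above; `generate_reply` needs the model weights and enough memory to hold a 13B model.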

Fine tuning

python finetune.py --model_name meta-llama/Llama-2-13b --data_path dataset.json --output_dir output --trust_remote_code --prompt_column instruction --response_column output
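The command above reads `dataset.json` and takes prompts from the `instruction` column and responses from the `output` column. The sketch below writes such a file. The exact layout `finetune.py` expects is not stated in the source, so the JSON list of objects used here is an assumption, and `write_dataset` is a hypothetical helper.

```python
import json


def write_dataset(pairs, path="dataset.json"):
    """Write (instruction, output) pairs as a JSON list of records.

    Assumption: finetune.py accepts a JSON array of objects whose keys
    match --prompt_column and --response_column.
    """
    records = [{"instruction": prompt, "output": response}
               for prompt, response in pairs]
    with open(path, "w", encoding="utf-8") as f:
        json.dump(records, f, ensure_ascii=False, indent=2)
    return records
```

If the script turns out to expect JSON Lines or another layout, only the `json.dump` call would need to change.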

Generate

python generate.py --base_model 'budecosystem/genz-13b-v2'

Benchmark

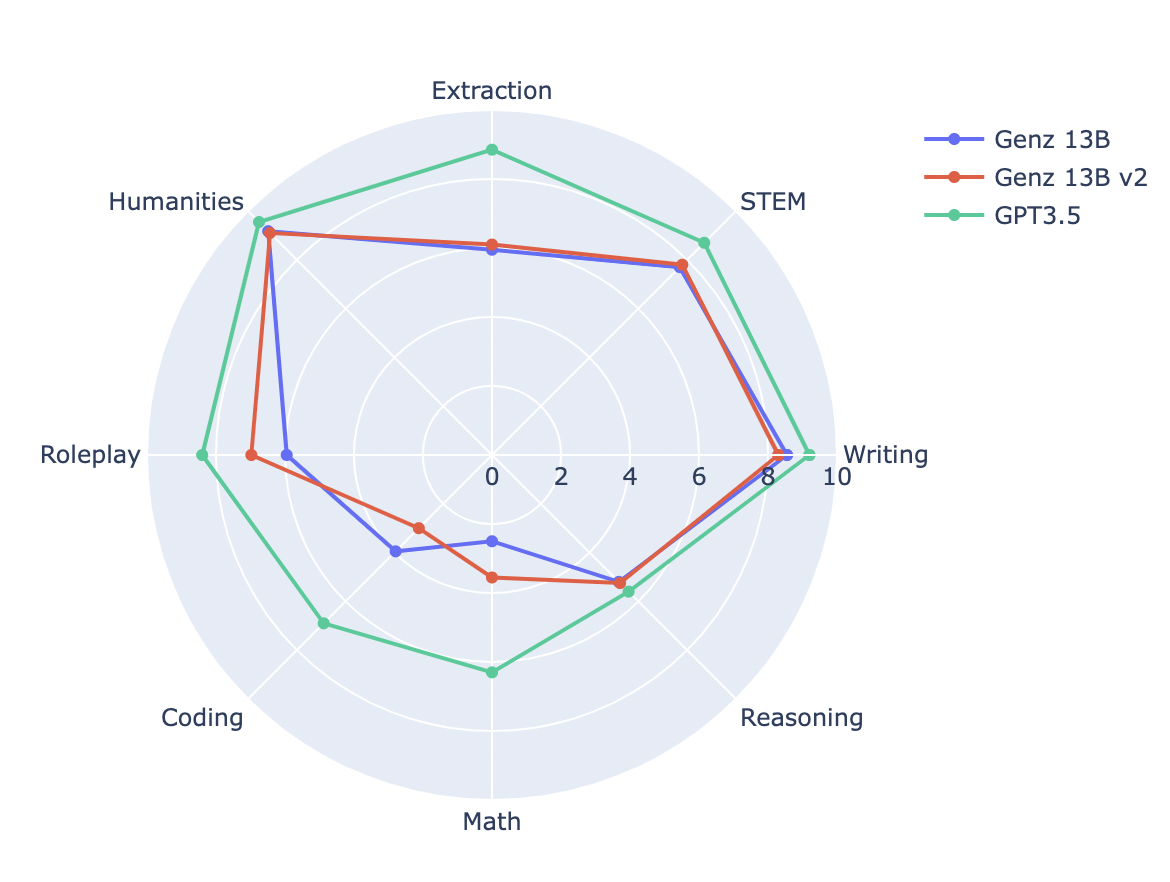

GenZ 13B v2 was evaluated on several benchmarks. It scored 6.79 on MT Bench and 87.2 on Vicuna Bench, 53.68 on MMLU, 21.95 on HumanEval, 77.48 on HellaSwag, and 38.1 on BBH.

| Model Name | MT Bench | Vicuna Bench | MMLU | HumanEval | HellaSwag | BBH |
|---|---|---|---|---|---|---|
| GenZ 13B v2 | 6.79 | 87.2 | 53.68 | 21.95 | 77.48 | 38.1 |

Performance

GenZ 13B v2 has 13 billion parameters. Although it is smaller than GPT-3.5, it is competitive: the model reaches 87% of GPT-3.5's performance, a notable result given the latter's size and compute requirements.

Other LLMs

GenZ-13B V1

GenZ-13B V1 is an instruction fine-tuned model that handles complex instructions and offers a 4K input length.

GenZ-13B V2

GenZ-13B V2 is a newly built instruction fine-tuned model that can manage complex instructions and is fine-tuned from the LLaMA 2 pre-trained model.

GenZ-70B V3

GenZ-70B V3 is an instruction fine-tuned model with a commercial license, optimized for advanced reasoning, immersive roleplaying, and writing tasks.