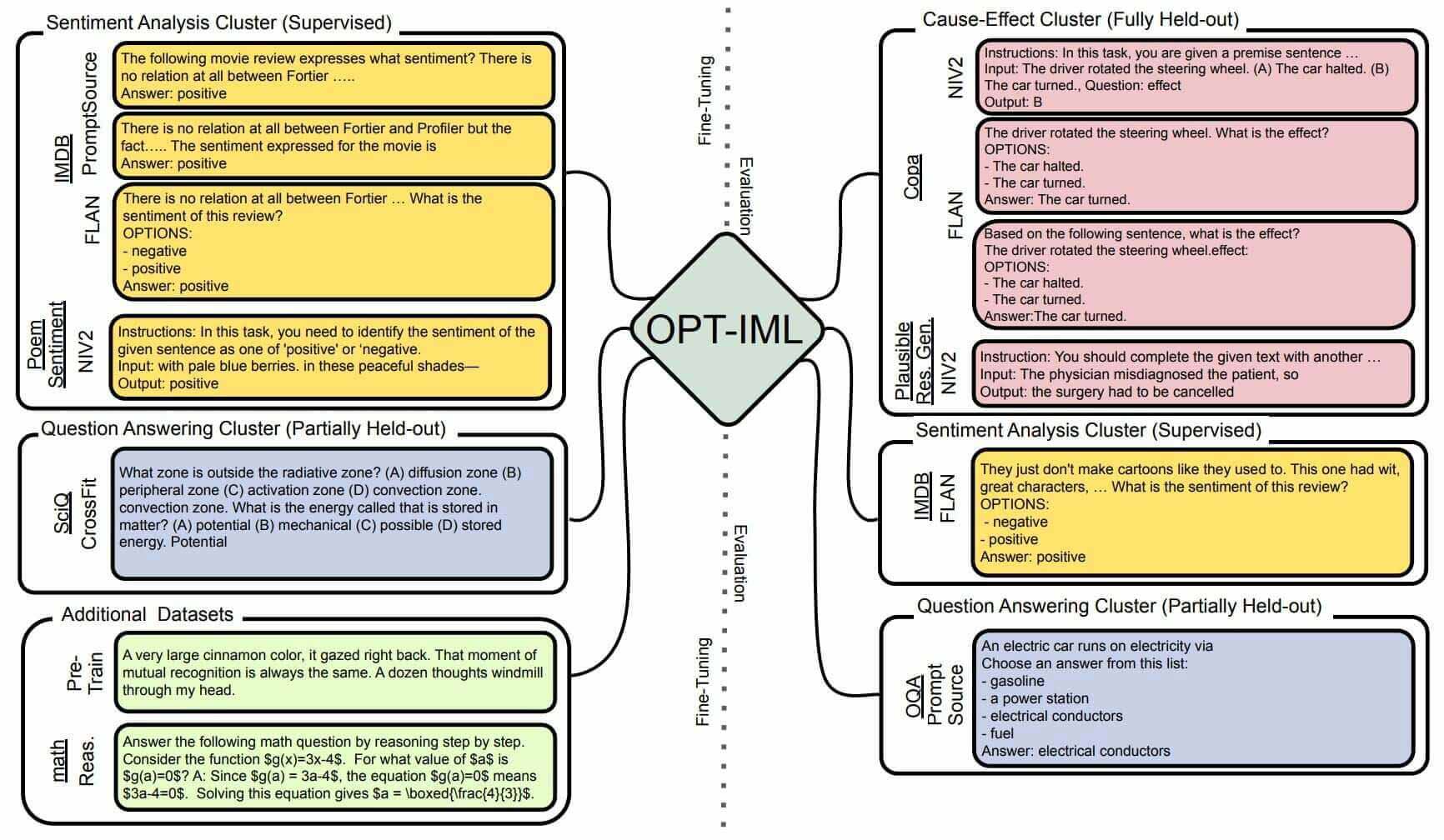

An Overview of OPT-IML

OPT-IML, which stands for OPT + Instruction Meta-Learning, is a family of instruction-tuned OPT language models developed by researchers at Meta AI (formerly Facebook AI). The models are trained and evaluated on OPT-IML Bench, a large benchmark for Instruction Meta-Learning (IML) that consolidates 2000 NLP tasks from 8 existing benchmarks into distinct task categories. The benchmark evaluates model generalization along three dimensions: tasks from entirely held-out categories, held-out tasks from seen categories, and held-out instances from seen tasks. OPT-IML Bench addresses a limitation of prior IML research, which relied mostly on small benchmarks of a few dozen tasks. By scaling both the model size and the benchmark size, OPT-IML lets researchers study how instruction-tuning decisions affect downstream task performance.

The OPT-IML model is reported to achieve 93.2% accuracy on noisy held-out tasks from seen categories.
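The three generalization settings described above can be made concrete with a small sketch. This is not the procedure used to build the actual benchmark; it only illustrates how a pool of examples might be partitioned. The function name `make_generalization_splits`, the dictionary keys, and the `instance_frac` parameter are all hypothetical and chosen for illustration.

```python
import random


def make_generalization_splits(instances, held_out_categories, held_out_tasks,
                               instance_frac=0.1, seed=0):
    """Partition examples into a training set and three evaluation sets.

    `instances` is a list of dicts with (assumed) keys "category" and "task".
    Illustrative sketch only, not the benchmark's real split logic.
    """
    rng = random.Random(seed)
    splits = {
        "train": [],
        "held_out_category": [],   # tasks from entirely held-out categories
        "held_out_task": [],       # unseen tasks from seen categories
        "held_out_instance": [],   # unseen examples from seen tasks
    }
    for ex in instances:
        if ex["category"] in held_out_categories:
            splits["held_out_category"].append(ex)
        elif ex["task"] in held_out_tasks:
            splits["held_out_task"].append(ex)
        elif rng.random() < instance_frac:
            splits["held_out_instance"].append(ex)
        else:
            splits["train"].append(ex)
    return splits
```

The order of the checks matters: an example is assigned to the strictest setting that applies, so the training set never contains a held-out category or a held-out task.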

Robustness

OPT-IML is trained on a large and diverse dataset of text and code, which makes it more robust: it tolerates noisy or erroneous data and adapts better to unexpected inputs encountered in real-world use.

OPT-IML is much better than GPT-3 at information retrieval tasks.

Transferability

OPT-IML transfers well to new tasks, requiring little or no additional training. Because the model is exposed to many different tasks during instruction tuning, it generalizes to problem domains it has not seen before.

The model can be trained on datasets 100 times larger than those used for training GPT-3.

Scalability

OPT-IML is highly scalable: the same instruction-tuning approach can be applied to larger models and larger datasets. This allows the model to address more complex problem domains and to benefit from more training data.