MDT-XL2

MDT proposes a mask latent modeling scheme for transformer-based diffusion probabilistic models (DPMs) that improves how they learn contextual relations among the semantic parts of an image. It runs the diffusion process in the latent space and uses an asymmetric masking diffusion transformer (AMDT) to predict masked tokens.

An Overview of MDT-XL2

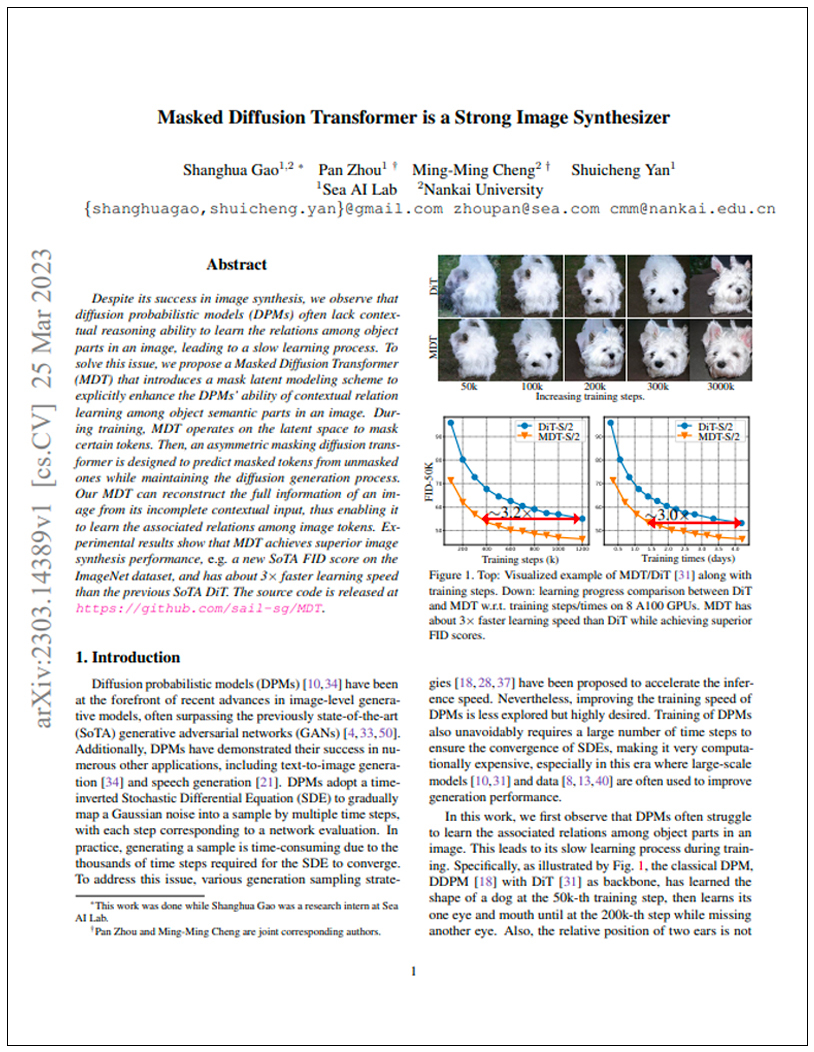

Masked Diffusion Transformer has 3X faster learning speed

3X faster

MDT learns about three times faster than DiT, the previous state-of-the-art model.

Mask latent modeling scheme to enhance the DPMs’ ability

Enhance DPM

The authors use mask latent modeling to improve how DPMs learn contextual relations among the semantic parts of objects in an image.

The ImageNet dataset is used for training

1.28 Million

The training process utilized the ImageNet dataset, which contains 1.28 million images.

About Model

MDT (Masked Diffusion Transformer) applies a mask latent modeling scheme to transformer-based DPMs so that they learn contextual relations among the semantic parts of an image. It runs the diffusion process in the latent space and uses an asymmetric masking diffusion transformer (AMDT) to predict masked tokens. Because MDT learns to reconstruct the full image from incomplete input, it learns the associations among those semantic parts. It sets a new state of the art (SoTA) for class-conditional image synthesis on the ImageNet dataset, and its training progresses about 3x faster than that of the previous SoTA DPMs.

Key Highlights

Highlights of the MDT-XL2 model are:

- MDT introduces a mask latent modeling scheme that explicitly strengthens the ability of DPMs to learn contextual relations among the semantic parts of objects in an image.

- During training, MDT masks a subset of the tokens in the latent space.

- An asymmetric masking diffusion transformer predicts the masked tokens from the unmasked ones while preserving the diffusion generation process.

- MDT can reconstruct the full information of an image from incomplete contextual input, which lets it learn the relations among image tokens.

- Experimental results show that MDT achieves superior image synthesis performance, with a new state-of-the-art (SoTA) FID score on the ImageNet dataset.

- MDT learns about 3x faster than DiT, the previous SoTA model.
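
The masking step described in the highlights above can be sketched as follows. This is a minimal illustration with NumPy, not the authors' implementation: the function name `split_masked_tokens`, the token and feature sizes, and the mask ratio of 0.3 are all assumptions chosen for the example.

```python
import numpy as np

def split_masked_tokens(tokens, mask_ratio, rng):
    """Randomly split a (num_tokens, dim) array into visible and masked parts."""
    num_tokens = tokens.shape[0]
    num_masked = int(round(num_tokens * mask_ratio))
    order = rng.permutation(num_tokens)
    masked_idx = np.sort(order[:num_masked])     # positions hidden from the model
    visible_idx = np.sort(order[num_masked:])    # positions the model gets to see
    return tokens[visible_idx], visible_idx, masked_idx

rng = np.random.default_rng(0)
latent_tokens = rng.normal(size=(256, 8))        # illustrative: 256 tokens, 8 features
visible, visible_idx, masked_idx = split_masked_tokens(latent_tokens, 0.3, rng)
print(visible.shape, masked_idx.shape)
```

Only the visible tokens would be passed on to the model; the masked positions are what it has to predict.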

Training Details

Training data

The authors used the standard ImageNet dataset containing 1.28 million images from 1000 classes.

Training dataset size

The ImageNet dataset used for training consists of 1.28 million images.

Training Procedure

MDT is trained in the same way as DiT: Adam optimizer, learning rate 1e-4, batch size 32, and 150k steps, with the learning rate divided by 10 at 100k and 125k steps.
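
The learning-rate schedule quoted above is a step decay, which can be sketched in plain Python. The function name `learning_rate` is hypothetical, and treating the drop as taking effect exactly at the milestone step (`step >= milestone`) is an assumption, since the text does not say.

```python
def learning_rate(step, base_lr=1e-4, milestones=(100_000, 125_000), factor=0.1):
    """Step decay: scale base_lr by `factor` once for every milestone already reached."""
    drops = sum(step >= m for m in milestones)
    return base_lr * factor ** drops

for step in (0, 99_999, 100_000, 125_000, 149_999):
    print(step, learning_rate(step))
```

In PyTorch, the same shape of schedule is available as `torch.optim.lr_scheduler.MultiStepLR` with `milestones=[100_000, 125_000]` and `gamma=0.1`.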

Training time and resources

The authors trained the MDT model on 8 NVIDIA Tesla V100 GPUs for 5 days.
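
As a rough back-of-the-envelope check, the figures quoted in this section can be turned into a compute budget. The sketch below takes the numbers on this page at face value (8 GPUs, 5 days, 150k steps, batch size 32, 1.28 million images) and assumes the GPUs ran continuously.

```python
num_gpus = 8
days = 5
gpu_hours = num_gpus * days * 24           # total GPU-hours, assuming continuous use

steps = 150_000
batch_size = 32
dataset_size = 1_280_000
samples_seen = steps * batch_size          # training samples processed
epochs = samples_seen / dataset_size       # passes over the dataset

print(gpu_hours, samples_seen, epochs)     # 960 4800000 3.75
```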

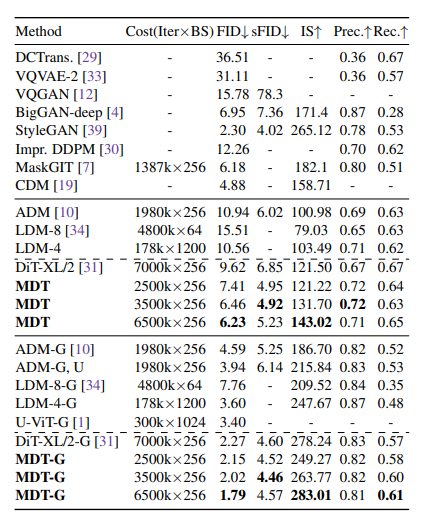

Key Results

The table below lists the FID scores reported for MDT on class-conditional image generation with the ImageNet 256×256 dataset (lower is better). MDT-G denotes results with classifier-free guidance.

| Task | Dataset | FID |
| --- | --- | --- |
| Image Generation (MDT 2500k×256) | ImageNet 256×256 | 7.41 |
| Image Generation (MDT 3500k×256) | ImageNet 256×256 | 6.46 |
| Image Generation (MDT 6500k×256) | ImageNet 256×256 | 6.23 |
| Image Generation (MDT-G 2500k×256) | ImageNet 256×256 | 2.15 |
| Image Generation (MDT-G 3500k×256) | ImageNet 256×256 | 2.02 |
| Image Generation (MDT-G 6500k×256) | ImageNet 256×256 | 1.79 |

Business Applications

This table provides a quick overview of how MDT-XL2 can streamline various business operations relating to image generation.

| Tasks | Business Use Cases | Examples |
| --- | --- | --- |
| Image Synthesis | Design, Advertising, E-commerce | Generating realistic images for product catalogs, digital marketing campaigns, and website design. Examples include generating product images, creating visual content for social media campaigns, and creating realistic architectural visualizations. |
| Bedroom Synthesis | Interior Design, Real Estate | Creating photo-realistic images of bedrooms to help customers visualize spaces in a home, for interior design and real estate industries. Examples include generating realistic images for property listings, interior design mockups, and virtual tours. |
| Face Synthesis | Entertainment, Gaming, Social Media | Creating high-quality synthetic faces for video games, movies, TV shows, and social media platforms. Examples include creating realistic avatars for video games, generating deepfakes for social media, and enhancing visual effects in movies and TV shows. |

Model Features

Key features of the Masked Diffusion Transformer (MDT) include:

Masked Latent Modeling Scheme

MDT introduces a mask latent modeling scheme that is specifically designed to enhance the contextual reasoning ability of the Diffusion Probabilistic Models (DPMs) for learning the relations among object parts in an image.

Asymmetric Masking Diffusion Transformer (AMDT)

MDT uses an asymmetric masking diffusion transformer (AMDT) to predict masked tokens from unmasked ones while maintaining the diffusion generation process. AMDT contains an encoder, a side-interpolator, and a decoder.
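
One reading of this encoder, side-interpolator, decoder arrangement is sketched below with NumPy. The three functions are trivial stand-ins (identity and mean-fill), not the real transformer blocks, and the data flow shown (encoder sees only unmasked tokens, the side-interpolator fills in the masked slots, the decoder sees the full sequence) is an assumption about how the pieces fit together during training.

```python
import numpy as np

def encoder(visible_tokens):
    # Stand-in for the transformer encoder: identity
    return visible_tokens

def side_interpolator(encoded, visible_idx, num_tokens):
    # Stand-in: fill every masked slot with the mean of the visible tokens
    full = np.tile(encoded.mean(axis=0), (num_tokens, 1))
    full[visible_idx] = encoded
    return full

def decoder(full_tokens):
    # Stand-in for the transformer decoder: identity
    return full_tokens

num_tokens, dim = 16, 4
rng = np.random.default_rng(0)
tokens = rng.normal(size=(num_tokens, dim))
visible_idx = np.sort(rng.choice(num_tokens, size=12, replace=False))

encoded = encoder(tokens[visible_idx])                           # unmasked tokens only
restored = side_interpolator(encoded, visible_idx, num_tokens)   # full-length sequence
output = decoder(restored)
print(encoded.shape, output.shape)
```

The point of the sketch is the shapes: the encoder works on a shorter sequence than the decoder, which is where the asymmetry comes from.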

Latent Space Diffusion Process

MDT runs the diffusion process in the latent space to reduce computational cost. During training it masks some of the image tokens and then predicts the masked tokens from the unmasked ones as part of the diffusion generation process.
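
To give a feel for why working in the latent space is cheaper, the arithmetic below counts values and tokens for a 256×256 image. The 8x downsampling autoencoder, 4 latent channels, and patch size of 2 are assumptions borrowed from common latent-diffusion setups; this page does not state them.

```python
def latent_token_count(image_size, vae_downsample, patch_size):
    """Return (latent side length, number of tokens) for a square image."""
    latent_size = image_size // vae_downsample
    tokens_per_side = latent_size // patch_size
    return latent_size, tokens_per_side ** 2

# Assumed settings, not taken from this page
image_size, vae_downsample, latent_channels, patch_size = 256, 8, 4, 2

latent_size, num_tokens = latent_token_count(image_size, vae_downsample, patch_size)
pixel_values = image_size * image_size * 3
latent_values = latent_size * latent_size * latent_channels
print(latent_size, num_tokens, pixel_values // latent_values)   # 32 256 48
```

Under these assumptions the transformer handles 256 tokens per image, and the latent holds 48 times fewer values than the RGB image.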

Global and Local Token Position Information

The encoder and decoder of AMDT modify the transformer block in DPMs by inserting global and local token position information to help predict masked tokens.

Faster Learning Speed

MDT has about 3× faster learning speed than the previous state-of-the-art DiT model.

Image Synthesis Performance

Experimental results show that MDT achieves superior image synthesis performance, such as a new SoTA FID score on the ImageNet dataset, compared to other state-of-the-art models.
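
For readers unfamiliar with FID (Fréchet Inception Distance), the sketch below computes the Fréchet distance between two Gaussians fitted to feature vectors. In practice the features come from an Inception network applied to real and generated images; here random vectors stand in for them, and the helper names are hypothetical.

```python
import numpy as np

def gaussian_stats(features):
    """Mean and covariance of a (num_samples, dim) feature array."""
    return features.mean(axis=0), np.cov(features, rowvar=False)

def frechet_distance(mu_r, sigma_r, mu_g, sigma_g):
    """||mu_r - mu_g||^2 + Tr(sigma_r + sigma_g - 2 (sigma_r sigma_g)^(1/2))."""
    diff = mu_r - mu_g
    # The trace of the matrix square root equals the sum of the square roots
    # of the eigenvalues of sigma_r @ sigma_g (real, non-negative for PSD inputs).
    eigvals = np.linalg.eigvals(sigma_r @ sigma_g)
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    return float(diff @ diff + np.trace(sigma_r) + np.trace(sigma_g) - 2.0 * trace_sqrt)

rng = np.random.default_rng(0)
real = rng.normal(size=(500, 3))     # stand-in for features of real images
fake = real + 2.0                    # same spread, mean shifted by 2 in each dimension

same = frechet_distance(*gaussian_stats(real), *gaussian_stats(real))
shifted = frechet_distance(*gaussian_stats(real), *gaussian_stats(fake))
print(round(same, 6), round(shifted, 6))
```

Identical feature sets give a distance of about 0, and a pure mean shift contributes the squared length of the shift (here 3 × 2² = 12), which is why a lower FID indicates generated images that are statistically closer to real ones.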

Model Tasks

Image Synthesis

MDT can generate realistic synthetic images by learning the complex relationships between objects and their parts in the ImageNet dataset.

Image Synthesis (LSUN Bedrooms)

MDT can generate high-quality synthetic images of bedrooms by learning the spatial relationships between objects and textures in the LSUN Bedrooms dataset.

Image Synthesis (FFHQ)

MDT can generate high-resolution synthetic faces by learning the complex relationships between facial features such as eyes, nose, and mouth in the FFHQ dataset.

Fine-tuning

The research paper does not describe fine-tuning for the Masked Diffusion Transformer (MDT) model. Fine-tuning may still be needed in some cases, such as adapting the model to a specific dataset or task.

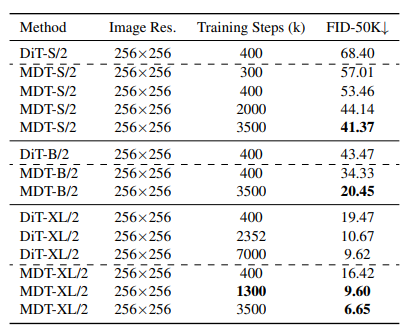

Benchmark Results

Benchmarking is an important way to evaluate the performance of any generative model, including MDT-XL2. The key results are:

MDT-XL2 models Benchmark Results

Table 1. Comparison with existing methods on class-conditional image generation with the ImageNet 256×256 dataset. -G denotes the results with classifier-free guidance. Results of MDT-XL/2 model are given for comparison. Compared results are obtained from their papers.
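
The classifier-free guidance mentioned in the caption above combines a class-conditional and an unconditional noise prediction at sampling time. The sketch below shows the standard combination rule with NumPy; the function name `guided_noise` and the guidance scale of 1.5 are illustrative choices, not values taken from this page.

```python
import numpy as np

def guided_noise(eps_cond, eps_uncond, guidance_scale):
    """Push the prediction away from the unconditional one, toward the conditional one."""
    return eps_uncond + guidance_scale * (eps_cond - eps_uncond)

eps_uncond = np.array([0.0, 0.0])    # prediction without a class label
eps_cond = np.array([1.0, 2.0])      # prediction with the class label

print(guided_noise(eps_cond, eps_uncond, 1.5))   # [1.5 3. ]
```

A scale of 1 returns the conditional prediction unchanged, and larger scales trade sample diversity for closer adherence to the class.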

MDT-XL2 models Benchmark Results

Table 2. Comparison between DiT and MDT under different model sizes and training steps on ImageNet 256×256. DiT results are obtained from DiT reported results.

Sample Codes

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

# Use the GPU when one is available
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print(f"Device: {device}")


class MaskedDiffusionTransformer(nn.Module):
    """Placeholder stand-in so the loop runs; NOT the official MDT architecture."""

    def __init__(self, channels=4):
        super().__init__()
        self.net = nn.Conv2d(channels, channels, kernel_size=3, padding=1)

    def forward(self, x):
        return self.net(x)


# Create the model and move it to the selected device
model = MaskedDiffusionTransformer().to(device)

# Diffusion models regress the added noise, so MSE replaces cross-entropy here
loss_fn = nn.MSELoss()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-4)

# Random tensors stand in for latent encodings of training images (assumption)
train_dataset = TensorDataset(torch.randn(64, 4, 32, 32))
train_data = DataLoader(train_dataset, batch_size=32, shuffle=True)
num_epochs = 1

# Train model
for epoch in range(num_epochs):
    for i, (latents,) in enumerate(train_data):
        latents = latents.to(device)
        noise = torch.randn_like(latents)

        # Forward pass: predict the noise from the noised latents
        # (simplified: a real noise schedule scales both terms per timestep)
        outputs = model(latents + noise)
        loss = loss_fn(outputs, noise)

        # Backward pass and optimization
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        # Print progress
        if i % 100 == 0:
            print(f"Epoch {epoch}, Batch {i}, Loss {loss.item():.4f}")
```

Model Limitations

- The authors acknowledge that the proposed MDT model may not work well for images with highly complex spatial structures or tasks requiring detailed high-frequency patterns.

- They also note that the MDT model is unsuitable for applications requiring online inference due to its slow generation speed.
