Code LLMs Explained

CodeGeeX

CodeGeeX is a large-scale multilingual code generation model with 13 billion parameters, pre-trained on a large code corpus covering more than 20 programming languages. The model is published by the Knowledge Engineering Group (KEG) & Data Mining at Tsinghua University. As of June 22, 2022, CodeGeeX had been trained on more than 850 billion tokens on a cluster of 1,536 Ascend 910 AI Processors.

An Overview of CodeGeeX

CodeGeeX is a large-scale multilingual code generation model with 13 billion parameters, trained on an extensive code corpus covering more than 20 programming languages.

Extensive training on a massive dataset

850B tokens

CodeGeeX, the multilingual code generation model, has undergone extensive training on a massive dataset consisting of over 850 billion tokens.

Variety of programming languages

10+ languages

CodeGeeX is a versatile code generation model that supports 10+ popular programming languages, such as Python, Java, C++, C, JavaScript, and Go.

Available in popular IDEs as an extension or plugin

Extensions

CodeGeeX is available as an extension/plugin for VS Code and JetBrains IDEs, where it acts as a customizable programming assistant that improves the coding experience.

About Model

CodeGeeX is a code generation model with 13 billion parameters, designed to support multiple programming languages. It has been pre-trained on a code corpus of more than 20 popular programming languages so that it can generate code in diverse contexts. It performs well at generating executable programs in several mainstream languages, including Python, C++, Java, JavaScript, and Go. It also supports translating code snippets between languages: with one click, CodeGeeX can convert a program into another supported language with high accuracy. All code and model weights are publicly available for research purposes. CodeGeeX is also offered free of charge as a VS Code extension that supports code completion, explanation, summarization, and more.

Model Highlights

Some of the unique features of CodeGeeX are listed below.

- It is a left-to-right autoregressive decoder, which takes code and natural language as input and predicts the probability of the next token.

- It contains 40 transformer layers with a hidden size of 5,120 for self-attention blocks and 20,480 for feed-forward layers, making its size reach 13 billion parameters. It supports a maximum sequence length of 2,048.

- The model generates executable programs in several mainstream languages, including Python, C++, Java, JavaScript, and Go.

- CodeGeeX enables seamless, high-accuracy translation of code snippets between different programming languages with a single click.

- Available as a free VS Code extension, CodeGeeX enhances the coding experience with features like code completion, explanation, and summarization.

- CodeGeeX introduces the HumanEval-X benchmark to standardize the evaluation of multilingual code generation and translation. It consists of 820 coding problems (164 problems in each of 5 languages), each with tests and solutions.
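The first highlight describes left-to-right autoregressive decoding: at each step the model scores every vocabulary token given all tokens so far, one token is chosen and appended, and the loop repeats. The sketch below is a minimal illustration of that general technique, not CodeGeeX's own inference code. It uses greedy selection (always the highest-scoring token), and `next_token_logits` and `toy_model` are hypothetical stand-ins for the real model's forward pass; the 2,048-token limit mirrors the maximum sequence length listed above.

```python
from typing import Callable, List, Sequence


def greedy_decode(
    next_token_logits: Callable[[Sequence[int]], Sequence[float]],
    prompt_ids: List[int],
    max_new_tokens: int,
    eos_id: int,
    max_seq_len: int = 2048,
) -> List[int]:
    """Left-to-right decoding: each new token is chosen from scores conditioned on all previous tokens."""
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):
        if len(ids) >= max_seq_len:  # stop at the context window limit
            break
        logits = next_token_logits(ids)  # one score per vocabulary entry
        next_id = max(range(len(logits)), key=lambda t: logits[t])  # greedy choice
        ids.append(next_id)
        if next_id == eos_id:  # end-of-sequence token generated
            break
    return ids


def toy_model(ids: Sequence[int]) -> List[float]:
    """Hypothetical 5-token 'model' that always prefers the token after the last one."""
    scores = [0.0] * 5
    scores[(ids[-1] + 1) % 5] = 1.0
    return scores


print(greedy_decode(toy_model, [0], max_new_tokens=10, eos_id=4))  # [0, 1, 2, 3, 4]
```

Picking the highest logit is equivalent to picking the highest probability, because softmax preserves ordering. Beam search, used with `num_beams=4` in the sample code later on, keeps several candidate sequences per step instead of one.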

Training Details

Training data

The model's training data is divided into two main sections. The first section is derived from publicly available datasets of code, namely The Pile and CodeParrot. The second section comprises code directly scraped from public GitHub repositories. This section contains code samples from popular programming languages such as Python, Java, and C++, among others.

Training Procedure

CodeGeeX is implemented in MindSpore 1.7 and trained on a cluster of 1,536 Ascend 910 AI Processors, each with 32GB of memory. The model weights are mainly in FP16 format, except that layer-norm and softmax use FP32 for higher precision and stability. The entire model takes approximately 27GB of memory.
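The memory figure can be sanity-checked with simple arithmetic: FP16 stores each parameter in 2 bytes, so 13 billion parameters need about 26GB, close to the ~27GB quoted above (the source does not break down the remaining overhead). The same weights in FP32 (4 bytes each) would need about 52GB, more than a single 32GB processor holds. This is a minimal sketch that counts weights only (no activations or optimizer state) and assumes 1GB = 10^9 bytes.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory needed just to store the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


NUM_PARAMS = 13e9  # approximate parameter count of CodeGeeX

for name, nbytes in [("FP16", 2), ("FP32", 4)]:
    print(f"{name}: {weight_memory_gb(NUM_PARAMS, nbytes):.0f} GB")
# FP16: 26 GB
# FP32: 52 GB
```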

Training dataset size

The Pile contains a subset of public GitHub repositories with more than 100 stars, from which the authors select code files in 23 popular programming languages. For the code scraped directly from GitHub, they obtain higher-quality data by keeping only repositories with at least one star and a size smaller than 10MB.
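The repository filter described above (at least one star and a size under 10MB) can be sketched as a simple predicate. The `Repo` record, its field names, the example repositories, and the reading of 10MB as 10 × 1024 × 1024 bytes are all assumptions for illustration; the source does not describe the actual data pipeline code.

```python
from dataclasses import dataclass


@dataclass
class Repo:
    """Hypothetical metadata record for a scraped GitHub repository."""
    name: str
    stars: int
    size_bytes: int


MAX_REPO_BYTES = 10 * 1024 * 1024  # "smaller than 10MB", read here as 10 MiB (assumption)


def keep_repo(repo: Repo) -> bool:
    """Keep repositories with at least one star and a total size under 10MB."""
    return repo.stars >= 1 and repo.size_bytes < MAX_REPO_BYTES


repos = [
    Repo("tiny-utils", stars=3, size_bytes=200_000),
    Repo("no-stars", stars=0, size_bytes=50_000),
    Repo("huge-monorepo", stars=500, size_bytes=2_000_000_000),
]
print([r.name for r in repos if keep_repo(r)])  # ['tiny-utils']
```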

Training time and resources

CodeGeeX is built with MindSpore 1.7 and trained on a cluster of 1,536 Ascend 910 AI Processors, each with 32GB of memory. As of June 22, 2022, training had covered more than 850 billion tokens. The full model requires about 27GB of memory.

Key Results

The model has 40 transformer layers and a hidden size of 5,120 for self-attention blocks and 20,480 for feed-forward layers, giving it a total of 13 billion parameters.
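The 13-billion figure can be roughly checked from these dimensions. Each GPT-style layer holds four h × h attention projections and two h × 4h feed-forward projections (about 12h² weights), plus small bias and LayerNorm vectors. This sketch assumes a vocabulary of 52,224 tokens and learned position embeddings for the 2,048-token context. Neither the vocabulary size nor the embedding scheme is stated in this document, and minor terms such as a final LayerNorm are ignored.

```python
def estimate_params(num_layers: int, hidden: int, ffn_hidden: int,
                    vocab_size: int, max_seq_len: int) -> int:
    """Rough parameter count for a GPT-style decoder (ignores a final LayerNorm, etc.)."""
    attention = 4 * hidden * hidden + 4 * hidden                   # Q, K, V, output projections + biases
    feed_forward = 2 * hidden * ffn_hidden + ffn_hidden + hidden   # up/down projections + biases
    layer_norms = 2 * 2 * hidden                                   # two LayerNorms, scale and shift each
    per_layer = attention + feed_forward + layer_norms
    embeddings = vocab_size * hidden + max_seq_len * hidden        # token + learned position embeddings
    return num_layers * per_layer + embeddings


total = estimate_params(num_layers=40, hidden=5120, ffn_hidden=20480,
                        vocab_size=52224,  # assumed vocabulary size
                        max_seq_len=2048)
print(f"{total / 1e9:.2f}B parameters")  # 12.86B parameters
```

Under these assumptions the 40 layers contribute about 12.59B parameters and the embeddings about 0.28B, for roughly 12.86B in total, consistent with the quoted 13 billion.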

| Task | Metric | Dataset | Score (%) |
|---|---|---|---|
| Multilingual code generation | pass@100 (average) | HumanEval-X | 62 |
| Crosslingual code translation | pass@100 (average) | HumanEval-X | 72.5 |
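The scores above are pass@k values: the probability that at least one of k sampled programs passes all unit tests. A common way to compute it, introduced with the original HumanEval benchmark, is the unbiased estimator `1 - C(n - c, k) / C(n, k)`, where n programs are sampled per problem and c of them pass; the benchmark score is the mean over problems. The sketch below assumes this estimator; the exact evaluation script behind the numbers above is not shown in this document.

```python
from typing import List, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem: n samples generated, c of them correct."""
    if n - c < k:
        # Every size-k subset of the samples contains at least one correct program
        return 1.0
    # C(n-c, k) / C(n, k) written as a product to avoid huge binomial coefficients
    prob_all_fail = 1.0
    for i in range(n - c + 1, n + 1):
        prob_all_fail *= 1.0 - k / i
    return 1.0 - prob_all_fail


def benchmark_pass_at_k(results: List[Tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, given (n, c) for each problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


print(round(pass_at_k(n=10, c=3, k=1), 4))                         # 0.3
print(round(benchmark_pass_at_k([(10, 3), (10, 0), (10, 10)], k=1), 4))  # 0.4333
```

With k = 1 the estimator reduces to c / n, the plain fraction of correct samples; larger k rewards models that solve a problem in at least some of many attempts.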

Model Features

CodeGeeX was initially implemented in MindSpore and trained on Ascend 910 AI Processors. It has several unique features:

Multilingual Code Generation

CodeGeeX excels in generating executable programs in various mainstream languages such as Python, C++, Java, JavaScript, Go, and more.

Crosslingual Code Translation

The model translates code snippets between programming languages with a single click, delivering high-accuracy translations.

Customizable Programming Assistant

CodeGeeX is freely available as a VS Code extension, offering code completion, explanation, summarization, and more to enhance users' coding experience.

Open-Source and Cross-Platform

The model's code and weights are publicly accessible for research purposes, supporting both Ascend and NVIDIA platforms. Inference is available on Ascend 910, NVIDIA V100, or A100.

HumanEval-X for Realistic Multilingual Benchmarking

CodeGeeX introduces the HumanEval-X Benchmark to standardize the evaluation of multilingual code generation and translation. This benchmark comprises 820 human-crafted coding problems in 5 programming languages (Python, C++, Java, JavaScript, and Go), each accompanied by tests and solutions. HumanEval-X is available on the HuggingFace platform.

Model Tasks

CodeGeeX supports a range of programming tasks, the two main ones being code generation and code translation.

Code generation

Code generation is the automated creation of new code, either from scratch or from a given prompt. For example, a user may describe in natural language the task or function the code should perform, and CodeGeeX generates the corresponding code in the desired programming language. This can streamline code writing and reduce the time and effort needed to develop new software.
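In practice, the natural-language description is usually written as comments above a function signature, and the model continues from there. The CodeGeeX repository prefixes prompts with a language tag line such as `# language: Python`. The sketch below builds prompts in that style, but the exact strings in `LANGUAGE_TAGS` and the `build_generation_prompt` helper are assumptions for illustration, so check the official repository before relying on them. The returned string is what would be passed to the tokenizer as the prompt in the sample code.

```python
# Hypothetical tag table in the "<comment> language: <Name>" style; verify the
# exact strings against the official CodeGeeX repository.
LANGUAGE_TAGS = {
    "python": "# language: Python",
    "cpp": "// language: C++",
    "java": "// language: Java",
    "javascript": "// language: JavaScript",
    "go": "// language: Go",
}


def build_generation_prompt(language: str, description: str, signature: str) -> str:
    """Language tag, then the task description as comments, then the signature to complete."""
    comment = "#" if language == "python" else "//"
    lines = [LANGUAGE_TAGS[language]]
    lines += [f"{comment} {text}" for text in description.splitlines()]
    lines.append(signature)
    return "\n".join(lines) + "\n"


print(build_generation_prompt("python", "Return the sum of a and b.", "def add(a, b):"))
```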

Code translation

Code translation converts code from one programming language to another. For instance, a user may have code written in Python but need it in C++ for a particular application; CodeGeeX can translate the input code into the target language. This is especially useful for developers who work across multiple languages and platforms, because it lets them convert code without rewriting it from scratch.
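A translation prompt typically contains the source program labeled with its language, followed by a label for the target language so that the model continues in that language. The template below is a hypothetical illustration of that idea, not CodeGeeX's documented format; the helper name and the label layout are assumptions.

```python
def build_translation_prompt(src_lang: str, src_code: str, tgt_lang: str) -> str:
    """Hypothetical template: labeled source program, then the target-language label."""
    return f"code translation\n{src_lang}:\n{src_code.rstrip()}\n{tgt_lang}:\n"


python_src = "def square(x):\n    return x * x\n"
prompt = build_translation_prompt("Python", python_src, "C++")
print(prompt)
```

The model's continuation after the final `C++:` line is the candidate translation. On HumanEval-X, such a candidate can then be checked against the target language's tests.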

Fine-tuning

CodeGeeX-13B-FT is a version of the model fine-tuned on the training set of the code translation tasks in XLCoST; Go is absent from that original set. Across the 20 translation pairs among the five HumanEval-X languages, the performance of A-to-B and B-to-A translation is observed to be always negatively correlated, which might indicate that current models are still not capable of learning all languages well.

Benchmark Results

Benchmarking is essential for evaluating any language model, including CodeGeeX. The key results on HumanEval-X are:

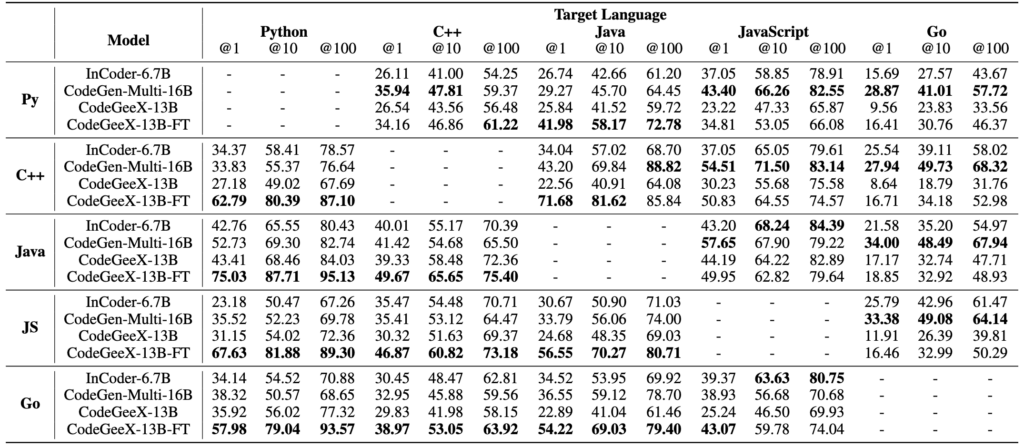

Results on the HumanEval-X code translation task. Best language-wise performances are in bold. The results indicate that models have preferences among languages: for example, CodeGeeX is good at translating other languages into Python and C++, while CodeGen-Multi-16B is better at translating into JavaScript and Go, probably due to differences in the language distribution of their training corpora.

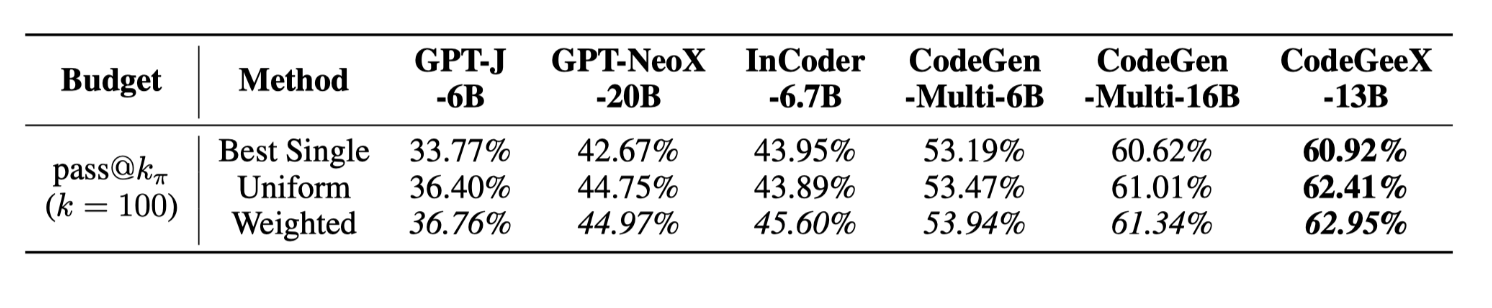

Results for fixed-budget multilingual generation on HumanEval-X. The best model-wise performance for each method is in bold, and the best method-wise performance for each model is in italics.

Sample Codes

Running the model on a CPU

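```python
import torch
import transformers

# Model ID as given in this guide; replace it with the local path or Hub ID
# of the CodeGeeX checkpoint you actually have access to.
MODEL_ID = "codegeex/codegeex-base-multilingual-cpu"

# Load the CodeGeeX model. CodeGeeX is a left-to-right (decoder-only) model,
# so it is loaded as a causal LM rather than a sequence-to-sequence model.
model = transformers.AutoModelForCausalLM.from_pretrained(MODEL_ID)
model.eval()

# Load the tokenizer
tokenizer = transformers.AutoTokenizer.from_pretrained(MODEL_ID)

# Encode the input code snippet (the prompt the model will continue)
input_code = "print('Hello, World!')"
inputs = tokenizer(input_code, return_tensors="pt")

# Generate a continuation with beam search; gradients are not needed for inference
with torch.no_grad():
    generated_code = model.generate(**inputs, max_new_tokens=128, num_beams=4, early_stopping=True)

# Decode and print the prompt plus the generated continuation
decoded_code = tokenizer.decode(generated_code[0], skip_special_tokens=True)
print(decoded_code)
```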

Running the model on a GPU

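```python
import torch
import transformers

# Model ID as given in this guide; replace it with the local path or Hub ID
# of the CodeGeeX checkpoint you actually have access to.
MODEL_ID = "codegeex/codegeex-base-multilingual-gpu"
device = "cuda"

# Load the model in FP16 to halve its memory footprint, then move it to the GPU
model = transformers.AutoModelForCausalLM.from_pretrained(MODEL_ID, torch_dtype=torch.float16).to(device)
model.eval()

# Load the tokenizer
tokenizer = transformers.AutoTokenizer.from_pretrained(MODEL_ID)

# Encode the input code snippet and move the tensors to the same device as the model
input_code = "print('Hello, World!')"
inputs = tokenizer(input_code, return_tensors="pt").to(device)

# Generate on the GPU; the output is already on the GPU, so no extra .cuda() call is needed
with torch.no_grad():
    generated_code = model.generate(**inputs, max_new_tokens=128, num_beams=4, early_stopping=True)

# Decode and print the prompt plus the generated continuation
decoded_code = tokenizer.decode(generated_code[0], skip_special_tokens=True)
print(decoded_code)
```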

Model Limitations

- Functional correctness of generated code is usually tested with Python-based benchmarks such as HumanEval, MBPP, and APPS. These benchmarks are limited to Python, and the lack of diverse multilingual benchmarks slows the advancement of multilingual program synthesis.
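Benchmarks such as HumanEval measure functional correctness by executing each generated program against unit tests. Below is a minimal sketch of that check for Python. `passes_tests` is a hypothetical helper, and it runs untrusted code without a sandbox; real evaluation harnesses add isolation and resource limits.

```python
import os
import subprocess
import sys
import tempfile


def passes_tests(program: str, test_code: str, timeout_s: float = 10.0) -> bool:
    """Run a candidate program followed by its tests in a fresh Python process.

    The candidate passes only if the process exits with status 0 before the timeout.
    WARNING: this executes the code directly; use a sandbox for untrusted output.
    """
    with tempfile.NamedTemporaryFile("w", suffix=".py", delete=False) as f:
        f.write(program + "\n\n" + test_code + "\n")
        path = f.name
    try:
        result = subprocess.run([sys.executable, path], capture_output=True, timeout=timeout_s)
        return result.returncode == 0
    except subprocess.TimeoutExpired:
        return False  # treat hangs (e.g. infinite loops) as failures
    finally:
        os.remove(path)


tests = "assert add(2, 3) == 5\nassert add(-1, 1) == 0"
print(passes_tests("def add(a, b):\n    return a + b", tests))  # True
print(passes_tests("def add(a, b):\n    return a - b", tests))  # False
```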

Other LLMs

PolyCoder

PolyCoder is an open-source language model for code, trained on code in 12 programming languages


CodeRL

CodeRL is a novel framework for program synthesis tasks that combines pretrained language models (LMs)
