Code LLMs Explained,

CodeGen

CodeGen is a family of pre-trained autoregressive language models for program synthesis that generate code from natural language descriptions. It uses a decoder-only transformer architecture trained with next-token prediction, which lets it capture complex dependencies between different parts of a program.


An Overview of CodeGen

The CodeGen model is a pre-trained neural network-based model for program synthesis that generates code from natural language descriptions.

38.83 BLEU score and 0.376 F1 score on CoNaLa dataset

Score of 38.83 on BLEU

CodeGen-Multi 16.1B has achieved state-of-the-art performance on program synthesis tasks, with a BLEU score of 38.83 on the CoNaLa dataset.

CodeGen is evaluated on MTPB with 115 problems

Evaluated on 115 problems

CodeGen is evaluated on the Multi-Turn Programming Benchmark (MTPB), which has 115 expert-written problems, each with a multi-step description in natural language.

CODEGEN-NL models outperform GPT-NEO and GPT-J

Outperforms GPT-NEO

CODEGEN-NL models (350M, 2.7B, and 6.1B) outperform or are comparable to GPT-NEO and GPT-J models.


- About Model
- Model Highlights
- Training Details
- Model Types
- Key Results
- Model Features
- Model Tasks
- Fine-tuning
- Benchmark Results
- Sample Codes
- Limitations
- Other LLMs

About Model

CodeGen is a family of pre-trained autoregressive language models for program synthesis that generate code from natural language descriptions. It uses a decoder-only transformer architecture trained with next-token prediction to capture complex dependencies between different parts of a program. The model incorporates a novel "active intermediate attention" mechanism that selectively attends to relevant parts of the input to generate accurate code expressions. The Hugging Face model repository offers a large-scale version of the CodeGen model with 16 billion parameters trained on a diverse corpus of programming languages, making it a versatile tool for various program synthesis tasks. Users can fine-tune the model on their own dataset to improve its performance. Overall, the CodeGen model has the potential to automate parts of software development by generating high-quality code from natural language specifications.

Model Highlights

CodeGen is a neural network-based model that uses a decoder-only, transformer-based architecture. The released checkpoints are pre-trained versions of a large-scale program synthesis model, trained with an autoregressive next-token prediction objective.

- CodeGen is a pre-trained neural network model designed for program synthesis, allowing it to generate code from natural language descriptions.

- The model employs a transformer-based architecture, which enables it to capture complex dependencies between different code expressions.

- CodeGen features "active intermediate attention", a novel attention mechanism that selectively focuses on relevant parts of the input to generate accurate code expressions.

- Through the Hugging Face model repository, CodeGen is available in a large-scale version trained on a diverse range of programming languages, including Python, Java, C++, and JavaScript.

- The CodeGen model can be fine-tuned to suit specific program synthesis tasks, offering flexibility in generating high-quality code from natural language specifications.

Training Details

Training data

The CODEGEN models are trained on three datasets: The Pile for natural language, BIGQUERY for multilingual code, and BIGPYTHON for Python code. Training on these datasets in sequence yields the CODEGEN-NL, CODEGEN-MULTI, and CODEGEN-MONO models, respectively.

Training Procedure

The models are trained in JAX to use the hardware efficiently. In JAX, the pjit operator is used for parallel evaluation. It enables the single-program, multiple-data (SPMD) paradigm, in which the same computation runs in parallel on different input data across devices.

Training dataset size

The BIGQUERY training set contains 119.2 billion tokens in C, C++, Go, Java, JavaScript, and Python. CodeGen-Multi 16B was pre-trained on BigQuery, a large-scale dataset of multiple programming languages from GitHub repositories, after being initialized with CodeGen-NL 16B.

Training time and resources

According to the research paper, the CodeGen model was trained on a cluster of 512 GPUs over eight days. The average time spent training per epoch was around 40 minutes.

Model Types

The model incorporates a novel attention mechanism called "active intermediate attention" that selectively attends to relevant parts of the input to generate accurate code expressions.

| Model | Parameters | Highlight |
| --- | --- | --- |
| CODEGEN-NL 350M | 350 million | Good balance between accuracy and efficiency |
| CODEGEN-NL 2.7B | 2.7 billion | High accuracy with a wide range of programming languages |
| CODEGEN-NL 6.1B | 6.1 billion | Improved accuracy and ability to handle more complex code expressions |
| CODEGEN-NL 16.1B | 16.1 billion | State-of-the-art performance on program synthesis tasks |

Key Results

The authors train and release a family of large language models with up to 16.1B parameters, called CodeGen, on natural language and programming language data, and open-source the training library JAXFORMER. The trained model is shown to be competitive with the previous state of the art on zero-shot Python code generation on HumanEval.

| Task | Dataset | Score |
| --- | --- | --- |
| Pass@1 | HumanEval | 29.28 |
| Pass@10 | HumanEval | 49.86 |
| Pass@100 | HumanEval | 75 |
| Multi-Turn Programming | Pile | 30.33 |
| Multi-Turn Programming | Bigquery | 26.27 |
| Multi-Turn Programming | Bigpython | 47.34 |
| Pass@1 | MBPP | 35.28 |
| Pass@10 | MBPP | 67.32 |
| Pass@100 | MBPP | 80.09 |
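The pass@k scores in the table can be read as the probability that at least one of k sampled programs passes a problem's unit tests. A minimal sketch of the unbiased estimator from Chen et al. (2021) follows. The function names `pass_at_k` and `mean_pass_at_k` are chosen here for illustration and do not come from the CodeGen code base.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for a single problem.

    n: number of samples generated for the problem
    c: number of those samples that pass all unit tests
    k: sample budget being scored (1 <= k <= n)
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # If fewer than k samples fail, every size-k subset contains a passing sample.
    if n - c < k:
        return 1.0
    # 1 minus the probability that a random size-k subset contains only failures.
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over problems given (n, c) pairs, as a percentage."""
    return 100.0 * sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

For example, a problem with 10 samples of which 5 pass gives `pass_at_k(10, 5, 1) == 0.5`. Averaging over all problems in a benchmark yields a percentage comparable to the scores above.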

Model Features

The model is based on a decoder-only, transformer-based architecture, and it supports multiple programming languages, including Python, Java, C++, and JavaScript.

Code Property Graph (CPG) Representation

CodeGen employs a novel graph representation called Code Property Graph (CPG), which combines aspects of the Abstract Syntax Tree (AST), Control Flow Graph (CFG), and Data Flow Graph (DFG). This representation is designed to capture both the structural and the semantic information of the source code. The unified representation allows for a more comprehensive understanding of the code and facilitates subsequent graph transformations.

Graph Construction

The first step in the CodeGen framework is graph construction. In this phase, the source code is parsed and converted into a high-level graph representation (CPG). The process involves creating nodes and edges that represent various code elements, such as variables, functions, control flow, and data dependencies. This graph-based representation makes the code structure easier to understand and manipulate.
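To make the idea of parsing source code into nodes and edges concrete, here is a toy sketch that builds only the syntax-tree portion of such a graph using Python's standard `ast` module. It is an illustration under stated assumptions, not CodeGen's implementation: the function name `build_ast_graph` and the `"AST"` edge label are hypothetical, and control-flow and data-flow edges are omitted.

```python
import ast


def build_ast_graph(source: str):
    """Return (nodes, edges) for the syntax tree of `source`.

    nodes: list of node-type names, indexed by node id
    edges: list of (parent_id, child_id, "AST") triples
    """
    nodes, edges = [], []

    def visit(node, parent_id):
        # Assign ids in visiting order so each occurrence gets its own node.
        node_id = len(nodes)
        nodes.append(type(node).__name__)
        if parent_id is not None:
            edges.append((parent_id, node_id, "AST"))
        for child in ast.iter_child_nodes(node):
            visit(child, node_id)

    visit(ast.parse(source), None)
    return nodes, edges
```

Calling `build_ast_graph("x = 1\ny = x + 2\n")` returns a tree rooted at a `Module` node. A fuller code property graph would add further edge types over the same nodes.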

Graph Transformation

The second component of CodeGen is graph transformation. In this stage, the input CPG is modified to produce a new graph that represents the desired code changes. The transformation is guided by rules and can involve tasks like bug fixing, code refactoring, or optimization. These rules are applied iteratively until a satisfactory transformed graph is obtained.

Applicability to Real-World Tasks

CodeGen addresses real-world code modification tasks such as bug fixing, code refactoring, and code optimization. By automating these tasks, the framework can save developers significant time and effort, improving the overall development process. Furthermore, the unified graph representation and transformation capabilities of CodeGen make it a flexible and extensible solution, potentially applicable to various programming languages and modification tasks.

Rule-Based Transformations

CodeGen leverages a rule-based approach to perform graph transformations. This allows developers to define custom rules for specific code modification tasks, providing a high degree of control and adaptability to different scenarios. As a result, the framework can be tailored to address various challenges and requirements in software development and maintenance.
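As a rough illustration of a custom rule applied iteratively until nothing changes, the sketch below uses Python's standard `ast.NodeTransformer` on a syntax tree rather than a full code property graph. The rule `CompareToNoneRule` and the driver `apply_rules` are hypothetical names invented for this example and are not part of CodeGen. `ast.unparse` requires Python 3.9 or later.

```python
import ast


class CompareToNoneRule(ast.NodeTransformer):
    """Hypothetical rule: rewrite `x == None` as `x is None` (and `!=` as `is not`)."""

    def __init__(self):
        self.applied = 0  # number of rewrites performed by this pass

    def visit_Compare(self, node):
        self.generic_visit(node)
        for i, (op, right) in enumerate(zip(node.ops, node.comparators)):
            is_none = isinstance(right, ast.Constant) and right.value is None
            if is_none and isinstance(op, ast.Eq):
                node.ops[i] = ast.Is()
                self.applied += 1
            elif is_none and isinstance(op, ast.NotEq):
                node.ops[i] = ast.IsNot()
                self.applied += 1
        return node


def apply_rules(source, rule_classes, max_rounds=10):
    """Apply every rule repeatedly until no rule fires or max_rounds is reached."""
    tree = ast.parse(source)
    for _ in range(max_rounds):
        fired = 0
        for rule_class in rule_classes:
            rule = rule_class()
            tree = rule.visit(tree)
            fired += rule.applied
        if fired == 0:
            break
    return ast.unparse(ast.fix_missing_locations(tree))
```

Passing `"y = a != None"` with `[CompareToNoneRule]` produces source that uses `is not None`. New rules can be added as further `NodeTransformer` subclasses without changing the driver.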

Active Intermediate Attention

Active Intermediate Attention (AIA) can be understood as a mechanism that enables the model to pay particular attention to the intermediate states generated throughout the code synthesis process.

Model Tasks

The CodeGen model is designed to automate program synthesis tasks that generate code expressions from natural language descriptions.

Multi-Turn Programming (MTP)

The Multi-Turn Programming (MTP) task involves breaking down a single program into multiple subproblems or prompts that must be solved in order. This paradigm divides complex programs into manageable subproblems, enabling more effective program synthesis. The CODEGEN model was tested against an open benchmark of 115 different MTP problem sets, demonstrating the effectiveness of the multi-turn approach in improving program synthesis.
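The multi-turn loop described above can be sketched as follows: each step's prompt is appended to the running context, the model continues from that context, and its output becomes part of the context for the next step. This is a minimal sketch under stated assumptions. `multi_turn_synthesis` is a hypothetical helper, and `toy_generate` is a canned stand-in for a real model call so that the example runs without downloading a checkpoint.

```python
from typing import Callable, List


def multi_turn_synthesis(turn_prompts: List[str], generate: Callable[[str], str]) -> str:
    """Solve a problem turn by turn, feeding earlier prompts and code back as context."""
    context = ""
    for prompt in turn_prompts:
        # Each natural language step is written as a comment so the context stays valid Python.
        context += f"# {prompt}\n"
        # The generator sees all previous prompts and subprograms and continues from there.
        subprogram = generate(context)
        context += subprogram.rstrip("\n") + "\n\n"
    return context


def toy_generate(context: str) -> str:
    """Stand-in for a model call: returns canned code keyed on the latest prompt line."""
    last_prompt = context.rstrip("\n").splitlines()[-1]
    canned = {
        "# Assign the list [3, 1, 2] to a variable named numbers.":
            "numbers = [3, 1, 2]",
        "# Sort numbers in descending order and store the result in result.":
            "result = sorted(numbers, reverse=True)",
    }
    return canned[last_prompt]


program = multi_turn_synthesis(
    [
        "Assign the list [3, 1, 2] to a variable named numbers.",
        "Sort numbers in descending order and store the result in result.",
    ],
    toy_generate,
)
```

In practice, `generate` would wrap a call to a CodeGen checkpoint and return only the newly generated tokens for the current turn.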

Bug Fixing

CodeGen can identify and fix bugs in the source code by analyzing the Code Property Graph (CPG) representation, applying relevant transformation rules, and generating the corrected code. This process can help streamline software development and maintenance by automating bug detection and repair.

Code Refactoring

CodeGen can perform refactoring tasks, which involve restructuring existing code without changing its external behavior. The framework can identify areas in the code that can be improved for readability, maintainability, or adherence to best practices. By applying appropriate transformation rules on the CPG, CodeGen can generate refactored source code that is easier to understand and maintain.

Code Optimization

CodeGen can optimize source code by identifying performance bottlenecks or areas that can be improved for efficiency. Using the CPG representation and transformation rules, the framework can make changes to the code that enhance its performance without altering its functionality. This can improve runtime, memory usage, and overall system performance.

Fine-tuning

Fine-tuning CodeGen with PeriFlow enables you to harness the power of AI-driven code generation more efficiently and cost-effectively. By leveraging PeriFlow's state-of-the-art optimization techniques, you can achieve faster inference, parameter-efficient training, easy integration, cost savings, and scalability when adapting CodeGen for your specific needs. PeriFlow's Serving (Orca) component delivers up to 30x higher throughput at the same latency level compared to NVIDIA Triton + FasterTransformer, making it an ideal choice for serving CodeGen models. This combination of human creativity and AI-powered coding capabilities not only improves productivity but also reduces overall costs, opening up a world of possibilities for developers and organizations alike. Fine-tuning methods for CodeGen will be updated in this section soon.

Benchmark Results

Benchmarking is an important step in evaluating the performance of any language model, including CodeGen. The key results are:

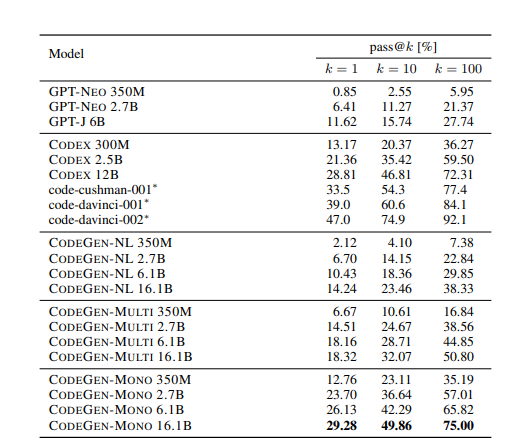

Evaluation results on the HumanEval benchmark. Each pass@k (where k ∈ {1, 10, 100}) for each model is computed with three sampling temperatures (t ∈ {0.2, 0.6, 0.8}), and the highest of the three is displayed, following the evaluation procedure in Chen et al. (2021). Results for the model marked with ∗ are from Chen et al. (2022).

The authors attribute program synthesis capacity to two main factors: 1) the large scale of model size and data size and 2) noisy signal in the training data. Scaling such LLMs requires data and model parallelism. To address these requirements, a training library, JAXFORMER (https://github.com/salesforce/jaxformer), was developed for efficient training on Google’s TPU-v4 hardware. Appendix A of the paper gives further details on the technical implementation and sharding schemes, and Table 6 summarizes the hyper-parameters.
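The selection rule in the caption, reporting for each k the best pass@k across the three sampling temperatures, can be sketched as below. The helper name `best_pass_at_k` is hypothetical, and the scores in `example` are made-up placeholders for illustration only. They are not results from the paper.

```python
def best_pass_at_k(scores_by_temperature):
    """For each k, keep the highest pass@k across sampling temperatures.

    scores_by_temperature maps temperature -> {k: pass@k score}.
    Returns {k: (best_score, temperature_that_achieved_it)}.
    """
    best = {}
    for temperature, scores in scores_by_temperature.items():
        for k, score in scores.items():
            if k not in best or score > best[k][0]:
                best[k] = (score, temperature)
    return best


# Hypothetical scores for illustration only; these are not results from the paper.
example = {
    0.2: {1: 20.0, 10: 30.0, 100: 40.0},
    0.6: {1: 18.0, 10: 35.0, 100: 50.0},
    0.8: {1: 15.0, 10: 33.0, 100: 55.0},
}
```

With these placeholder values, the low temperature gives the best score at k = 1 and the highest temperature gives the best score at k = 100.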

Sample Codes

Running the model on a CPU

# Import necessary libraries
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

# CodeGen is a decoder-only (causal) language model, so it is loaded with AutoModelForCausalLM.
# "Salesforce/codegen-350M-mono" is one of the checkpoints published on the Hugging Face Hub.
model_name = "Salesforce/codegen-350M-mono"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name)

# Set the device to run on the CPU
device = torch.device("cpu")
model.to(device)

# Example input: a natural language description written as a Python comment
# (the mono checkpoints are trained on Python, so the prompt targets Python)
input_text = "# Given a list of numbers, sort them in descending order\n"

# Tokenize the prompt and generate a continuation
input_ids = tokenizer.encode(input_text, return_tensors="pt")
output = model.generate(input_ids.to(device), max_length=128)

# Decode the generated code and print it
decoded_output = tokenizer.decode(output[0], skip_special_tokens=True)
print("Generated code expression: ", decoded_output)

Running the model on GPU

# Import necessary libraries
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

# CodeGen is a decoder-only (causal) language model, so it is loaded with AutoModelForCausalLM.
# "Salesforce/codegen-2B-mono" is one of the checkpoints published on the Hugging Face Hub.
model_name = "Salesforce/codegen-2B-mono"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name)

# Set the device to run on the GPU (requires a CUDA-capable device)
device = torch.device("cuda")
model.to(device)

# Example input: a natural language description written as a Python comment
input_text = "# Given a list of numbers, find the maximum value\n"

# Tokenize the prompt, move it to the GPU, and generate a continuation
input_ids = tokenizer.encode(input_text, return_tensors="pt")
input_ids = input_ids.to(device)
output = model.generate(input_ids, max_length=128)

# Decode the generated code and print it
decoded_output = tokenizer.decode(output[0], skip_special_tokens=True)
print("Generated code expression: ", decoded_output)

Limitations

While the CodeGen model has proven to be an effective tool for program synthesis, it has limitations.

Profanity

All variants of CODEGEN are first pre-trained on the Pile, which includes a small portion of profane language. Focusing on the GitHub data, which best aligns with the use case of program synthesis, a study reports that 0.1% of the data contained profane language and showed sentiment biases against gender and certain religious groups. For this reason, CODEGEN may generate such content as well. In addition to risks in natural language outputs (e.g., docstrings), generated programs may include vulnerabilities and safety concerns, which the model work does not remedy. Models should not be used in applications until these risks have been addressed.

Other LLMs

Polycoder

Polycoder is a deep learning model for multilingual natural language processing tasks


CodeGeeX

CodeGeeX, a large-scale multilingual code generation model with 13 billion parameters pre-trained


CodeRL

CodeRL is a novel framework for program synthesis tasks that combines pretrained language models (LMs)
