CodeRL

CodeRL is a novel framework for program synthesis tasks that combines pretrained language models (LMs) with deep reinforcement learning (RL) techniques to overcome the limitations of existing code generation methods.

An Overview of CodeRL

Actor-Critic Architecture

CodeRL trains the code-generating language model as an "actor network" alongside a "critic network" that evaluates how well the generated code works.

Critical Sampling Strategy

During inference, CodeRL uses a critical sampling strategy that automatically regenerates programs based on feedback from example unit tests and critic scores.
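The selection step of critical sampling can be sketched in Python as below. This is a simplified illustration, not the CodeRL implementation: it assumes each sampled program has already been run on the example unit tests from the problem description and scored by a critic, and the `Candidate` class and `select_candidates` function are hypothetical names. Roughly, the paper uses passing programs as seeds for further refinement and sends the failing programs with the highest critic scores to repair; the sketch shows only that split and the critic-based ranking.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Candidate:
    # Hypothetical record for one sampled program
    code: str
    passed_example_tests: bool  # result of running the example unit tests
    critic_score: float         # critic's estimated probability of passing


def select_candidates(
    candidates: List[Candidate], top_k: int
) -> Tuple[List[Candidate], List[Candidate]]:
    """Split candidates by example-test outcome and rank each group by critic score.

    Returns (to_refine, to_repair): passing programs that could seed further
    sampling, and the top_k failing programs that could be sent for repair.
    """
    passing = [c for c in candidates if c.passed_example_tests]
    failing = [c for c in candidates if not c.passed_example_tests]
    # Highest critic score first within each group
    passing.sort(key=lambda c: c.critic_score, reverse=True)
    failing.sort(key=lambda c: c.critic_score, reverse=True)
    return passing, failing[:top_k]


pool = [
    Candidate("def f(x): return x + 1", True, 0.91),
    Candidate("def f(x): return x - 1", False, 0.40),
    Candidate("def f(x): return x * 1", False, 0.72),
    Candidate("def f(x): return x+1 ", True, 0.55),
]
to_refine, to_repair = select_candidates(pool, top_k=1)
print([c.critic_score for c in to_refine])  # [0.91, 0.55]
print([c.critic_score for c in to_repair])  # [0.72]
```

Ranking by the critic lets the limited repair budget go to failing programs the critic considers closest to correct.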

New SOTA Results

CodeRL achieves new state-of-the-art (SOTA) results on the challenging APPS benchmark and, through zero-shot transfer, on the simpler MBPP benchmark.

About Model

CodeRL is a framework for program synthesis that combines pretrained language models (LMs) with deep reinforcement learning (RL) to overcome the limitations of existing code generation methods. Those methods typically fine-tune a pretrained LM with a standard supervised procedure, which ignores important signals in the problem specification such as unit tests. As a result, they may struggle to solve complex, unseen coding tasks. CodeRL addresses this by combining pretrained LMs with RL: during training, the code-generating LM acts as an actor network, while a critic network is introduced to predict the functional correctness of the generated programs. The critic provides dense feedback signals to the actor, so the actor learns from how its programs perform on unit tests rather than only from ground-truth solutions.
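To make the feedback signal concrete, here is a minimal Python sketch of the reward and the policy-gradient loss value for one sampled program. The reward values (-1.0 for a compile error, -0.6 for a runtime error, -0.3 for failed tests, +1.0 when all tests pass) follow the CodeRL paper; the function names, the outcome strings, and the use of a greedily decoded program as the baseline are assumptions for this illustration. Optional per-token weights stand in for critic estimates. The sketch only computes the loss value; in a real training loop the log-probabilities would be tensors and gradients would come from automatic differentiation.

```python
from typing import List, Optional

# Reward per execution outcome, following the values reported in the CodeRL paper
REWARDS = {
    "compile_error": -1.0,
    "runtime_error": -0.6,
    "failed_tests": -0.3,
    "passed_tests": 1.0,
}


def reward(outcome: str) -> float:
    """Map a unit-test execution outcome to a scalar reward."""
    return REWARDS[outcome]


def rl_loss(
    sample_token_logprobs: List[float],
    sample_outcome: str,
    baseline_outcome: str,
    token_weights: Optional[List[float]] = None,
) -> float:
    """Policy-gradient loss value for one sampled program.

    The advantage is r(sample) - r(baseline), where the baseline is assumed to be
    a greedily decoded program. Optional per-token weights (for example, critic
    estimates) scale each token's log-probability.
    """
    advantage = reward(sample_outcome) - reward(baseline_outcome)
    if token_weights is None:
        token_weights = [1.0] * len(sample_token_logprobs)
    weighted = sum(w * lp for w, lp in zip(token_weights, sample_token_logprobs))
    # Minimizing -advantage * sum(log p) raises the likelihood of samples that
    # beat the baseline and lowers it for samples that do worse.
    return -advantage * weighted


loss = rl_loss([-0.5, -1.0], "passed_tests", "failed_tests")
```

Here the sample passes all tests while the baseline fails them, so the advantage is 1.3 and minimizing the loss makes that sample more likely.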

Model Highlights

CodeRL's main capabilities are listed below.

- CodeRL builds upon the encoder-decoder architecture of CodeT5 by incorporating improved learning objectives, larger model sizes for increased learning capacity, and enhanced pretraining data to enable more accurate and efficient program synthesis.

- CodeRL shows strong zero-shot transfer: a model trained on APPS achieves new state-of-the-art results on the simpler MBPP benchmark without being fine-tuned on it, highlighting its ability to generalize to previously unseen tasks.

- CodeRL is open source: its codebase and model checkpoints are publicly available, so developers and researchers can modify and extend it and explore new ideas in program synthesis and code generation.

- By combining pretrained language models with deep reinforcement learning techniques, CodeRL effectively generates programs based on natural language problem descriptions. This approach addresses the limitations of traditional supervised fine-tuning methods, resulting in improved performance in complex coding tasks.

Training Details

Training data

The CodeT5 backbones are pretrained on CodeSearchNet (CSN) and, for the CodeT5-large-ntp-py variant, also on GitHub Code Python (GCPY). The CodeRL model is then fine-tuned on the APPS benchmark.

Training dataset size

The research paper does not provide explicit information about the training dataset size used for CodeRL. However, it is worth noting that the pretraining step involves using a large corpus of public Python code and natural language data, typically involving millions or even billions of tokens.

Training procedure

CodeRL training has two stages: pretraining and fine-tuning. During pretraining, the model learns from a large corpus of public Python code and natural language data. During fine-tuning, the model is trained on natural language problem descriptions paired with Python programs: it is first warmed up on ground-truth programs and then trained further with deep reinforcement learning on generated programs.

Training time and resources

The research paper does not provide specific details about the training time and resources required for CodeRL. However, training large-scale language models like CodeRL typically involves significant computational resources and time.

Model Types

CodeRL combines methods from natural language processing and reinforcement learning, with the aim of automating parts of software development and improving the quality, performance, and efficiency of code. The model variants associated with CodeRL are summarized below.

| Model | Highlight |
|---|---|
| CodeT5-large | A 770M-parameter CodeT5 model pretrained with the Masked Span Prediction (MSP) objective on CodeSearchNet (CSN); it obtained new state-of-the-art results on various CodeXGLUE benchmarks. |
| CodeT5-large-ntp-py | A 770M-parameter CodeT5 model pretrained with MSP on CSN and GitHub Code Python (GCPY), then with the Next Token Prediction (NTP) objective on GCPY. |
| CodeT5-finetuned_critic | A critic model based on CodeT5-base that classifies a generated program's outcome as Compile Error, Runtime Error, Failed Tests, or Passed Tests. |
| CodeT5-finetuned_critic_binary | A critic model, also based on CodeT5-base, trained to predict only whether a program passes or fails its unit tests. It is used to guide program generation during inference. |
| CodeT5-finetuned_CodeRL | A CodeT5 model initialized from the pretrained CodeT5-large-ntp-py and then fine-tuned on APPS with the CodeRL training framework. |
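The Next Token Prediction objective used for CodeT5-large-ntp-py can be illustrated with a small sketch for an encoder-decoder model: each code sample is split at a random pivot, the text before the pivot becomes the encoder input, and the text after it becomes the decoder target. The function name `ntp_split`, the character-level split (a real pipeline would split token sequences), and the 10% to 90% pivot range are assumptions for this illustration.

```python
import random
from typing import Tuple


def ntp_split(code: str, rng: random.Random,
              low: float = 0.1, high: float = 0.9) -> Tuple[str, str]:
    """Split a code sample at a random pivot for next-token-prediction pretraining.

    Text before the pivot is the encoder input; text after it is the decoder
    target. The pivot stays between the `low` and `high` fractions of the
    sample length (the 10%-90% default is an assumption for this sketch).
    """
    n = len(code)
    pivot = rng.randint(int(n * low), int(n * high))  # inclusive bounds
    return code[:pivot], code[pivot:]


rng = random.Random(0)
sample = "def add(a, b):\n    return a + b\n"
source, target = ntp_split(sample, rng)
```

Restricting the pivot keeps both the encoder input and the decoder target non-trivial, so the model always sees some context and always has something to predict.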

Key results

The pass@k metrics are computed with the official implementation from the HumanEval benchmark, which uses an unbiased estimator: the probability that at least one of k programs drawn from the n generated samples passes all unit tests, averaged over all possible sets of k programs.
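A minimal Python sketch of the unbiased pass@k estimator follows, assuming n programs were sampled for a problem and c of them pass all unit tests. The function name `pass_at_k` is hypothetical; the official HumanEval code computes the same quantity with a numerically stable product rather than binomial coefficients, while Python's `math.comb` works with exact integers.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: programs sampled, c: programs passing all unit tests, k: evaluation budget.
    Returns 1 - C(n - c, k) / C(n, k), the probability that at least one of k
    programs drawn without replacement is correct.
    """
    if n - c < k:
        # Every size-k subset must contain at least one correct program
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


# Benchmark-level pass@k is the mean of the per-problem estimates
results = [(10, 3, 1), (10, 0, 1), (10, 10, 1)]  # (n, c, k) per problem
score = sum(pass_at_k(n, c, k) for n, c, k in results) / len(results)
```

Averaging over every possible size-k subset avoids the high variance of literally drawing k programs once per problem.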

| Task / Metric | Dataset | Score |
|---|---|---|
| Pass@1 | APPS | 2.69 |
| Pass@5 | APPS | 6.81 |
| Pass@1000 | APPS | 20.98 |
| 1@k | APPS | 8.48 |
| 5@k | APPS | 12.62 |
| Code-to-Text generation | CodeXGLUE | 19.87 |
| Text-to-Code generation | CodeXGLUE | 45.08 |
| Code-to-Code generation (Java to C#) | CodeXGLUE | 83.56 |
| Code-to-Code generation (C# to Java) | CodeXGLUE | 79.77 |
| Code refinement (medium) | CodeXGLUE | 89.22 |
| Zero-shot transfer | MBPP | 63 |

Model Features

CodeRL's models build on CodeT5, which uses the T5 encoder-decoder architecture that has been widely used for natural language processing tasks.

Reinforcement Learning Integration

The framework incorporates deep reinforcement learning with an actor-critic architecture during training, allowing the model to learn more effectively and adapt better to complex coding tasks.

Critic Network

The critic network is introduced to predict the functional correctness of generated programs, providing dense feedback signals to the actor network (the code-generating language model) during training.
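The critic is trained on programs labeled by what happens when they are executed against unit tests. The sketch below, with hypothetical names `Outcome`, `run_outcome`, and `critic_label`, shows one way to derive those labels: a program that fails to compile is a compile error, an exception while running the tests is a runtime error, and otherwise the label depends on whether every test passes. Expressing tests as Python boolean expressions, the class indices, and mapping compile and runtime errors to "fail" for the binary critic are assumptions. Executing generated code with `exec` is unsafe outside a sandbox.

```python
from enum import IntEnum
from typing import Dict, List


class Outcome(IntEnum):
    # Execution outcomes the four-class critic predicts (index order is an assumption)
    COMPILE_ERROR = 0
    RUNTIME_ERROR = 1
    FAILED_TESTS = 2
    PASSED_TESTS = 3


def run_outcome(code: str, tests: List[str]) -> Outcome:
    """Execute a candidate program and classify the result.

    `tests` are Python boolean expressions such as "f(1) == 2".
    WARNING: exec runs arbitrary code; use a sandbox for untrusted programs.
    """
    try:
        compiled = compile(code, "<candidate>", "exec")
    except SyntaxError:
        return Outcome.COMPILE_ERROR
    namespace: Dict[str, object] = {}
    try:
        exec(compiled, namespace)
        results = [bool(eval(test, namespace)) for test in tests]
    except Exception:
        return Outcome.RUNTIME_ERROR
    return Outcome.PASSED_TESTS if all(results) else Outcome.FAILED_TESTS


def critic_label(outcome: Outcome, binary: bool = False) -> int:
    """Four-class label, or 1 (passed) / 0 (anything else) for a binary critic."""
    if binary:
        return int(outcome == Outcome.PASSED_TESTS)
    return int(outcome)
```

Because the labels come from execution rather than from comparison with a reference solution, a correct program that differs textually from the ground truth is still labeled as passing.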

Extended Encoder-Decoder Architecture

CodeRL builds on the encoder-decoder architecture of CodeT5, adding enhanced learning objectives, larger model sizes, and better pretraining data to improve program synthesis performance.

Model Tasks

CodeRL is capable of performing a wide range of code-related tasks.

Basic Programming Tasks

CodeRL can generate simple programs or scripts based on natural language problem descriptions. These tasks may involve basic operations such as arithmetic calculations, data manipulation, or simple control structures like loops and conditionals.

Partial Program Synthesis Tasks

In these tasks, CodeRL can generate or complete portions of existing code. Given a partially complete program and a natural language description of the missing functionality, CodeRL can synthesize the code required to complete the program.

Question-Answering Tasks

While CodeRL is primarily designed for code generation, it could potentially be adapted to answer questions about programming languages, concepts, and techniques by drawing on its understanding of natural language and code. The CodeRL paper does not evaluate this use case, so its accuracy on such questions is not established.

Synthetic Navigation Tasks

In programming, synthetic navigation tasks could involve navigating through a codebase or generating code snippets to accomplish specific goals. CodeRL could potentially be applied to such tasks, for example by generating the code needed to reach a particular outcome, although this use case is not evaluated in the CodeRL paper.

Code Understanding and Generation Tasks

CodeRL targets tasks that involve understanding code semantics and generating new code from natural language descriptions, such as generating programs in response to specific problem statements. Its CodeT5 backbone has also been applied to related tasks such as code summarization, defect detection, and code refinement.

Fine-tuning

While the model is pre-trained on a large-scale code corpus, it can be fine-tuned on specific code-related tasks to improve performance.

Finetuning with Ground-truth Programs

The CodeRL model can be fine-tuned as a program synthesis model that generates code from a natural language problem description. The model first goes through a warm-up stage on ground-truth programs before a further fine-tuning stage on synthetic (generated) programs. The warm-up uses two scripts, train_actor.sh and train_actor_deepspeed.sh, which contain the necessary parameters. Once trained, the model takes a problem description as input and outputs a corresponding Python solution program. Model checkpoints are saved in the exps/ folder.
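The warm-up stage optimizes a standard cross-entropy objective on ground-truth programs. Here is a minimal Python sketch of that loss under teacher forcing, assuming the model's per-step probability distributions are already available as plain lists; the function name and inputs are hypothetical, and the training scripts handle this with a real model and tensors.

```python
import math
from typing import List, Sequence


def cross_entropy_loss(step_probs: Sequence[Sequence[float]],
                       target_ids: List[int]) -> float:
    """Negative log-likelihood of a ground-truth program, summed over tokens.

    step_probs[t] is the model's distribution over the vocabulary at decoding
    step t, conditioned on the problem description and the ground-truth tokens
    before t (teacher forcing). target_ids[t] is the ground-truth token id.
    Many training frameworks report the mean over tokens instead of the sum.
    """
    if len(step_probs) != len(target_ids):
        raise ValueError("need one distribution per target token")
    return -sum(math.log(dist[tok]) for dist, tok in zip(step_probs, target_ids))


# Toy vocabulary of 3 tokens and a 2-token ground-truth program
probs = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]
loss = cross_entropy_loss(probs, [0, 1])
```

The loss shrinks toward zero as the model assigns probability close to 1 to each ground-truth token, which gives the actor a reasonable starting policy before reinforcement learning begins.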

Finetuning with Generated Programs

The CodeRL repository provides two scripts, train_actor_rl.sh and train_actor_rl_deepspeed.sh, for training pretrained language models on synthetic generated programs. During training, a CodeT5-large model that has already been fine-tuned is loaded and trained further on generated programs and ground-truth programs in alternating steps. The resulting model checkpoints are saved in the exps/ folder.
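The alternating schedule described above can be sketched as a small Python generator. It is an illustration only: the function name, the strict one-to-one alternation, and the batch placeholders are assumptions, and the actual schedule is controlled by the repository's training scripts. Ground-truth batches would be trained with the cross-entropy loss and generated-program batches with the reinforcement learning loss.

```python
from itertools import cycle
from typing import Iterator, Sequence, Tuple, TypeVar

T = TypeVar("T")


def alternate_batches(gt_batches: Sequence[T], generated_batches: Sequence[T],
                      num_steps: int) -> Iterator[Tuple[str, T]]:
    """Yield (source, batch) pairs alternating between ground-truth and
    generated-program batches, cycling through each list as needed."""
    if not gt_batches or not generated_batches:
        raise ValueError("both batch lists must be non-empty")
    gt = cycle(gt_batches)
    gen = cycle(generated_batches)
    for step in range(num_steps):
        if step % 2 == 0:
            yield "ground_truth", next(gt)
        else:
            yield "generated", next(gen)


schedule = list(alternate_batches(["gt0", "gt1"], ["gen0"], num_steps=5))
for source, batch in schedule:
    # A real loop would apply the cross-entropy loss to ground-truth batches
    # and the RL loss to generated batches here.
    print(source, batch)
```

Interleaving ground-truth steps keeps the actor anchored to correct reference programs while the RL steps push it toward programs that pass unit tests.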

Benchmark Results

Benchmarking is an important process for evaluating the performance of any language model, including CodeRL. The key results are shown below.

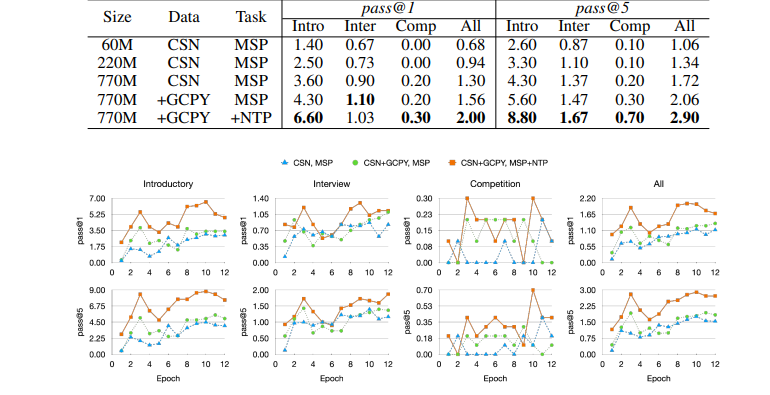

Table: Ablation results of CodeT5 pretrained model variants. We report the results of models pretrained with different configurations of model size, pretraining data, and pretraining task. CSN: CodeSearchNet, GCPY: GitHub Code Python, MSP: Masked Span Prediction, NTP: Next Token Prediction. For a fair comparison, all models are fine-tuned only with the cross-entropy loss L_ce on APPS.

Figure: Ablation results by fine-tuning epochs. We report the fine-tuning progress of CodeT5-770M models pretrained with different configurations of pretraining data and pretraining task. CSN: CodeSearchNet, GCPY: GitHub Code Python, MSP: Masked Span Prediction, NTP: Next Token Prediction. All models are fine-tuned only with the cross-entropy loss L_ce on APPS.

Sample Codes

Running the model on a CPU

```python
import torch
from transformers import AutoTokenizer, T5ForConditionalGeneration

# Load the CodeT5-large-ntp-py checkpoint (listed under Model Types) and its tokenizer
model_name = "Salesforce/codet5-large-ntp-py"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = T5ForConditionalGeneration.from_pretrained(model_name)

# Run on the CPU
device = torch.device("cpu")
model.to(device)
model.eval()

# Encode a code prompt
prompt = "def hello_world():"
input_ids = tokenizer(prompt, return_tensors="pt").input_ids.to(device)

# Generate a completion with sampling
with torch.no_grad():
    output = model.generate(input_ids, max_length=128, do_sample=True)

# Decode the generated output
output_text = tokenizer.decode(output[0], skip_special_tokens=True)
print(output_text)
```

Running the model on a GPU

```python
import torch
from transformers import AutoTokenizer, T5ForConditionalGeneration

# Load the CodeT5-large-ntp-py checkpoint (listed under Model Types) and its tokenizer
model_name = "Salesforce/codet5-large-ntp-py"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = T5ForConditionalGeneration.from_pretrained(model_name)

# Use the GPU if one is available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model.to(device)
model.eval()

# Encode a code prompt
prompt = "def hello_world():"
input_ids = tokenizer(prompt, return_tensors="pt").input_ids.to(device)

# Generate a completion with sampling
with torch.no_grad():
    output = model.generate(input_ids, max_length=128, do_sample=True)

# Decode the generated output
output_text = tokenizer.decode(output[0], skip_special_tokens=True)
print(output_text)
```

Limitations

While CodeRL has proven effective for program synthesis, it has several limitations.

Biased Code and Toxic Comments

Language models trained on code may generate biased code and even toxic natural language as code comments, which can be a concern for any code generation system, including CodeRL.

Security Vulnerabilities

Pretraining data from public GitHub repositories may contain security vulnerabilities, so synthesis models like CodeRL may generate programs with weak security measures. Qualified human developers should verify the output.

Computational Cost

Training the critic model in addition to the original language model (the actor network) increases CodeRL's computational cost. However, the paper notes that the cost of fine-tuning the critic is minor compared with pretraining the original language model.

Alignment Failure

Like other code generation systems, CodeRL may exhibit alignment failure, where the model outputs are not entirely aligned with the user's intent. While leveraging unit tests during training and inference can help improve alignment, this remains a limitation.

Dependence on Human Verification

Although CodeRL generates improved code, its output still requires verification by qualified human developers to ensure the code's correctness, safety, and alignment with user requirements.

Other LLMs

PolyCoder

PolyCoder is an open-source code language model trained on source code in 12 programming languages.

CodeGeeX

CodeGeeX is a large-scale multilingual code generation model with 13 billion parameters.
