Code LLMs Explained

CodeT5

CodeT5, developed by Salesforce Research, is a Transformer model that improves code understanding and generation by exploiting developer-assigned identifiers. The method includes a pre-training task that teaches the model to distinguish which code tokens are identifiers, as well as a dual-generation task that leverages user-written code comments. Experiments show that CodeT5 outperforms previous methods on a range of understanding and generation tasks and captures code semantics better.

An Overview of CodeT5

CodeT5 leverages the power of large-scale pre-training on code data, combined with fine-tuning on downstream code-related tasks, to improve the accuracy and efficiency of code-related applications.

SOTA results on 14 sub-tasks in CodeXGLUE

The CodeT5 paper reports state-of-the-art results on 14 sub-tasks of the CodeXGLUE code intelligence benchmark.

CodeT5 is a newly developed encoder-decoder model

CodeT5 is an encoder-decoder model designed for programming languages. It was pre-trained on a dataset of 8.35 million functions in eight programming languages.

CodeT5 achieves over 99% F1 on identifier tagging for all PLs.

Researchers also evaluated identifier tagging and found that CodeT5 achieves over 99% F1 for every programming language (PL), showing that it can reliably distinguish identifiers in code.
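Identifier tagging is a per-token binary classification: each code token is labeled 1 if it is an identifier and 0 otherwise, and the score is F1 on the identifier class. The sketch below is a minimal illustration under our own assumptions: it derives gold labels from Python's standard `tokenize` module (a simplified stand-in for a proper parser), treats every non-keyword name as an identifier, and scores a hypothetical prediction. The helper names `identifier_tags` and `identifier_f1` are hypothetical.

```python
import io
import keyword
import tokenize

# Token types that carry no code content and are skipped for tagging
_SKIP = {tokenize.NEWLINE, tokenize.NL, tokenize.INDENT, tokenize.DEDENT,
         tokenize.ENDMARKER, tokenize.COMMENT}


def identifier_tags(source: str) -> list[tuple[str, int]]:
    """Return (token, label) pairs: 1 for identifiers, 0 for everything else."""
    tags = []
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type in _SKIP:
            continue
        # Names that are not Python keywords are treated as identifiers
        is_identifier = tok.type == tokenize.NAME and not keyword.iskeyword(tok.string)
        tags.append((tok.string, int(is_identifier)))
    return tags


def identifier_f1(gold: list[int], pred: list[int]) -> float:
    """F1 score for the positive (identifier) class."""
    if len(gold) != len(pred):
        raise ValueError("gold and pred must have the same length")
    tp = sum(1 for g, p in zip(gold, pred) if g == 1 and p == 1)
    fp = sum(1 for g, p in zip(gold, pred) if g == 0 and p == 1)
    fn = sum(1 for g, p in zip(gold, pred) if g == 1 and p == 0)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


tags = identifier_tags("def add(a, b):\n    return a + b\n")
gold = [label for _, label in tags]
# A hypothetical model that misses the last identifier
pred = gold[:-1] + [0]
print(round(identifier_f1(gold, pred), 3))
```

Here four of the five identifiers are found with no false positives, so precision is 1.0, recall is 0.8, and F1 is about 0.889.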

About Model

CodeT5 is a pre-trained Transformer model designed specifically for code understanding and generation tasks. The model is based on the T5 architecture, which has been widely used for natural language processing tasks. CodeT5 leverages the power of large-scale pre-training on code data, combined with fine-tuning on downstream code-related tasks, to improve the accuracy and efficiency of code-related applications.

Model Highlights

CodeT5 is a state-of-the-art pre-trained model designed specifically for code understanding and generation tasks.

- CodeT5 learns high-quality representations of code from a large corpus of code data.

- The model has been shown to be effective on several code-related tasks, including code summarization, code generation, and code translation.

- CodeT5 represents an important advance in the field of code understanding and generation, with the potential to improve the accuracy and efficiency of a wide range of code-related applications.

Training Details

Training data

CodeT5 is pre-trained on CodeSearchNet, which covers six programming languages (PLs) with both unimodal (code only) and bimodal (code paired with natural language comments) data. Two additional C/C# datasets collected from BigQuery ensure that the PLs of all downstream tasks overlap with the pre-training data.
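Each bimodal example pairs a function with its natural language comment, and the dual-generation task trains on both directions of such a pair: comment to code (NL-PL) and code to comment (PL-NL). The sketch below shows only that data transformation. The `<python>` and `<en>` target-language tags and the names `BimodalPair` and `dual_generation_examples` are hypothetical and are not CodeT5's exact input format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BimodalPair:
    """One bimodal training instance: a function and its comment."""
    code: str
    comment: str
    lang: str  # programming language of `code`, e.g. "python"


def dual_generation_examples(pair: BimodalPair) -> list[tuple[str, str]]:
    """Turn one bimodal pair into (source, target) examples for both directions.

    The leading tag names the language the model should produce
    (a hypothetical convention used only for this sketch).
    """
    nl_to_pl = (f"<{pair.lang}> {pair.comment}", pair.code)  # comment -> code
    pl_to_nl = (f"<en> {pair.code}", pair.comment)           # code -> comment
    return [nl_to_pl, pl_to_nl]


pair = BimodalPair(code="def inc(x):\n    return x + 1",
                   comment="Increment x by one.", lang="python")
for source, target in dual_generation_examples(pair):
    print(repr(source), "->", repr(target))
```

A single bimodal pair therefore yields two training examples, one per generation direction.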

Training Procedure

Tokenization is critical to the success of pre-trained language models such as BERT and GPT. CodeT5 uses its own byte-level BPE tokenizer (following GPT-2) with a vocabulary size of 32,000, the same vocabulary size as T5. The vocabulary also includes special tokens such as [PAD], [CLS], [SEP], and [MASK0], ..., [MASK99].
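The [MASK0] to [MASK99] tokens serve as sentinels for the denoising (masked span prediction) objective: each corrupted span in the input is replaced by a sentinel, and the decoder learns to output each sentinel followed by the tokens it hid. A minimal sketch, assuming whitespace-split tokens and spans supplied by the caller (during pre-training the spans are sampled randomly; fixing them here keeps the output deterministic). `mask_spans` is a hypothetical helper, not part of CodeT5's released code.

```python
def mask_spans(tokens: list[str], spans: list[tuple[int, int]]) -> tuple[list[str], list[str]]:
    """Build a (source, target) pair for span denoising.

    `spans` are sorted, non-overlapping, half-open [start, end) token ranges.
    Each span is replaced in the source by a sentinel [MASKi]; the target
    lists each sentinel followed by the tokens it replaced.
    """
    if len(spans) > 100:
        raise ValueError("only [MASK0] ... [MASK99] are available")
    source, target = [], []
    prev_end = 0
    for i, (start, end) in enumerate(spans):
        if not (prev_end <= start < end <= len(tokens)):
            raise ValueError("spans must be sorted, non-empty, non-overlapping, and in range")
        sentinel = f"[MASK{i}]"
        source.extend(tokens[prev_end:start])  # keep the unmasked tokens
        source.append(sentinel)                # hide the span behind a sentinel
        target.append(sentinel)                # the target announces the sentinel...
        target.extend(tokens[start:end])       # ...then spells out the hidden tokens
        prev_end = end
    source.extend(tokens[prev_end:])
    return source, target


tokens = "def add ( a , b ) : return a + b".split()
src, tgt = mask_spans(tokens, [(1, 2), (8, 10)])
print(" ".join(src))
print(" ".join(tgt))
```

The encoder sees the corrupted function, while the decoder only has to reproduce the hidden spans, which keeps the target sequence short.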

Training dataset size

The CODEX dataset comprises over 1.1 million functions with their corresponding natural language descriptions. The dataset is split into a training set (70%), validation set (10%), and test set (20%).
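The paragraph above gives the split proportions but not how examples are assigned to each split. For about 1.1 million functions, 70/10/20 works out to roughly 770,000 training, 110,000 validation, and 220,000 test examples. A minimal sketch, assuming a seeded random shuffle (our choice, not a documented procedure); `split_70_10_20` is a hypothetical helper.

```python
import random


def split_70_10_20(items: list, seed: int = 0) -> tuple[list, list, list]:
    """Shuffle and split items into 70% train, 10% validation, 20% test."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n = len(shuffled)
    n_train = n * 70 // 100
    n_val = n * 10 // 100
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # the remainder (about 20%) goes to test
    return train, val, test


train, val, test = split_70_10_20(list(range(1000)))
print(len(train), len(val), len(test))
```

Integer arithmetic avoids floating-point rounding, and assigning the remainder to the test set guarantees that every example lands in exactly one split.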

Training Time and Resources

The total training time for CodeT5-small and CodeT5-base is 5 and 12 days, respectively. CodeT5 is pre-trained with the denoising objective for 100 epochs and then with bimodal dual training for another 50 epochs, on a cluster of 16 NVIDIA A100 GPUs with 40 GB of memory each.

Model Types

CodeT5 is available in the following variants.

| Model | Parameters | Highlight |
|---|---|---|
| CodeT5-small | 60 million | Smaller and faster than CodeT5-base (220 million parameters), making it more efficient and easier to deploy on resource-constrained devices. |
| Dual-gen | Varies | Designed to generate code from both natural language and code inputs, allowing for tasks such as code completion with partial code input. |
| Multi-task | Varies | Handles multiple code-related tasks with a single model, which can make learning more efficient and improve generalization across tasks. |
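A multi-task variant trains one model on several tasks at once. One common way to do this, following T5, is to prefix each input with a task description so the model knows which output is expected, and to interleave examples from different tasks. The prefix strings, task keys, round-robin mixing, and the name `mix_tasks` below are hypothetical choices for illustration, not CodeT5's exact training recipe.

```python
from itertools import zip_longest

# Hypothetical task prefixes, in the style of T5's text prefixes
TASK_PREFIXES = {
    "summarize_python": "Summarize Python: ",
    "translate_java_cs": "Translate Java to CSharp: ",
}


def mix_tasks(datasets: dict[str, list[tuple[str, str]]]) -> list[tuple[str, str]]:
    """Prefix every source with its task and interleave tasks round-robin."""
    prefixed = [
        [(TASK_PREFIXES[task] + source, target) for source, target in pairs]
        for task, pairs in datasets.items()
    ]
    mixed = []
    for group in zip_longest(*prefixed):
        # zip_longest pads shorter task lists with None; skip the padding
        mixed.extend(example for example in group if example is not None)
    return mixed


batch = mix_tasks({
    "summarize_python": [("def f(): pass", "Do nothing."),
                         ("def g(): return 1", "Return one.")],
    "translate_java_cs": [("int x = 1;", "int x = 1;")],
})
for source, target in batch:
    print(source, "=>", target)
```

Round-robin mixing keeps small tasks from being drowned out early in training; real setups often use weighted sampling instead.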

Key Results

Experiments show that CodeT5 outperforms previous methods on a range of understanding and generation tasks. Selected results are listed below.

| Task | Metric | Score |
|---|---|---|
| Code summarization | BLEU-4 | 19.77 |
| Code generation | BLEU | 41.48 |
| Code generation | CodeBLEU | 44.1 |
| Code generation | EM | 22.7 |
| Code translation (Java to C#) | BLEU-4 | 84.03 |
| Code translation (C# to Java) | BLEU-4 | 79.87 |
| Code refinement (medium) | BLEU-4 | 87.64 |
| Code defect detection | Accuracy | 65.78 |
| Code clone detection | F1 | 97.2 |

Model Features


Adapted for Code Generation

CodeT5 has been fine-tuned for code generation tasks, making it well-suited for tasks such as code summarization, code translation, and generating code snippets from natural language descriptions.

Pre-trained on Large-scale Code Datasets

CodeT5 is pre-trained on large-scale code data mined from GitHub repositories, namely CodeSearchNet plus additional C/C# data from BigQuery. This extensive pre-training enables the model to better understand programming language syntax, semantics, and common coding patterns.

Multilingual Support

CodeT5 has multilingual capabilities, enabling it to understand and generate code snippets in multiple programming languages, such as Python, Java, JavaScript, and more. This makes the model versatile and useful for developers working with various programming languages.

Model Tasks

CodeT5 can perform a wide range of code-related tasks. These include generation tasks, such as producing code from natural language, natural language from code, or code from other code, and understanding tasks, such as defect and clone detection.

Code summarization

Code summarization automatically generates a brief natural language summary that accurately captures what a piece of code does, without manual effort from developers. This aids code comprehension and maintenance.

Code generation

Code generation refers to automatically generating code based on specific requirements or input, potentially saving developers significant time and effort. This can range from simple code snippets to more complex applications and systems.

Code refinement

Code refinement automatically improves existing code, making it easier to understand, maintain, and modify. This can include fixing bugs, optimizing code for performance, reducing complexity, and improving documentation. In the CodeXGLUE benchmark, refinement is evaluated as automatically fixing buggy Java functions, split into small and medium subsets.

Code defect detection

Code defect detection involves automatically identifying errors and bugs in code, allowing developers to fix issues before they become bigger problems. This can range from syntax errors to more complex logical errors and can help ensure software reliability.

Code clone detection

Code clone detection automatically identifies code segments that are identical or similar to each other, which can indicate redundant code or potential issues with code maintenance. This can help improve code quality and reduce the risk of errors or bugs.
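To make the input and output of clone detection concrete, here is a deliberately simple baseline that is not how CodeT5 works: it scores a pair of code fragments by the Jaccard similarity of their token sets and flags the pair as a clone above a threshold. CodeT5 instead learns this judgment from labeled pairs during fine-tuning. The tokenizer regex, the 0.8 threshold, and the function names are hypothetical.

```python
import re

# Crude lexer: runs of word characters, or single punctuation characters
_TOKEN = re.compile(r"\w+|[^\w\s]")


def token_set(code: str) -> set[str]:
    """Split code into a set of word and punctuation tokens."""
    return set(_TOKEN.findall(code))


def jaccard(code_a: str, code_b: str) -> float:
    """Size of the shared token set divided by the size of the combined set."""
    a, b = token_set(code_a), token_set(code_b)
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def is_clone(code_a: str, code_b: str, threshold: float = 0.8) -> bool:
    return jaccard(code_a, code_b) >= threshold


print(is_clone("return a + b", "return b + a"))  # True: same tokens, different order
print(is_clone("return a + b", "print(x)"))      # False: no shared tokens
```

Because it ignores order and meaning, this baseline misses clones whose identifiers were renamed or whose logic was restructured, which is where a learned model helps.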

Fine-tuning

A CodeT5-base checkpoint has been fine-tuned on CodeSearchNet data in a multilingual setting (Ruby, JavaScript, Go, Python, Java, PHP) for code summarization; CodeT5 itself was introduced in an EMNLP 2021 paper. You can likewise fine-tune a pre-trained checkpoint such as codet5-small for a downstream task of interest, such as code summarization, code generation, code translation, code refinement, code defect detection, or code clone detection. The example notebook "Fine-tune CodeT5 to generate docstrings from Ruby code.ipynb" shows how to fine-tune CodeT5 to generate docstrings from Ruby code.
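Fine-tuning for docstring generation starts from (code, docstring) pairs. The notebook mentioned above works with Ruby; as an assumption, this minimal sketch uses Python and the standard `ast` module (Python 3.9+ for `ast.unparse`) to extract such pairs. It removes each docstring from the function body so the model cannot simply copy it, and keeps only the docstring's first line as the summary. `docstring_pairs` is a hypothetical helper; the resulting pairs would then be tokenized and passed to a sequence-to-sequence trainer.

```python
import ast
import copy


def docstring_pairs(source: str) -> list[tuple[str, str]]:
    """Extract (code_without_docstring, first_docstring_line) pairs."""
    pairs = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            continue
        doc = ast.get_docstring(node)
        if not doc:
            continue  # functions without docstrings give no training target
        fn = copy.deepcopy(node)
        # Drop the docstring statement; keep a `pass` if nothing else remains
        fn.body = fn.body[1:] or [ast.Pass()]
        summary = doc.strip().splitlines()[0]
        pairs.append((ast.unparse(fn), summary))
    return pairs


SOURCE = '''
def add(a, b):
    """Add two numbers.

    Works for any objects that support +.
    """
    return a + b

def noop():
    pass
'''

for code, summary in docstring_pairs(SOURCE):
    print(summary)
    print(code)
```

Using only the first docstring line mirrors the short, one-sentence targets that summarization models are usually trained to produce.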

Benchmark Results

Benchmarking is essential for evaluating the performance of any language model, including CodeT5. The key results are shown below.

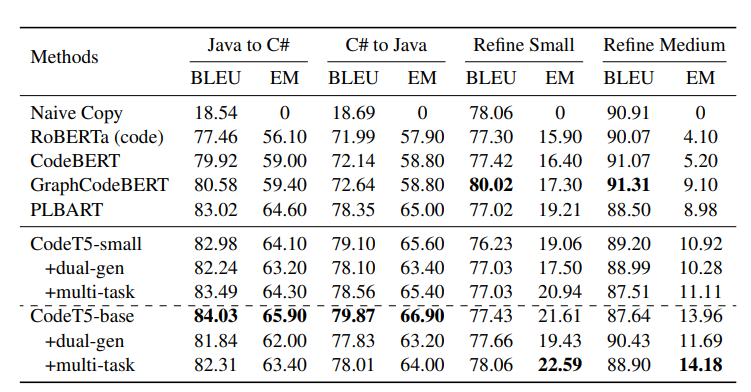

BLEU-4 scores and exact match (EM) accuracies for code translation (Java to C# and C# to Java) and code refinement (small and medium) tasks.
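Exact match (EM) counts a prediction as correct only if it is identical to its reference, and reports the percentage of such predictions. A minimal sketch follows; collapsing whitespace before comparing is our assumption, and official benchmark scripts may normalize differently. `exact_match` is a hypothetical helper.

```python
def exact_match(predictions: list[str], references: list[str]) -> float:
    """Percentage of predictions identical to their reference after collapsing whitespace."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        raise ValueError("need at least one example")

    def normalize(text: str) -> str:
        # Collapse runs of whitespace so formatting differences do not count
        return " ".join(text.split())

    hits = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return 100.0 * hits / len(references)


preds = ["return a + b ;", "int x = 1 ;"]
refs = ["return  a + b ;", "int x = 2 ;"]
print(exact_match(preds, refs))  # 50.0
```

EM is much stricter than BLEU-4: a single wrong token makes the whole prediction count as a miss, which is why EM scores are typically far lower than BLEU scores on the same task.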

Sample Codes

Running the model on a CPU

```python
import torch
from transformers import RobertaTokenizer, T5ForConditionalGeneration

# Load a pre-trained CodeT5 checkpoint and its RoBERTa-style byte-level BPE tokenizer
tokenizer = RobertaTokenizer.from_pretrained('Salesforce/codet5-base')
model = T5ForConditionalGeneration.from_pretrained('Salesforce/codet5-base')

# Set the device to CPU
device = torch.device('cpu')
model.to(device)
model.eval()

# The pre-trained checkpoint fills in masked code spans rather than following
# natural language instructions; <extra_id_0> marks the span to generate
text = "def greet(user): print(f'hello <extra_id_0>!')"
input_ids = tokenizer(text, return_tensors='pt').input_ids.to(device)

with torch.no_grad():
    outputs = model.generate(input_ids, max_length=8)

# Decode the generated code and print it
generated_code = tokenizer.decode(outputs[0], skip_special_tokens=True)
print(generated_code)
```

Running the model on a GPU

```python
import torch
from transformers import RobertaTokenizer, T5ForConditionalGeneration

# CodeT5 checkpoints use a RoBERTa-style byte-level BPE tokenizer;
# codet5-base-multi-sum is CodeT5-base fine-tuned for multilingual code summarization
tokenizer = RobertaTokenizer.from_pretrained('Salesforce/codet5-base')
model = T5ForConditionalGeneration.from_pretrained('Salesforce/codet5-base-multi-sum')

# Use the GPU when one is available, otherwise fall back to the CPU
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
model.to(device)
model.eval()

# Summarize a short Python function
code = "def to_lower(s):\n    return s.lower()"
input_ids = tokenizer(code, return_tensors='pt').input_ids.to(device)

with torch.no_grad():
    outputs = model.generate(input_ids, max_length=20)

# Decode the generated summary and print it
summary = tokenizer.decode(outputs[0], skip_special_tokens=True)
print(summary)
```

Limitations

While CodeT5 has proven to be a highly effective tool for code understanding and generation, it has limitations.

PL-PL tasks

The bimodal pre-training brings consistent improvements for code summarization and generation tasks on both CodeT5-small and CodeT5-base. However, this pre-training task does not help, and sometimes even slightly hurts, performance on PL-PL generation and understanding tasks.

Dataset bias

The datasets used to train the model may encode stereotypes, such as those related to race and gender, from text comments or even from source code elements such as variable, function, and class names. As a result, these social biases may be intrinsically embedded in models trained on them.

Misuse

Because generation models are non-deterministic, CodeT5 may produce vulnerable code that harms the software it is used in, and, if deliberately misused, it could aid the development of more advanced malware.

Other LLMs

PolyCoder

PolyCoder is a deep learning model for multilingual code generation tasks

CodeGeeX

CodeGeeX, a large-scale multilingual code generation model with 13 billion parameters pre-trained

CodeRL

CodeRL is a novel framework for program synthesis tasks that combines pretrained language models (LMs)
