Code LLMs Explained

InCoder

InCoder is a large-scale generative code model that can synthesize and edit programs by infilling masked code. After being trained on permissively licensed code, it can infill any region of code, resulting in improved performance on tasks like type inference and variable renaming. Because of the bidirectional context, the model performs well on challenging tasks such as comment generation in zero-shot settings. On program synthesis benchmarks, it performs similarly to left-to-right models.

An Overview of InCoder

InCoder is a generative model for code infilling and synthesis designed to assist developers in writing and completing code by automatically generating missing or required code segments.

InCoder achieves 82.43% accuracy on CodeXGLUE

InCoder achieved 82.43% accuracy on the CodeXGLUE benchmark and is widely used for code-infilling tasks.

Trained on 159 GB of code in 28 languages

InCoder is trained on a large corpus with a total of 159 GB of code, 52 GB of it in Python, and 57 GB of content from StackOverflow. The code covers 28 programming languages.

Trained on 248 NVIDIA V100 GPUs

INCODER-6.7B was trained on 248 V100 GPUs for 24 days using the PyTorch deep learning framework.

- About Model
- Model Highlights
- Training Details
- Model Types
- Key Results
- Model Features
- Model Tasks
- Fine-tuning
- Benchmark Results
- Sample Codes
- Limitations
- Other LLMs

About Model

InCoder is a generative model for code infilling and synthesis designed to assist developers by generating missing or required code segments. It is a transformer-based language model trained on large source code repositories, which lets it capture the structure and semantics of programming languages. This enables code infilling and synthesis, so developers can prototype ideas quickly, explore alternative implementations, and learn new programming techniques. Because it is trained on many languages, InCoder is not tied to a single one, and it can be integrated into IDEs and code-editing tools to support productivity and code quality across projects and platforms.

Model Highlights

InCoder is a code infilling and synthesis model built on a transformer language model. It is trained on a large dataset of code from open-source repositories, which makes it useful for generating code suggestions.

- InCoder is a unified generative model that can perform program synthesis and editing.

- The authors adopt the recently proposed causal masking objective, which aims to combine the strengths of both causal and masked language models.

- InCoder is trained on a large corpus of permissively licensed code, where code regions are randomly masked and moved to the end of each file, allowing bidirectional code infilling.

- The model is the first large generative code model capable of infilling arbitrary regions of code.

- In a zero-shot setting, the model is evaluated on tasks such as type inference, comment generation, and variable renaming.

- The ability to condition on bidirectional context substantially improves performance on these tasks.

- The model performs comparably to left-to-right-only models pretrained at a similar scale on standard program synthesis benchmarks.

Training Details

Training data

The model was trained on public open-source repositories with a permissive, non-copyleft license (Apache 2.0, MIT, BSD-2, or BSD-3) from GitHub and GitLab, as well as on StackOverflow content. The repositories primarily contained Python and JavaScript but included code in 28 languages overall.

Training Procedure

During training, contiguous token spans are randomly masked in each document. The number of spans is sampled from a Poisson distribution with a mean of one, truncated to the range [1, 256]. Each span's length is sampled uniformly from the length of the document, and if any spans overlap, the set of spans is rejected and resampled.
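The masking procedure above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: it operates on a plain list of tokens, it picks each span's start position uniformly among the valid positions (the text does not say how starts are chosen), and the sentinel strings `<mask:k>` and `<eom>` as well as all function names are placeholders chosen for readability.

```python
import math
import random


def sample_span_count(rng, mean=1.0, low=1, high=256):
    """Draw from Poisson(mean), rejecting values outside [low, high]."""
    while True:
        # Knuth's multiplication method for one Poisson draw
        threshold, k, p = math.exp(-mean), 0, 1.0
        while True:
            p *= rng.random()
            if p <= threshold:
                break
            k += 1
        if low <= k <= high:
            return k


def sample_spans(rng, doc_len):
    """Sample sorted, non-overlapping [start, end) spans; resample the whole set on overlap."""
    while True:
        n = min(sample_span_count(rng), doc_len)
        spans = []
        for _ in range(n):
            length = rng.randint(1, doc_len)               # length uniform over the document length
            start = rng.randrange(doc_len - length + 1)    # assumption: uniform valid start
            spans.append((start, start + length))
        spans.sort()
        if all(a[1] <= b[0] for a, b in zip(spans, spans[1:])):
            return spans


def causal_mask(tokens, rng):
    """Replace each span with a sentinel and append the masked spans at the end."""
    spans = sample_spans(rng, len(tokens))
    body, tail, cursor = [], [], 0
    for k, (start, end) in enumerate(spans):
        sentinel = f"<mask:{k}>"  # placeholder sentinel, not the real vocabulary entry
        body += tokens[cursor:start] + [sentinel]
        tail += [sentinel] + tokens[start:end] + ["<eom>"]
        cursor = end
    body += tokens[cursor:]
    return body + tail, spans


tokens = "def add ( a , b ) : return a + b".split()
masked, spans = causal_mask(tokens, random.Random(0))
print(spans)
print(" ".join(masked))
```

Because the masked spans are moved to the end, a model trained left to right on the transformed sequence sees the code on both sides of a gap before it has to predict the gap's contents.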

Training dataset size

After filtering and deduplication, the data corpus contains 159 GB of code, 52 GB of it in Python, and 57 GB of StackOverflow content.

Training time and resources

INCODER-6.7B was trained on 248 V100 GPUs for 24 days. One epoch on the training data was performed, using each training document exactly once. The per-GPU batch size was 8, with a maximum token sequence length of 2048.
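As a rough sanity check on these figures, the snippet below works out the GPU-day budget and the largest number of tokens that could be processed in one pass over all GPUs. It assumes plain data parallelism with no gradient accumulation, which the text above does not state, so the per-step values are estimates rather than reported numbers.

```python
NUM_GPUS = 248        # V100 GPUs
TRAINING_DAYS = 24
PER_GPU_BATCH = 8     # sequences per GPU
MAX_SEQ_LEN = 2048    # tokens per sequence

gpu_days = NUM_GPUS * TRAINING_DAYS
sequences_per_step = NUM_GPUS * PER_GPU_BATCH          # assumes no gradient accumulation
max_tokens_per_step = sequences_per_step * MAX_SEQ_LEN

print(f"GPU-days: {gpu_days}")                          # 5952
print(f"Sequences per step: {sequences_per_step}")      # 1984
print(f"Max tokens per step: {max_tokens_per_step}")    # 4063232
```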

Model Types

InCoder is a generative model for code infilling and synthesis that has two pre-trained models with different parameter sizes: incoder-6B and incoder-1B. Both models are decoder-only transformer models trained on code using a causal-masked objective, allowing for both code infilling and standard left-to-right code generation.

| Model | Parameters |
| --- | --- |
| incoder-6B | 6.7B |
| incoder-1B | 1.3B |

Key Results

InCoder is a unified generative model that can synthesize code from scratch (via left-to-right generation) or edit existing code blocks (via infilling). The model is trained on a vast corpus of permissively licensed code, where random code regions are masked and shifted to the end of each file. This approach enables InCoder to generate code infillings with bidirectional context, making it the first generative code model capable of infilling arbitrary code regions.

| Task | Dataset | Score |
| --- | --- | --- |
| Single-line infilling (L-R single) | HumanEval | 48.2 |
| Single-line infilling (L-R reranking) | HumanEval | 54.9 |
| Single-line infilling (CM infilling) | HumanEval | 69 |
| Multi-line infilling (L-R single) | HumanEval | 24.9 |
| Multi-line infilling (L-R reranking) | HumanEval | 28.2 |
| Multi-line infilling (CM infilling) | HumanEval | 38.6 |
| Python docstring generation (average BLEU) | CodeXGLUE | 17.15 |
| Code generation (pass@100) | HumanEval | 47 |
| Code generation (pass@1) | MBPP | 19.4 |

Model Features

These technical features make InCoder a powerful tool for software development tasks, as it can assist in writing complex code and prototyping new ideas with little additional training data.

Causal masking objective

InCoder is trained with a causal masking objective: random contiguous token spans are masked out of each document and moved to the end of the file. The model still generates tokens left to right, but it can condition on the code both before and after a masked region. The number of spans per document is drawn from a Poisson distribution, and spans are not allowed to overlap.

Program synthesis

InCoder can synthesize code from a high-level task description, such as natural language input. This task involves understanding the task semantics and generating a corresponding code block to perform the desired task.

Infilling

InCoder can generate code to fill gaps in an existing code block based on the surrounding context and syntax. This task involves inferring the purpose and functionality of the missing code and generating a corresponding code block to fill the gap. InCoder is the first generative code model capable of infilling arbitrary regions of code.
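At inference time, infilling of this kind is usually driven by a prompt that marks the gap with a sentinel and then asks the model to generate the gap's contents. The sketch below builds such a prompt for a single gap and splices a generation back into the document. The sentinel strings `<|mask:k|>` and `<|endofmask|>` are assumed to match the vocabulary of the released checkpoints and are not given in the text above, so verify them against the tokenizer; the helper names are hypothetical, and the "generation" is a hard-coded stand-in rather than real model output.

```python
EOM = "<|endofmask|>"  # assumed end-of-mask marker


def sentinel(k):
    """Assumed sentinel format for the k-th masked region."""
    return f"<|mask:{k}|>"


def build_infill_prompt(left, right):
    """Left context, gap sentinel, right context, then a request to fill gap 0."""
    return left + sentinel(0) + right + sentinel(1) + sentinel(0)


def splice_infill(left, right, generated):
    """Keep the generation up to the end-of-mask marker and stitch the document together."""
    infill = generated.split(EOM, 1)[0]
    return left + infill + right


left = "def count_lines(path):\n    with open(path) as f:\n"
right = "\n    return n\n"
prompt = build_infill_prompt(left, right)

# Stand-in for what a model might generate after the prompt
fake_generation = "        n = sum(1 for _ in f)" + EOM + " trailing text is discarded"
print(splice_infill(left, right, fake_generation))
```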

Model Tasks

InCoder, as a generative model for code infilling and synthesis, can perform a range of tasks to assist developers and programmers during the coding process:

Single-line infilling

InCoder can generate code to fill a single missing line in an existing code block. This task involves infilling the missing line based on the code's surrounding context and syntactic structure.

Multi-line infilling

InCoder can also generate code to fill multiple missing lines in an existing code block. This task involves infilling a sequence of missing lines in a code block based on the context and structure of the surrounding code.

Python Docstring generation

InCoder can generate Python docstrings based on the function signature and surrounding code. This task involves inferring the purpose and functionality of a function and generating a corresponding docstring.

Code generation

InCoder can generate code from a high-level natural language description of a task. This task involves understanding the semantics of the task and translating it into executable code.

Left-to-right reranking

InCoder's left-to-right outputs can be reranked to select the most likely completion. This involves generating multiple candidate outputs and choosing the most probable one given the surrounding context.
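A minimal sketch of reranking, assuming each candidate is substituted into the gap and the completed document is scored as a whole. The `score_fn` argument stands in for a model log-likelihood; `toy_score` below is a hypothetical placeholder that only checks parenthesis balance, so the example runs without a model.

```python
def rerank(left, right, candidates, score_fn):
    """Return the candidate whose completed document scores highest."""
    return max(candidates, key=lambda c: score_fn(left + c + right))


def toy_score(text):
    """Placeholder for a log-likelihood: penalise unbalanced parentheses."""
    depth, penalty = 0, 0
    for ch in text:
        if ch == "(":
            depth += 1
        elif ch == ")":
            if depth == 0:
                penalty += 1   # closing parenthesis with nothing to close
            else:
                depth -= 1
    return -(penalty + depth)  # unclosed parentheses also count against the text


left, right = "total = sum(", ")\n"
candidates = ["x for x in xs)", "(x for x in xs", "x for x in xs"]
best = rerank(left, right, candidates, toy_score)
print(best)
```

With a real model, `score_fn` would return the total (or length-normalised) log probability of the completed document, which is what lets the right-hand context influence the choice even though each candidate was generated from the left context alone.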

Infilling

InCoder can generate code to fill arbitrary gaps in an existing code block. This task involves inferring the purpose and functionality of the missing code and generating a corresponding code block to fill the gap.

Fine-tuning

On CodeXGLUE Python docstring generation, measured by BLEU, InCoder is evaluated in a zero-shot setting with no fine-tuning for docstring generation, yet it approaches the performance of pretrained code models that are fine-tuned on the task's 250K examples. Fine-tuning would allow InCoder models to better condition on natural language instructions and other indications of human intent. The model lays a foundation for future work on supervised infilling and editing via fine-tuning, as well as on iterative decoding, where the model is used to refine its own output. Fine-tuning methods for InCoder will be added to this section.

Benchmark Results

Benchmarking is an important process for evaluating the performance of any language model, including InCoder. The key results are:

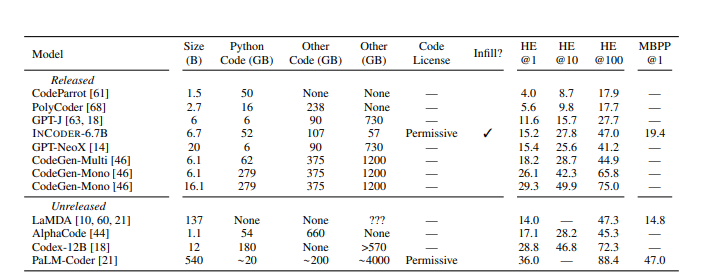

A comparison of the INCODER-6.7B model to published code generation systems using pass rates @ K candidates sampled on the HumanEval and MBPP benchmarks. All models are decoder-only transformer models. A "Permissive" code license indicates models trained on only open-source repositories with non-copyleft licenses. The GPT-J, GPT-NeoX, and CodeGen models are pre-trained on The Pile [26], which contains a portion of GitHub code without any license filtering, including 6 GB of Python. Although the LaMDA model does not train on code repositories, its training corpus includes ∼18 B tokens of code from web documents. The total file size of the LaMDA corpus was not reported, but it contains 2.8 T tokens total. The corpus size for PaLM is estimated using the reported size of the code data and the token counts per section of the corpus.
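For readers unfamiliar with pass rates @ K, the sketch below shows the commonly used unbiased pass@k estimator: given `n` sampled programs for a problem, of which `c` pass the unit tests, it estimates the probability that at least one of `k` randomly chosen samples passes. The text above does not say which estimator produced the reported numbers, so treat this as an illustration of the metric rather than a reproduction of the evaluation.

```python
from math import comb


def pass_at_k(n, c, k):
    """Probability that at least one of k samples drawn from n (c correct) passes."""
    if n - c < k:
        # Fewer than k failing samples exist, so any draw of k contains a pass
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results, k):
    """Average pass@k over problems; results is a list of (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical per-problem counts: (samples drawn, samples that passed)
results = [(10, 0), (10, 5), (10, 10)]
print(mean_pass_at_k(results, k=1))  # 0.5
```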

Sample Codes

Running the model on a CPU

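```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

# Load a pre-trained InCoder checkpoint from the Hugging Face Hub.
# Assumes the `transformers` library and the public facebook/incoder-1B weights.
model_name = "facebook/incoder-1B"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name)

# Set the device to CPU
device = torch.device("cpu")
model.to(device)
model.eval()

# Create input data: a code prefix for the model to continue
prompt = "def count_words(filename):\n"
input_ids = tokenizer(prompt, return_tensors="pt").input_ids.to(device)

# Generate a left-to-right completion without tracking gradients
with torch.no_grad():
    output_ids = model.generate(
        input_ids, max_new_tokens=64, do_sample=True, top_p=0.95, temperature=0.2
    )

# Print the decoded completion
print(tokenizer.decode(output_ids[0], clean_up_tokenization_spaces=False))
```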

Running the model on GPU

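```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

# Load a pre-trained InCoder checkpoint from the Hugging Face Hub.
# Assumes the `transformers` library and the public facebook/incoder-1B weights.
model_name = "facebook/incoder-1B"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name)

# Set the device to GPU if available, else CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model.to(device)
model.eval()

# Create input data: a code prefix for the model to continue
prompt = "def count_words(filename):\n"
input_ids = tokenizer(prompt, return_tensors="pt").input_ids.to(device)

# Generate a left-to-right completion without tracking gradients
with torch.no_grad():
    output_ids = model.generate(
        input_ids, max_new_tokens=64, do_sample=True, top_p=0.95, temperature=0.2
    )

# Print the decoded completion
print(tokenizer.decode(output_ids[0], clean_up_tokenization_spaces=False))
```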

Model Limitations

- InCoder using the causal-masked infill format with a single token (containing min/max) as the masked region (CM infill-token) performs better than using just the left context, but not as well as scoring the entire sequence left to right.

- For Cloze, infilling with the original tokenization increases the performance slightly, but does not match full left-right scoring.

Other LLMs

Polycoder

Polycoder is a deep learning model for multilingual natural language processing tasks


CodeGeeX

CodeGeeX, a large-scale multilingual code generation model with 13 billion parameters pre-trained


CodeRL

CodeRL is a novel framework for program synthesis tasks that combines pretrained language models (LMs)
