GLaM

GLaM (Generalist Language Model) is a trillion-parameter language model introduced by Google. It achieves competitive performance on multiple few-shot learning tasks, comparable to GPT-3, and shows significantly improved learning efficiency across 29 public NLP benchmarks in seven categories. Based on the Transformer architecture, the model is pre-trained on a large corpus of text using self-supervised learning. This pre-training enables the model to learn natural-language patterns and structures, which it can then apply to various downstream tasks.

Model Card

An Overview of GLaM

GLaM is a mixture of experts model, a model that can be thought of as having different submodels, each specialized for different inputs.

GLaM is approximately 7x larger than GPT-3

1.2 Trillion Parameters

The largest GLaM has 1.2 trillion parameters, and it is approximately 7x larger than GPT-3.

Less training cost, less energy consumption

Relatively Energy Efficient

Training GLaM consumes only about one third of the energy used to train GPT-3, and inference requires about half as many computation FLOPs.

Better performance with less computation

Excelled in 29 NLP tasks

The model achieves better overall zero-, one-, and few-shot performance than GPT-3 across 29 NLP tasks.

Introduction to GLaM

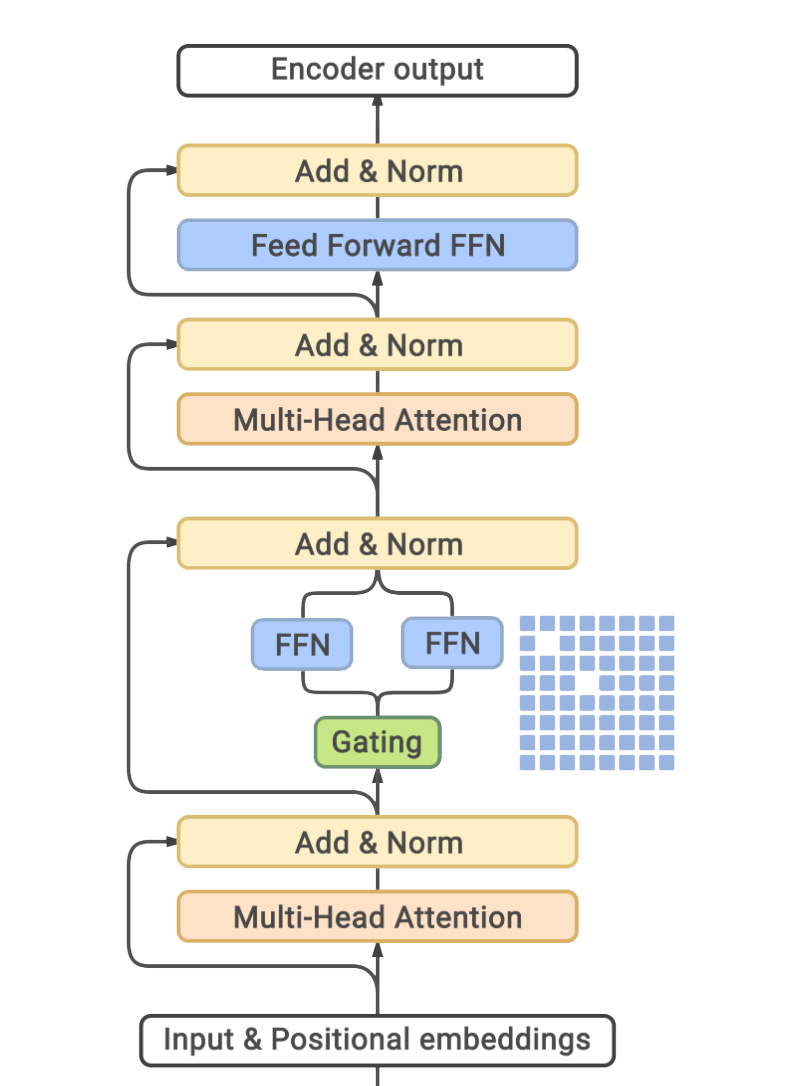

GLaM is a mixture-of-experts (MoE) model, which can be thought of as having different submodels (experts), each specialized for different inputs. The full version of the model has 1.2T total parameters, with 64 experts per MoE layer and 32 MoE layers in total, yet it activates only a subnetwork of about 97B parameters per token prediction during inference. The model has shown significantly improved learning efficiency across 29 public NLP benchmarks in seven categories, including language completion, open-domain question answering, and natural language inference.
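To make "only a subnetwork is activated per token" concrete, here is a minimal sketch of top-2 expert routing for a single token, written in plain Python. GLaM's gating network selects the two best experts for each token; everything else here (the toy experts that just scale their input, the hand-picked gate logits, and the names `top2_route` and `moe_layer`) is a hypothetical illustration, not GLaM's implementation.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]

def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over the gate logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def top2_route(gate_logits: Sequence[float]) -> List[Tuple[int, float]]:
    """Pick the two highest-scoring experts and renormalize their gate weights."""
    probs = softmax(gate_logits)
    top2 = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:2]
    norm = sum(probs[i] for i in top2)
    return [(i, probs[i] / norm) for i in top2]

def moe_layer(token: Vector, gate_logits: Sequence[float],
              experts: Sequence[Callable[[Vector], Vector]]) -> Vector:
    """Weighted sum of the two selected experts' outputs; the other experts never run."""
    out = [0.0] * len(token)
    for idx, weight in top2_route(gate_logits):
        expert_out = experts[idx](token)
        out = [o + weight * e for o, e in zip(out, expert_out)]
    return out

# Four hypothetical "experts": each one scales the input by a different factor.
experts = [lambda x, k=k: [k * v for v in x] for k in (1.0, 2.0, 3.0, 4.0)]
token = [1.0, -1.0]
gate_logits = [0.1, 2.0, 0.3, 2.0]  # experts 1 and 3 score highest
print(top2_route(gate_logits))
print(moe_layer(token, gate_logits, experts))
```

In a real MoE layer the gate logits come from a learned projection of the token's hidden state and the experts are feed-forward networks, but the compute-saving idea is the same: per token, only two of the 64 experts in each MoE layer do any work.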

Model highlights

Several highlights distinguish GLaM from other large language models:

- GLaM is a family of language models that uses a sparsely activated mixture-of-experts architecture.

- GLaM scales the model capacity while incurring substantially less training cost than dense variants.

- The largest GLaM has 1.2 trillion parameters, approximately 7x larger than GPT-3.

- Training GLaM consumes only about 1/3 of the energy used to train GPT-3.

- GLaM requires about half the computation FLOPs of GPT-3 for inference.

- GLaM achieves better overall zero-, one-, and few-shot performance than GPT-3 across 29 NLP tasks.
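The size and inference-cost claims can be sanity-checked with back-of-the-envelope arithmetic. This sketch assumes GPT-3's published size of 175B parameters and the common rule of thumb that a forward pass costs roughly 2 FLOPs per active parameter per token; both are approximations supplied here, not figures taken from the GLaM paper.

```python
GLAM_TOTAL = 1.2e12   # total parameters in the largest GLaM
GLAM_ACTIVE = 97e9    # parameters activated per token (about 97B)
GPT3_DENSE = 175e9    # assumption: GPT-3 is dense, so all 175B parameters are used per token

size_ratio = GLAM_TOTAL / GPT3_DENSE
active_fraction = GLAM_ACTIVE / GLAM_TOTAL
# Rule of thumb (assumption): forward-pass FLOPs per token ~ 2 * active parameters.
flops_ratio = (2 * GLAM_ACTIVE) / (2 * GPT3_DENSE)

print(f"GLaM / GPT-3 total size: {size_ratio:.1f}x")
print(f"Fraction of GLaM active per token: {active_fraction:.1%}")
print(f"Inference FLOPs per token vs GPT-3: {flops_ratio:.2f}")
```

The results (about 6.9x the size, roughly 8% of parameters active per token, and about 0.55x the per-token FLOPs) line up with the "approximately 7x larger" and "about half the FLOPs" figures above.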

Training Details

Training data

Most of the training data comes from web pages, which range from professional writing to low-quality comment and forum pages. To ensure the quality of the web pages in the dataset, the GLaM team built its own text-quality classifier, which identifies high-quality web pages within a larger raw corpus.
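As a rough illustration of how a text-quality classifier can filter a raw web corpus, the sketch below trains a tiny multinomial Naive Bayes model on a few hand-labeled snippets and keeps only pages it scores as more likely high quality. The training snippets, the 0.5 threshold, and the function names are hypothetical; the GLaM team's actual classifier and features are not reproduced here.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple

def tokenize(text: str) -> List[str]:
    return text.lower().split()

def train_nb(examples: List[Tuple[str, int]]) -> Tuple[Dict[int, Counter], Dict[int, int], set]:
    """Count words per class (1 = high quality, 0 = low quality)."""
    counts = {0: Counter(), 1: Counter()}
    docs = {0: 0, 1: 0}
    vocab = set()
    for text, label in examples:
        words = tokenize(text)
        counts[label].update(words)
        docs[label] += 1
        vocab.update(words)
    return counts, docs, vocab

def prob_high_quality(text: str, counts, docs, vocab) -> float:
    """Posterior P(high quality | text) with add-one (Laplace) smoothing."""
    log_scores = {}
    total_docs = docs[0] + docs[1]
    for label in (0, 1):
        total_words = sum(counts[label].values())
        score = math.log(docs[label] / total_docs)  # class prior
        for w in tokenize(text):
            score += math.log((counts[label][w] + 1) / (total_words + len(vocab)))
        log_scores[label] = score
    # Convert the two log-scores into a probability for class 1.
    m = max(log_scores.values())
    e0, e1 = (math.exp(log_scores[k] - m) for k in (0, 1))
    return e1 / (e0 + e1)

# Hypothetical labeled snippets used to train the filter.
training = [
    ("the study reports results on several benchmarks", 1),
    ("this article explains the history of the city", 1),
    ("lol click here free prize click now", 0),
    ("buy now cheap deals click here", 0),
]
model = train_nb(training)
pages = ["the article reports benchmark results", "click here for free deals now"]
kept = [p for p in pages if prob_high_quality(p, *model) > 0.5]
print(kept)
```

A production filter would be trained on far more data and might sample pages by score rather than applying a hard cutoff, but the principle is the same: learn what curated text looks like, then score the raw crawl against it.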

Training Procedure

GLaM is trained with a distributed approach across many accelerator chips (Google Cloud TPUs), which makes training faster and more efficient. Training is iterative: the model's parameters are updated after each step to minimize the loss function. The trained model is then evaluated on various downstream tasks to measure its performance and generalization ability.
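The distributed, iterative procedure described above is commonly implemented as synchronous data parallelism: each worker computes gradients on its own shard of the data, the gradients are averaged (an all-reduce), and every replica applies the same update. The sketch below simulates this on one machine for a one-parameter least-squares model. It illustrates the general technique only; it is not GLaM's actual training setup, and the function names are hypothetical.

```python
from typing import List, Tuple

Example = Tuple[float, float]  # (input x, target y)

def local_gradient(w: float, shard: List[Example]) -> float:
    """Gradient of the mean of 0.5 * (w*x - y)^2 over one worker's shard."""
    return sum((w * x - y) * x for x, y in shard) / len(shard)

def all_reduce_mean(grads: List[float]) -> float:
    """Stand-in for an all-reduce: every worker ends up with the average gradient."""
    return sum(grads) / len(grads)

def train_data_parallel(data: List[Example], num_workers: int,
                        lr: float = 0.1, steps: int = 200) -> float:
    # Split the dataset into one shard per simulated worker (equal-sized here).
    shards = [data[i::num_workers] for i in range(num_workers)]
    w = 0.0  # every replica starts from the same initial weight
    for _ in range(steps):
        grads = [local_gradient(w, shard) for shard in shards]  # parallel in practice
        w -= lr * all_reduce_mean(grads)  # identical update on every replica
    return w

# Targets follow y = 3x, so training should recover w close to 3.
data = [(x, 3.0 * x) for x in (0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0)]
print(train_data_parallel(data, num_workers=4))
```

Because the shards are equal in size, averaging the per-shard gradients gives exactly the full-batch gradient, so the result does not depend on how many workers are used.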

Training dataset size

The training dataset of the GLaM model contains 1.6 trillion tokens, making it significantly larger than the training sets of most other language models available at the time. According to the paper's authors, the size of this dataset is critical for ensuring that the model generalizes well across a wide range of natural language use cases.

Training time and resources

GLaM was trained with a distributed approach on Google's Cloud TPU v4 accelerators rather than on GPUs; the largest model used a cluster of up to 1,024 TPU v4 chips, hardware designed for large-scale deep learning workloads.

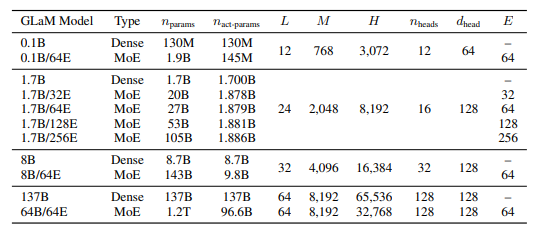

Model Types

To study the behavior of MoE and dense models on the same training data, several GLaM variants were trained. The accompanying table shows the hyperparameter settings of GLaM models at various scales, ranging from 130 million to 1.2 trillion parameters.

Business Applications

GLaM shows its strongest results on language modeling and question answering. These strengths make it a candidate for business applications such as:

| Language Modeling | Multilingual NLP |
| --- | --- |
| Text completion and prediction | Multilingual customer support |
| Sentiment analysis | Multilingual chatbots and virtual assistants |
| Text classification | Multilingual sentiment analysis |
| Language translation | Multilingual social media monitoring |
| Content generation and summarization | Multilingual search engines |
| Speech recognition and transcription | Multilingual voice assistants and speech recognition |
| Personalization and recommendation systems | Multilingual voice assistants and speech recognition |
| Information retrieval and search engines | Multilingual text summarization and classification |
| Fraud detection and spam filtering | Multilingual data analysis and visualization |

Model Features

GLaM (Generalist Language Model) incorporates several techniques, most notably a sparsely activated mixture-of-experts architecture, that make it more efficient and scalable than conventional dense models.

Licensing

GLaM is not an open-source model. Google has not publicly released its weights under Apache License 2.0 or any other license, so it cannot be downloaded, modified, or redistributed by outside users.

Level of customization

Like other pre-trained language models, GLaM can in principle be fine-tuned for specific natural language processing tasks using transfer learning, by continuing training on task-specific data and labels. In practice, this is only possible for teams with access to the model, because its weights have not been publicly released.

Available pre-trained model checkpoints

No pre-trained GLaM checkpoints are publicly available. The paper describes a family of GLaM variants ranging from 130 million to 1.2 trillion parameters (see Model Types), but these checkpoints have not been released for download or fine-tuning. Architectures such as GPT-2, BART, T5, RoBERTa, DistilBERT, and ELECTRA are separate models, not GLaM checkpoints.

Model Tasks

Machine translation

The GLaM model can also be used for machine translation, translating text from one language to another. This complex task involves many different components, but the GLaM model can be a useful part of the overall system.

Text completion

This task involves predicting the next word or words in a sentence or phrase. The GLaM model can be trained to suggest the most likely next word based on the context of the sentence.
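Next-word prediction boils down to scoring candidate words given the context and picking (or sampling) from the resulting distribution. The toy sketch below uses bigram counts in place of a neural network, so it only illustrates the greedy decoding step; the corpus and the function names are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List

def build_bigrams(corpus: List[str]) -> Dict[str, Counter]:
    """Count which word follows which in a small corpus."""
    follows: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows

def complete(prompt: str, follows: Dict[str, Counter], max_new_words: int = 3) -> str:
    """Greedy decoding: repeatedly append the most likely next word."""
    words = prompt.lower().split()
    for _ in range(max_new_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # no continuation was ever seen after this word
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

corpus = [
    "the model predicts the next word",
    "the model learns language patterns",
    "a model predicts the answer",
]
follows = build_bigrams(corpus)
print(complete("the", follows))
```

A language model like GLaM conditions on the whole context rather than just the previous word, and generation often samples from the distribution instead of always taking the top candidate.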

Dialogue generation

This task involves generating a natural-sounding dialogue between two or more speakers. The GLaM model can be trained to generate responses that are appropriate to the context of the conversation.

Natural language inference

In this task, the GLaM model is used to determine whether a statement logically follows from another statement. GLaM can be useful for tasks like fact-checking or automated reasoning.
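GLaM's benchmark results come from zero-, one-, and few-shot prompting rather than task-specific fine-tuning, so an inference question is usually posed to the model as text. The sketch below assembles a few-shot NLI prompt; the template wording, the demonstration pairs, and the `build_nli_prompt` name are hypothetical and not the exact format used in GLaM's evaluation.

```python
from typing import List, Tuple

Demo = Tuple[str, str, str]  # (premise, hypothesis, label)

def format_example(premise: str, hypothesis: str, label: str = "") -> str:
    """Render one NLI example; the label is left blank for the query."""
    text = f"Premise: {premise}\nHypothesis: {hypothesis}\nAnswer:"
    return f"{text} {label}" if label else text

def build_nli_prompt(demos: List[Demo], premise: str, hypothesis: str) -> str:
    """Concatenate labeled demonstrations followed by the unlabeled query."""
    parts = [format_example(p, h, y) for p, h, y in demos]
    parts.append(format_example(premise, hypothesis))
    return "\n\n".join(parts)

demos = [
    ("A man is playing a guitar on stage.", "A person is making music.", "true"),
    ("The store is closed on Sundays.", "The store is open every day.", "false"),
]
prompt = build_nli_prompt(demos, "Two children are reading books in a library.",
                          "Children are reading.")
print(prompt)
```

The model would then be asked to score the continuations " true" and " false" after the final "Answer:", and the higher-likelihood option is taken as the prediction.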

Document clustering

In this task, the GLaM model groups similar documents based on their content. This model can be useful for tasks like information retrieval or text analysis.
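A common way to cluster documents with a language model is to map each document to an embedding vector and group vectors by cosine similarity. In the sketch below the embeddings are hand-written toy vectors (in practice they would come from the model), and the greedy threshold clustering is a simple stand-in for algorithms such as k-means; the threshold value is an assumption.

```python
import math
from typing import List, Sequence

def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def greedy_cluster(vectors: List[Sequence[float]], threshold: float = 0.8) -> List[List[int]]:
    """Put each document in the first cluster whose seed is similar enough, else start a new one."""
    clusters: List[List[int]] = []
    for i, vec in enumerate(vectors):
        for cluster in clusters:
            if cosine(vectors[cluster[0]], vec) >= threshold:
                cluster.append(i)
                break
        else:
            clusters.append([i])
    return clusters

# Hypothetical embeddings: documents 0 and 2 are about sports, 1 and 3 about finance.
embeddings = [
    [0.9, 0.1, 0.0],
    [0.0, 0.2, 0.9],
    [0.8, 0.2, 0.1],
    [0.1, 0.1, 0.95],
]
print(greedy_cluster(embeddings))
```

Raising the threshold produces more, tighter clusters; lowering it merges loosely related documents.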

Getting Started

- Install Python 3.x: The examples in this guide require Python 3. You can download the latest version of Python from the official website.

- Install PyTorch: The Hugging Face sample code in this guide runs on the PyTorch library. You can install PyTorch by following the instructions on the PyTorch website. Be sure to install the version that matches your system configuration (CPU-only or a specific CUDA version).

- Install Hugging Face Transformers: The Transformers library provides a high-level interface for working with pre-trained language models. You can install it using pip (`pip install transformers`). Note that GLaM itself is not part of the Transformers library.

- Choose a pre-trained model: Google has not publicly released GLaM's weights, so no GLaM checkpoint can be downloaded from the Hugging Face model hub. To try the same workflow, download an openly available causal language model from the hub instead, such as EleutherAI/gpt-neo-1.3B.

- Test your installation: Once everything is installed, test it by running some sample code.

Fine-tuning

Several techniques can be used to adapt the Generalist Language Model (GLaM), depending on the particular purpose and data. Here are a few typical techniques for fine-tuning GLaM:

Supervised fine-tuning

With this technique, GLaM is trained on a task-specific dataset of labeled examples. The model's parameters are adjusted to reduce the loss function on the task-specific data, specializing the model for that task.

Transfer learning

The model is initially pre-trained on a huge corpus of text data, then fine-tuned on a smaller task-specific dataset, improving performance. This method is very beneficial when there is a dearth of task-specific data.

Domain adaptation

Domain adaptation strategies can improve GLaM by customizing it for a different domain or language, typically by fine-tuning the model on a smaller dataset from the new domain or language.

Semi-supervised learning

The model is fine-tuned using a small amount of labeled data combined with a larger amount of unlabeled data. It is first trained on the labeled data and then used to predict labels for the unlabeled data; the full dataset, including these predicted (pseudo) labels, is then used to fine-tune the model.
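The pseudo-labeling loop can be sketched with any classifier. Here a one-dimensional nearest-centroid classifier stands in for the language model, and the data and function names are hypothetical; the point is the three steps: train on the labeled data, label the unlabeled pool, then retrain on the combined set.

```python
from statistics import mean
from typing import Dict, List, Tuple

Labeled = List[Tuple[float, str]]

def fit_centroids(labeled: Labeled) -> Dict[str, float]:
    """'Train' by computing the mean feature value of each class."""
    by_label: Dict[str, List[float]] = {}
    for x, y in labeled:
        by_label.setdefault(y, []).append(x)
    return {y: mean(xs) for y, xs in by_label.items()}

def predict(centroids: Dict[str, float], x: float) -> str:
    """Assign the label of the nearest class centroid."""
    return min(centroids, key=lambda y: abs(centroids[y] - x))

def self_train(labeled: Labeled, unlabeled: List[float]) -> Dict[str, float]:
    # Step 1: train on the small labeled set.
    centroids = fit_centroids(labeled)
    # Step 2: use that model to pseudo-label the unlabeled pool.
    pseudo = [(x, predict(centroids, x)) for x in unlabeled]
    # Step 3: retrain on the labeled and pseudo-labeled data together.
    return fit_centroids(labeled + pseudo)

labeled = [(0.0, "neg"), (10.0, "pos")]
unlabeled = [1.0, 2.0, 3.0, 7.0, 8.0, 9.0]
print(self_train(labeled, unlabeled))
```

Many self-training setups keep only pseudo-labels above a confidence threshold, since confident mistakes otherwise get reinforced in the retraining step.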

Multi-task learning

This fine-tuning method trains the model on several related tasks simultaneously. Learning from multiple sources of supervision can help the model perform better on each individual task.
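One common way to implement multi-task training is to mix examples from each task into a single training stream, sampling each task in proportion to its dataset size, and to optimize a weighted sum of the per-task losses. The sketch below shows both pieces; the task names, dataset sizes, loss values, and the choice of using the mixing rates as loss weights are hypothetical.

```python
from typing import Dict

def mixing_rates(dataset_sizes: Dict[str, int]) -> Dict[str, float]:
    """Examples-proportional mixing: sample each task in proportion to its size."""
    total = sum(dataset_sizes.values())
    return {task: n / total for task, n in dataset_sizes.items()}

def multitask_loss(task_losses: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted sum of per-task losses; one backward pass would use this total."""
    return sum(weights[task] * loss for task, loss in task_losses.items())

sizes = {"nli": 6000, "qa": 3000, "summarization": 1000}
rates = mixing_rates(sizes)
print(rates)
loss = multitask_loss({"nli": 0.5, "qa": 1.0, "summarization": 2.0}, rates)
print(loss)
```

Proportional mixing can let a large task crowd out small ones; capping each task's size or tuning the weights by hand are common alternatives.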

Benchmarking

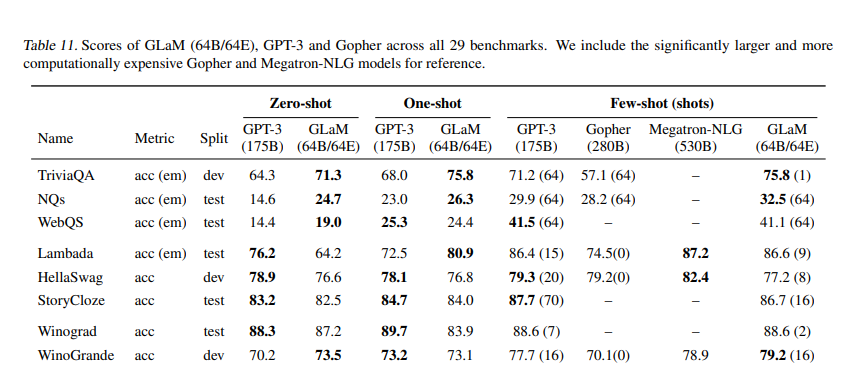

Table 1 shows scores of GLaM (64B/64E), GPT-3, and Gopher across all 29 benchmarks. The significantly larger and more computationally expensive Gopher and Megatron-NLG models are included for reference.

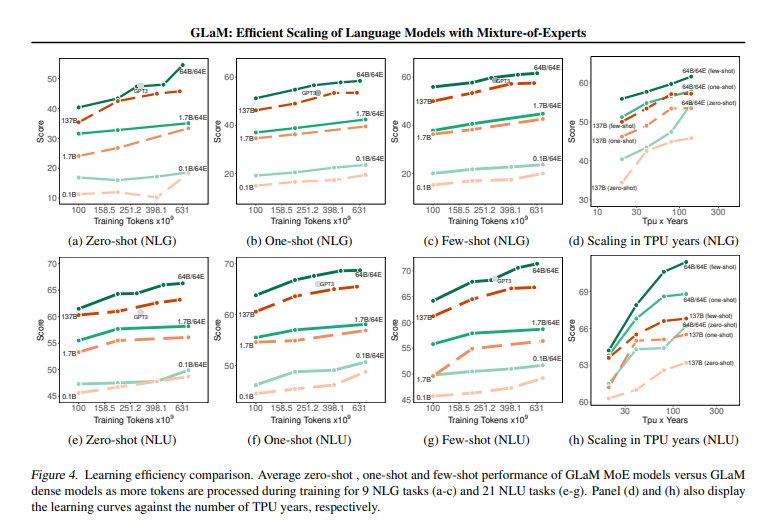

Table 2 shows a learning-efficiency comparison of models with MoE layers.

Sample Code 1

Running the model on a CPU

```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

# GLaM weights are not published on the Hugging Face Hub, so an openly
# available causal language model is used here as a stand-in.
model_name = "EleutherAI/gpt-neo-1.3B"

# Load the model and tokenizer on the CPU
device = torch.device("cpu")
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name).to(device)
model.eval()

# Define and tokenize the input text
input_text = "This is an example input sentence."
input_ids = tokenizer(input_text, return_tensors="pt").input_ids.to(device)

# Run the input through the model without tracking gradients
with torch.no_grad():
    outputs = model(input_ids)

# Logits over the vocabulary for every input position: (batch, seq_len, vocab_size)
logits = outputs.logits
print(logits.shape)
```

Sample Code 2

Running the model on a GPU

```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

# Load the tokenizer and model (GPT-Neo 1.3B stands in for GLaM, whose weights are not public)
tokenizer = AutoTokenizer.from_pretrained("EleutherAI/gpt-neo-1.3B")
model = AutoModelForCausalLM.from_pretrained("EleutherAI/gpt-neo-1.3B")

# Move the model to the GPU
device = torch.device("cuda")
model.to(device)
model.eval()

# Generate some text (the attention mask is passed along with the input IDs)
prompt = "Once upon a time"
inputs = tokenizer(prompt, return_tensors="pt").to(device)
output = model.generate(**inputs, max_length=50, do_sample=True,
                        pad_token_id=tokenizer.eos_token_id)
generated_text = tokenizer.decode(output[0], skip_special_tokens=True)

# Print the generated text
print(generated_text)
```

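Here is some sample code for running a causal language model on a GPU in half precision (FP16), using the same GPT-Neo model as the previous sample because GLaM's weights are not publicly available: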

```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

# Load the tokenizer, and load the model weights directly in float16
tokenizer = AutoTokenizer.from_pretrained("EleutherAI/gpt-neo-1.3B")
model = AutoModelForCausalLM.from_pretrained("EleutherAI/gpt-neo-1.3B",
                                             torch_dtype=torch.float16)

# Move the model to the GPU (FP16 inference is intended for CUDA devices)
device = torch.device("cuda")
model.to(device)
model.eval()

# Token IDs must stay integers; only the weights and activations are FP16
prompt = "Once upon a time"
inputs = tokenizer(prompt, return_tensors="pt").to(device)
output = model.generate(**inputs, max_length=50, do_sample=True,
                        pad_token_id=tokenizer.eos_token_id)

# generate() returns integer token IDs, so they can be decoded directly
generated_text = tokenizer.decode(output[0], skip_special_tokens=True)

# Print the generated text
print(generated_text)
```

Limitations

While GLaM is a powerful model for natural language processing tasks, it also has several limitations:

Training time and resource requirements

Fine-tuning a language model as large as GLaM is computationally expensive and time-consuming, requiring significant hardware resources.

Domain-specific knowledge

While GLaM is a generalist language model that performs well on a wide range of natural language processing tasks, it may not capture domain-specific knowledge as well as models trained specifically for a particular task or domain.

Bias

Language models can often pick up on biases in the training data, which can lead to biased or unfair predictions.

Lack of explainability

Language models like GLaM are often seen as "black boxes," making it difficult to understand how they arrived at a particular prediction or decision.

Limited ability to handle multimodal inputs

Language models like GLaM are primarily designed to handle text-based inputs and may struggle with tasks that require multimodal inputs (e.g., image captioning or video summarization).