LLMs Explained

Transformer

The Transformer is a neural network architecture that has transformed natural language processing (NLP). It was introduced by Vaswani et al. in the seminal 2017 paper "Attention Is All You Need." Since then, Transformer models have moved to the forefront of many NLP tasks, including machine translation, language modeling, and text generation. They are among the most recent and powerful machine learning models and are driving significant progress in the field, prompting some to call this the "transformer AI" era.

Model Card


An Overview of Transformer

With superior performance and the ability to model complex relationships between tokens in input sequences, the Transformer model has become a critical component in many NLP applications and prompted additional research and development of other models based on the Transformer architecture.

Model downloaded over 3 million times


The Transformer model has been downloaded over 3 million times and has over 10,000 stars on GitHub.

Largest model with 175B parameters

One of the largest Transformer-based models to date, GPT-3, has 175 billion parameters and was trained on a dataset of over 570 GB of text.

41.8 BLEU score in language translation


The Transformer achieves a BLEU score of 41.8 on the WMT 2014 English-to-French translation task after only 3.5 days of training on eight GPUs.


- Introduction
- Business Applications
- Model Features
- Model Tasks
- Getting Started
- Fine-tuning
- Benchmarking
- Sample Codes
- Limitations
- Other LLMs

Introduction to Transformer

The Transformer differs from traditional sequence-to-sequence NLP models such as recurrent neural networks (RNNs) and long short-term memory (LSTM) networks. Instead of processing tokens one at a time, it uses a self-attention mechanism that attends to all input positions simultaneously, so a whole sequence can be processed in parallel. The training data used in the paper consisted of about 4.5 million sentence pairs for English-German and 36 million sentence pairs for English-French.

About Model

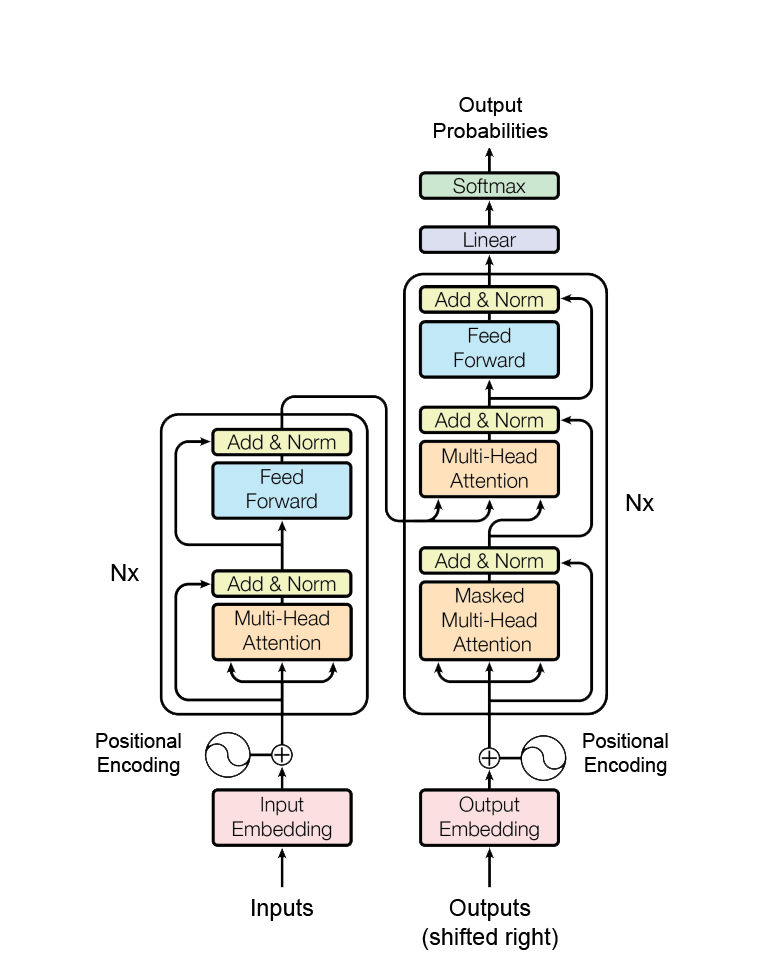

The Transformer's architecture is based on self-attention, which enables the model to process input sequences in parallel and capture long-range dependencies between tokens. In each layer, self-attention computes a new representation for every token as a weighted sum of (linearly projected) representations of all input tokens, with the weights computed by a learned attention function that compares each token's query vector with every token's key vector.
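As a minimal NumPy sketch of the weighted sum described above, the block below implements scaled dot-product attention, softmax(QKᵀ / √d_k)V, the formulation used in "Attention Is All You Need". It shows a single attention head only; the projection matrices `w_q`, `w_k`, and `w_v` are random stand-ins for learned weights, and the toy sizes are arbitrary.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along `axis`."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(q: np.ndarray, k: np.ndarray, v: np.ndarray):
    """Return (output, weights) for queries q (n_q, d_k), keys k (n_k, d_k), values v (n_k, d_v)."""
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)     # similarity of every query to every key: (n_q, n_k)
    weights = softmax(scores, axis=-1)  # attention weights; each row sums to 1
    return weights @ v, weights         # weighted sum of the value vectors: (n_q, d_v)

# Self-attention: queries, keys, and values are all projections of the same tokens.
rng = np.random.default_rng(0)
n_tokens, d_model, d_k = 4, 8, 8
x = rng.normal(size=(n_tokens, d_model))                             # stand-in token embeddings
w_q, w_k, w_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))  # stand-ins for learned weights

out, attn = scaled_dot_product_attention(x @ w_q, x @ w_k, x @ w_v)
print(out.shape, attn.shape)  # (4, 8) (4, 4)
```

Each row of `attn` sums to 1, so every output vector is a weighted average of the value vectors, and all positions are handled by one matrix product rather than a step-by-step loop. The full model runs several such heads in parallel (multi-head attention) and concatenates their outputs.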

The self-attention mechanism enables the Transformer to attend to all positions in the input sequence simultaneously, allowing it to capture both local and global token dependencies. The Transformer also employs residual connections and layer normalization to stabilize training and mitigate the vanishing gradient problem.
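The residual connection and layer normalization wrap every sub-layer; the paper writes the result as LayerNorm(x + Sublayer(x)). The block below is a minimal NumPy sketch of that wrapper, using the position-wise feed-forward network as the sub-layer. The weights are random stand-ins and `d_ff = 32` is an arbitrary toy size, not a value from the paper.

```python
import numpy as np

def layer_norm(x, gamma, beta, eps=1e-6):
    """Normalize each token vector to zero mean and unit variance, then scale and shift."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return gamma * (x - mean) / np.sqrt(var + eps) + beta

def feed_forward(x, w1, b1, w2, b2):
    """Position-wise feed-forward sub-layer: max(0, x W1 + b1) W2 + b2."""
    return np.maximum(0.0, x @ w1 + b1) @ w2 + b2

def residual_block(x, sublayer, gamma, beta):
    """Residual connection followed by layer normalization: LayerNorm(x + Sublayer(x))."""
    return layer_norm(x + sublayer(x), gamma, beta)

rng = np.random.default_rng(0)
n_tokens, d_model, d_ff = 4, 8, 32                  # toy sizes
x = rng.normal(size=(n_tokens, d_model))
w1, b1 = 0.1 * rng.normal(size=(d_model, d_ff)), np.zeros(d_ff)
w2, b2 = 0.1 * rng.normal(size=(d_ff, d_model)), np.zeros(d_model)
gamma, beta = np.ones(d_model), np.zeros(d_model)   # learnable in a real model

y = residual_block(x, lambda h: feed_forward(h, w1, b1, w2, b2), gamma, beta)
print(y.shape)  # (4, 8): same shape as the input, so blocks can be stacked
```

Because the input is added back before normalization, the gradient always has a direct path through the identity term, which is what helps deep stacks of these blocks train reliably.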

Model Type: Deep Learning Model

Language(s) (NLP): English, German and French

License: Apache 2.0

Model highlights

Following are the key highlights of the Transformer model.

- Superior performance in sequence transduction tasks compared to other models

- More parallelizable, allowing for faster training

- Significantly less time required for training compared to other models

- Generalizes well to other tasks

- Achieves state-of-the-art performance on machine translation tasks

Training Details

Training data

The Transformer was trained on two large-scale machine translation datasets: WMT 2014 English-German and WMT 2014 English-French. This large and diverse training data exposed the model to a wide range of language structures and contexts, helping it achieve state-of-the-art machine translation results.

Training Procedure

The training batches were created by grouping sentence pairs based on approximate sequence length, with each batch containing approximately 25,000 source tokens and 25,000 target tokens. The training process used backpropagation and Adam optimization with a learning rate warmup period.
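The warmup period can be illustrated with the learning-rate schedule given in the original paper, lrate = d_model^-0.5 · min(step^-0.5, step · warmup_steps^-1.5), which the authors combined with Adam (β1 = 0.9, β2 = 0.98, ε = 10^-9) and warmup_steps = 4000. The function below is a plain-Python sketch of that formula; its name and the step values printed are illustrative.

```python
def transformer_lr(step: int, d_model: int = 512, warmup_steps: int = 4000) -> float:
    """Learning rate at training step `step` (counted from 1).

    Grows linearly for the first `warmup_steps` steps, then decays in
    proportion to the inverse square root of the step number.
    """
    step = max(step, 1)  # guard against 0 ** -0.5 if steps are counted from 0
    return d_model ** -0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)

for s in (1, 1000, 4000, 16000, 100000):
    print(f"step {s:>6}: lr = {transformer_lr(s):.2e}")
```

The rate peaks at step 4000 and then decays with the inverse square root of the step, so at step 16,000 it is half its peak value.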

Training dataset size

The English-German dataset used for training the Transformer model consisted of 4.5 million sentence pairs, while the English-French dataset was significantly larger, consisting of 36 million sentence pairs. The sentences were encoded using byte-pair encoding and word-piece vocabulary, respectively.
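Byte-pair encoding builds a subword vocabulary by repeatedly merging the most frequent pair of adjacent symbols. The toy learner below is a simplified sketch of that idea, not the tokenizer used for the paper's experiments: the word counts are made up, ties are broken by first occurrence, and details found in production tokenizers (such as end-of-word markers) are omitted.

```python
from __future__ import annotations

from collections import Counter

def learn_bpe(word_counts: dict[str, int], num_merges: int) -> list[tuple[str, str]]:
    """Learn byte-pair-encoding merge rules from a word-frequency table.

    Each word starts as a sequence of characters; at every step the most
    frequent adjacent symbol pair is merged into a single new symbol.
    """
    vocab = {tuple(word): count for word, count in word_counts.items()}
    merges = []
    for _ in range(num_merges):
        # Count every adjacent symbol pair, weighted by word frequency.
        pairs = Counter()
        for symbols, count in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += count
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # first pair with the highest count
        merges.append(best)
        # Rewrite every word with the chosen pair fused into one symbol.
        new_vocab = {}
        for symbols, count in vocab.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            new_vocab[tuple(merged)] = count
        vocab = new_vocab
    return merges

corpus = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
print(learn_bpe(corpus, 3))  # [('e', 's'), ('es', 't'), ('l', 'o')]
```

Frequent fragments such as "est" become single vocabulary entries, while rare words can still be spelled out from smaller pieces.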

Training time and resources

The paper reports the training setup: the models were trained on a single machine with 8 NVIDIA P100 GPUs. Each training step took about 0.4 seconds for the base model, which was trained for 100,000 steps (12 hours), and about 1.0 second for the big model, which was trained for 300,000 steps (3.5 days).

Model Types

The paper describes two configurations of the Transformer, trained on the same datasets, that trade model size for accuracy. Here are the two variations:

| Model | Parameters | Highlights |
| --- | --- | --- |
| Transformer Base model | 65 million | 6 encoder and 6 decoder layers, with a hidden size of 512 and 8 attention heads |
| Transformer Big model | 213 million | 6 encoder and 6 decoder layers, with a hidden size of 1024 and 16 attention heads |

Business Applications

Transformer-based models perform strongly on tasks such as fine-grained image classification and multilingual NLP. You can use them to build business applications for use cases such as:

| Fine-Grained Image Classification | Multilingual NLP |
| --- | --- |
| Product categorization in e-commerce platforms | Multilingual customer support |
| Quality control in manufacturing industries | Multilingual chatbots and virtual assistants |
| Detection of plant diseases in agriculture | Multilingual sentiment analysis |
| Identification of bird species in wildlife conservation | Multilingual social media monitoring |
| Classification of skin diseases in healthcare | Multilingual search engines |
| Identification of car models in the automotive industry | Multilingual voice assistants and speech recognition |
| Classification of geological formations in mining | |
| Identification of defects in materials during inspection processes | Multilingual text summarization and classification |
| Classification of food dishes in restaurant management | Multilingual data analysis and visualization |

Model Features

The Hugging Face Transformers library provides several features that make Transformer models easier to use, adapt, and scale than building them from scratch. Here are some of its key features:

Language modeling

The library includes pre-trained models for unidirectional and bidirectional language modeling.

Transfer learning

The library enables transfer learning by letting users load pre-trained models and adapt them to specific NLP tasks.

Pre-trained models

The Transformers library provides access to hundreds of pre-trained Transformer models for a wide range of NLP tasks.

Fine-tuning

The library supports fine-tuning pre-trained models on custom datasets for specific NLP tasks.

Tokenization

The library provides a range of tokenization methods for different models and languages.

Custom architectures

The library allows users to implement and train custom Transformer architectures easily.

Model Tasks

Text classification

This task involves assigning predefined categories or labels to a given text. This can be useful for tasks like spam filtering, sentiment analysis, and topic categorization.

Named entity recognition (NER)

NER is the task of identifying and extracting entities such as people, organizations, and locations from a given text. This can be useful for information extraction, search engines, and machine translation.

Question answering

This task involves answering questions posed in natural language. This can be useful for chatbots, customer service, and search engines.

Language modeling

Language modeling involves predicting the probability of the next word in a sequence given the previous words. This is the core task of many natural language processing applications, including speech recognition, machine translation, and text generation.
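A Transformer language model estimates P(next word | previous words) with a neural network, but the underlying factorization (the chain rule of probability) can be shown with a count-based bigram model. The sketch below is a deliberately simple stand-in: it conditions only on the single previous word, uses unsmoothed counts, and trains on a made-up three-sentence corpus.

```python
import math
from collections import Counter, defaultdict

def train_bigram(sentences):
    """Count word-to-next-word transitions; <s> and </s> mark sentence boundaries."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_prob(counts, prev, word):
    """Maximum-likelihood estimate of P(word | prev), with no smoothing."""
    total = sum(counts[prev].values())
    return counts[prev][word] / total if total else 0.0

def sentence_log_prob(counts, sentence):
    """Chain rule: log P(w1 ... wn) = sum over i of log P(w_i | w_{i-1})."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    return sum(math.log(next_word_prob(counts, p, w)) for p, w in zip(tokens, tokens[1:]))

model = train_bigram(["the cat sat", "the dog sat", "the cat ran"])
print(next_word_prob(model, "the", "cat"))                # 2 of the 3 words after "the"
print(math.exp(sentence_log_prob(model, "the cat sat")))  # 1 * 2/3 * 1/2 * 1
```

A sentence containing an unseen word pair would get probability zero here (and `math.log` would fail), which is one reason real language models rely on smoothing or, in the Transformer's case, learned representations that generalize to unseen contexts.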

Masked language modeling (MLM)

MLM is a task in which a model predicts missing words in a given sentence. This can be used for text completion and correction.
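A minimal sketch of how masked-language-modeling training examples can be built, assuming the recipe popularized by BERT: select about 15% of the tokens, replace 80% of those with `[MASK]`, replace 10% with a random token, and leave 10% unchanged. `mask_tokens` and the word-level tokens are illustrative; real tokenizers work on subword token IDs.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """Create one masked-language-modeling example.

    Returns (inputs, labels). labels holds the original token at each selected
    position and None elsewhere, so the loss is computed only on selected tokens.
    """
    rng = random.Random(seed)
    inputs, labels = list(tokens), [None] * len(tokens)
    for i, token in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue                       # position not selected for prediction
        labels[i] = token                  # the model must recover this token
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK               # 80%: hide the token
        elif r < 0.9:
            inputs[i] = rng.choice(vocab)  # 10%: substitute a random token
        # remaining 10%: keep the original token in the input
    return inputs, labels

sentence = "the quick brown fox jumps over the lazy dog".split()
vocab = sorted(set(sentence))
inputs, labels = mask_tokens(sentence, vocab, mask_prob=0.3, seed=1)
print(inputs)
print(labels)
```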

Getting Started

Here are the steps to install Transformers:

Create a virtual environment and install Transformers in it before use. Transformers has been tested on Python 3.6+, Flax 0.3.2+, PyTorch 1.3.1+, and TensorFlow 2.3+. Before installing Transformers, install at least one backend (Flax, PyTorch, or TensorFlow) by following the instructions on its installation page. Once a backend is installed, you can install Transformers with pip:

```bash
pip install transformers
```

You can also install Transformers together with a deep learning library in a single command, for example for CPU-only support. The following command installs Transformers and PyTorch (the quotes stop shells such as zsh from interpreting the square brackets):

```bash
pip install 'transformers[torch]'
```

To check that Transformers is installed correctly, run the following Python code, which downloads a pre-trained model:

```python
from transformers import pipeline

# Downloads a default pre-trained sentiment-analysis model on first use
classifier = pipeline('sentiment-analysis')
result = classifier('I am so excited to start using Transformers!')
print(result)
```

If the output shows a positive sentiment, then the installation was successful.

Please keep in mind that the preceding steps are merely examples, and you may need to modify them depending on your specific use case. Also, based on your needs, select the appropriate pre-trained Transformer model size.

Fine-tuning

Here are some of the fine-tuning techniques that can be used with transformers:

Learning rate scheduling

Learning rate scheduling adjusts the learning rate during training to improve model performance. A high learning rate can speed up convergence but may make training unstable, while a low learning rate can lead to slow convergence or leave the model stuck in a poor local minimum. Techniques such as cyclical learning rate scheduling, in which the learning rate oscillates between a lower and an upper bound, can help improve performance.
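A minimal sketch of a cyclical schedule, using the "triangular" policy from Leslie Smith's work on cyclical learning rates: the rate ramps linearly from `base_lr` up to `max_lr` and back down, then repeats. The bounds and `step_size` are arbitrary example values. PyTorch provides a ready-made version as `torch.optim.lr_scheduler.CyclicLR`.

```python
import math

def triangular_clr(iteration: int, base_lr: float = 1e-5, max_lr: float = 1e-4,
                   step_size: int = 1000) -> float:
    """Triangular cyclical learning rate.

    The rate rises linearly from base_lr to max_lr over `step_size` iterations,
    falls back to base_lr over the next `step_size` iterations, and repeats.
    """
    cycle = math.floor(1 + iteration / (2 * step_size))  # which cycle we are in (1-based)
    x = abs(iteration / step_size - 2 * cycle + 1)       # 1 at cycle edges, 0 at the peak
    return base_lr + (max_lr - base_lr) * max(0.0, 1 - x)

for it in (0, 500, 1000, 1500, 2000, 3000):
    print(it, f"{triangular_clr(it):.2e}")
```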

Early stopping

Early stopping is a technique used to stop training when the model's performance on a validation set stops improving. This is done to prevent overfitting, where the model performs well on the training data but poorly on the validation or test data. Early stopping can be achieved by monitoring the validation loss or accuracy and stopping the training when it has not improved for a certain number of epochs.
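The monitoring logic described above can be sketched as a small helper that tracks the best validation loss and a "patience" counter. The class name, the patience value, and the loss values are illustrative; the Hugging Face `Trainer` offers a comparable `EarlyStoppingCallback`.

```python
class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` epochs in a row."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta          # minimum decrease that counts as an improvement
        self.best_loss = float("inf")
        self.epochs_without_improvement = 0

    def step(self, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience

# Made-up validation losses: improvement stalls after epoch 3.
val_losses = [0.90, 0.72, 0.65, 0.66, 0.67, 0.65, 0.70]
stopper = EarlyStopping(patience=3)
stopped_epoch = None
for epoch, loss in enumerate(val_losses, start=1):
    if stopper.step(loss):
        stopped_epoch = epoch
        break
print(stopped_epoch, stopper.best_loss)  # 6 0.65
```

In practice you would also save a checkpoint whenever `best_loss` improves and restore it after stopping, so the final model is the best one seen rather than the last one trained.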

Gradient accumulation

Gradient accumulation is a technique that accumulates gradients over several batches before performing a weight update. It lets you train with a larger effective batch size than fits in memory, which can also make training more stable. The gradients from the accumulated batches are summed or averaged, and the weights are updated only once every few batches.
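A minimal NumPy sketch that checks the idea on a toy linear-regression loss: averaging the gradients of four equally sized micro-batches gives exactly the gradient of the full batch, so one accumulated update matches one large-batch update. The data and hyperparameters are made up; in the Hugging Face `Trainer`, the same idea is controlled by the `gradient_accumulation_steps` training argument.

```python
import numpy as np

def mse_grad(w, x, y):
    """Gradient of the loss 0.5 * mean((x @ w - y) ** 2) with respect to w."""
    return x.T @ (x @ w - y) / len(y)

rng = np.random.default_rng(0)
x = rng.normal(size=(32, 3))
y = x @ np.array([1.0, -2.0, 0.5]) + 0.1 * rng.normal(size=32)
w = np.zeros(3)
lr, accumulation_steps = 0.1, 4

# Reference: one batch of 32 examples.
full_grad = mse_grad(w, x, y)

# Gradient accumulation: 4 micro-batches of 8 examples, averaged, then a single update.
accumulated = np.zeros_like(w)
for x_mb, y_mb in zip(np.split(x, accumulation_steps), np.split(y, accumulation_steps)):
    accumulated += mse_grad(w, x_mb, y_mb) / accumulation_steps
w = w - lr * accumulated

print(np.allclose(accumulated, full_grad))  # True
```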

Weight decay

Weight decay is a technique that encourages the model to use smaller weights, which can prevent overfitting and improve generalization. In its classic form, it is implemented by adding an L2 penalty term to the loss function that penalizes large weights.
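For plain stochastic gradient descent, adding an L2 penalty of 0.5 · λ · ‖w‖² to the loss is equivalent to shrinking the weights by a factor (1 − η·λ) at every step. The NumPy sketch below checks that equivalence on a toy quadratic loss; the loss, learning rate, and decay value are arbitrary stand-ins.

```python
import numpy as np

def loss_grad(w):
    """Gradient of a toy loss 0.5 * ||w - target||^2 (stand-in for a model's loss)."""
    target = np.array([3.0, -1.0])
    return w - target

lr, weight_decay = 0.1, 0.01
w = np.array([2.0, 2.0])

# (a) L2 penalty: the extra loss term 0.5 * weight_decay * ||w||^2 adds weight_decay * w to the gradient.
w_l2 = w - lr * (loss_grad(w) + weight_decay * w)

# (b) Weight decay: shrink the weights directly, then take the plain gradient step.
w_decay = (1 - lr * weight_decay) * w - lr * loss_grad(w)

print(np.allclose(w_l2, w_decay))  # True for plain SGD
```

With adaptive optimizers such as Adam, the two formulations are no longer equivalent, which is why AdamW (available as `torch.optim.AdamW`) applies the decay directly to the weights instead of through the loss.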

Dropout

Dropout is a regularization technique in which units in the model are randomly dropped (set to zero) during training. This helps prevent overfitting by forcing the model to learn more robust representations. Each unit is dropped independently with a fixed probability, and dropout is turned off at inference time.
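A minimal NumPy sketch of "inverted" dropout, the variant most deep learning libraries use: surviving units are scaled by 1 / (1 − p) during training so that no rescaling is needed at inference time. The rate p = 0.1 matches the dropout rate used for the base Transformer; the all-ones activations are a stand-in.

```python
import numpy as np

def dropout(x, p, training, rng):
    """Inverted dropout: zero each unit with probability p during training.

    Surviving units are scaled by 1 / (1 - p) so the expected activation is
    unchanged; at inference time the input passes through untouched.
    """
    if not training or p == 0.0:
        return x
    keep_mask = rng.random(x.shape) >= p   # True for units that survive
    return x * keep_mask / (1.0 - p)

rng = np.random.default_rng(0)
activations = np.ones((1000, 64))
train_out = dropout(activations, p=0.1, training=True, rng=rng)
eval_out = dropout(activations, p=0.1, training=False, rng=rng)
print(f"fraction zeroed: {(train_out == 0).mean():.3f}, mean activation: {train_out.mean():.3f}")
```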

Layer freezing

Layer freezing is a technique in which the weights of some layers are frozen during training so that they are not updated and keep their pre-trained representations. This is useful when fine-tuning a pre-trained model: the lower layers, which tend to capture more general features, are often frozen, while the higher, more task-specific layers are fine-tuned. This can help prevent catastrophic forgetting and improve model generalization.
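A minimal NumPy sketch of the idea: a hypothetical four-layer model stored as a list of weight matrices, where the update step simply skips the frozen lower layers. In PyTorch, the same effect is usually achieved by setting `requires_grad = False` on the frozen parameters; NumPy is used here only to keep the example self-contained.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical 4-layer model: one weight matrix per layer, lowest layer first.
layers = [rng.normal(size=(4, 4)) for _ in range(4)]
num_frozen = 2                                   # freeze the two lowest layers
trainable = [i >= num_frozen for i in range(len(layers))]

original = [w.copy() for w in layers]
grads = [np.ones_like(w) for w in layers]        # stand-in gradients from backpropagation
lr = 0.01

for i, (w, g) in enumerate(zip(layers, grads)):
    if trainable[i]:                             # frozen layers are skipped entirely
        w -= lr * g                              # in-place update of the layer's weights

print([not np.array_equal(w, w0) for w, w0 in zip(layers, original)])
# [False, False, True, True]
```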

Benchmarking

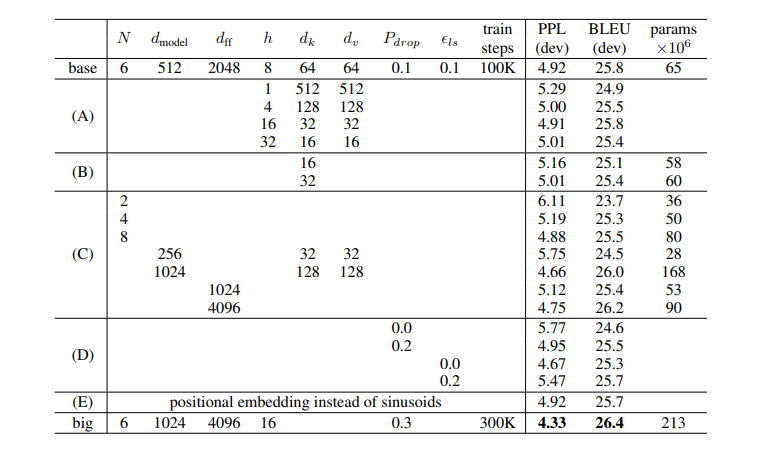

Table 1 shows variations on the Transformer architecture. Unlisted values are identical to those of the base model. All metrics are on the English-to-German translation development set, newstest2013. Listed perplexities are per-wordpiece, according to the byte-pair encoding used, and should not be compared to per-word perplexities.

Table 2: The Transformer generalizes well to English constituency parsing (results are F1 scores on Section 23 of the WSJ corpus).

| Parser | Training | WSJ 23 F1 |
| --- | --- | --- |
| Vinyals & Kaiser et al. (2014) [37] | WSJ only, discriminative | 88.3 |
| Petrov et al. (2006) [29] | WSJ only, discriminative | 90.4 |
| Dyer et al. (2016) [8] | WSJ only, discriminative | 91.7 |
| Transformer (4 layers) | WSJ only, discriminative | 91.3 |
| Zhu et al. (2013) [40] | semi-supervised | 91.3 |
| Huang & Harper (2009) [14] | semi-supervised | 91.3 |
| McClosky et al. (2006) [26] | semi-supervised | 92.1 |
| Vinyals & Kaiser et al. (2014) [37] | semi-supervised | 92.1 |
| Transformer (4 layers) | semi-supervised | 92.7 |
| Luong et al. (2015) [23] | multi-task | 93.0 |
| Dyer et al. (2016) [8] | generative | 93.3 |

Sample Codes

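Running the model on a GPU

The following example loads BERT (bert-base-uncased), a pre-trained Transformer encoder, with the Hugging Face Transformers library and runs it on a GPU.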

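```python
import torch
from transformers import BertModel, BertTokenizer

# Load a pre-trained BERT encoder and its matching tokenizer
model = BertModel.from_pretrained('bert-base-uncased')
tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

# Use the GPU when one is available, otherwise fall back to the CPU
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
model.to(device)
model.eval()  # disable dropout for inference

# Tokenize the input text and move the tensors to the same device as the model
text = 'This is a sample input text.'
inputs = tokenizer(text, return_tensors='pt').to(device)

# Run the model without tracking gradients
with torch.no_grad():
    outputs = model(**inputs)

# Move the results back to the CPU (outputs is a ModelOutput, so access fields by name)
last_hidden_state = outputs.last_hidden_state.to('cpu')  # shape: (batch, seq_len, 768)
pooled_output = outputs.pooler_output.to('cpu')          # shape: (batch, 768)
```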

Limitations

While the Transformer model is a state-of-the-art model for natural language processing tasks, it also has some limitations. Here are a few examples:

Computational Requirements

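Transformers are computationally expensive and require significant resources, including memory and processing power. In particular, self-attention compares every token with every other token, so its cost grows quadratically with sequence length. This can make Transformers challenging to deploy at scale or on devices with limited resources.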
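A back-of-the-envelope sketch of the quadratic growth: the memory needed just to store one layer's attention-weight matrices, assuming 8 heads, batch size 1, and 4-byte float32 values. It ignores all other activations, and optimized attention kernels can avoid materializing the full matrix, so treat the numbers as an illustration of scaling rather than real memory usage.

```python
def attention_matrix_bytes(seq_len: int, num_heads: int = 8, batch_size: int = 1,
                           bytes_per_value: int = 4) -> int:
    """Bytes for the seq_len x seq_len attention weights of every head in one layer."""
    return batch_size * num_heads * seq_len * seq_len * bytes_per_value

for n in (512, 1024, 2048, 4096):
    print(f"{n:>5} tokens: {attention_matrix_bytes(n) / 2**20:6.0f} MiB per layer")
```

Doubling the sequence length quadruples this figure: 512 tokens need 8 MiB per layer under these assumptions, while 4096 tokens need 512 MiB.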

Sequential Processing

Transformers operate on sequences of tokens, and autoregressive Transformer decoders generate output one token at a time, from left to right. Inputs with other structures, such as images or graphs, must first be converted into sequences (for example, by splitting an image into patches), so Transformers may be less efficient or less natural for image or graph processing.

Interpretability

Transformers can be difficult to interpret due to the complex nature of their attention mechanisms and internal representations. This can make it challenging to understand why a model is making certain predictions or to debug issues with the model.

Limited Data Efficiency

While pre-training on large datasets can improve the performance of transformer models, they may still require large amounts of labeled data to perform well on specific tasks. This can make it challenging to use them for tasks with limited amounts of labeled data.

Limited Multimodality

Transformers have primarily been used for text-based applications and may be less effective for multimodal tasks that require the processing of multiple modalities, such as text, images, and audio.