LLMs Explained

T5

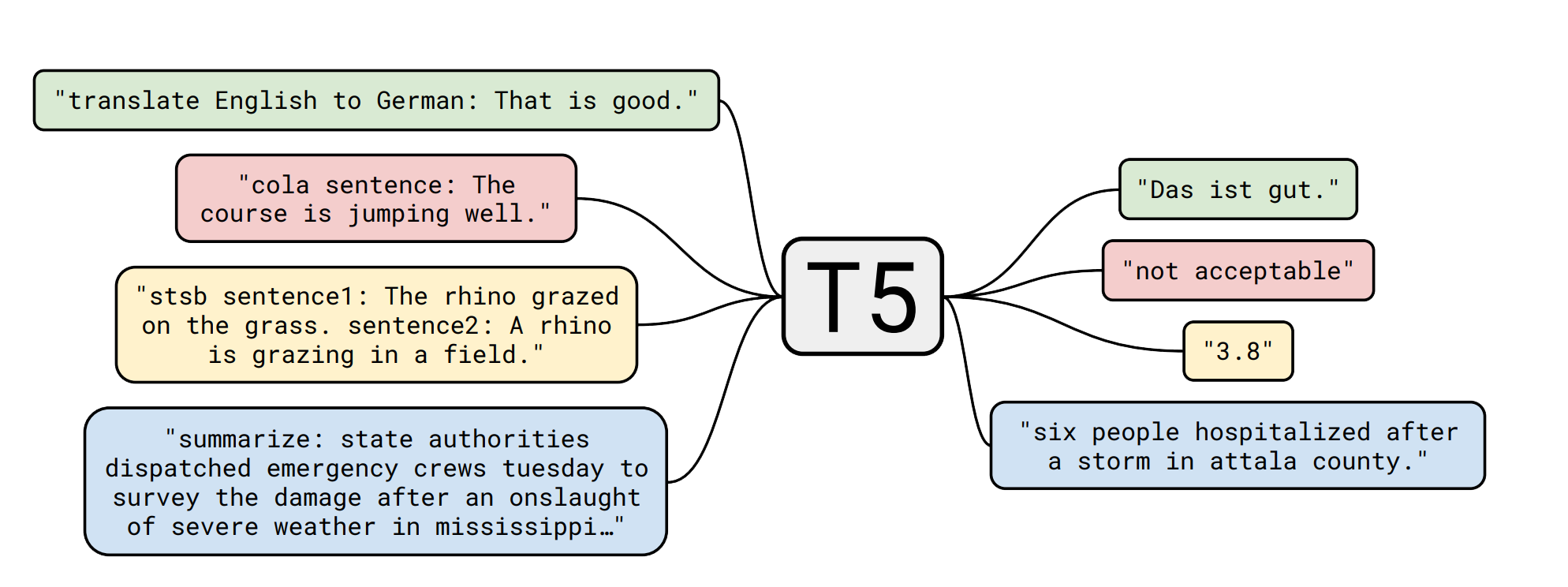

The Text-to-Text Transfer Transformer (T5) is a text-to-text transformer model developed by Google Research. It was introduced in the October 2019 paper "Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer." T5 is based on the transformer architecture, which Vaswani et al. introduced in their seminal 2017 paper "Attention Is All You Need." Because of its effectiveness and scalability, the transformer architecture has since become the standard for many NLP tasks. T5 uses a unified framework that casts every text-based language problem into a text-to-text format: the model always takes text as input and produces text as output. This lets a single model be trained on many NLP tasks, including machine translation, document summarization, question answering, and classification, using the same model, loss function, and hyperparameters.


An Overview of T5

T5 is a cutting-edge natural language processing model designed as a text-to-text framework for various NLP tasks such as machine translation, summarization, question answering, and classification. Here are some of the model's key features.

Largest T5 variant has 11 billion parameters

11B parameters

The largest T5 model variant, T5-11B, has 11 billion parameters. The larger models are more powerful but also require more computational resources to train and use.

T5 model was trained on a massive dataset

750GB Data

The 750 GB dataset used to train T5 is the Colossal Clean Crawled Corpus (C4), one of the largest publicly available text corpora.

General language learning abilities

Performance

The model has shown strong performance on a diverse set of benchmarks, including machine translation, question answering, abstractive summarization, and text classification.


About Model

T5 is a text-to-text transformer model that uses a unified framework for text-based language tasks, which makes it a versatile tool for NLP. It is an encoder-decoder (sequence-to-sequence) model. The encoder uses self-attention to turn the input sequence into a sequence of contextual representations, one per token. The decoder then attends to those representations while generating the output sequence token by token. Both the encoder and the decoder are stacks of Transformer layers, which lets the model relate tokens that are far apart in the input. T5 is available in sizes ranging from 60 million (T5-Small) to 11 billion (T5-11B) parameters. Larger models are more capable but need more computational resources to train and run. T5 is widely used in industry and academia and is available in the Hugging Face Transformers library, one of the most popular NLP libraries.

Model Type: Language model

Language(s) (NLP): English, German, French, and Romanian

License: Apache 2.0

Model Highlights

T5 was developed using insights from a systematic study of transfer learning techniques. The model is pre-trained on the C4 dataset and achieves strong results on many NLP benchmarks.

- The model introduces a unified framework that converts all text-based language problems into a text-to-text format.

- The model is trained on the Colossal Clean Crawled Corpus (C4), a cleaned version of Common Crawl that is roughly two orders of magnitude larger than Wikipedia.

- The 11B model achieved state-of-the-art results on the GLUE, SuperGLUE, SQuAD, and CNN/Daily Mail benchmarks.

- In closed-book open-domain question answering, where the model answers from its parameters alone, the 11B model generates the exact answer text for 50.1% of TriviaQA, 37.4% of WebQuestions, and 34.5% of Natural Questions questions.

- One can apply T5 to regression tasks by training it to predict the string representation of a number instead of the number itself.
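The regression trick can be made concrete for STS-B, whose similarity scores run from 0 to 5. The T5 paper rounds each score to the nearest increment of 0.2 and uses its string form as the target, so 2.57 becomes "2.6". The sketch below assumes that scheme. The function names `stsb_target` and `parse_prediction` are hypothetical, and returning `None` for non-numeric output is an illustrative choice.

```python
def stsb_target(score: float) -> str:
    """Render an STS-B similarity score (0 to 5) as a T5 target string.

    The score is rounded to the nearest multiple of 0.2, then formatted
    with one decimal place, e.g. 2.57 -> "2.6".
    """
    if not 0.0 <= score <= 5.0:
        raise ValueError("STS-B scores lie in the range [0, 5]")
    rounded = round(score * 5) / 5  # nearest multiple of 0.2
    return f"{rounded:.1f}"


def parse_prediction(text: str):
    """Turn generated text back into a float; None if it is not a number."""
    try:
        return float(text.strip())
    except ValueError:
        return None


print(stsb_target(2.57))          # 2.6
print(parse_prediction(" 3.8 "))  # 3.8
print(parse_prediction("maybe"))  # None
```

At evaluation time, a generated string that does not parse as a number can simply be scored as wrong.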

Training Details

Training data

- Unsupervised denoising objective: C4, Wiki-DPR

- Supervised text-to-text language modeling objective: CoLA, SST-2, MRPC, STS-B, QQP, MNLI, QNLI, RTE, CB, COPA, WIC, MultiRC, ReCoRD, BoolQ

Dataset size

The pre-training dataset contains about 750 GB of text. It is orders of magnitude larger than most datasets used for pre-training, yet it consists of reasonably clean and natural English text.

Training Procedure

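T5 is pre-trained with a denoising (masked language modeling) objective. Rather than masking individual tokens, it uses span corruption. Random spans of the input, about 15% of the tokens in total with an average span length of 3, are dropped. Each span is replaced by a single unique sentinel token, and the model is trained to output the dropped spans, each preceded by its sentinel.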
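In more detail, the T5 paper's objective is span corruption. Consecutive runs of tokens are dropped, and each run is replaced in the input by a unique sentinel token. The target lists each sentinel followed by the tokens it replaced, and ends with one extra sentinel. The sketch below reproduces the paper's "Thank you for inviting me..." example under several assumptions:

- It operates on whitespace-separated words instead of SentencePiece tokens.
- It takes the spans as an explicit argument rather than sampling them.
- It names sentinels after the Hugging Face `<extra_id_N>` convention.

`span_corrupt` is a hypothetical helper.

```python
def span_corrupt(tokens, spans):
    """Replace each (start, end) span with a sentinel; return (inputs, targets)."""
    inputs, targets = [], []
    cursor = 0
    for i, (start, end) in enumerate(sorted(spans)):
        if start < cursor or end <= start or end > len(tokens):
            raise ValueError("spans must be non-empty, in range, and non-overlapping")
        sentinel = f"<extra_id_{i}>"
        inputs.extend(tokens[cursor:start])  # keep the uncorrupted text
        inputs.append(sentinel)              # one sentinel per dropped span
        targets.append(sentinel)
        targets.extend(tokens[start:end])    # the dropped span moves to the target
        cursor = end
    inputs.extend(tokens[cursor:])
    targets.append(f"<extra_id_{len(spans)}>")  # a final sentinel closes the target
    return inputs, targets


words = "Thank you for inviting me to your party last week .".split()
inp, tgt = span_corrupt(words, [(2, 4), (8, 9)])
print(" ".join(inp))  # Thank you <extra_id_0> me to your party <extra_id_1> week .
print(" ".join(tgt))  # <extra_id_0> for inviting <extra_id_1> last <extra_id_2>
```

Because the target contains only the dropped spans rather than the whole sequence, it stays short, which keeps pre-training cheaper than reconstructing the full input.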

Training Observations

The researchers found that pre-training provides significant gains on almost all benchmarks. The only exception is WMT English-to-French translation, whose training set is large enough that the gains from pre-training tend to be marginal.

Model Types

There are several versions of the T5 model that have been trained on the same dataset. Here are the variations of the T5 model based on parameter count:

| Model | Parameters | Highlights |
|---|---|---|
| T5-Small | 60 million | NLP tasks |
| T5-Base | 220 million | General-purpose language processing |
| T5-Large | 770 million | Higher accuracy or more complex NLP |
| T5-3B | 3 billion | High accuracy on complex and large-scale NLP tasks |
| T5-11B | 11 billion | Specialized applications that need powerful NLP models |
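To put "more computational resources" in numbers, a rough rule for the weights alone is parameters × bytes per parameter. The sketch below makes several assumptions:

- Values take 4 bytes per parameter in float32 and 2 bytes in bfloat16.
- Parameter counts are the nominal ones from the table.
- Activations, gradients, and optimizer state are ignored, although they add considerably more memory during training.

`PARAMS` and `weight_gb` are hypothetical names.

```python
# Nominal parameter counts from the table above.
PARAMS = {
    "T5-Small": 60e6,
    "T5-Base": 220e6,
    "T5-Large": 770e6,
    "T5-3B": 3e9,
    "T5-11B": 11e9,
}


def weight_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


for name, n in PARAMS.items():
    # 4 bytes per parameter in float32, 2 bytes in bfloat16/float16
    print(f"{name:9s} fp32: {weight_gb(n, 4):6.2f} GB   bf16: {weight_gb(n, 2):6.2f} GB")
```

For example, T5-11B needs about 44 GB just to hold its float32 weights, which is more than many single accelerators provide.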

Google has released several follow-up models based on the original T5. Each focuses on a different capability.

| Adaptation | Details |
|---|---|
| T5v1.1 | An improved version of T5 with some architectural tweaks, pre-trained on C4 only, without mixing in the supervised tasks. |
| mT5 | A multilingual T5 model pre-trained on the mC4 corpus, which covers 101 languages. |
| ByT5 | A T5 model pre-trained on byte sequences rather than SentencePiece subword token sequences. |
| UL2 | A model similar to T5, pre-trained on a mixture of denoising objectives. |
| Flan-T5 | T5 models fine-tuned on the Flan collection of datasets. Flan is an instruction-tuning method that trains on tasks phrased as natural-language prompts. |

Business Applications

T5 performs particularly well on text summarization, question answering, and text classification. You can use it to build business applications for use cases such as these:

| Business Use Case | Task | Example |
|---|---|---|
| News Aggregation | Text Summarization | Automatically generating short summaries of news articles so users can quickly scan headlines and decide which stories to read in depth. |
| Customer Support | Question Answering | Providing immediate and accurate answers to common customer questions, such as "What's your return policy?" or "How do I reset my password?" via chatbots or virtual assistants. |
| Spam Filtering | Text Classification | Sorting incoming emails or messages into categories such as "spam," "important," or "promotions" to prioritize and organize incoming communication. |
| Document Management | Text Classification | Automatically categorizing and organizing documents into relevant groups based on their content or topic, making them easier to search and retrieve later. |
| Sentiment Analysis | Text Classification | Analyzing social media posts or customer reviews to determine whether sentiment is positive, negative, or neutral, helping companies understand their customers' opinions and improve their products or services. |

Model Features

The T5 model makes a few targeted changes to the original Transformer architecture that contribute to its effectiveness and scalability. The key features are described below.

Transformer Architecture

T5's encoder-decoder Transformer implementation closely follows the original Transformer, with three exceptions. It removes the layer normalization bias, places layer normalization outside the residual path, and uses a different position embedding scheme.

Layer Normalization

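The encoder consists of blocks, each made up of a self-attention layer followed by a small feed-forward network. T5 uses a simplified version of layer normalization in which the activations are only rescaled and no additive bias is applied. Normalization is applied to the input of each sub-layer, and a residual connection adds the sub-layer's output back to its input. The decoder has a similar structure, but its self-attention is causal, meaning each position attends only to earlier positions. In addition, each decoder block includes a standard attention layer that attends to the encoder's output.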
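A minimal pure-Python sketch of the scale-only normalization and of how it is wired into each sub-layer follows. It uses the formulation in the Hugging Face `T5LayerNorm`: divide by the root mean square, with no mean subtraction and no bias. The `eps=1e-6` default and the helper names are assumptions for illustration. The `sublayer` argument stands in for self-attention or the feed-forward network.

```python
import math


def t5_layer_norm(x, weight, eps=1e-6):
    """Scale-only layer norm: divide by the root mean square of the
    activations and multiply by a learned per-feature weight.
    There is no mean subtraction and no additive bias."""
    mean_square = sum(v * v for v in x) / len(x)
    inv_rms = 1.0 / math.sqrt(mean_square + eps)
    return [w * v * inv_rms for w, v in zip(weight, x)]


def pre_norm_residual(x, sublayer, weight):
    """Normalize the sub-layer's input, then add the sub-layer's output back
    onto the un-normalized residual stream: x + sublayer(norm(x))."""
    y = sublayer(t5_layer_norm(x, weight))
    return [a + b for a, b in zip(x, y)]


x = [3.0, 4.0]
print(t5_layer_norm(x, weight=[1.0, 1.0]))  # roughly [0.849, 1.131]
print(pre_norm_residual(x, lambda h: [2 * v for v in h], weight=[1.0, 1.0]))
```

Because the normalization sits on the sub-layer's input rather than on the residual sum, the residual stream itself is never rescaled between blocks.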

Position Embeddings

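T5 uses a simplified form of relative position embeddings. Each “embedding” is simply a learned scalar added to the corresponding logit used for computing the attention weights. The scalar depends on the offset between the query and key positions rather than on their absolute positions.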
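The sketch below shows how a query-key offset can be mapped to one of a small number of learned scalars. The T5 paper uses 32 buckets whose ranges grow logarithmically up to an offset of 128; all larger offsets share a bucket. The bucketing logic here is a pure-Python port of the approach in Hugging Face's `T5Attention`. `position_bias` and its single `table` are a hypothetical simplification: in the real model, each attention head has its own table, shared across layers.

```python
import math


def relative_position_bucket(relative_position, bidirectional=True,
                             num_buckets=32, max_distance=128):
    """Map an offset (key_pos - query_pos) to a bucket index: exact buckets
    for small offsets, logarithmically wider buckets for larger ones."""
    bucket = 0
    n = relative_position
    if bidirectional:
        num_buckets //= 2
        if n > 0:
            bucket += num_buckets  # keys after the query use the upper half
        n = abs(n)
    else:
        n = max(-n, 0)  # causal attention: only earlier keys are visible
    max_exact = num_buckets // 2
    if n < max_exact:
        return bucket + n
    large = max_exact + int(
        math.log(n / max_exact) / math.log(max_distance / max_exact)
        * (num_buckets - max_exact)
    )
    return bucket + min(large, num_buckets - 1)


def position_bias(query_len, key_len, table):
    """Scalar added to each attention logit; `table` holds one value per bucket."""
    return [[table[relative_position_bucket(k - q)] for k in range(key_len)]
            for q in range(query_len)]


print([relative_position_bucket(d) for d in (-200, -3, 0, 3, 8, 200)])
# [15, 3, 0, 19, 24, 31]
```

Sharing a bucket among all distant offsets keeps the table small while still letting the model distinguish nearby positions precisely.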

Model Tasks

T5 performs particularly well on text summarization, question answering, and text classification. The tasks the model was pre-trained and fine-tuned on include the following.

Machine translation

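The T5 language model can translate text from one language to another. The original checkpoints were trained on English-to-German, English-to-French, and English-to-Romanian translation. The model aims to produce translations that are both grammatically correct and semantically accurate.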

Question answering

The model can answer questions about a provided context passage, generating the answer as text. It can be used to give relevant and accurate answers grounded in that context.

Text classification

The model can classify text into predefined categories based on its content. It can be used for tasks such as sentiment analysis, spam filtering, and topic classification.

Sentence acceptability judgment

The model can judge whether a given sentence is grammatically acceptable (the CoLA task). It can be used for tasks such as grammar checking.

Sentiment analysis

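The T5 language model can analyze the sentiment of a given text. The SST-2 task it was trained on labels sentences as positive or negative, so adding a neutral class requires fine-tuning on data that includes one. It can be used for tasks such as customer feedback analysis and social media monitoring.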

Paraphrasing

The model can judge whether two sentences are paraphrases of each other (the MRPC and QQP tasks). When fine-tuned on paraphrase data, it can also generate paraphrases of a given sentence, which is useful for data augmentation.

Sentence completion

Given a premise, the model can choose the more plausible of two alternative sentences describing its cause or effect (the COPA task). It can be used for tasks such as commonsense reasoning and text completion.

Word sense disambiguation

The model can identify the correct meaning of a word based on the context in which it appears (the WiC task). It can be used for tasks such as lexical semantics and text understanding.

Text summarization

The model can summarize a long text into a shorter version while preserving its most important information. It can be used for tasks such as document summarization and news article summarization.

Pronoun reference ambiguity

The model can identify the referent of a pronoun in a given text (the WSC and WNLI tasks). It can be used for tasks such as coreference resolution and natural language understanding.

Semantic similarity

The model can measure how similar two sentences are in meaning, for example by scoring sentence pairs on STS-B. It can be used for tasks such as detecting duplicate questions.

Multi-Genre Natural Language Inference

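The model can perform natural language inference on sentence pairs drawn from multiple genres; MNLI covers sources such as fiction, government reports, and transcribed telephone speech. Given a premise and a hypothesis, it predicts whether the premise entails, contradicts, or is neutral toward the hypothesis. It can be used for tasks such as cross-domain text understanding and knowledge transfer.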
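Because every task above is cast as text-to-text, the task is identified by a plain-text prefix on the input. The target is also text, for example an MNLI label such as "entailment". The sketch below formats inputs using prefixes modeled on examples in the T5 paper. The `TEMPLATES` keys and the `to_text_to_text` helper are hypothetical, and the exact prefix strings should be checked against the checkpoint you use.

```python
# Task prefixes modeled on the T5 paper's examples; keys are illustrative names.
TEMPLATES = {
    "translate_en_de": "translate English to German: {text}",
    "summarize": "summarize: {text}",
    "cola": "cola sentence: {sentence}",
    "sst2": "sst2 sentence: {sentence}",
    "stsb": "stsb sentence1: {sentence1} sentence2: {sentence2}",
    "mnli": "mnli hypothesis: {hypothesis} premise: {premise}",
}


def to_text_to_text(task: str, **fields: str) -> str:
    """Format one example as a T5-style input string for the given task."""
    if task not in TEMPLATES:
        raise KeyError(f"no template for task {task!r}")
    return TEMPLATES[task].format(**fields)


print(to_text_to_text("translate_en_de", text="That is good."))
print(to_text_to_text("stsb", sentence1="A man is playing a guitar.",
                      sentence2="A person plays an instrument."))
```

Since the task lives in the input string, one set of weights can serve all of these tasks without task-specific output heads.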

Fine-tuning

The T5 paper also explores fine-tuning approaches that update only a subset of the encoder-decoder model's parameters.

Using Adapter layers

This approach keeps most of the original model fixed during fine-tuning. Adapter layers are additional dense-ReLU-dense blocks added after each of the pre-existing feed-forward networks in each Transformer block. Their output dimensionality matches their input, so they can be inserted into the network without any other changes to its structure or parameters. During fine-tuning, only the adapter layer and layer normalization parameters are updated (Houlsby et al., 2019; Bapna et al., 2019).
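A minimal sketch of one adapter block in pure Python follows. The down-projection to a small bottleneck, the ReLU, and the up-projection back to `d_model` follow the description above. Two further details are assumptions borrowed from the Houlsby et al. design:

- a skip connection around the block, and
- a zero-initialized up-projection, so the adapter starts as an identity map.

The `Adapter` class is hypothetical. During fine-tuning, only parameters like these (plus layer norm weights) would be updated while the surrounding model stays frozen.

```python
import random


def dense(x, weights, bias):
    """y = W x + b, with W stored as a list of rows."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(x):
    return [max(0.0, v) for v in x]


class Adapter:
    """Dense-ReLU-dense bottleneck whose output size equals its input size."""

    def __init__(self, d_model, bottleneck, seed=0):
        rng = random.Random(seed)
        # Down-projection: d_model -> bottleneck, small random init.
        self.down_w = [[rng.gauss(0.0, 0.02) for _ in range(d_model)]
                       for _ in range(bottleneck)]
        self.down_b = [0.0] * bottleneck
        # Up-projection: bottleneck -> d_model, zero init (assumption),
        # so the block initially passes its input through unchanged.
        self.up_w = [[0.0] * bottleneck for _ in range(d_model)]
        self.up_b = [0.0] * d_model

    def __call__(self, x):
        h = relu(dense(x, self.down_w, self.down_b))
        delta = dense(h, self.up_w, self.up_b)
        return [a + b for a, b in zip(x, delta)]  # skip connection (assumption)

    def num_params(self):
        return (sum(len(r) for r in self.down_w) + len(self.down_b)
                + sum(len(r) for r in self.up_w) + len(self.up_b))


adapter = Adapter(d_model=8, bottleneck=2)
out = adapter([0.1 * i for i in range(8)])
print(len(out), adapter.num_params())  # 8 42
```

The bottleneck size controls the trade-off: a smaller bottleneck means fewer trainable parameters per task, at some cost in capacity.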

Using Gradual Unfreezing

In gradual unfreezing, more and more of the model's parameters are fine-tuned over time. Gradual unfreezing was originally applied to a language model architecture consisting of a single stack of layers. To adapt it to T5's encoder-decoder model, the encoder and decoder layers are unfrozen in parallel, starting from the top of each stack. Because T5's input embedding matrix and output classification matrix share parameters, they are updated throughout fine-tuning (Howard and Ruder, 2018).
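The parallel top-down schedule can be sketched as a function of the training step. This is an illustration under stated assumptions: exactly one more layer per stack is unfrozen every `steps_per_stage` steps, and the helper names are hypothetical. The paper's actual stage lengths are not reproduced here.

```python
def trainable_layers(step, num_layers, steps_per_stage):
    """Indices of layers being fine-tuned at `step`, unfreezing one more
    layer from the top of the stack at the start of every stage."""
    if steps_per_stage <= 0:
        raise ValueError("steps_per_stage must be positive")
    unfrozen = min(num_layers, step // steps_per_stage + 1)
    return list(range(num_layers - unfrozen, num_layers))


def trainable_groups(step, num_layers, steps_per_stage):
    """Apply the same schedule to the encoder and decoder in parallel."""
    layers = trainable_layers(step, num_layers, steps_per_stage)
    return {
        "encoder": layers,
        "decoder": layers,
        # Tied input/output embedding matrix: updated throughout fine-tuning.
        "shared_embedding": True,
    }


for step in (0, 150, 1200):
    print(step, trainable_groups(step, num_layers=12, steps_per_stage=100)["encoder"])
```

In a real training loop, this output would decide which parameter groups receive gradient updates at each step.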

Benchmarking

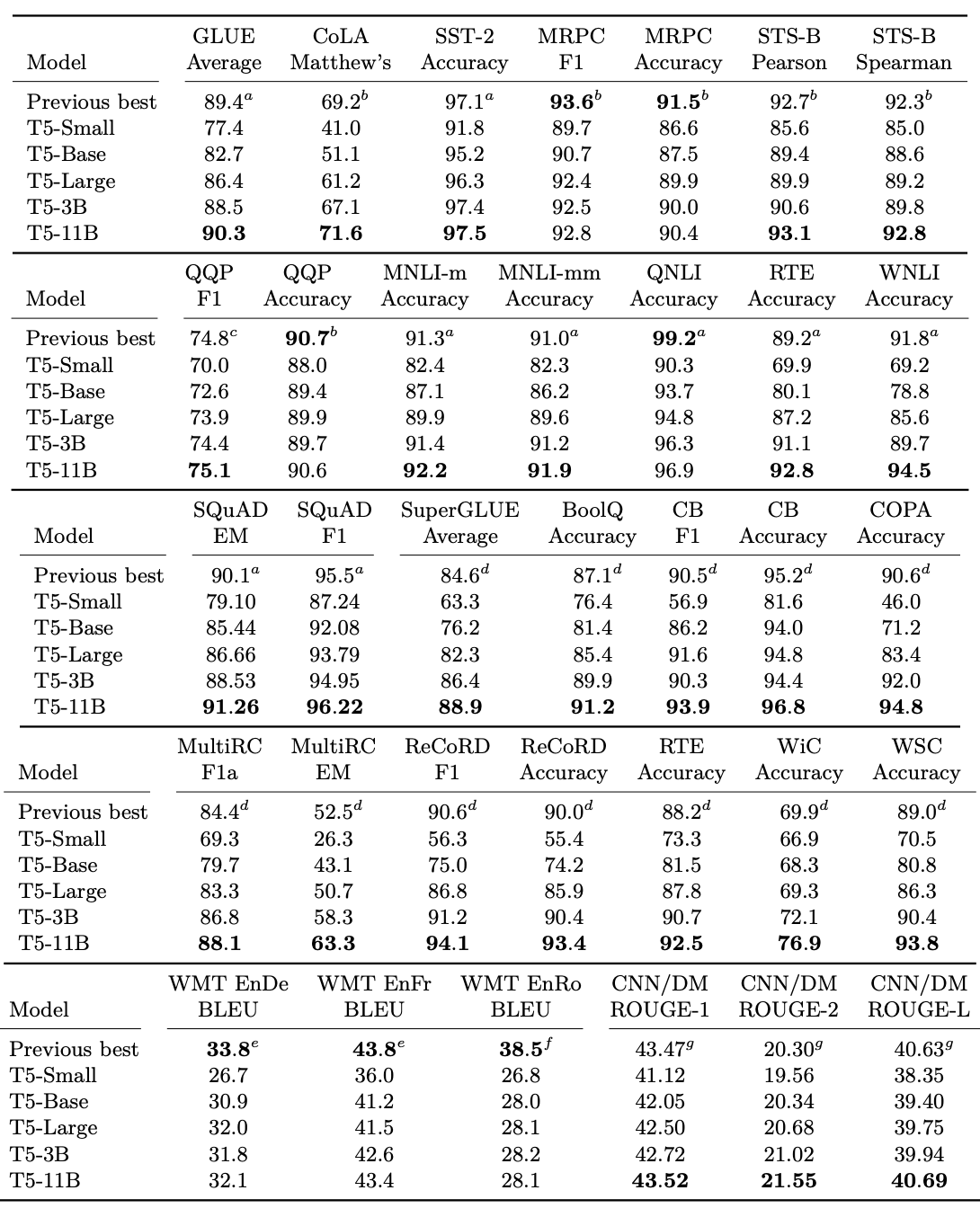

The table below shows the average and standard deviation of scores achieved by the T5 baseline model and training procedure, along with a comparison row for training without pre-training.

| | GLUE | CNNDM | SQuAD | SGLUE | EnDe | EnFr | EnRo |
|---|---|---|---|---|---|---|---|
| Baseline average | 83.28 | 19.24 | 80.88 | 71.36 | 26.98 | 39.82 | 27.65 |
| Baseline standard deviation | 0.235 | 0.065 | 0.343 | 0.416 | 0.112 | 0.090 | 0.108 |
| No pre-training | | | | | | | |

The table below shows the performance of T5 variants on various tasks.

Sample Code

Getting Started

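```python
from transformers import T5Tokenizer, T5Model

tokenizer = T5Tokenizer.from_pretrained("t5-base")
model = T5Model.from_pretrained("t5-base")

# Encoder input (batch size 1)
input_ids = tokenizer(
    "Studies have shown that owning a dog is good for you", return_tensors="pt"
).input_ids

# Decoder input (batch size 1)
decoder_input_ids = tokenizer("Studies show that", return_tensors="pt").input_ids
# T5Model does not shift decoder inputs itself: prepend the decoder start
# token (the pad token for T5), as in the Hugging Face T5Model example.
decoder_input_ids = model._shift_right(decoder_input_ids)

# Forward pass through the encoder and decoder
outputs = model(input_ids=input_ids, decoder_input_ids=decoder_input_ids)

# Final decoder hidden states: shape (batch, decoder_length, d_model)
last_hidden_states = outputs.last_hidden_state
```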

Train on TPU

- Create a Cloud Storage bucket for your data and model checkpoints at http://console.cloud.google.com/storage, and fill in the BASE_DIR parameter in the following form.

- On the main menu, click Runtime and select Change runtime type. Set "TPU" as the hardware accelerator.

- Run the following cell and follow the instructions to:

  - Set up a Colab TPU running environment
  - Verify that you are connected to a TPU device
  - Upload your credentials to the TPU to access your GCS bucket

```python
# Colab-only commands: select TensorFlow 2.x and install the t5 package.
print("Installing dependencies...")
%tensorflow_version 2.x
!pip install -q t5

import functools
import os
import sys
import time
import warnings

warnings.filterwarnings("ignore", category=DeprecationWarning)

import tensorflow.compat.v1 as tf
import tensorflow_datasets as tfds

import t5
import t5.models
import seqio

# Required to fix Colab flag parsing issue.
sys.argv = sys.argv[:1]

BASE_DIR = "gs://" #@param { type: "string" }
if not BASE_DIR or BASE_DIR == "gs://":
    raise ValueError("You must enter a BASE_DIR.")
DATA_DIR = os.path.join(BASE_DIR, "data")
MODELS_DIR = os.path.join(BASE_DIR, "models")
ON_CLOUD = True

if ON_CLOUD:
    print("Setting up GCS access...")
    # Use legacy GCS authentication method.
    os.environ["USE_AUTH_EPHEM"] = "0"
    import tensorflow_gcs_config
    from google.colab import auth

    # Set credentials for GCS reading/writing from Colab and TPU.
    TPU_TOPOLOGY = "v2-8"
    try:
        tpu = tf.distribute.cluster_resolver.TPUClusterResolver()  # TPU detection
        TPU_ADDRESS = tpu.get_master()
        print("Running on TPU:", TPU_ADDRESS)
    except ValueError:
        raise RuntimeError(
            "ERROR: Not connected to a TPU runtime; please see the setup "
            "steps above for instructions!"
        )
    auth.authenticate_user()
    tf.enable_eager_execution()
    tf.config.experimental_connect_to_host(TPU_ADDRESS)
    tensorflow_gcs_config.configure_gcs_from_colab_auth()

tf.disable_v2_behavior()

# Improve logging.
from contextlib import contextmanager
import logging as py_logging

if ON_CLOUD:
    tf.get_logger().propagate = False
    py_logging.root.setLevel("INFO")


@contextmanager
def tf_verbosity_level(level):
    """Temporarily set TensorFlow's logging verbosity inside a `with` block."""
    og_level = tf.logging.get_verbosity()
    tf.logging.set_verbosity(level)
    try:
        yield
    finally:
        tf.logging.set_verbosity(og_level)  # restore even if the block raises
```

Limitations

While T5 is a highly versatile and powerful NLP model, it has a few drawbacks:

Fixed Input Length

T5 was pre-trained on input sequences of 512 tokens, so inputs are usually limited to 512 tokens or fewer. Longer inputs are costly because self-attention compares every token with every other token. As a result, computation grows quadratically with the input sequence length.

High Memory Consumption

Because of this quadratic growth, training time and memory consumption increase sharply for longer inputs.
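A back-of-the-envelope calculation shows the quadratic growth. The sketch counts only one layer's self-attention score matrix for a single example. It assumes 12 heads (as in T5-Base) and 4-byte float32 values. Real memory use also includes the other activations and, during training, gradients. `attention_score_bytes` is a hypothetical helper.

```python
def attention_score_bytes(seq_len, num_heads, bytes_per_value=4):
    """Memory for one layer's self-attention score matrix (one example):
    every query attends to every key, so each head stores seq_len**2 scores."""
    return seq_len * seq_len * num_heads * bytes_per_value


base = attention_score_bytes(512, num_heads=12)
for n in (512, 1024, 2048, 4096):
    b = attention_score_bytes(n, num_heads=12)
    print(f"{n:5d} tokens: {b / 1e6:8.1f} MB per layer ({b // base}x the 512-token cost)")
```

Quadrupling the input from 512 to 2048 tokens multiplies this cost by 16, and the same factor applies to every attention layer in the model.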