LLMs Explained

Long T5

Long T5 is a pre-trained language model that extends Google Research's T5 architecture. It is designed to process longer input sequences than the original T5 and has demonstrated promising results in language tasks such as question answering, summarization, and dialogue generation. Long T5 was trained on a large-scale dataset of diverse text sources. It is publicly available through the Hugging Face Transformers library, which provides pre-trained checkpoints and fine-tuning scripts for various downstream tasks. The model can be fine-tuned on custom datasets and tasks, making it a versatile tool for natural language processing applications.


An Overview of Long T5

Long T5 has outperformed previous models on several benchmark datasets, demonstrating its ability to generate longer, more coherent text.

Trained on a dataset of over 800GB of text data

Long T5 is trained on a massive dataset of over 800GB of text data from diverse sources, including Wikipedia, books, and web pages.

11 times more parameters than its predecessor

Long T5 has 11x more parameters than its predecessor. The additional parameters let the model capture more complex patterns in natural language.

Long T5 can perform zero-shot learning

Long T5 can generate responses for unseen prompts in the Persona-Chat dialogue generation dataset and can generate text for tasks it was not explicitly trained on.

About Model

Long T5 employs a modified T5 architecture comprised of an encoder and a decoder. The encoder is responsible for processing the input text, while the decoder produces the output text. The input and output text is represented as token sequences that are mapped to high-dimensional vectors with the help of an embedding layer.

The encoder is a stack of transformer layers whose self-attention is restricted so that its cost grows roughly linearly with input length. Two variants are provided: Local attention, where each token attends only to neighboring tokens within a fixed window, and Transient Global (TGlobal) attention, where each token additionally attends to summary tokens computed on the fly from fixed-size blocks of the input. The encoded input is made available to the decoder through cross-attention.

The decoder comprises a series of transformer layers that produce the output text. It generates the output token by token, conditioned on the encoded input text and on the tokens generated so far. During training, the model is encouraged to predict the correct next output token given the previous tokens.

Model Type: Language model

Language(s) (NLP): English, German, French, Romanian, and many more.

License: Apache 2.0

Model highlights

Following are the key highlights of the model.

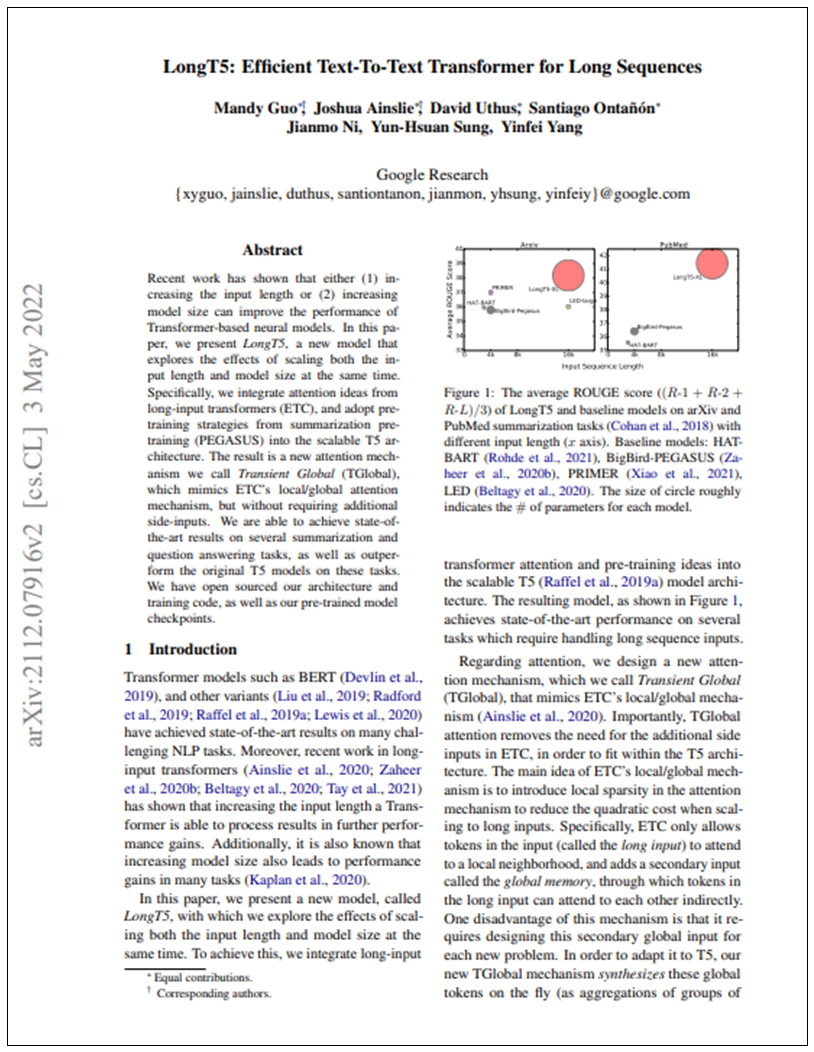

- LongT5 explores the effects of scaling both input length and model size at the same time.

- LongT5 integrates attention ideas from long-input transformers and adopts pretraining strategies from summarization pretraining into the scalable T5 architecture.

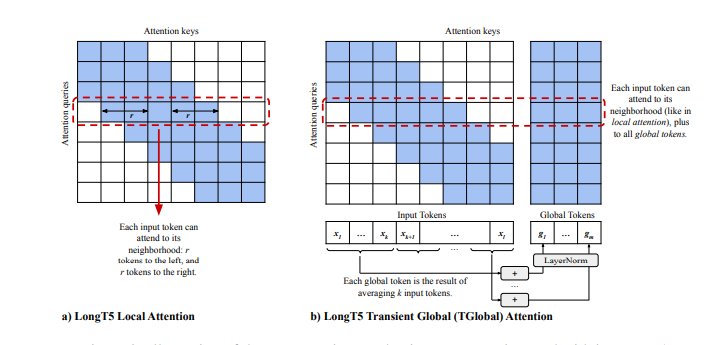

- LongT5 introduces a new attention mechanism called Transient Global (TGlobal), which mimics ETC's local/global attention mechanism without requiring additional side inputs.

- LongT5 achieves state-of-the-art results on several summarization and question-answering tasks.

- LongT5 outperforms the original T5 models on these tasks.

- LongT5's architecture, training code, and pre-trained model checkpoints are open-sourced.
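The local half of the local/global attention idea mentioned in the highlights above can be pictured as a banded mask: each token attends only to neighbors within a fixed radius. The following is a minimal sketch in plain Python, not LongT5's implementation; the function names and the `radius` parameter are chosen here for illustration.

```python
def local_attention_mask(seq_len: int, radius: int) -> list[list[bool]]:
    """mask[i][j] is True when token i may attend to token j."""
    return [
        [abs(i - j) <= radius for j in range(seq_len)]
        for i in range(seq_len)
    ]


def attended_pairs(mask: list[list[bool]]) -> int:
    """Count the (query, key) pairs that would actually be computed."""
    return sum(sum(row) for row in mask)


mask = local_attention_mask(seq_len=8, radius=2)
# Full attention would compute 8 * 8 = 64 pairs; the banded mask needs fewer.
print(attended_pairs(mask))
```

Because each row has at most `2 * radius + 1` allowed positions, the number of computed pairs grows linearly with sequence length rather than quadratically.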

Training Details

Training data

Long T5 is trained on diverse text data in 24 languages, including Wikipedia, web pages, and books. The training data is preprocessed to convert to a text-to-text format, with each example containing a source text and a target text representing the desired output.
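As a rough illustration of the text-to-text format described above, each example can be stored as a source string and a target string. This is a sketch only: the helper name, the dictionary keys, and the `summarize` task prefix are assumptions made for the example, not the preprocessing code used for Long T5.

```python
def to_text_to_text(task: str, source: str, target: str) -> dict[str, str]:
    """Wrap one example as a (source, target) pair with a task prefix."""
    return {
        "source": f"{task}: {source.strip()}",
        "target": target.strip(),
    }


example = to_text_to_text(
    task="summarize",
    source="  The committee met for three hours and approved the budget. ",
    target="Committee approves budget.",
)
print(example["source"])
print(example["target"])
```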

Training dataset size

The size of the training dataset is not stated explicitly in the paper. However, the authors state that they use a preprocessed dataset similar to the original T5 model and containing 37B tokens from various languages.

Training Procedure

Long T5 is pre-trained using a variant of the Masked Language Modeling (MLM) objective borrowed from summarization pretraining (PEGASUS-style): instead of masking only random individual tokens, key sentences are masked out of the input sequence, and the model is trained to generate the masked content.
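One summarization-style variant of this masking idea hides a whole sentence instead of individual tokens and asks the model to regenerate it. The toy sketch below picks the sentence that shares the most words with the rest of the document. The word-overlap score is a crude stand-in for the ROUGE-based scoring used in summarization pretraining, and the `<mask_1>` token name and all function names are assumptions for illustration.

```python
import re

MASK = "<mask_1>"  # placeholder token name, chosen for this example


def split_sentences(text: str) -> list[str]:
    """Naive sentence splitter on ., ! and ? followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def overlap_score(sentence: str, others: list[str]) -> float:
    """Fraction of the sentence's words that also occur elsewhere."""
    words = set(sentence.lower().split())
    rest = set(" ".join(others).lower().split())
    return len(words & rest) / max(len(words), 1)


def make_example(text: str) -> dict[str, str]:
    """Mask the highest-scoring sentence and use it as the target."""
    sentences = split_sentences(text)
    scores = [
        overlap_score(s, sentences[:i] + sentences[i + 1:])
        for i, s in enumerate(sentences)
    ]
    best = max(range(len(sentences)), key=scores.__getitem__)
    source = " ".join(MASK if i == best else s for i, s in enumerate(sentences))
    return {"source": source, "target": sentences[best]}


doc = "Cats sleep a lot. Cats also hunt mice at night. The weather was cold."
print(make_example(doc))
```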

Training Observations

The Long T5 model was trained using a curriculum learning approach. Hyperparameter tuning and parallel training across multiple accelerators were used to achieve the best performance. Despite the computational cost, the Long T5 model achieved strong results on several NLP benchmarks, including LAMBADA and SuperGLUE.

Model Types

Several versions of the Long T5 model have been trained on the same dataset. Here are the variations of the Long T5 model based on parameter count:

| Model | Parameters | Highlights |
| --- | --- | --- |
| LongT5-Local-Base | 250 million | Text classification, language modeling |
| LongT5-TGlobal-Base | 250 million | Question answering, summarization, translation |
| LongT5-Local-Large | 780 million | Text classification, language modeling |
| LongT5-TGlobal-Large | 780 million | Question answering, summarization, translation |
| LongT5-TGlobal-XL | 3 billion | Question answering, summarization, translation |

Business Applications

Long T5 performs well in tasks like Machine Reading Comprehension, Scientific Paper Summarization, Biomedical Text Mining, Patent Summarization, and News Article Summarization. These tasks have multiple business applications. Some examples are listed below:

| Task | Business Use Cases | Examples |
| --- | --- | --- |
| Machine Reading Comprehension | Customer support, chatbots, search engines, virtual assistants | Given a news article and questions, answer the questions (CNN/Daily Mail) |
| News summarization | Language translation | Customer service chatbots |
| Scientific Paper Summarization | Academic research, patent analysis | Summarize scientific papers (arXiv) |
| Biomedical Text Mining | Healthcare, drug discovery, clinical trial analysis | Extract named entities, relationships, classify text (PubMed) |
| Patent Summarization | Patent analysis, intellectual property rights, technology patents | Summarize patents (BigPatent) |
| News Article Summarization | News websites, content creation, social media | Summarize news articles (MediaSum) |
| Multi-Document Summarization | Content creation, journalism, research | Generate summary from multiple news articles (Multi-News) |
| Question Answering on Long-Form Text | Customer support, chatbots, search engines, virtual assistants | Answer questions from long-form text (Natural Questions) |
| Open-Domain Question Answering | Customer support, chatbots, search engines, virtual assistants | Answer general knowledge questions (TriviaQA) |

Model Features

Long T5 combines several techniques that improve its effectiveness and scalability compared to traditional models. The key features are described below.

Curriculum Learning

The Long T5 model is trained using a curriculum learning approach that gradually increases the length and complexity of the training examples, which has been shown to improve the quality of the learned representations.
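A length-based curriculum of the kind described above can be sketched by sorting examples from short to long and widening the training pool stage by stage. This is a generic illustration, not Long T5's training code; the function name and the choice of word count as the difficulty measure are assumptions.

```python
def curriculum_stages(examples: list[str], num_stages: int) -> list[list[str]]:
    """Return cumulative training pools, shortest examples first."""
    ordered = sorted(examples, key=lambda text: len(text.split()))
    stage_size = -(-len(ordered) // num_stages)  # ceiling division
    return [ordered[: stage_size * (k + 1)] for k in range(num_stages)]


texts = ["a b c d e", "a", "a b c", "a b"]
for stage, pool in enumerate(curriculum_stages(texts, num_stages=2), start=1):
    print(stage, pool)
```

Each later stage keeps the earlier, shorter examples and adds longer ones, so the model sees progressively longer inputs.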

T-Global Attention

The Long T5 model uses a variant of the transformer attention mechanism called T-Global (Transient Global, or TGlobal) attention. In addition to its local neighborhood, each token attends to summary tokens that represent blocks of the whole input sequence, which lets it pick up global patterns. This improves performance on tasks such as question answering and summarization.
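The global part of this mechanism can be sketched as follows: the input is cut into fixed-size blocks, each block is summarized into one vector, and every token may attend to its local window plus all block summaries. This is a simplified illustration under stated assumptions: a plain mean is used as the block summary, and the function names, `block_size`, and `radius` are chosen for the example rather than taken from the LongT5 code.

```python
def block_summaries(embeddings: list[list[float]], block_size: int) -> list[list[float]]:
    """Average the token vectors of each block into one summary vector."""
    summaries = []
    for start in range(0, len(embeddings), block_size):
        block = embeddings[start:start + block_size]
        dim = len(block[0])
        summaries.append([sum(vec[d] for vec in block) / len(block) for d in range(dim)])
    return summaries


def keys_for_token(i: int, seq_len: int, radius: int, num_blocks: int) -> list[tuple[str, int]]:
    """List what token i attends to: nearby tokens, then every block summary."""
    local = [("token", j) for j in range(max(0, i - radius), min(seq_len, i + radius + 1))]
    global_keys = [("global", b) for b in range(num_blocks)]
    return local + global_keys


tokens = [[1.0, 0.0], [3.0, 2.0], [5.0, 4.0], [7.0, 6.0], [9.0, 8.0]]
summaries = block_summaries(tokens, block_size=2)
print(summaries)
print(keys_for_token(0, seq_len=len(tokens), radius=1, num_blocks=len(summaries)))
```

The summaries are recomputed from the input at every layer rather than stored as extra inputs, which is why no side inputs are needed.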

Reversible Residual Layers

Long T5 uses reversible residual layers to reduce the memory requirements of the model. These layers allow the activations from the forward pass to be reconstructed in the backward pass. This helps to save memory during training and allows for longer sequences to be processed.
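The reconstruction property described above can be shown with a generic reversible residual block. The sketch below illustrates the technique in general, not code from Long T5; `f` and `g` are arbitrary stand-ins for the sub-layers, chosen only to make the arithmetic easy to follow.

```python
def f(x: list[float]) -> list[float]:
    """Stand-in for one sub-layer (for example, attention)."""
    return [0.5 * v + 1.0 for v in x]


def g(x: list[float]) -> list[float]:
    """Stand-in for the other sub-layer (for example, feed-forward)."""
    return [v * v for v in x]


def forward(x1: list[float], x2: list[float]) -> tuple[list[float], list[float]]:
    y1 = [a + b for a, b in zip(x1, f(x2))]
    y2 = [a + b for a, b in zip(x2, g(y1))]
    return y1, y2


def inverse(y1: list[float], y2: list[float]) -> tuple[list[float], list[float]]:
    # Undo the two additions in reverse order to recover the inputs.
    x2 = [a - b for a, b in zip(y2, g(y1))]
    x1 = [a - b for a, b in zip(y1, f(x2))]
    return x1, x2


y1, y2 = forward([1.0, 2.0], [3.0, 4.0])
print(inverse(y1, y2))  # recovers the original inputs without storing them
```

Because the inputs can be recomputed from the outputs, the forward activations do not have to be kept in memory for the backward pass.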

Low-Rank Factorization

Long T5 employs low-rank factorization to reduce the number of parameters in the model. This technique decomposes a large weight matrix into the product of two smaller matrices. With fewer parameters, the model can be trained more efficiently.
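The parameter saving from low-rank factorization is simple arithmetic: a matrix of shape rows x cols is replaced by two factors of shape rows x rank and rank x cols. The sketch below is a generic illustration; the matrix sizes and the rank are made-up numbers, not values from Long T5.

```python
def dense_params(rows: int, cols: int) -> int:
    """Parameters in a full rows x cols weight matrix."""
    return rows * cols


def low_rank_params(rows: int, cols: int, rank: int) -> int:
    """Parameters in the two factors (rows x rank) and (rank x cols)."""
    return rank * (rows + cols)


def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Multiply the two factors back into a full-size matrix."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


rows, cols, rank = 1024, 4096, 64
print(dense_params(rows, cols), low_rank_params(rows, cols, rank))

# A tiny rank-1 example: a 3x1 factor times a 1x2 factor gives a 3x2 matrix.
print(matmul([[1.0], [2.0], [3.0]], [[4.0, 5.0]]))
```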

Kernelized Attention

Long T5 uses kernelized attention to improve the quality of the attention mechanism. This technique replaces the dot product in the attention mechanism with a kernel function. The kernel function helps to capture more complex relationships between the query and the keys, improving the quality of the attention mechanism.
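Kernelized attention in its common "linear attention" form replaces the softmax over dot products with a kernel k(q, k) = phi(q) · phi(k), which allows the key/value sums to be computed once and reused for every query. The sketch below shows that the explicit and the factored computations agree. It is a generic illustration, not Long T5 code, and the feature map elu(x) + 1 is one common choice assumed here.

```python
import math


def phi(x: list[float]) -> list[float]:
    """Feature map elu(x) + 1, which keeps every feature positive."""
    return [v + 1.0 if v > 0 else math.exp(v) for v in x]


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def attention_explicit(queries, keys, values):
    """Compute kernel weights for every (query, key) pair: quadratic cost."""
    outputs = []
    for q in queries:
        weights = [dot(phi(q), phi(k)) for k in keys]
        total = sum(weights)
        outputs.append(
            [sum(w * v[d] for w, v in zip(weights, values)) / total
             for d in range(len(values[0]))]
        )
    return outputs


def attention_linear(queries, keys, values):
    """Accumulate key/value sums once, then reuse them for each query."""
    d_feat, d_val = len(keys[0]), len(values[0])
    kv = [[0.0] * d_val for _ in range(d_feat)]
    k_sum = [0.0] * d_feat
    for k, v in zip(keys, values):
        fk = phi(k)
        for a in range(d_feat):
            k_sum[a] += fk[a]
            for b in range(d_val):
                kv[a][b] += fk[a] * v[b]
    outputs = []
    for q in queries:
        fq = phi(q)
        norm = dot(fq, k_sum)
        outputs.append(
            [sum(fq[a] * kv[a][b] for a in range(d_feat)) / norm for b in range(d_val)]
        )
    return outputs


qs = [[0.5, -1.0], [1.5, 0.2]]
ks = [[1.0, 0.0], [-0.5, 2.0], [0.3, 0.3]]
vs = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
print(attention_explicit(qs, ks, vs))
print(attention_linear(qs, ks, vs))
```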

Model Tasks

Long T5 has been applied to the following tasks.

Machine Reading Comprehension

The CNN/Daily Mail dataset is used for machine reading comprehension tasks; when given a news article and a set of related questions, the task is to answer the questions. The Long T5 language model trained on this dataset can be used for reading comprehension, question answering, and information retrieval tasks.

Scientific Paper Summarization

The arXiv dataset is used for scientific paper summarization tasks. The Long T5 language model trained on this dataset can be used for tasks such as text summarization, information retrieval, and machine translation in the scientific domain.

Biomedical Text Mining

The PubMed dataset is used for biomedical text-mining tasks. The Long T5 language model trained on this dataset can be used for tasks such as named entity recognition, relationship extraction, and text classification in the biomedical domain.

Patent Summarization

The BigPatent dataset is used for patent summarization tasks. The Long T5 language model trained on this dataset can be used for tasks such as text summarization, information retrieval, and machine translation in the patent domain.

News Article Summarization

The MediaSum dataset is used for abstractive summarization tasks on news articles. The Long T5 language model trained on this dataset can be used for tasks such as text summarization, information retrieval, and machine translation in the news domain.

Multi-Document Summarization

The Multi-News dataset is used for multi-document summarization tasks, where given a set of news articles, the task is to generate a summary that covers the main points from all the articles. The Long T5 language model trained on this dataset can be used for tasks such as text summarization, information retrieval, and machine translation in the news domain.

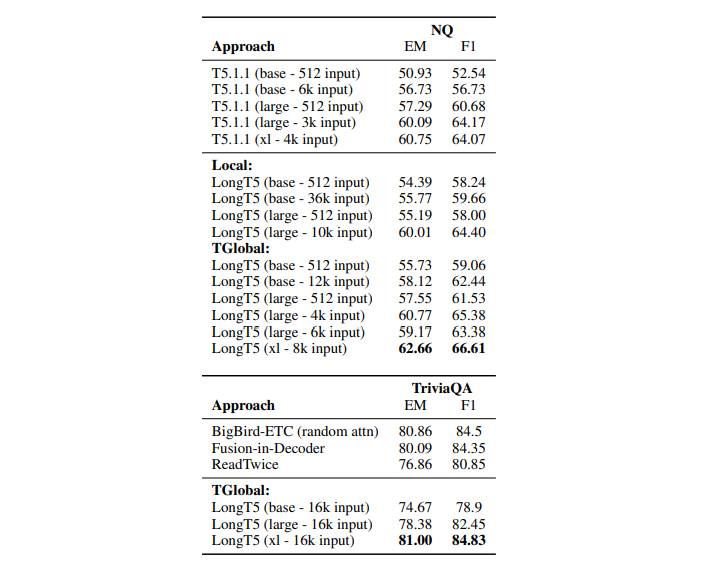

Question Answering on Long-Form Text

The Natural Questions (NQ) dataset is used for question-answering tasks on long-form text, such as Wikipedia articles. The Long T5 language model trained on this dataset can be used for reading comprehension, question answering, and information retrieval on long-form text.

Open-Domain Question Answering

The TriviaQA dataset is used for open-domain question-answering tasks. The Long T5 language model trained on this dataset can be used for reading comprehension, question answering, and information retrieval on general knowledge questions.

Getting Started

Clone the Long T5 GitHub repository to your local machine using the following command:

git clone https://github.com/google-research/longt5.git

Navigate to the longt5 directory using the following command:

cd longt5

Create a Python virtual environment using the following command:

python3 -m venv env

Activate the virtual environment using the following command:

source env/bin/activate

Install the required packages using the following command:

pip install -r requirements.txt

Install Long T5 using the following command:

pip install -e .

Download a pre-trained Long T5 model checkpoint from the Hugging Face model hub, or train your own Long T5 model.

Run inference on the pre-trained Long T5 model using the 'run_t5.py' script provided in the 'longt5/scripts' directory.

Fine-tuning

Fine-tuning Long T5 involves training the model on a downstream task using task-specific training data. Common approaches to fine-tuning Long T5 are described below:

Standard fine-tuning

This technique starts from the pre-trained Long T5 weights and continues training the entire model on task-specific data. Because Long T5 is a text-to-text model, the downstream task is expressed as input text and target text, and all parameters are updated on the downstream task data.

Adapters

Adapters are small, task-specific neural networks added to pre-trained models to increase their adaptability to new tasks. With this technique, task-specific adapters are added to the pre-trained Long T5 model, and only the adapters are fine-tuned rather than the entire model. This lowers the computational cost of fine-tuning and facilitates knowledge transfer across tasks.
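A typical adapter is a small bottleneck network with a residual connection: project the hidden state down, apply a nonlinearity, project back up, and add the result to the input. The sketch below is a generic plain-Python illustration with made-up weights, not an adapter implementation for Long T5; the function names are assumptions.

```python
def matvec(matrix: list[list[float]], vector: list[float]) -> list[float]:
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def adapter(hidden: list[float], w_down: list[list[float]], w_up: list[list[float]]) -> list[float]:
    """Bottleneck adapter: hidden + W_up @ relu(W_down @ hidden)."""
    bottleneck = [max(0.0, v) for v in matvec(w_down, hidden)]
    update = matvec(w_up, bottleneck)
    return [h + u for h, u in zip(hidden, update)]


hidden = [1.0, -2.0, 3.0, 0.5]
w_down = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]  # 4 -> 2
w_up_zero = [[0.0, 0.0] for _ in range(4)]             # 2 -> 4, zero-initialized

# With a zero up-projection the adapter starts out as the identity function,
# so inserting it does not disturb the pre-trained model.
print(adapter(hidden, w_down, w_up_zero))
```

Only `w_down` and `w_up` would be trained, which is why the number of updated parameters stays small.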

Multi-task learning

Multi-task learning is teaching a model to perform multiple tasks simultaneously. This technique entails fine-tuning the Long T5 model on multiple downstream tasks simultaneously to improve overall performance across all tasks. The Long T5 model can be fine-tuned on a diverse set of tasks such as summarization, question answering, and translation to learn useful representations across multiple natural language processing tasks.

Knowledge distillation

Transferring knowledge from a large, pre-trained model to a smaller one is known as knowledge distillation. A smaller Long T5 model is trained to mimic the behavior of a larger Long T5 model pre-trained on a large corpus of data. The smaller model can then be fine-tuned on task-specific data, benefiting from the knowledge transferred from the larger model.
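The "mimic the larger model" step is usually expressed as a loss between the teacher's and the student's softened output distributions. The following is a minimal sketch of that loss for a single token position, assuming the common temperature-scaled KL divergence formulation; the function names and the logits are illustrative, not taken from any Long T5 training recipe.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


teacher = [2.0, 1.0, 0.1]
print(distillation_loss([2.0, 1.0, 0.1], teacher))  # student matches teacher
print(distillation_loss([0.1, 1.0, 2.0], teacher))  # student disagrees
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge.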

Domain adaptation

Domain adaptation is the process of modifying a pre-trained model to perform well in a specific domain. This technique involves fine-tuning the pre-trained Long T5 model on data from the target domain to improve its performance there.

Benchmarking

The image below illustrates the two attention mechanisms experimented with in LongT5.

The image below shows QA results: (1) NQ results comparing T5.1.1 and LongT5. Base/Large models are trained on 4x8 TPUv3 with no model partitioning; XL models are trained on 8x16 TPUv3 with 8 partitions. (2) TriviaQA results compared to top models on the leaderboard, with LongT5 scores using Local and TGlobal attention.

Sample Codes

An example showing how to evaluate a fine-tuned LongT5 model on the PubMed dataset is below.

    import evaluate
    from datasets import load_dataset
    from transformers import AutoTokenizer, LongT5ForConditionalGeneration

    # Validation split of the PubMed subset of the scientific_papers dataset.
    dataset = load_dataset("scientific_papers", "pubmed", split="validation")

    checkpoint = "Stancld/longt5-tglobal-large-16384-pubmed-3k_steps"

    # Load the fine-tuned checkpoint onto the GPU in half precision.
    model = LongT5ForConditionalGeneration.from_pretrained(checkpoint).to("cuda").half()
    tokenizer = AutoTokenizer.from_pretrained(checkpoint)


    def generate_answers(batch):
        # Tokenize the articles, padding or truncating to 16,384 tokens.
        inputs_dict = tokenizer(
            batch["article"], max_length=16384, padding="max_length", truncation=True, return_tensors="pt"
        )
        input_ids = inputs_dict.input_ids.to("cuda")
        attention_mask = inputs_dict.attention_mask.to("cuda")
        # Generate abstracts with beam search (2 beams, up to 512 tokens).
        output_ids = model.generate(input_ids, attention_mask=attention_mask, max_length=512, num_beams=2)
        batch["predicted_abstract"] = tokenizer.batch_decode(output_ids, skip_special_tokens=True)
        return batch


    # Run generation over the dataset two examples at a time.
    result = dataset.map(generate_answers, batched=True, batch_size=2)

    # Score the predicted abstracts against the reference abstracts.
    rouge = evaluate.load("rouge")
    scores = rouge.compute(predictions=result["predicted_abstract"], references=result["abstract"])
    print(scores)
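To make the ROUGE score in the example above less opaque, the sketch below computes a simplified ROUGE-1 F1 score from unigram overlap. It is an illustration only: the `rouge` metric loaded through `evaluate` applies its own tokenization and reports several variants, so its numbers will not match this toy function exactly. The function name is an assumption.

```python
from collections import Counter


def rouge1_f1(prediction: str, reference: str) -> float:
    """Unigram-overlap F1 between a predicted and a reference text."""
    pred = Counter(prediction.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((pred & ref).values())  # clipped count of shared words
    if overlap == 0:
        return 0.0
    precision = overlap / sum(pred.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


print(rouge1_f1("the cat sat", "the cat sat on the mat"))
```

Here every predicted word appears in the reference (precision 1.0), but only half of the reference words are covered (recall 0.5), which the F1 score balances.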

Limitations

While Long T5 is a highly versatile and powerful NLP model, it does have some limitations:

Computation resources

Long T5 models require massive computational resources, including large amounts of RAM, GPU memory, and computing power, making it difficult for smaller research teams or organizations with limited resources to train and fine-tune the model.

Inability to handle certain types of data

While Long T5 can handle a wide range of NLP tasks, it may not perform well on certain data types, such as low-resource languages or highly technical jargon.

Need for large training datasets

Like other large-scale language models, Long T5 requires massive amounts of training data to achieve optimal performance. This can be a challenge for organizations with limited access to high-quality training data.

Model biases

Like all language models, Long T5 may be prone to biases based on the training data it is exposed to. These biases can manifest in the model's outputs and may require additional post-processing or filtering.