LLMs Explained

Cerebras-GPT

Cerebras, a Silicon Valley AI company, released Cerebras-GPT to provide an open alternative to the tightly controlled, proprietary systems available today. The family consists of models with 111 million, 256 million, 590 million, 1.3 billion, 2.7 billion, 6.7 billion, and 13 billion parameters, all trained on 16 CS-2 systems in the company's Andromeda AI supercomputer. The company released the pre-trained models and code and claimed that Cerebras-GPT is the first open and reproducible work comparing compute-optimal model scaling to models trained on fixed dataset sizes.


An Overview of Cerebras-GPT

Cerebras-GPT models are trained on the Eleuther Pile dataset following DeepMind's Chinchilla scaling rules for efficient pre-training.
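The Chinchilla rule of thumb is roughly 20 training tokens per parameter, and training compute is commonly approximated as C ≈ 6·N·D FLOPs for N parameters and D tokens. The sketch below applies those two standard approximations to the seven model sizes listed above. The 20:1 ratio and the 6ND formula come from the scaling-law literature rather than from this page, and the nominal sizes are used instead of exact parameter counts, so treat the output as a rough budget.

```python
from typing import Tuple

# Nominal parameter counts of the seven Cerebras-GPT variants.
MODEL_SIZES = {
    "111M": 111e6,
    "256M": 256e6,
    "590M": 590e6,
    "1.3B": 1.3e9,
    "2.7B": 2.7e9,
    "6.7B": 6.7e9,
    "13B": 13e9,
}

# Approximate Chinchilla ratio of training tokens to parameters (assumption).
TOKENS_PER_PARAM = 20


def chinchilla_budget(num_params: float,
                      tokens_per_param: float = TOKENS_PER_PARAM) -> Tuple[float, float]:
    """Return (training tokens, approximate training FLOPs) for one model size."""
    tokens = tokens_per_param * num_params
    # Common approximation: about 6 FLOPs per parameter per training token.
    flops = 6 * num_params * tokens
    return tokens, flops


if __name__ == "__main__":
    for name, n in MODEL_SIZES.items():
        tokens, flops = chinchilla_budget(n)
        print(f"{name:>5}: {tokens / 1e9:7.1f}B tokens, {flops:.2e} FLOPs")
```

Under these assumptions the 13B model would see about 260B tokens, which is still less than the roughly 371B tokens in the tokenized Pile training set described under Preprocessing.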

Better accuracy than similar-sized publicly available models

Improved Accuracy

The publisher claims that the Cerebras-GPT 13B model shows improved accuracy on most downstream tasks compared to other similar-sized publicly available models.

Improves the compute-optimal frontier loss by 0.4%

Improved Frontier Loss

The models are configured using µP, enabling direct hyperparameter transfer from smaller to larger models and improving the compute-optimal frontier loss by 0.4%.

Model loss is expected to be ∼1.2% better than GPT-NeoX 20B.

Better than GPT-NeoX

If the model is trained with FLOPs equivalent to GPT-NeoX 20B, the publisher expects the Cerebras-GPT model loss to be ∼1.2% better than GPT-NeoX 20B.


About Model

Cerebras-GPT is an autoregressive transformer similar to GPT-2 and GPT-3. The largest (13B) variant has a hidden size of 5,120, 40 layers, a head size of 128, and a filter (feed-forward) size of 20,480, with a context (sequence) length of 2,048 tokens. The models are trained from randomly initialized weights, and the base variants use standard parameterization (SP) initialization. They are released under the Apache 2.0 license. The models are evaluated with text-prediction cross-entropy on upstream tasks and text-generation accuracy on downstream tasks. Due to limited compute resources, they were not evaluated for prediction uncertainty or calibration. Variability analysis was performed only for the small Cerebras-GPT variants, using multiple runs with different random initializations and data-loader seeds to assess variance in task performance.
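As a rough check that these dimensions add up to a 13B-class model, the sketch below counts the weights of a GPT-2-style decoder. It assumes learned absolute position embeddings, input and output embeddings tied (as in GPT-2), and the 50,257-token GPT-2 vocabulary mentioned under Training data. Biases and LayerNorm parameters are ignored, so the result is an approximation, not an official parameter count.

```python
def gpt_param_count(n_layers: int, d_model: int, d_ffn: int,
                    vocab_size: int, n_ctx: int) -> int:
    """Approximate weight count of a GPT-2/GPT-3-style decoder.

    Assumes tied input/output embeddings and learned position embeddings;
    biases and LayerNorm parameters are not counted.
    """
    attention = 4 * d_model * d_model   # Q, K, V and output projections
    mlp = 2 * d_model * d_ffn           # up- and down-projections of the feed-forward block
    embeddings = vocab_size * d_model + n_ctx * d_model  # token + position embeddings
    return n_layers * (attention + mlp) + embeddings


if __name__ == "__main__":
    n_layers, d_model, head_size, d_ffn, n_ctx = 40, 5120, 128, 20480, 2048
    vocab_size = 50257  # GPT-2 BPE vocabulary (assumption)
    print("attention heads:", d_model // head_size)
    total = gpt_param_count(n_layers, d_model, d_ffn, vocab_size, n_ctx)
    print(f"approx. parameters: {total:,}")
```

With these settings the estimate comes to about 12.85 billion weights across 40 attention heads per layer, consistent with the "13B" label once biases and normalization layers are added.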

Model Highlights

The Cerebras-GPT family of models was released to help researchers study the scaling laws of large language models (LLMs). The models are open-source and use open datasets, which makes it easier for researchers to reproduce the results and compare different approaches. The models were also trained on Cerebras hardware, which demonstrates the scalability of LLM training on this platform.

- Cerebras-GPT models are configured using µP (Maximal Update Parameterization), which enables direct hyperparameter transfer from smaller to larger models and improves the compute-optimal frontier loss by 0.4%.

- If the models are trained with FLOPs equivalent to GPT-NeoX 20B, the publisher expects the Cerebras-GPT model loss to be ∼1.2% better than that of GPT-NeoX 20B.

- The Cerebras-GPT 13B model shows the best average downstream result among models of comparable size on a suite of seven common-sense reasoning tasks, in both the zero-shot and five-shot settings.

- Across model sizes, the µP models exhibit an average of 0.43% improved Pile test loss and 1.7% higher average downstream task accuracy compared to the standard parameterization (SP) models.
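To make "direct hyperparameter transfer" concrete, here is a minimal sketch of one common µP formulation for Adam (following Yang et al., Tensor Programs V): hyperparameters are tuned once on a narrow proxy model, and when the width grows by a factor m, the hidden-layer learning rate is divided by m, the hidden-weight initialization variance is divided by m, and the output logits are multiplied by 1/m. The `MuPConfig` and `scale_hparams` names, the base width, and the base values below are hypothetical placeholders, and Cerebras-GPT's exact recipe may include additional multipliers.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class MuPConfig:
    base_width: int        # width of the small proxy model used for tuning (hypothetical)
    base_lr: float         # Adam learning rate tuned at base_width (hypothetical)
    base_init_std: float   # init std for hidden weights tuned at base_width (hypothetical)


def scale_hparams(cfg: MuPConfig, width: int) -> Dict[str, float]:
    """Scale proxy-model hyperparameters to a wider model under a µP-style rule for Adam."""
    m = width / cfg.base_width  # width multiplier
    return {
        # Hidden (matrix-like) weights: Adam LR shrinks as 1/m.
        "hidden_lr": cfg.base_lr / m,
        # Hidden weights: init variance shrinks as 1/m, so the std shrinks as 1/sqrt(m).
        "hidden_init_std": cfg.base_init_std / m ** 0.5,
        # Embedding LR is kept at the tuned value.
        "embedding_lr": cfg.base_lr,
        # Output logits are multiplied by 1/m.
        "output_logit_mult": 1.0 / m,
    }


if __name__ == "__main__":
    proxy = MuPConfig(base_width=256, base_lr=6e-3, base_init_std=0.08)
    for width in (256, 1024, 5120):
        print(width, scale_hparams(proxy, width))
```

The point of this design is that the tuning sweep runs only on the cheap proxy; the larger models reuse its results through fixed scaling rules instead of a fresh sweep.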

Training Details

Training data

Cerebras-GPT is trained on the Pile dataset. The Pile was cleaned with the ftfy library to normalize text and then filtered using scripts provided by Eleuther. The data was then tokenized with byte-pair encoding using the GPT-2 vocabulary.

Preprocessing

The Pile dataset was preprocessed using tools and instructions from Eleuther and the community. The publisher cleaned the raw text with the ftfy library to normalize text and remove corrupted Unicode. The publisher's tokenized version of the Pile training set contains roughly 371B tokens. The publisher also found that shuffling samples across all training-set documents improves validation loss by 0.7-1.5% compared to shuffling within a window of a few thousand documents.
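The difference between the two shuffling strategies can be sketched with Python's standard library. A windowed shuffle only permutes documents within consecutive blocks, so documents that were adjacent in the source files (for example, if the files group similar documents together) stay close to each other, while a global shuffle can move any document anywhere. The function names and the tiny window size are illustrative; this is not the publisher's data loader.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def global_shuffle(docs: Sequence[T], seed: int = 0) -> List[T]:
    """Shuffle across the whole training set: any document can land anywhere."""
    rng = random.Random(seed)
    shuffled = list(docs)
    rng.shuffle(shuffled)
    return shuffled


def windowed_shuffle(docs: Sequence[T], window: int, seed: int = 0) -> List[T]:
    """Shuffle only within consecutive blocks of `window` documents."""
    rng = random.Random(seed)
    shuffled: List[T] = []
    for start in range(0, len(docs), window):
        block = list(docs[start:start + window])
        rng.shuffle(block)
        shuffled.extend(block)
    return shuffled


if __name__ == "__main__":
    docs = list(range(12))  # stand-ins for documents, in source-file order
    print("global:  ", global_shuffle(docs, seed=1))
    print("windowed:", windowed_shuffle(docs, window=4, seed=1))
```

In the windowed output every document stays inside its original block of four, whereas the global shuffle mixes documents from the entire list; at Pile scale the window would be a few thousand documents rather than four.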

Training Hardware

Cerebras-GPT is trained on the Pile dataset using Andromeda AI Supercomputer: Cerebras Wafer-Scale Cluster with 16 Cerebras CS-2 systems.

Evaluation Data

Upstream (pre-training) evaluations used the Pile validation and test splits. Downstream evaluations were performed on standardized tests using the Eleuther lm-eval-harness:

- Cloze and completion tasks: LAMBADA, HellaSwag

- Common-sense reasoning tasks: PIQA, ARC, OpenBookQA

- Winograd schema-style tasks: WinoGrande
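Multiple-choice tasks such as PIQA, ARC, OpenBookQA, and HellaSwag are commonly scored by computing the model's log-likelihood of each candidate answer given the context and picking the highest-scoring one; lm-eval-harness also reports a variant normalized by answer length. The sketch below shows that idea with a hypothetical `logprob_fn` standing in for the language model and character length as one possible normalization. It is a simplification: the harness applies task-specific details (LAMBADA, for instance, is scored on last-word prediction).

```python
from typing import Callable, Sequence, Tuple

# Hypothetical scorer: returns per-token log-probabilities the model assigns
# to `continuation` given `context`. In practice this would call the LM.
TokenLogprobFn = Callable[[str, str], Sequence[float]]


def pick_answer(context: str, choices: Sequence[str],
                logprob_fn: TokenLogprobFn, normalize: bool = False) -> int:
    """Return the index of the choice with the highest (optionally length-normalized) log-likelihood."""
    scores = []
    for choice in choices:
        total = sum(logprob_fn(context, choice))
        if normalize:
            # One possible normalization: divide by the choice's character length.
            total /= max(len(choice), 1)
        scores.append(total)
    return max(range(len(choices)), key=scores.__getitem__)


def accuracy(examples: Sequence[Tuple[str, Sequence[str], int]],
             logprob_fn: TokenLogprobFn, normalize: bool = False) -> float:
    """Fraction of (context, choices, gold_index) examples answered correctly."""
    correct = sum(
        pick_answer(ctx, choices, logprob_fn, normalize) == gold
        for ctx, choices, gold in examples
    )
    return correct / len(examples)


if __name__ == "__main__":
    # Toy scorer: words already seen in the context are "likely".
    def toy_logprobs(context: str, continuation: str) -> Sequence[float]:
        seen = set(context.split())
        return [-0.5 if w in seen else -2.0 for w in continuation.split()]

    data = [("the dog barked at the", ["dog", "cat"], 0)]
    print(accuracy(data, toy_logprobs))
```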

Model Types

Here are the variations of the PaLM-E model based on parameter count:

| Model | Parameters |
|---|---|
| PaLM-E-12B | 12 Billion |
| PaLM-E-66B | 66 Billion |
| PaLM-E-84B | 84 Billion |
| PaLM-E-562B | 562 Billion |

Business Applications

T5 performs best on text summarization, question answering, and text classification. You can use this model to build business applications for use cases such as:

| Business Use Case | Task | Example |
|---|---|---|
| Online Retail | Visual Tasks | Analyzing product images to categorize them into the correct product categories. |
| Tech Support | Language Tasks | Automated customer support, answering customer queries in a conversational manner. |
| Social Media Management | Visual-Language Tasks | Analyzing user-posted images and generating appropriate descriptions or responses. |
| Factory Automation | Robotics Tasks | Controlling a robotic arm in a factory to sort items based on their visual characteristics. |
| News and Media | Visual-Language Tasks | Auto-generating captions for images and videos based on their content. |

Model Features

PaLM-E is an advanced robotics model integrating large language and visual models to perform diverse tasks while exhibiting knowledge transfer capabilities from the vision and language domains to robotics systems.

Multimodal Input Capability

PaLM-E is designed to handle multiple types of input data, including text, images, robot states, and scene embeddings. This multimodal capability allows it to understand and interpret complex, real-world data streams, enabling it to function effectively in diverse robotic tasks.
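PaLM-E handles such inputs by mapping each continuous observation into the language model's word-embedding space and interleaving the resulting vectors with ordinary text-token embeddings, forming a "multimodal sentence". The toy sketch below illustrates only that interleaving step: the vocabulary, the dimensions, the random embedding table, and the linear projection are all hypothetical stand-ins for learned components, and no language model is run.

```python
import random
from typing import Dict, List, Sequence, Union

Vector = List[float]

D_MODEL = 4   # hypothetical LM embedding width (real models use thousands)
D_IMAGE = 3   # hypothetical width of the image encoder's output features

rng = random.Random(0)

# Hypothetical word-embedding table for a tiny vocabulary.
WORD_EMBEDDINGS: Dict[str, Vector] = {
    word: [rng.uniform(-1, 1) for _ in range(D_MODEL)]
    for word in ["what", "is", "in", "?"]
}

# Hypothetical projection matrix (D_MODEL x D_IMAGE) that maps image features
# into the LM's embedding space; in a real system it would be learned.
PROJECTION: List[Vector] = [
    [rng.uniform(-1, 1) for _ in range(D_IMAGE)] for _ in range(D_MODEL)
]


def project(features: Vector) -> Vector:
    """Matrix-vector product: PROJECTION @ features."""
    return [sum(w * x for w, x in zip(row, features)) for row in PROJECTION]


# A segment is either a text word or a list of image feature vectors.
Segment = Union[str, List[Vector]]


def build_multimodal_sequence(segments: Sequence[Segment]) -> List[Vector]:
    """Interleave word embeddings and projected image features into one sequence."""
    sequence: List[Vector] = []
    for seg in segments:
        if isinstance(seg, str):
            sequence.append(WORD_EMBEDDINGS[seg])
        else:
            sequence.extend(project(f) for f in seg)
    return sequence


if __name__ == "__main__":
    image_features = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]  # e.g. two image patches
    seq = build_multimodal_sequence(["what", "is", "in", image_features, "?"])
    print(len(seq), "vectors of width", len(seq[0]))
```

The language model then processes this sequence exactly as it would a sequence of text embeddings.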

Knowledge Transfer Capabilities

This model significantly benefits from knowledge transfer from the vision and language domains. The integration of large language and visual models allows PaLM-E to leverage this knowledge, improving the effectiveness of robot learning and enabling it to solve complex, long-horizon tasks efficiently.

Visual-Language Generalist

Besides being a robust robotics model, PaLM-E is a generally capable vision-and-language model. It can perform visual tasks, such as object detection or scene classification, and language tasks, such as generating code or solving mathematical equations, demonstrating proficiency in both domains.

Task Generalization

One notable feature of PaLM-E is its ability to generalize to tasks it did not encounter during training. This zero-shot generalization lets the model adapt quickly to new tasks, increasing its utility in real-world applications.

Model Tasks

PaLM-E can perform diverse tasks across robotics, vision, and language domains due to its multimodal input capability and knowledge transfer characteristics.

Robotics Tasks

PaLM-E is designed to function effectively in a range of robotics tasks. The model can control various types of robots, making decisions based on sensory data inputs and generating action sequences to accomplish tasks. Examples include asking a mobile robot to retrieve an item from a location or commanding a tabletop robot to sort objects based on certain characteristics.

Visual Tasks

As a competent visual model, PaLM-E can analyze and interpret image data to perform tasks like describing images, detecting objects, or classifying scenes. This means the model could look at an image and describe what it sees, identify specific objects, or categorize the scene depicted in the image.

Language Tasks

PaLM-E is also adept at a variety of language tasks. This includes generating human-like text, answering questions, and performing complex tasks like solving mathematical equations or generating code. This can be particularly useful in situations that require human-like interaction or understanding.

Visual-Language Tasks

In addition to separate visual and language tasks, PaLM-E also excels in tasks that require the integration of these two domains. For example, it can answer questions about images, such as identifying the actions taking place or the objects present in an image. It has set a new state of the art on the visual-language OK-VQA benchmark, which requires understanding visual content and language.

Fine-tuning

No specific fine-tuning was performed for PaLM-E.

Benchmarking

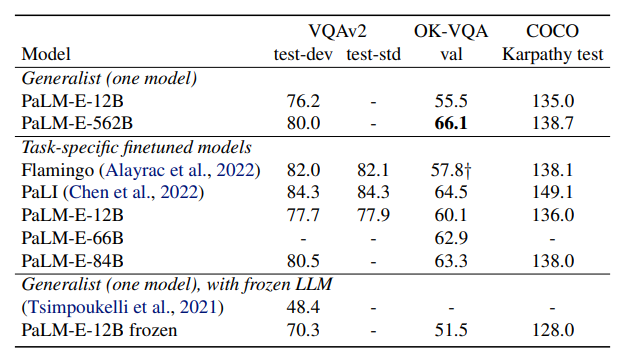

The table below shows the performance of PaLM-E on general visual-language tasks.

" Results on general visual-language tasks. For the generalist models, they are the same checkpoint across the different evaluations, while task-specific finetuned models use different finetuned models for the different tasks. COCO uses Karpathy splits. † is 32-shot on OK-VQA (not fine-tuned)."

"PaLM-E-562B model achieves the highest reported number on OK-VQA, including outperforming models fine-tuned specifically on OK-VQA. Compared to (Tsimpoukelli et al., 2021), PaLM-E achieves the highest performance on VQA v2 with a frozen LLM to the best of our knowledge. This establishes that PaLM-E is a competitive visual-language generalist, in addition to being an embodied reasoner on robotic tasks."

Sample Codes

Getting Started

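from transformers import T5Tokenizer, T5Model

# Load the pre-trained tokenizer and the bare encoder-decoder model (no LM head).
tokenizer = T5Tokenizer.from_pretrained("t5-base")
model = T5Model.from_pretrained("t5-base")

# Encoder input (batch size 1).
input_ids = tokenizer(
    "Studies have been shown that owning a dog is good for you", return_tensors="pt"
).input_ids

# Decoder input (batch size 1).
decoder_input_ids = tokenizer("Studies show that", return_tensors="pt").input_ids

# T5Model does not shift decoder inputs itself, so prepend the decoder start
# token (the pad token for T5) before the forward pass.
decoder_input_ids = model._shift_right(decoder_input_ids)

# Forward pass; last_hidden_state holds the decoder's final hidden states.
outputs = model(input_ids=input_ids, decoder_input_ids=decoder_input_ids)
last_hidden_states = outputs.last_hidden_state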

Train on TPU

- Create a Cloud Storage bucket for your data and model checkpoints at http://console.cloud.google.com/storage, and fill in the BASE_DIR parameter in the following form.

- On the main menu, click Runtime and select Change runtime type. Set "TPU" as the hardware accelerator.

- Run the following cell and follow the instructions to:
  - Set up a Colab TPU running environment
  - Verify that you are connected to a TPU device
  - Upload your credentials to the TPU to access your GCS bucket

print("Installing dependencies...")
%tensorflow_version 2.x
!pip install -q t5

import functools
import os
import sys
import time
import warnings

warnings.filterwarnings("ignore", category=DeprecationWarning)

import tensorflow.compat.v1 as tf
import tensorflow_datasets as tfds

import t5
import t5.models
import seqio

# Required to fix Colab flag parsing issue.
sys.argv = sys.argv[:1]

BASE_DIR = "gs://" #@param { type: "string" }
if not BASE_DIR or BASE_DIR == "gs://":
    raise ValueError("You must enter a BASE_DIR.")
DATA_DIR = os.path.join(BASE_DIR, "data")
MODELS_DIR = os.path.join(BASE_DIR, "models")
ON_CLOUD = True

if ON_CLOUD:
    print("Setting up GCS access...")
    # Use legacy GCS authentication method.
    os.environ['USE_AUTH_EPHEM'] = '0'
    import tensorflow_gcs_config
    from google.colab import auth

    # Set credentials for GCS reading/writing from Colab and TPU.
    TPU_TOPOLOGY = "v2-8"
    try:
        tpu = tf.distribute.cluster_resolver.TPUClusterResolver()  # TPU detection
        TPU_ADDRESS = tpu.get_master()
        print('Running on TPU:', TPU_ADDRESS)
    except ValueError:
        raise RuntimeError('ERROR: Not connected to a TPU runtime; please see the previous cell in this notebook for instructions!')
    auth.authenticate_user()
    tf.enable_eager_execution()
    tf.config.experimental_connect_to_host(TPU_ADDRESS)
    tensorflow_gcs_config.configure_gcs_from_colab_auth()

tf.disable_v2_behavior()

# Improve logging.
from contextlib import contextmanager
import logging as py_logging

if ON_CLOUD:
    tf.get_logger().propagate = False
    py_logging.root.setLevel('INFO')


@contextmanager
def tf_verbosity_level(level):
    """Temporarily set TensorFlow's logging verbosity, restoring the old level afterwards."""
    og_level = tf.logging.get_verbosity()
    tf.logging.set_verbosity(level)
    yield
    tf.logging.set_verbosity(og_level)

Limitations

While T5 is a highly versatile and powerful NLP model, it does have a few drawbacks:

Fixed Input Length

In practice, T5 is limited to fairly short input sequences, typically 512 tokens or fewer. Longer inputs are costly because self-attention compares every token with every other token, so computation grows quadratically with the input sequence length.

High Memory Consumption

Because of this quadratic growth, training time and memory consumption rise sharply for longer inputs.
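The quadratic growth is easy to quantify: self-attention builds one seq_len × seq_len score matrix per head per layer. The sketch below counts those entries and converts them to megabytes, assuming a naive implementation that materializes the scores in 32-bit floats and an encoder shape like t5-base (12 layers, 12 heads). Memory-efficient attention kernels avoid storing the full matrices, so treat these numbers as an illustration of the scaling, not measured usage.

```python
def attention_score_entries(seq_len: int, n_heads: int, n_layers: int) -> int:
    """Entries in the self-attention score matrices: one seq_len x seq_len matrix per head per layer."""
    return n_layers * n_heads * seq_len * seq_len


def score_memory_mb(seq_len: int, n_heads: int, n_layers: int,
                    bytes_per_value: int = 4) -> float:
    """Memory (in MB) to hold all score matrices for one sequence, assuming fp32 by default."""
    return attention_score_entries(seq_len, n_heads, n_layers) * bytes_per_value / 1e6


if __name__ == "__main__":
    # Assumed encoder shape, roughly like t5-base: 12 layers, 12 heads.
    for n in (512, 1024, 2048):
        print(f"{n:5d} tokens: {score_memory_mb(n, 12, 12):8.1f} MB of attention scores per sequence")
```

Each doubling of the sequence length multiplies these numbers by four, so going from 512 to 2,048 tokens increases them sixteenfold.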