LLMs Explained

Flan T5

Flan T5 is a large-scale, transformer-based language model developed by Google. It is designed to perform natural language processing (NLP) tasks such as text classification, sentiment analysis, and question answering. Flan T5 is an instruction-finetuned version of Google's T5 model, and its largest variant has about 11 billion parameters. It builds on a T5 checkpoint pre-trained on massive text data and can be further fine-tuned for various NLP tasks.


An Overview of Flan T5

Flan T5's text-to-text format and instruction finetuning make it easy to adapt to new tasks and domains, which makes it a flexible tool for a wide range of natural language processing applications.

Fine-tuned on 1.8K tasks using the standard T5 architecture

1.8K tasks

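Flan-T5 was fine-tuned on 1.8K tasks (1,836 in total) using the standard T5 encoder-decoder architecture with a sequence length of 512. The number of transformer layers depends on the model size (for example, 12 encoder and 12 decoder layers in the Base size).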

Flan-T5 XXL has 11 billion parameters

11B parameters

Flan-T5 XXL has 11 billion parameters, making it one of the largest publicly available language models at the time of its release.

Flan-T5 11B outperforms T5 11B by double-digit improvements

Outperforms T5

Flan-T5 11B outperforms T5 11B by double-digit improvements and also outperforms PaLM 62B on some challenging BIG-Bench tasks.


About Model

Google created Flan T5, a transformer-based language model. It is based on the T5 encoder-decoder architecture, whose stacked transformer layers (self-attention followed by a feed-forward network) process all input tokens in parallel. The largest variant, Flan-T5-XXL, has about 11 billion parameters. Flan T5 comes in various sizes and is used for NLP tasks such as text classification, summarization, and question answering. It starts from a T5 checkpoint pre-trained on a large web-text corpus (C4) with a denoising, span-corruption objective: a variant of BERT-style masked-token prediction in which contiguous spans of the input are replaced by sentinel tokens and the model learns to generate the missing text. Flan T5 then adds instruction finetuning on top of this pre-trained model.
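To make the span-corruption objective concrete, here is a minimal pure-Python sketch that applies it to a whitespace-tokenized sentence. It assumes the span positions are given (T5 samples them randomly during pre-training) and uses the `<extra_id_N>` sentinel naming of the Hugging Face T5 tokenizer; the helper name `span_corrupt` is hypothetical.

```python
from typing import List, Tuple


def span_corrupt(tokens: List[str], spans: List[Tuple[int, int]]) -> Tuple[str, str]:
    """Build an (input, target) pair in the T5 span-corruption style.

    `spans` holds sorted, non-overlapping (start, end) token indices, end
    exclusive. Each span becomes one sentinel in the input; the target lists
    each sentinel followed by the tokens it hid, plus one closing sentinel.
    """
    inputs: List[str] = []
    targets: List[str] = []
    cursor = 0
    for i, (start, end) in enumerate(spans):
        sentinel = f"<extra_id_{i}>"
        inputs.extend(tokens[cursor:start])  # keep the unmasked text
        inputs.append(sentinel)              # one sentinel per masked span
        targets.append(sentinel)
        targets.extend(tokens[start:end])    # text the model must reconstruct
        cursor = end
    inputs.extend(tokens[cursor:])
    targets.append(f"<extra_id_{len(spans)}>")  # closing sentinel
    return " ".join(inputs), " ".join(targets)


words = "Thank you for inviting me to your party last week .".split()
src, tgt = span_corrupt(words, [(2, 4), (8, 9)])
print(src)  # Thank you <extra_id_0> me to your party <extra_id_1> week .
print(tgt)  # <extra_id_0> for inviting <extra_id_1> last <extra_id_2>
```

The target is much shorter than the input, which is one reason span corruption is cheaper to train on than reconstructing the whole sentence.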

Model Type: Transformer-based language model

Language(s) (NLP): English, German, and French.

License: Apache 2.0

Model highlights

The Flan T5 paper reports several findings that distinguish the model from earlier ones. Here are the key highlights:

- Scaling the number of tasks, model size, and finetuning on chain-of-thought data significantly improves model performance.

- Instruction finetuning improves performance on various model classes, setups, and evaluation benchmarks.

- Publicly released Flan-T5 checkpoints achieve strong few-shot performance compared to larger models, such as PaLM 62B.

- Instruction finetuning is a general method for improving the performance and usability of pretrained language models.

- Finetuning on instruction datasets improves model performance and generalization to unseen tasks.

Training Details

Training data

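Flan T5 builds on a T5 model pre-trained on a large amount of text data: filtered and cleaned web text from the C4 (Colossal Clean Crawled Corpus) dataset, which covers a wide range of domains. The model is then instruction-finetuned on a collection of 1,836 tasks, some of which involve languages other than English (for example, translation tasks).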

Training dataset size

The paper does not provide information on the exact size of the pre-training dataset for Flan T5. However, it is noted that the pre-training data is massive, and the model is pre-trained using the T5 architecture.

Training Procedure

The training procedure for Flan T5 involves two stages: pre-training and instruction finetuning. The pre-training stage follows T5, whose main pre-training objective is denoising (span corruption): the model learns to reconstruct spans of text that have been masked out of its input. Instruction finetuning then trains the model on a collection of instruction datasets to improve its performance and generalization to unseen tasks.
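Instruction finetuning uses the same sequence-to-sequence loss as any T5 fine-tuning: the instruction is the encoder input and the desired response is the label sequence. The sketch below shows that loop with Hugging Face Transformers and PyTorch, but on a tiny, randomly initialized `T5Config` (all sizes are arbitrary assumptions) with random token IDs standing in for tokenized instruction/response pairs, so it runs offline. A real setup would load a checkpoint such as `google/flan-t5-small` and tokenize actual text instead.

```python
import torch
from transformers import T5Config, T5ForConditionalGeneration

torch.manual_seed(0)

# Tiny configuration chosen only so the example runs quickly on a CPU.
# T5 starts decoding from the pad token (ID 0), so set that explicitly.
config = T5Config(
    vocab_size=100, d_model=32, d_kv=8, d_ff=64,
    num_layers=2, num_heads=4, dropout_rate=0.0,
    decoder_start_token_id=0,
)
model = T5ForConditionalGeneration(config)
optimizer = torch.optim.AdamW(model.parameters(), lr=1e-3)

# Stand-ins for tokenized (instruction, response) pairs. IDs 0 and 1 are
# T5's padding and end-of-sequence tokens, so sample from 2 upward.
input_ids = torch.randint(2, 100, (2, 8))
labels = torch.randint(2, 100, (2, 5))

losses = []
model.train()
for step in range(30):
    # Passing `labels` makes the model shift them right to build the decoder
    # inputs and return the token-level cross-entropy loss.
    loss = model(input_ids=input_ids, labels=labels).loss
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    losses.append(loss.item())

print(f"first loss {losses[0]:.3f}, last loss {losses[-1]:.3f}")
```

On this fixed toy batch the loss simply falls as the model memorizes it; the point is the shape of the loop, not the numbers.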

Training time and resources

The paper does not provide detailed information on the training time and resources used to train Flan T5. However, it is noted that the model is pre-trained using Google's proprietary TPU (Tensor Processing Unit) hardware, which is specifically designed for deep learning workloads and can provide significant speedups compared to traditional hardware.

Model Types

Several versions of the Flan T5 model have been trained on the same dataset. Here are the variations of the Flan T5 model based on parameter count:

| Model | Parameters |
|---|---|
| Flan-T5-Small | 80 million |
| Flan-T5-Base | 250 million |
| Flan-T5-Large | 780 million |
| Flan-T5-XL | 3 billion |
| Flan-T5-XXL | 11 billion |
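The parameter counts in the table translate directly into memory requirements. As a rough rule, the weights alone take parameters × bytes per parameter (4 bytes in float32, 2 in bfloat16). This sketch ignores activations, optimizer state, and framework overhead, so real usage is higher; the dictionary keys are the Hugging Face checkpoint names, and the helper names are hypothetical.

```python
from typing import Optional

# Approximate parameter counts from the table above, smallest first.
FLAN_T5_PARAMS = {
    "google/flan-t5-small": 80_000_000,
    "google/flan-t5-base": 250_000_000,
    "google/flan-t5-large": 780_000_000,
    "google/flan-t5-xl": 3_000_000_000,
    "google/flan-t5-xxl": 11_000_000_000,
}
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2}


def weight_memory_gb(checkpoint: str, dtype: str = "float32") -> float:
    """Rough size of the model weights alone, in gigabytes (10**9 bytes)."""
    return FLAN_T5_PARAMS[checkpoint] * BYTES_PER_PARAM[dtype] / 10**9


def largest_that_fits(budget_gb: float, dtype: str = "float32") -> Optional[str]:
    """Return the biggest checkpoint whose weights fit in `budget_gb`, if any."""
    best = None
    for name in FLAN_T5_PARAMS:  # dict keeps the ascending order above
        if weight_memory_gb(name, dtype) <= budget_gb:
            best = name
    return best


for name in FLAN_T5_PARAMS:
    print(f"{name}: {weight_memory_gb(name):.2f} GB in float32")
```

By this estimate the XXL checkpoint needs about 44 GB in float32, which is worth keeping in mind before running the CPU example in Sample Code 1.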

Business Applications

Among the benchmarks reported for it, Flan T5 performs especially well on multi-task language understanding (MMLU) and cross-lingual question answering (TyDiQA). You can use the model to build business applications for use cases such as:

| Multi-task Language Understanding | Cross-Lingual Question Answering |
|---|---|
| Chatbots and virtual assistants | Customer support and service in multilingual environments |
| Sentiment analysis and customer feedback analysis | Business intelligence and analytics across international markets |
| Content summarization and generation | Multilingual search engines and content indexing |
| Personalized recommendations and advertising | Translation and localization services |
| Document classification and information extraction | Language learning and education platforms |

Model Features

Flan T5 incorporates several finetuning techniques that make it more effective and more scalable than models without instruction finetuning.

Task mixtures

Prior work has shown that increasing the number of finetuning tasks improves generalization to previously unseen tasks. In the Flan-T5 paper (Chung et al., 2022, "Scaling Instruction-Finetuned Language Models"), the authors combine four task mixtures, Muffin, T0-SF, NIV2, and CoT, to scale up to 1,836 finetuning tasks.
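Combining several task mixtures means deciding how often to draw training examples from each one. The sketch below shows one common approach, sampling a source mixture for each example in proportion to a weight. The weights are hypothetical placeholders, not the mixing rates used in the paper, and the function name `sample_mixtures` is illustrative.

```python
import random
from collections import Counter
from typing import Dict, List

# Hypothetical mixing weights; the paper's actual rates are not reproduced here.
MIXTURE_WEIGHTS: Dict[str, float] = {
    "Muffin": 0.45,
    "T0-SF": 0.30,
    "NIV2": 0.20,
    "CoT": 0.05,
}


def sample_mixtures(weights: Dict[str, float], n: int, seed: int = 0) -> List[str]:
    """Pick a source mixture for each of `n` training examples."""
    rng = random.Random(seed)  # seeded so the draw is reproducible
    names = list(weights)
    return rng.choices(names, weights=[weights[k] for k in names], k=n)


draws = sample_mixtures(MIXTURE_WEIGHTS, n=10_000)
print(Counter(draws))  # counts come out roughly proportional to the weights
```

Weighting matters because the mixtures differ greatly in size; sampling purely in proportion to example counts would let the largest collection dominate training.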

Chain-of-thought finetuning mixture

The authors develop a new finetuning data mixture called "Chain-of-thought" (CoT), built from CoT annotations. It consists of nine existing datasets for which human raters wrote CoT annotations, covering tasks such as arithmetic reasoning, multi-hop reasoning, and natural language inference. The authors manually wrote ten instruction templates per task.
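A chain-of-thought training example differs from a plain one mainly in its target: the model learns to produce the reasoning steps before the final answer. The helper below is a hypothetical illustration of turning one annotated example into an (input, target) pair; the template wording and the "So the answer is" phrasing are assumptions, not the paper's exact templates.

```python
from typing import Tuple


def format_cot_example(question: str, rationale: str, answer: str) -> Tuple[str, str]:
    """Turn one CoT-annotated example into an (input, target) text pair."""
    # Hypothetical instruction template that asks for step-by-step reasoning.
    model_input = f"Answer the following question by reasoning step by step.\n{question}"
    # The target holds the human-written rationale, then the final answer.
    target = f"{rationale} So the answer is {answer}."
    return model_input, target


inp, tgt = format_cot_example(
    "A shop has 3 boxes with 4 apples each. How many apples are there?",
    "There are 3 boxes and each holds 4 apples, so 3 * 4 = 12.",
    "12",
)
print(inp)
print(tgt)
```

A non-CoT version of the same example would use `answer` alone as the target, which is what makes joint CoT and non-CoT finetuning straightforward to mix.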

Templates and formatting

For Muffin, T0-SF, and NIV2, the authors use the instructional templates provided by the creators of each mixture; for CoT, they manually write around ten instruction templates for each of the nine datasets. To create few-shot templates, they write a variety of exemplar delimiters (e.g., "Q:"/"A:") and apply them randomly at the example level.
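The few-shot formatting described above can be sketched as follows: solved exemplars are concatenated in front of the new query, and one delimiter pair is chosen at random for the whole training example. Only "Q:"/"A:" comes from the text; the other delimiter pairs and the helper `build_few_shot_prompt` are hypothetical.

```python
import random
from typing import List, Optional, Tuple

# Hypothetical (question, answer) delimiter pairs; only "Q:"/"A:" is from the text.
DELIMITERS: List[Tuple[str, str]] = [
    ("Q:", "A:"),
    ("Question:", "Answer:"),
    ("Input:", "Output:"),
]


def build_few_shot_prompt(
    exemplars: List[Tuple[str, str]],
    query: str,
    rng: Optional[random.Random] = None,
) -> str:
    """Concatenate solved exemplars and a new query with one random delimiter pair."""
    rng = rng or random.Random()
    q_tag, a_tag = rng.choice(DELIMITERS)  # chosen once per example
    blocks = [f"{q_tag} {q}\n{a_tag} {a}" for q, a in exemplars]
    blocks.append(f"{q_tag} {query}\n{a_tag}")  # the model completes this answer
    return "\n\n".join(blocks)


prompt = build_few_shot_prompt(
    [("What is 2 + 2?", "4"), ("What is the capital of France?", "Paris")],
    "What is 3 + 5?",
    rng=random.Random(0),
)
print(prompt)
```

Varying the delimiters across examples keeps the model from depending on one fixed prompt format.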

Model Tasks

Text-to-code conversion

Flan T5 can be prompted to turn natural-language descriptions into short code snippets, for example in Python or JavaScript. This can help developers write simple code faster and make basic programming more accessible to non-experts. However, Flan T5 was not trained specifically for code generation, so generated code should be reviewed and tested before it is used.
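Since generated code can be syntactically invalid, a cheap first check is to parse it before doing anything else with it. This minimal sketch uses Python's standard `ast` module; it only catches syntax errors, not wrong logic, and the helper name `is_valid_python` is hypothetical.

```python
import ast


def is_valid_python(source: str) -> bool:
    """Return True if `source` parses as Python; says nothing about correctness."""
    try:
        ast.parse(source)
    except (SyntaxError, ValueError):  # ValueError covers e.g. null bytes on older Pythons
        return False
    return True


print(is_valid_python("def add(a, b):\n    return a + b"))  # True
print(is_valid_python("def add(a, b) return a + b"))        # False
```

Parsing never executes the code, so this check is safe to run on untrusted model output; running the snippet against tests would be the next step.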

Text-to-SQL conversion

Flan T5 can be prompted, or fine-tuned, to generate SQL queries from natural-language questions, which can help users query databases or retrieve information from structured data sources. For example, a user can ask a question in natural language, such as "What are the total sales of product A in the last month?", and Flan T5 can generate a corresponding SQL query to retrieve the required information from a database.
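Text-to-SQL prompting usually works better when the table schema is part of the prompt, and generated SQL should be checked before it runs. The sketch below builds such a prompt and applies a very light read-only check to the answer. The prompt wording, the `sales` table, and the helper names are hypothetical, and `generate` stands for any function that sends a prompt to Flan T5 and returns its text output (for example, a wrapper around `model.generate` as in the sample code).

```python
from typing import Callable, Dict, List


def build_sql_prompt(question: str, schema: Dict[str, List[str]]) -> str:
    """List each table with its columns, then ask for a SQL query."""
    tables = "\n".join(f"{name}({', '.join(cols)})" for name, cols in schema.items())
    return f"Tables:\n{tables}\nWrite a SQL query that answers: {question}"


def text_to_sql(question: str, schema: Dict[str, List[str]],
                generate: Callable[[str], str]) -> str:
    sql = generate(build_sql_prompt(question, schema)).strip()
    # Generated SQL may be wrong; refuse anything that is not a read-only query.
    if not sql.upper().startswith("SELECT"):
        raise ValueError(f"expected a SELECT query, got: {sql!r}")
    return sql


schema = {"sales": ["product", "amount", "sale_date"]}
question = "What are the total sales of product A in the last month?"


def fake_generate(prompt: str) -> str:
    # Stand-in for a real model call: returns a canned answer for the demo.
    return "SELECT SUM(amount) FROM sales WHERE product = 'A' AND sale_date >= DATE('now', '-1 month')"


print(build_sql_prompt(question, schema))
print(text_to_sql(question, schema, fake_generate))
```

A prefix check like this is only a guard rail; running the query under a read-only database user is a stronger safeguard.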

Semantic similarity

Flan T5 can be prompted to judge whether two pieces of text are semantically similar, for example whether two questions are paraphrases of each other. This is useful for tasks such as information retrieval and duplicate detection, where it can help identify duplicate content or similar documents in a large corpus of text.
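Because Flan T5 is a text-to-text model, a similarity check is usually phrased as a yes/no question, and the generated word is mapped back to a label. The prompt wording and helper names below are hypothetical; `generate` again stands for any function that runs the model on a prompt and returns its text output.

```python
from typing import Callable, Optional


def paraphrase_prompt(a: str, b: str) -> str:
    return (f'Sentence 1: "{a}"\nSentence 2: "{b}"\n'
            "Do these two sentences mean the same thing? Answer yes or no.")


def parse_yes_no(output: str) -> Optional[bool]:
    """Map the model's first word to True/False; None if it is neither."""
    words = output.strip().lower().split()
    if not words:
        return None
    return {"yes": True, "no": False}.get(words[0].strip(".,!"))


def is_paraphrase(a: str, b: str, generate: Callable[[str], str]) -> Optional[bool]:
    return parse_yes_no(generate(paraphrase_prompt(a, b)))


print(parse_yes_no("Yes."))   # True
print(parse_yes_no(" no"))    # False
print(parse_yes_no("maybe"))  # None
```

Returning `None` for unexpected outputs keeps parsing failures visible instead of silently counting them as "not similar".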

Cross-lingual retrieval

Flan T5 can process text in many languages, so it can be used as a component of cross-lingual search and question answering, where a user searches for information in one language and retrieves results in another. This can be particularly useful for organizations operating in multiple countries and languages, as it facilitates communication and information sharing across language barriers.

Image captioning

Flan T5 is a text-only model: it cannot take images as input, so it cannot caption images on its own. It can, however, serve as the language-generation component of a multimodal system; for example, BLIP-2 pairs a frozen image encoder with Flan-T5 to generate natural-language descriptions of images. Such systems are useful for applications such as image search engines or accessibility tools for the visually impaired.

Getting Started

- Install the transformers library: Flan T5 is available through the Hugging Face Transformers library, so you first need to install it with pip or conda, depending on your preference. The T5Tokenizer class also requires the sentencepiece package.

- Download the Flan T5 model checkpoint: The Flan T5 checkpoints (google/flan-t5-small through google/flan-t5-xxl) are hosted on the Hugging Face model hub. 'from_pretrained()' downloads and caches a checkpoint automatically the first time it is called; you can also pre-download it with the 'huggingface-cli download' command.

- Load the Flan T5 model: Load the model into your Python script with the 'T5ForConditionalGeneration.from_pretrained()' method and the matching tokenizer with 'T5Tokenizer.from_pretrained()'.

- Use the Flan T5 model: With the Flan T5 model loaded, you can use it to perform a variety of natural language processing tasks, such as text generation, summarization, translation, and more.

Fine-tuning

The best way to fine-tune Flan T5 depends on the particular purpose and data. The Flan paper reports several findings about instruction finetuning that are worth keeping in mind:

Scaling Curves for Instruction Finetuning

Both model size and the number of finetuning tasks have a positive impact on instruction finetuning performance. The margin of improvement is significant and does not shrink with scale, suggesting that instruction finetuning is likely to remain relevant for future models.

CoT Finetuning is Critical for Reasoning Abilities

Jointly finetuning on non-CoT and CoT data yields significantly better CoT performance without hurting performance on non-CoT tasks. For large models, CoT finetuning improves performance on held-out tasks while preserving the gains on non-CoT tasks.

Instruction Finetuning Is Generalizable Across Models

Instruction finetuning can be applied to language models with varying architectures, sizes, and pre-training objectives while improving performance. This finding is consistent with previous research that has shown the effectiveness of instruction finetuning across different types of models.

Instruction Finetuning is Relatively Compute-Efficient

Instruction finetuning uses only a small amount of compute relative to pre-training, yet it improves model performance, and an instruction-finetuned model can sometimes outperform a larger model that has not been instruction-finetuned. Building on existing pretrained checkpoints makes the process more efficient still.

Benchmarking

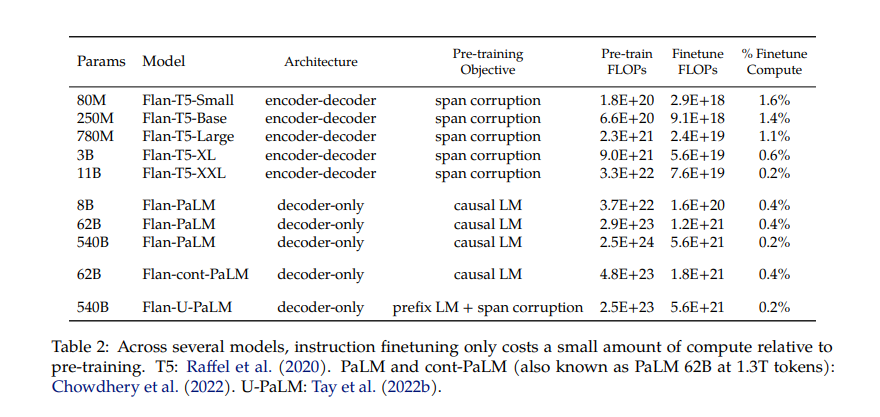

Table 1: Instruction finetuning only costs a small amount of compute relative to pre-training. T5: Raffel et al. (2020). PaLM and cont-PaLM (also known as PaLM 62B at 1.3T tokens): Chowdhery et al. (2022). U-PaLM: Tay et al. (2022b).

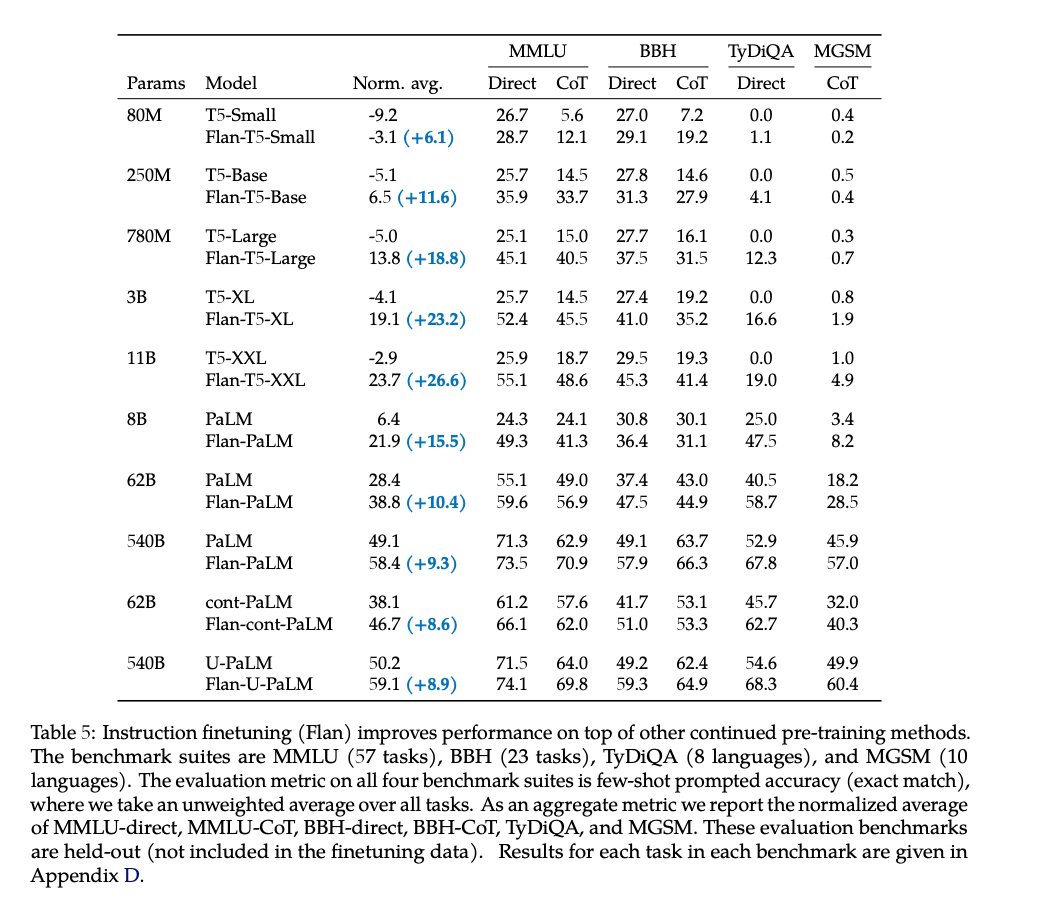

Table 2 shows that instruction finetuning (Flan) improves performance on top of other continued pre-training methods.

The benchmark suites are MMLU (57 tasks), BBH (23 tasks), TyDiQA (8 languages), and MGSM (10 languages). The evaluation metric on all four benchmark suites is few-shot prompted accuracy (exact match), where the authors take an unweighted average over all tasks. As an aggregate metric, they report the normalized average of MMLU-direct, MMLU-CoT, BBH-direct, BBH-CoT, TyDiQA, and MGSM. These evaluation benchmarks are held-out (not included in the finetuning data). Results for each task in each benchmark are given in Appendix D of the paper.
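The aggregation described in the Table 2 caption, exact-match accuracy per task followed by an unweighted average over tasks, can be sketched in a few lines. The task names and predictions are made up for illustration, the answer normalization (strip and lowercase) is an assumption, and the paper's normalized aggregate across benchmark suites is not reproduced here.

```python
from typing import Dict, List, Tuple


def exact_match_accuracy(pairs: List[Tuple[str, str]]) -> float:
    """Percentage of (prediction, reference) pairs that match after light normalization."""
    hits = sum(p.strip().lower() == r.strip().lower() for p, r in pairs)
    return 100.0 * hits / len(pairs)


def unweighted_task_average(results: Dict[str, List[Tuple[str, str]]]) -> float:
    """Average the per-task accuracies so every task counts equally, whatever its size."""
    scores = [exact_match_accuracy(pairs) for pairs in results.values()]
    return sum(scores) / len(scores)


results = {
    "task_a": [("Paris", "paris"), ("4", "5")],                             # 50%
    "task_b": [("yes", "yes"), ("no", "no"), ("no", "no"), ("yes", "no")],  # 75%
}
print(unweighted_task_average(results))  # 62.5
```

Pooling all six predictions instead would give 4 of 6 correct, about 66.7%, which is why it matters that the caption specifies an unweighted average over tasks.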

Sample Code 1

Running the model on a CPU

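```python
# pip install transformers sentencepiece
from transformers import T5Tokenizer, T5ForConditionalGeneration

# Downloads (on first use) and loads the 11B-parameter XXL checkpoint.
tokenizer = T5Tokenizer.from_pretrained("google/flan-t5-xxl")
model = T5ForConditionalGeneration.from_pretrained("google/flan-t5-xxl")

# Flan T5 receives the task as a natural-language instruction in the input.
input_text = "translate English to German: How old are you?"
input_ids = tokenizer(input_text, return_tensors="pt").input_ids
outputs = model.generate(input_ids)

# skip_special_tokens drops the <pad> and </s> markers from the decoded text.
print(tokenizer.decode(outputs[0], skip_special_tokens=True))
```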

Sample Code 2

Running the model on a GPU

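```python
# pip install transformers sentencepiece accelerate
from transformers import T5Tokenizer, T5ForConditionalGeneration

tokenizer = T5Tokenizer.from_pretrained("google/flan-t5-xxl")
# device_map="auto" (requires accelerate) places the weights on the available GPU(s).
model = T5ForConditionalGeneration.from_pretrained("google/flan-t5-xxl", device_map="auto")

input_text = "translate English to German: How old are you?"
# Move the inputs to the GPU so they are on the same device as the model.
input_ids = tokenizer(input_text, return_tensors="pt").input_ids.to("cuda")
outputs = model.generate(input_ids)

# skip_special_tokens drops the <pad> and </s> markers from the decoded text.
print(tokenizer.decode(outputs[0], skip_special_tokens=True))
```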

Limitations

While Flan T5 is a powerful model for natural language processing tasks, it also has several limitations. Here are some of the limitations of the Flan T5 model:

Bias, Risks, and Limitations

According to Rae et al. (2021), language models, including Flan-T5, can potentially be used for language generation in a harmful way. The authors therefore recommend that Flan-T5 not be used in any application without a prior assessment of the safety and fairness concerns specific to that application.

Ethical considerations and risks

Since Flan-T5 is trained on a vast amount of text data that was not screened for explicit content or evaluated for potential biases, the model can potentially generate similarly inappropriate content or reproduce biases present in the underlying data. Caution should therefore be exercised before using Flan-T5 in any application, and appropriate steps should be taken to address safety and fairness issues.

Known Limitations

The model card explicitly states that 'Flan-T5 has not been tested in real world applications.'