LLMs Explained

GLM 130B

GLM is a General Language Model pretrained with an autoregressive blank-filling objective and can be finetuned on various natural language understanding and generation tasks. The model is trained on a diverse and extensive corpus of text data. GLM-130B, with 130 billion parameters, has demonstrated cutting-edge performance in various language tasks, including question-answering, sentiment analysis, and machine translation. Across natural language understanding, conditional generation, and unconditional generation tasks, GLM outperforms BERT, T5, and GPT given the same model sizes and training data.

An Overview of GLM

GLM's largest variant, GLM-130B, is a large-scale language model with 130 billion parameters, trained on a diverse and extensive corpus of text data.

GLM variants range from 110M to 130B parameters

130B parameters

The largest GLM variant, GLM-130B, is a pre-trained language model with 130 billion parameters. It can capture complex linguistic patterns and nuances.

GLM-130B is trained on English as well as Chinese.

Bilingual Language Model

As a bilingual LLM, GLM-130B is also evaluated on Chinese benchmarks. Its Chinese training data comprises the 1.0T Chinese WudaoCorpora and a further 250G of Chinese corpora.

GLM-130B outperforms ERNIE TITAN 3.0

Outperforms ERNIE TITAN

GLM-130B consistently outperforms ERNIE TITAN 3.0, the largest Chinese LLM. It outperforms ERNIE by at least 260% on two abstractive MRC datasets.

Model Details

GLM is a General Language Model pretrained with an autoregressive blank-filling objective and can be finetuned on various natural language understanding and generation tasks. Its largest variant, GLM-130B, with 130 billion parameters, is trained on a diverse and extensive corpus of text data. GLM-130B has achieved state-of-the-art performance on various language tasks, including question-answering, sentiment analysis, and machine translation. GLM-130B uses a transformer-based architecture similar to other large-scale language models such as GPT-3. It is a dense bidirectional model pre-trained on over 400 billion tokens using a cluster of 96 NVIDIA DGX-A100 (8×40G) GPU nodes. GLM-130B outperforms GPT-3 175B on a wide range of popular English benchmarks, whereas OPT-175B and BLOOM-176B show no such advantage over GPT-3. It also consistently and significantly outperforms ERNIE TITAN 3.0 260B, the largest Chinese language model, across related benchmarks.

Model Type: Transformer-based language model with autoregressive blank infilling

Language(s): English, Chinese

License: GLM: MIT license, GLM-130B: Apache-2.0

Model highlights

GLM-130B has demonstrated cutting-edge performance in a variety of language tasks, including question-answering, sentiment analysis, and machine translation. It has also been used to generate high-quality text in a variety of contexts, ranging from news article generation to poetry composition. The following are the key highlights of the GLM-130B language model.

- Bilingual (English and Chinese) pre-trained language model with 130 billion parameters

- Performance is better than GPT-3 175B davinci (+5.0%), OPT-175B (+6.5%), and BLOOM-176B (+13.0%) on LAMBADA and slightly better than GPT-3 175B (+0.9%) on MMLU.

- Performance for Chinese is significantly better than ERNIE TITAN 3.0 260B on 7 zero-shot CLUE datasets (+24.26%) and 5 zero-shot FewCLUE datasets (+12.75%).

- The model supports fast inference with both SAT (SwissArmyTransformer) and FasterTransformer (up to 2.5X faster) on a single A100 server.

- Consistently and significantly outperforms ERNIE TITAN 3.0 260B - the largest Chinese language model - across related benchmarks

- Efficient inference on 4×RTX 3090 or 8×RTX 2080 Ti GPUs, the most affordable GPU setups to date for running 100B-scale models

- GLM-130B model weights are publicly accessible. Code, training logs, related toolkits, and lessons learned are open-sourced.
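The consumer-GPU claim in the list above can be sanity-checked with back-of-envelope arithmetic. The sketch below counts weight storage only (no activations, caches, or framework overhead) and assumes 24 GB of memory per RTX 3090 and 11 GB per RTX 2080 Ti; the function name is illustrative. Under these assumptions the weights fit only at 4 bits per parameter, which is consistent with the INT4 weight quantization mentioned under Training Observation.

```python
PARAMS = 130e9  # GLM-130B parameter count

def weight_gb(params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes), ignoring all overheads."""
    return params * bits_per_weight / 8 / 1e9

fp16_gb = weight_gb(PARAMS, 16)
int8_gb = weight_gb(PARAMS, 8)
int4_gb = weight_gb(PARAMS, 4)

# Assumed per-card memory (not stated in the article): RTX 3090 = 24 GB, RTX 2080 Ti = 11 GB.
setups = {"4x RTX 3090": 4 * 24, "8x RTX 2080 Ti": 8 * 11}

for name, total_gb in setups.items():
    print(f"{name}: {total_gb} GB total | "
          f"FP16 fits: {fp16_gb < total_gb} | "
          f"INT8 fits: {int8_gb < total_gb} | "
          f"INT4 fits: {int4_gb < total_gb}")
```

This is only a lower bound on memory needs; real inference also requires room for activations and the attention cache.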

Training Details

Training data

The pre-training data includes 1.2T Pile English corpus, 1.0T Chinese WudaoCorpora, and 250G Chinese corpora, including online forums, encyclopedia, and QA crawled from the web, which forms a balanced composition of English and Chinese contents.

Training infrastructure

GLM-130B was trained on a cluster of 96 DGX-A100 GPU (8×40G) servers with 60 days of access. During this period, the team trained GLM-130B on 400 billion tokens with a fixed sequence length of 2,048 per sample.
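To put these figures in perspective, the following sketch derives a few quantities from the numbers quoted above. The throughput value is only a rough average that assumes the entire 60-day window was spent training, which the text does not state.

```python
NODES = 96          # DGX-A100 servers
GPUS_PER_NODE = 8   # 8 x 40G A100 per server
GPU_MEMORY_GB = 40
TOKENS = 400e9      # tokens seen during training
SEQ_LEN = 2048      # fixed sequence length per sample
DAYS = 60           # cluster access window

total_gpus = NODES * GPUS_PER_NODE
total_gpu_memory_gb = total_gpus * GPU_MEMORY_GB
total_samples = TOKENS / SEQ_LEN

# Assumption: all 60 days were used for training, so this is a rough average rate.
tokens_per_second = TOKENS / (DAYS * 24 * 3600)
tokens_per_second_per_gpu = tokens_per_second / total_gpus

print(f"GPUs: {total_gpus}, aggregate memory: {total_gpu_memory_gb} GB")
print(f"Training sequences: {total_samples:,.0f}")
print(f"Average throughput: {tokens_per_second_per_gpu:.1f} tokens/s per GPU")
```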

Training Objective

The GLM-130B pre-training objective combines self-supervised GLM autoregressive blank infilling with multi-task learning on a small portion of tokens. The multi-task component is expected to help boost downstream zero-shot performance.

Training Observation

The training period spanned two months. The team also achieved INT4 weight quantization for GLM-130B. The INT4 version, obtained without any post-training, shows negligible performance degradation compared with its uncompressed original.
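The article does not describe the quantization scheme itself. As a generic illustration of what 4-bit weight quantization involves, here is a minimal sketch of symmetric absmax round-to-nearest quantization applied to one row of weights. The scheme, the function names, and the sample values are all assumptions for illustration, not GLM-130B's actual implementation.

```python
def quantize_int4(weights):
    """Symmetric absmax quantization of one weight row to integers in [-7, 7]."""
    # Fall back to a scale of 1.0 when the row is all zeros.
    scale = max(abs(w) for w in weights) / 7 or 1.0
    q = [max(-7, min(7, round(w / scale))) for w in weights]
    return q, scale

def dequantize_int4(q, scale):
    """Map the 4-bit integers back to approximate floating-point weights."""
    return [v * scale for v in q]

# Hypothetical weight row, chosen only to exercise the functions.
row = [0.12, -0.53, 0.07, 0.91, -0.33, 0.0, 0.48, -0.76]
q, scale = quantize_int4(row)
restored = dequantize_int4(q, scale)
max_err = max(abs(a - b) for a, b in zip(row, restored))
print("quantized:", q)
print("max round-trip error:", max_err, "(bounded by scale / 2 =", scale / 2, ")")
```

Each weight is stored as a small integer plus one shared scale per row, so the round-trip error per weight is at most half the scale.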

GLM Model Types

The GLM language model comes in several variants with different numbers of parameters. The table below lists each variant and its size:

| Model | Parameters |
| --- | --- |
| GLM-Base | 110 Million |
| GLM-Large | 335 Million |
| GLM-Large-Chinese | 335 Million |
| GLM-Doc | 335 Million |
| GLM-410M | 410 Million |
| GLM-515M | 515 Million |
| GLM-2B | 2 Billion |
| GLM-10B | 10 Billion |
| GLM-10B-Chinese | 10 Billion |
| GLM-130B | 130 Billion |

Business Applications

GLM-130B can be used in various business applications that require natural language processing (NLP) capabilities, such as chatbots, virtual assistants, and sentiment analysis.

| Use Case | Description |
| --- | --- |
| Customer Service | Model can be used to understand customer queries and respond to them accurately and efficiently, reducing the need for human intervention. |
| Content Generation | Model can be used to generate content such as articles, reports, and summaries, saving time and effort for content creators. |
| Personalization | Model can be used to personalize user experiences by understanding their preferences and interests, making recommendations, and suggesting relevant content. |
| Market Research | Model can be used to analyze large volumes of data from various sources, identify trends, and make predictions, helping businesses make informed decisions. |
| Language Translation | Model can be used to translate content accurately and quickly, making it easier for businesses to communicate with customers and partners from different parts of the world. |

Model Features

GLM-130B combines several architectural and training choices aimed at improving training stability and model quality. Here are some key technical features of GLM-130B:

Layer Normalization

To stabilize training, GLM-130B adopts Post-LN (layer normalization applied after the residual connection), chosen for its favorable downstream results in preliminary experiments. Post-LN initialized with the newly proposed DeepNorm yields promising training stability.
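As a rough illustration of a Post-LN residual connection with DeepNorm-style scaling, the sketch below computes LayerNorm(alpha * x + f(x)) in plain Python. The value alpha = (2N)^(1/2) and the layer count N = 70 are assumptions not stated in this article, the learnable gain and bias of layer normalization are omitted, and the input vectors are made up.

```python
import math

def layer_norm(x, eps=1e-5):
    """Normalize a vector to zero mean and (approximately) unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def deepnorm_residual(x, sublayer_out, num_layers):
    """Post-LN residual with DeepNorm scaling: LayerNorm(alpha * x + f(x))."""
    alpha = math.sqrt(2 * num_layers)  # assumed alpha = (2N)^(1/2)
    return layer_norm([alpha * a + b for a, b in zip(x, sublayer_out)])

x = [0.5, -1.0, 2.0, 0.1]     # hypothetical residual-stream input
f_x = [0.2, 0.3, -0.4, 0.1]   # stand-in for an attention or FFN output
y = deepnorm_residual(x, f_x, num_layers=70)
print(y)
```

Up-weighting the residual branch before normalization limits how much any single sublayer can change the hidden state, which is the intuition behind the stability benefit.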

Positional Encoding and FFNs

For training stability and downstream performance, GLM-130B uses Rotary Positional Encoding rather than ALiBi. To improve the feed-forward networks (FFNs) in the Transformer, it uses a GLU with GeLU activation.
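Both ideas can be sketched in a few lines of plain Python. The rotary function below rotates consecutive pairs of dimensions by a position-dependent angle, and the gated unit multiplies a GeLU-activated gate by a value vector. The pairing of dimensions, the base of 10000, and all sample vectors are assumptions for illustration; the actual GLM-130B implementation may differ in layout.

```python
import math

def rope(vec, position, base=10000.0):
    """Rotate pairs (x0, x1), (x2, x3), ... by angles that grow with position."""
    d = len(vec)
    out = []
    for i in range(0, d, 2):
        theta = position * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        out += [vec[i] * c - vec[i + 1] * s, vec[i] * s + vec[i + 1] * c]
    return out

def gelu(x):
    """Exact GeLU using the Gaussian error function."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

def geglu(gate, value):
    """GLU with GeLU gating: GeLU(gate) * value, elementwise.

    In an FFN, gate and value would be two linear projections of the same input.
    """
    return [gelu(g) * v for g, v in zip(gate, value)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

q = [1.0, 0.5, -0.3, 0.8]
k = [0.2, -0.7, 0.4, 0.1]
# Both pairs of positions are 3 apart, so the attention scores should match.
score_a = dot(rope(q, 5), rope(k, 2))
score_b = dot(rope(q, 13), rope(k, 10))
print(score_a, score_b)
```

The matching scores show the key property of rotary encoding: the query-key dot product depends only on the relative offset between positions.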

Multi-Task Instruction Pretraining

GLM-130B includes a variety of instruction-prompted datasets, covering language understanding, generation, and information extraction, for multi-task learning during pre-training. For downstream zero-shot performance, this can be more helpful than fine-tuning. Multi-task Instruction Pre-training (MIP) accounts for only 5% of tokens and is applied during the pre-training stage so that it does not spoil the model’s general ability.

Embedding Layer Gradient Shrink

Gradient shrink is applied to the embedding layer to help overcome loss spikes and thus stabilize GLM-130B training. The technique was first used in the multi-modal transformer CogView.
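One common way to express gradient shrink in an autograd framework is `emb * alpha + stop_gradient(emb) * (1 - alpha)`: the forward value is unchanged, but only the first term carries gradient. The sketch below mimics that behaviour by hand in plain Python. The shrink factor alpha = 0.1, the function names, and the sample values are assumptions, not details given in this article.

```python
ALPHA = 0.1  # assumed shrink factor

def shrink_forward(embedding, alpha=ALPHA):
    """Forward value of emb * alpha + stop_gradient(emb) * (1 - alpha)."""
    return [e * alpha + e * (1 - alpha) for e in embedding]

def shrink_backward(upstream_grad, alpha=ALPHA):
    """Only the emb * alpha branch propagates gradient, so it is scaled by alpha."""
    return [g * alpha for g in upstream_grad]

embedding = [0.3, -1.2, 0.8]   # hypothetical embedding vector
upstream = [1.0, -2.0, 0.5]    # hypothetical gradient arriving from later layers

forward = shrink_forward(embedding)
grad = shrink_backward(upstream)
print("forward (unchanged):", forward)
print("embedding gradient (shrunk):", grad)
```

The embedding layer therefore receives smaller updates than the rest of the network, without any change to the model's outputs.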

Autoregressive Blank Infilling

GLM-130B uses autoregressive blank infilling as its primary pre-training objective. It masks random contiguous spans of the input and predicts each masked span autoregressively.
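A minimal sketch of how one blank-infilling training example could be built is shown below. Each masked span is replaced by a single mask token in the corrupted input, and the spans are then generated left to right, each started by a start token and terminated by an end token. The token names, the function name, and the fixed span positions are illustrative assumptions; a real pipeline samples span positions and lengths randomly.

```python
import random

MASK, START, END = "[MASK]", "[sop]", "[eop]"  # illustrative token names

def blank_infilling_example(tokens, spans, rng=None):
    """Build (corrupted_input, decoder_inputs, decoder_targets).

    spans: non-overlapping (start, end) index pairs, end exclusive.
    rng:   optional random.Random used to shuffle the order of the spans.
    """
    spans = sorted(spans)
    corrupted, cursor = [], 0
    for start, end in spans:
        corrupted += tokens[cursor:start] + [MASK]  # each span collapses to one mask
        cursor = end
    corrupted += tokens[cursor:]

    order = list(spans)
    if rng is not None:
        rng.shuffle(order)

    dec_inputs, dec_targets = [], []
    for start, end in order:
        span = tokens[start:end]
        dec_inputs += [START] + span   # teacher forcing: targets shifted right
        dec_targets += span + [END]    # predict the span, then an end marker
    return corrupted, dec_inputs, dec_targets

tokens = ["x1", "x2", "x3", "x4", "x5", "x6"]
corrupted, dec_in, dec_out = blank_infilling_example(tokens, [(2, 3), (4, 6)])
print(corrupted)
print(dec_in)
print(dec_out)
```

The corrupted input is read with full bidirectional context, while the masked spans are predicted one token at a time, which is what lets a single objective cover both understanding and generation.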

Model Tasks

GLM-130B, being a large and powerful language model, can perform a wide range of natural language processing tasks, including:

Abstractive summarization

The GLM language model can generate concise summaries of long text by selectively extracting key information and rephrasing it in a coherent and fluent manner.

Question generation

The model can generate questions from a given passage, which can help improve the quality of automated question-answering and information retrieval systems.

Text infilling

The GLM language model can fill in missing words or phrases in a given text, which can be useful for language understanding and completion tasks.

Language Modeling

The model can predict the probability of a sequence of words given the context, which is useful for various downstream tasks such as speech recognition and machine translation.
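Concretely, a language model scores a sequence through the chain rule: the probability of the whole sequence is the product of each token's probability given the tokens before it. The sketch below applies this to a list of made-up per-token probabilities and also derives perplexity, a standard summary of the same quantity. The numbers are hypothetical and do not come from GLM-130B.

```python
import math

def sequence_log_prob(cond_probs):
    """Chain rule in log space: log P(w1..wn) = sum_i log P(w_i | w_<i)."""
    return sum(math.log(p) for p in cond_probs)

def perplexity(cond_probs):
    """Exponential of the average negative log-probability per token."""
    return math.exp(-sequence_log_prob(cond_probs) / len(cond_probs))

# Hypothetical probabilities a model might assign to each token of a 4-token sentence.
probs = [0.2, 0.5, 0.1, 0.4]
print("sequence probability:", math.exp(sequence_log_prob(probs)))
print("perplexity:", perplexity(probs))
```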

Question answering

The GLM language model can answer questions based on a given passage, which can be useful for information retrieval and dialogue systems.

Coreference resolution

The model can identify and resolve coreference ambiguities, which is important for natural language understanding and generating coherent text.

Textual entailment

The GLM language model can determine whether one piece of text logically entails another, which can be useful for tasks such as question answering and text classification.

Text generation

The GLM language model can generate coherent and fluent text, which can be useful for various applications such as chatbots and language translation.

Multi-Genre Natural Language Inference

The GLM language model can determine whether a hypothesis is entailed by, contradicts, or is neutral with respect to a premise, even when the texts come from different genres or domains.

Semantic Textual Similarity

The GLM language model can determine the degree of similarity between two pieces of text based on their meaning, which is important for various downstream tasks such as text classification and machine translation.

Common sense reasoning

The GLM language model can reason about common sense knowledge and make inferences based on that knowledge, which is important for various downstream tasks such as text classification and question answering.

Pronoun reference ambiguity

The model can resolve ambiguities related to pronoun reference, which is important for natural language understanding and generating coherent text.

Fine-tuning Methods

Fine-tuning is the process of training a pre-trained model on a specific task or dataset to adapt it for that task.

Parameter-Efficient P-Tuning

P-Tuning is a parameter-efficient technique for adapting a pre-trained language model to a downstream task. Instead of updating all of the model's weights, it keeps the pre-trained model frozen and trains only a small set of continuous prompt embeddings that are fed to the model alongside the input. Because the trainable parameters are a tiny fraction of the full model, adaptation needs far less memory and compute than full fine-tuning, and one copy of the large model can serve many tasks, each with its own small set of prompt parameters. P-Tuning is designed to improve the efficiency of training and deploying large language models while maintaining their performance on downstream tasks.
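To give a sense of scale, the sketch below estimates how few parameters are trained in a prompt-tuning setup where the backbone stays frozen and only continuous prompt vectors are learned. The hidden size of 12288, the 70 layers, the prompt length of 128, and the simplification of one learned vector per prompt position per layer are all assumptions for illustration.

```python
HIDDEN_SIZE = 12288      # assumed GLM-130B hidden size
NUM_LAYERS = 70          # assumed number of transformer layers
BACKBONE_PARAMS = 130e9  # frozen during prompt tuning

def prompt_params(prompt_len, hidden=HIDDEN_SIZE, layers=1):
    """Trainable parameters when prompt_len vectors are learned at `layers` layers."""
    return prompt_len * hidden * layers

shallow = prompt_params(128)                  # prompts at the input layer only
deep = prompt_params(128, layers=NUM_LAYERS)  # simplified deep prompts at every layer

for name, n in [("input-layer prompts", shallow), ("per-layer prompts", deep)]:
    print(f"{name}: {n:,} trainable parameters "
          f"({n / BACKBONE_PARAMS:.4%} of the backbone)")
```

Even with prompts at every layer, the trainable share stays well below one percent of the model under these assumptions.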

Benchmark Results

Benchmarking is an important process for evaluating the performance of any language model, including GLM-130B. The key results are summarized below:

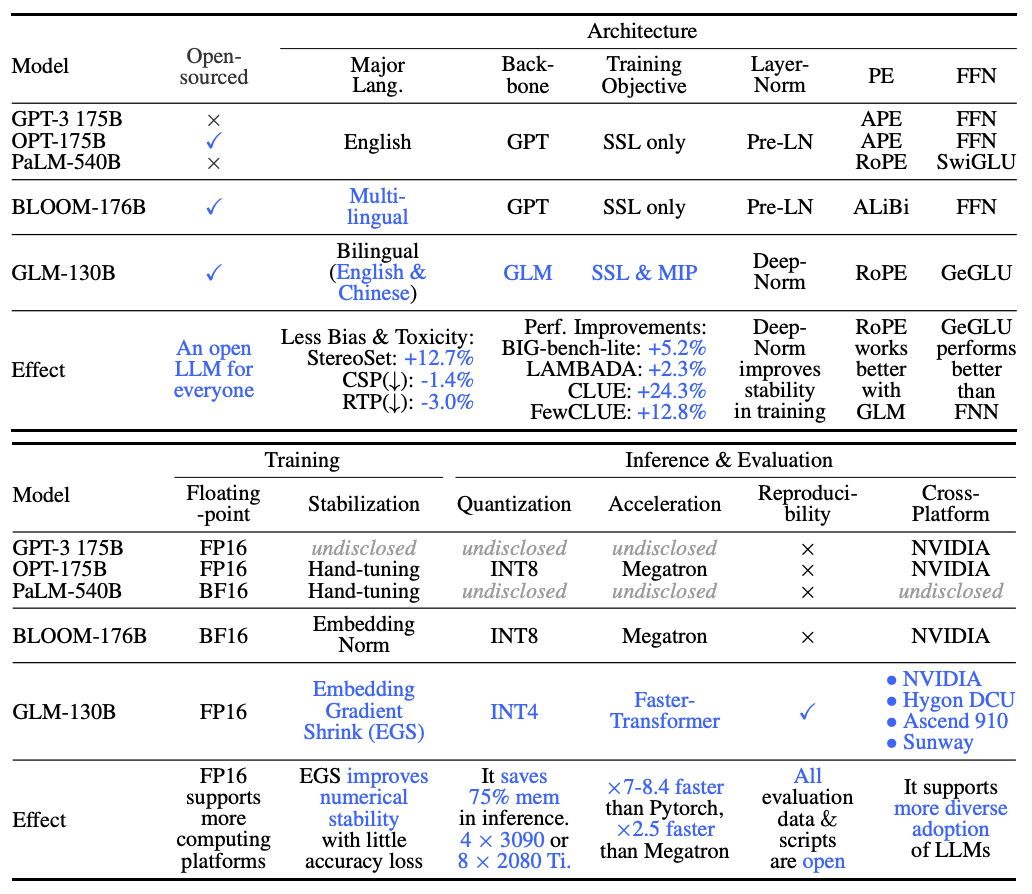

A comparison between GLM-130B and other 100B-scale LLMs and PaLM 540B. (SSL: self-supervised learning; MIP: multi-task instruction pre-training; (A)PE: (absolute) positional encoding; FFN: feed-forward network)

Limitations

Although GLM-130B is a highly advanced language model, it still has some limitations:

Performance Growth

For BIG-bench benchmark tasks, it is observed that GLM-130B’s performance growth with the increase of few-shot samples is not as significant as GPT-3’s.