Llama 2

Llama 2 is a collection of pretrained and fine-tuned large language models (LLMs) that range in scale from 7 billion to 70 billion parameters. The fine-tuned LLMs, known as Llama 2-Chat, are specifically optimized for dialogue applications. These models surpass the performance of most open-source chat models on the benchmarks they were tested on.

An Overview of Llama 2

The architecture of Llama 2 is very similar to that of the first Llama, with the addition of Grouped Query Attention.

Llama 2 has double the context length of Llama 1.

Trained on 2 trillion tokens

Llama 2's pretraining used a 2 trillion-token dataset drawn from publicly available content. Its fine-tuning used publicly accessible instruction datasets and over a million new human-annotated examples.

Grouped Query Attention

Llama 2 uses Grouped Query Attention (GQA) to improve inference scalability. A standard practice in autoregressive decoding is to cache the key and value pairs of the previous tokens in the sequence, which speeds up attention computation. GQA shares each key and value head across a group of query heads, which reduces the size of that cache.

Ghost Attention (GAtt)

Llama 2-Chat uses Ghost Attention (GAtt), a new method for multi-turn consistency that is applied after RLHF. Inspired by Context Distillation, it helps the model keep following an initial instruction throughout the later turns of a dialogue.
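One way to picture the data construction behind GAtt is the sketch below. It is an illustrative reading rather than Meta's implementation: the helper names (`build_sampling_dialogue`, `build_training_example`), the whitespace "tokenizer", and the sample dialogue are assumptions made for this example. The instruction is attached to every user turn when responses are sampled, kept only on the first user turn in the training sample, and the loss mask is zero for every token except those of the final assistant reply.

```python
from typing import List, Tuple

Turn = Tuple[str, str]  # (role, text), where role is "user" or "assistant"


def build_sampling_dialogue(instruction: str, dialogue: List[Turn]) -> List[Turn]:
    """Prepend the instruction to every user turn (used when sampling replies)."""
    return [
        (role, f"{instruction} {text}" if role == "user" else text)
        for role, text in dialogue
    ]


def build_training_example(instruction: str, dialogue: List[Turn]):
    """Keep the instruction on the first user turn only and build a loss mask.

    Returns (tokens, loss_mask). The mask is 1 only for tokens of the last
    assistant message, so all earlier turns contribute no loss.
    """
    tokens: List[str] = []
    loss_mask: List[int] = []
    first_user_seen = False
    last_index = len(dialogue) - 1
    for i, (role, text) in enumerate(dialogue):
        if role == "user" and not first_user_seen:
            text = f"{instruction} {text}"
            first_user_seen = True
        words = text.split()  # hypothetical stand-in for a real tokenizer
        tokens.extend(words)
        train_on = int(role == "assistant" and i == last_index)
        loss_mask.extend([train_on] * len(words))
    return tokens, loss_mask


instruction = "Always answer in French."
dialogue = [
    ("user", "Hello"),
    ("assistant", "Bonjour !"),
    ("user", "How are you?"),
    ("assistant", "Je vais bien, merci."),
]
sampling = build_sampling_dialogue(instruction, dialogue)
tokens, loss_mask = build_training_example(instruction, dialogue)
```

In this toy example only the four tokens of the last assistant message carry a loss, while the instruction appears once at the start of the training sequence.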

- Model Details
- Key Highlights
- Training Details
- Benchmark Results
- Sample Codes
- Fine-tuning
- Limitations

Model Details

Llama 2 offers significant performance improvements over its predecessor and adds commercial usage rights. It is a series of pretrained and fine-tuned LLMs ranging from 7 billion to 70 billion parameters. Its architecture is similar to that of Llama 1 but has a longer context length and incorporates Grouped Query Attention (GQA) to improve inference scalability. Autoregressive decoding commonly caches the key and value pairs of preceding tokens to accelerate attention computation, and GQA reduces the size of that cache by sharing key and value heads across groups of query heads. The model is trained on 2 trillion tokens of data, has an expanded context length of 4K tokens, and introduces a technique for multi-turn consistency named Ghost Attention (GAtt).
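The effect of GQA on the key/value cache can be estimated with simple arithmetic. The sketch below assumes a 70B-like shape (80 layers, 64 query heads, 8 key/value heads, head dimension 128) and a 16-bit cache; these numbers are not stated in the text above and are taken here as assumptions, and `kv_cache_bytes` is a hypothetical helper.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Bytes needed to cache keys and values for one sequence."""
    # Factor 2: one tensor for keys and one for values in every layer.
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


# Assumed 70B-like shape at a 4K (4096-token) context length.
n_layers, n_query_heads, n_kv_heads, head_dim, seq_len = 80, 64, 8, 128, 4096

# Multi-head attention keeps one K/V head per query head.
mha_bytes = kv_cache_bytes(n_layers, n_query_heads, head_dim, seq_len)
# Grouped-query attention keeps one K/V head per group of query heads.
gqa_bytes = kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len)

print(f"multi-head cache:    {mha_bytes / 2**30:.2f} GiB")
print(f"grouped-query cache: {gqa_bytes / 2**30:.2f} GiB")
print(f"reduction factor:    {mha_bytes // gqa_bytes}x")
```

Under these assumptions the cache for a single full-length sequence drops from 10 GiB to 1.25 GiB, which is why the benefit grows with batch size and context length.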

Key Highlights

Llama 2 is pretrained on publicly accessible online data. From this, an initial version called Llama-2-chat is developed via supervised fine-tuning. Subsequently, Llama-2-chat undergoes iterative refinement through Reinforcement Learning from Human Feedback (RLHF), incorporating techniques such as rejection sampling and proximal policy optimization (PPO). Llama 2 outperforms other open source language models on many external benchmarks, including reasoning, coding, proficiency, and knowledge tests. Llama-2-chat uses reinforcement learning from human feedback to ensure safety and helpfulness.
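Rejection sampling in this setting can be pictured as best-of-K selection: several responses are sampled for a prompt, each is scored by a reward model, and the highest-scoring one is kept as a fine-tuning target. The sketch below uses a toy generator and a toy reward function as stand-ins; all names are hypothetical, a real pipeline would call the language model and a learned reward model, and the PPO stage is not shown.

```python
import random
from typing import Callable, List, Tuple


def rejection_sample(
    prompt: str,
    generate: Callable[[str, random.Random], str],
    reward: Callable[[str, str], float],
    k: int = 4,
    seed: int = 0,
) -> Tuple[str, float]:
    """Draw k candidate responses and return the one with the highest reward."""
    rng = random.Random(seed)
    candidates: List[str] = [generate(prompt, rng) for _ in range(k)]
    scored = [(reward(prompt, c), c) for c in candidates]
    best_score, best = max(scored, key=lambda pair: pair[0])
    return best, best_score


# Toy stand-ins (assumptions): a "policy" that picks a canned reply and a
# "reward model" that prefers longer, polite answers.
CANNED = [
    "No.",
    "Sure, here is how.",
    "Sure, here is a detailed answer. Thanks for asking!",
]


def toy_generate(prompt: str, rng: random.Random) -> str:
    return rng.choice(CANNED)


def toy_reward(prompt: str, response: str) -> float:
    return len(response) + (10.0 if "Thanks" in response else 0.0)


best, score = rejection_sample("How do I sort a list?", toy_generate, toy_reward, k=8)
print(best, score)
```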

- Grouped Query Attention: The models employ Grouped Query Attention (GQA) to improve inference scalability. A standard practice in autoregressive decoding is to cache the key and value pairs of the previous tokens in the sequence, which speeds up attention computation but makes the cache a significant memory cost. GQA is an interpolation between multi-head and multi-query attention that uses a single key head and value head per subgroup of query heads. It achieves quality close to multi-head attention while being almost as fast as multi-query attention.

- Ghost Attention: The model employs Ghost Attention (GAtt), a very simple method inspired by Context Distillation that hacks the fine-tuning data to help the attention focus in a multi-stage process. GAtt enables dialogue control over multiple turns.

- Trained on more data: Llama 2 is trained on 40% more data than Llama 1. The models are trained on 2 trillion tokens and have twice the context length of Llama 1. In addition, Llama-2-chat models have been trained on more than 1 million new human annotations.
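The grouped-query attention described in the first highlight can be sketched in a few lines of plain Python. This is a minimal illustration under simplifying assumptions (no projections, no batching, nested lists instead of tensors), not the model's actual implementation; the function names and toy shapes are hypothetical.

```python
import math
import random
from typing import List

Matrix = List[List[float]]  # (seq_len, head_dim) for one head


def attend(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Causal scaled dot-product attention for a single head."""
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for t, q_t in enumerate(q):
        # Position t may only look at positions 0..t.
        scores = [scale * sum(a * b for a, b in zip(q_t, k[s])) for s in range(t + 1)]
        top = max(scores)
        weights = [math.exp(score - top) for score in scores]
        total = sum(weights)
        out.append([
            sum(w * v[s][d] for s, w in enumerate(weights)) / total
            for d in range(len(v[0]))
        ])
    return out


def grouped_query_attention(
    q_heads: List[Matrix], k_heads: List[Matrix], v_heads: List[Matrix]
) -> List[Matrix]:
    """Each group of query heads shares one key head and one value head."""
    assert len(k_heads) == len(v_heads)
    assert len(q_heads) % len(k_heads) == 0
    group_size = len(q_heads) // len(k_heads)
    return [
        attend(q, k_heads[i // group_size], v_heads[i // group_size])
        for i, q in enumerate(q_heads)
    ]


def random_heads(n_heads: int, seq_len: int, dim: int, rng: random.Random) -> List[Matrix]:
    return [
        [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(seq_len)]
        for _ in range(n_heads)
    ]


rng = random.Random(0)
q = random_heads(8, 5, 4, rng)  # 8 query heads
k = random_heads(2, 5, 4, rng)  # 2 key heads, each shared by 4 query heads
v = random_heads(2, 5, 4, rng)  # 2 value heads
out = grouped_query_attention(q, k, v)
print(len(out), len(out[0]), len(out[0][0]))
```

With as many key/value heads as query heads this reduces to multi-head attention, and with a single key/value head it reduces to multi-query attention, which is the interpolation the highlight refers to.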

Training Details

Training Data

A new mix of publicly available online data is used for pretraining. All models are trained with a global batch size of 4M tokens. The bigger models (70B) use Grouped-Query Attention (GQA) for improved inference scalability. Llama 2 is a static model trained on an offline dataset.
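The batch size and token count above imply a rough number of optimizer steps. The arithmetic below is a back-of-the-envelope sketch that assumes "4M" means exactly 4,000,000 tokens, that "4K" context means 4096 tokens, and that every batch is full; none of these details are confirmed by the text.

```python
tokens_total = 2_000_000_000_000  # 2 trillion pretraining tokens
batch_tokens = 4_000_000          # global batch size in tokens (assumed exact)
context_len = 4096                # assumed meaning of the 4K context length

# Number of optimizer steps needed to consume the whole dataset once.
optimizer_steps = tokens_total // batch_tokens
# Number of full-length sequences that fit in one global batch.
sequences_per_batch = batch_tokens / context_len

print(f"optimizer steps:     {optimizer_steps:,}")
print(f"sequences per batch: {sequences_per_batch:.1f}")
```

Under these assumptions pretraining takes about 500,000 steps, with a little under 1,000 full-length sequences per step.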

Training Infrastructure

Custom training libraries, Meta's Research Super Cluster, and production clusters were utilized for pretraining. The processes of fine-tuning, annotation, and evaluation were carried out on third-party cloud computing platforms.

Training Cost

Pretraining involved a total of 3.3M GPU hours of computation using A100-80GB hardware (with a TDP ranging from 350-400W). The estimated emissions amounted to 539 tCO2eq, and Meta's sustainability program offset 100% of these emissions.
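The reported figures allow a rough consistency check. The sketch below assumes every GPU draws its full TDP for the entire run and counts GPU power only (no cooling, CPUs, or networking), so it is an upper-bound style estimate rather than a reconstruction of how the emissions were actually computed.

```python
gpu_hours = 3.3e6       # total A100-80GB hours reported above
tdp_watts = (350, 400)  # TDP range reported above
emissions_t = 539       # tCO2eq reported above

# Energy in MWh if every GPU ran at TDP for the whole run.
energy_mwh = [gpu_hours * watts / 1e6 for watts in tdp_watts]
# Carbon intensity implied by the reported emissions, in kgCO2eq per kWh.
intensity = [emissions_t * 1000 / (mwh * 1000) for mwh in energy_mwh]

for watts, mwh, kg_per_kwh in zip(tdp_watts, energy_mwh, intensity):
    print(f"{watts} W: {mwh:,.0f} MWh, implied {kg_per_kwh:.2f} kgCO2eq/kWh")
```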

Training Evaluation

Average scores are reported for code (HumanEval and MBPP) and for groups of benchmarks covering Commonsense Reasoning, World Knowledge, Reading Comprehension, and Math. The number of shots varies by benchmark and includes 7-shot, 5-shot, and 0-shot results.

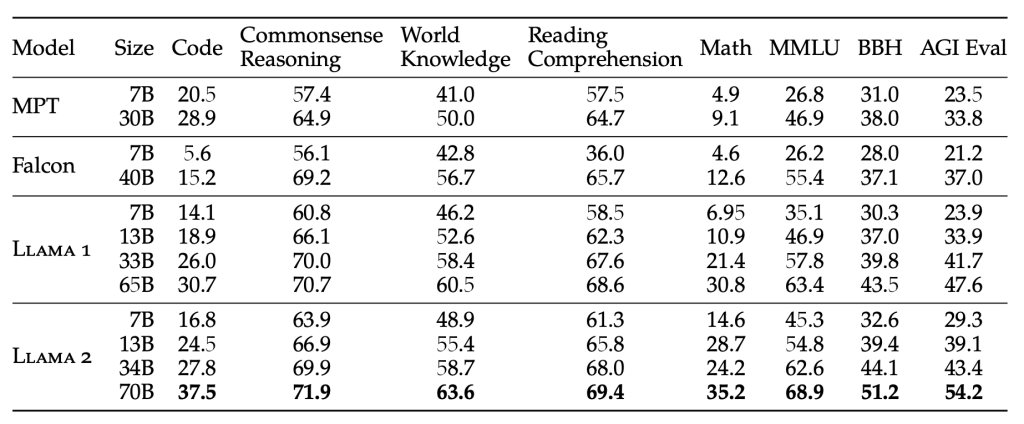

Benchmark Results

Llama 2 models outperform Llama 1 models. In particular, Llama 2 70B improves the results on MMLU and BBH by ≈5 and ≈8 points, respectively, compared to Llama 1 65B.

| Model | Size | Code | Commonsense Reasoning | World Knowledge | Reading Comprehension | Math | MMLU | BBH | AGI Eval |
|---|---|---|---|---|---|---|---|---|---|
| Llama 1 | 7B | 14.1 | 60.8 | 46.2 | 58.5 | 6.95 | 35.1 | 30.3 | 23.9 |
| Llama 1 | 13B | 18.9 | 66.1 | 52.6 | 62.3 | 10.9 | 46.9 | 37.0 | 33.9 |
| Llama 1 | 33B | 26.0 | 70.0 | 58.4 | 67.6 | 21.4 | 57.8 | 39.8 | 41.7 |
| Llama 1 | 65B | 30.7 | 70.7 | 60.5 | 68.6 | 30.8 | 63.4 | 43.5 | 47.6 |
| Llama 2 | 7B | 16.8 | 63.9 | 48.9 | 61.3 | 14.6 | 45.3 | 32.6 | 29.3 |
| Llama 2 | 13B | 24.5 | 66.9 | 55.4 | 65.8 | 28.7 | 54.8 | 39.4 | 39.1 |
| Llama 2 | 70B | 37.5 | 71.9 | 63.6 | 69.4 | 35.2 | 68.9 | 51.2 | 54.2 |
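The gains quoted for the largest models can be recomputed directly from the table. The snippet below copies the Llama 1 65B and Llama 2 70B rows and prints the per-category difference; it only restates the table and adds no new results.

```python
columns = [
    "Code", "Commonsense Reasoning", "World Knowledge",
    "Reading Comprehension", "Math", "MMLU", "BBH", "AGI Eval",
]
llama1_65b = [30.7, 70.7, 60.5, 68.6, 30.8, 63.4, 43.5, 47.6]
llama2_70b = [37.5, 71.9, 63.6, 69.4, 35.2, 68.9, 51.2, 54.2]

# Difference per category, rounded to the table's one-decimal precision.
deltas = {
    name: round(new - old, 1)
    for name, old, new in zip(columns, llama1_65b, llama2_70b)
}
for name, delta in deltas.items():
    print(f"{name:<22} {delta:+.1f}")
```

The MMLU and BBH differences come out at 5.5 and 7.7 points, matching the approximate gains of 5 and 8 stated above.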

Llama 2 7B and 34B models outperform MPT models of comparable size (7B and 30B) in all categories except code benchmarks. Llama 2 7B and 34B also outperform the Falcon 7B and 40B models in all benchmark categories. Additionally, the Llama 2 70B model outperforms all open-source models.

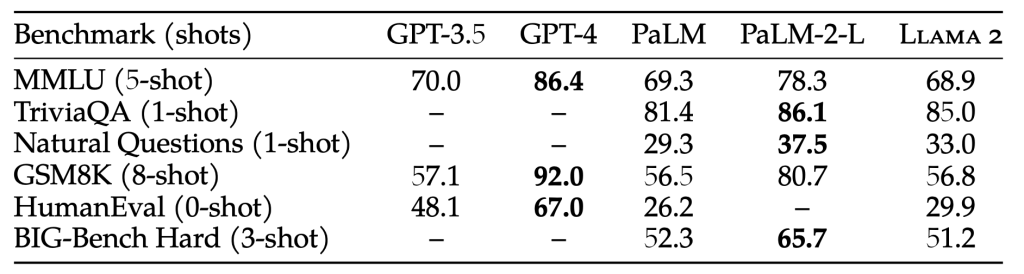

Compared with closed-source LLMs, Llama 2 70B is close to GPT-3.5 on MMLU and GSM8K, but there is a significant gap on coding benchmarks. Llama 2 70B results are on par with or better than PaLM (540B) on almost all benchmarks. There is still a large performance gap between Llama 2 70B and both GPT-4 and PaLM-2-L.

Sample Codes

The model and tokenizer can be loaded via:

    from transformers import LlamaForCausalLM, LlamaTokenizer

    tokenizer = LlamaTokenizer.from_pretrained("/output/path")
    model = LlamaForCausalLM.from_pretrained("/output/path")

LlamaConfig is the configuration class that stores the configuration of a LlamaModel. It is used to instantiate a LLaMA model according to the specified arguments, which define the model architecture. Instantiating a configuration with the defaults yields a configuration similar to that of LLaMA-7B.

    from transformers import LlamaModel, LlamaConfig

    # Initializing a LLaMA llama-7b style configuration
    configuration = LlamaConfig()

    # Initializing a model from the llama-7b style configuration
    model = LlamaModel(configuration)

    # Accessing the model configuration
    configuration = model.config

The LlamaForCausalLM forward method overrides the __call__ special method. Text can be generated from a prompt as follows:

    from transformers import AutoTokenizer, LlamaForCausalLM

    model = LlamaForCausalLM.from_pretrained(PATH_TO_CONVERTED_WEIGHTS)
    tokenizer = AutoTokenizer.from_pretrained(PATH_TO_CONVERTED_TOKENIZER)

    prompt = "Hey, are you conscious? Can you talk to me?"
    inputs = tokenizer(prompt, return_tensors="pt")

    # Generate
    generate_ids = model.generate(inputs.input_ids, max_length=30)
    tokenizer.batch_decode(generate_ids, skip_special_tokens=True, clean_up_tokenization_spaces=False)[0]

Fine-tuning

Sample code to fine-tune Llama 2 using Low-Rank Adaptation (LoRA). Check out the detailed tutorial on fine-tuning Llama 2.

    from typing import List

    import fire
    import torch
    import transformers
    from datasets import load_dataset
    from peft import (
        LoraConfig,
        get_peft_model,
        # Named prepare_model_for_int8_training in older peft releases.
        prepare_model_for_kbit_training,
    )
    from transformers import LlamaForCausalLM, LlamaTokenizer


    def train(
        # model/data params
        base_model: str = "",
        data_path: str = "",
        output_dir: str = "",
        micro_batch_size: int = 4,
        gradient_accumulation_steps: int = 4,
        num_epochs: int = 3,
        learning_rate: float = 3e-4,
        val_set_size: int = 2000,
        # lora hyperparams
        lora_r: int = 8,
        lora_alpha: int = 16,
        lora_dropout: float = 0.05,
        lora_target_modules: List[str] = [
            "q_proj",
            "v_proj",
        ],
    ):
        device_map = "auto"

        # Step 1: Load the model and tokenizer
        model = LlamaForCausalLM.from_pretrained(
            base_model,
            # load_in_8bit=True,  # Add this for using int8
            torch_dtype=torch.float16,
            device_map=device_map,
        )
        tokenizer = LlamaTokenizer.from_pretrained(base_model)
        tokenizer.pad_token_id = 0

        # Add this for using int8 (together with load_in_8bit=True above).
        # It must run before the LoRA adapters are attached.
        # model = prepare_model_for_kbit_training(model)

        # Add this for training LoRA
        config = LoraConfig(
            r=lora_r,
            lora_alpha=lora_alpha,
            target_modules=lora_target_modules,
            lora_dropout=lora_dropout,
            bias="none",
            task_type="CAUSAL_LM",
        )
        model = get_peft_model(model, config)

        # Step 2: Load the data
        if data_path.endswith(".json") or data_path.endswith(".jsonl"):
            data = load_dataset("json", data_files=data_path)
        else:
            data = load_dataset(data_path)

        # Step 3: Tokenize the data
        def tokenize(data):
            source_ids = tokenizer.encode(data["input"])
            # No special tokens here, so no second BOS lands mid-sequence.
            target_ids = tokenizer.encode(data["output"], add_special_tokens=False)
            input_ids = source_ids + target_ids + [tokenizer.eos_token_id]
            # -100 masks the prompt tokens so only the target contributes to the loss.
            labels = [-100] * len(source_ids) + target_ids + [tokenizer.eos_token_id]
            return {
                "input_ids": input_ids,
                "labels": labels,
            }

        # Split the data into train/val sets
        train_val = data["train"].train_test_split(
            test_size=val_set_size, shuffle=False, seed=42
        )
        train_data = train_val["train"].shuffle().map(tokenize)
        val_data = train_val["test"].shuffle().map(tokenize)

        # Step 4: Initiate the trainer
        trainer = transformers.Trainer(
            model=model,
            train_dataset=train_data,
            eval_dataset=val_data,
            args=transformers.TrainingArguments(
                per_device_train_batch_size=micro_batch_size,
                gradient_accumulation_steps=gradient_accumulation_steps,
                warmup_steps=100,
                num_train_epochs=num_epochs,
                learning_rate=learning_rate,
                fp16=True,
                logging_steps=10,
                optim="adamw_torch",
                # Newer transformers releases name this argument eval_strategy.
                evaluation_strategy="steps",
                save_strategy="steps",
                eval_steps=200,
                save_steps=200,
                output_dir=output_dir,
                save_total_limit=3,
            ),
            data_collator=transformers.DataCollatorForSeq2Seq(
                tokenizer, pad_to_multiple_of=8, return_tensors="pt", padding=True
            ),
        )
        trainer.train()

        # Step 5: Save the model
        model.save_pretrained(output_dir)


    if __name__ == "__main__":
        fire.Fire(train)
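The low-rank update that the script trains can be illustrated without any deep learning library. The sketch below is a simplified picture of LoRA rather than the peft implementation: a frozen weight matrix W is augmented with two small matrices A and B, scaled by alpha / r, and only A and B would be trained. The helper names are hypothetical, and the 7B-like shape used for the parameter count (hidden size 4096, 32 layers) is an assumption not stated above.

```python
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_forward(x, w, a, b, alpha, r):
    """Compute x @ W + (alpha / r) * (x @ A) @ B without forming A @ B.

    x: (batch, d_in), w: (d_in, d_out) frozen, a: (d_in, r), b: (r, d_out).
    """
    base = matmul(x, w)
    update = matmul(matmul(x, a), b)
    scale = alpha / r
    return [
        [p + scale * q for p, q in zip(base_row, update_row)]
        for base_row, update_row in zip(base, update)
    ]


def lora_param_count(d_in, d_out, r, n_modules):
    """Trainable parameters added by rank-r adapters on n_modules projections."""
    return n_modules * r * (d_in + d_out)


rng = random.Random(0)
d_in, d_out, r, alpha = 6, 6, 2, 4
x = [[rng.gauss(0, 1) for _ in range(d_in)]]
w = [[rng.gauss(0, 1) for _ in range(d_out)] for _ in range(d_in)]
a = [[rng.gauss(0, 0.01) for _ in range(r)] for _ in range(d_in)]
b = [[0.0] * d_out for _ in range(r)]  # zero init: the adapter starts as a no-op

out = lora_forward(x, w, a, b, alpha, r)

# Assumed 7B-like shape, adapters on q_proj and v_proj in each of 32 layers.
trainable = lora_param_count(4096, 4096, r=8, n_modules=2 * 32)
print(trainable)
```

With the script's defaults (r=8 on q_proj and v_proj) and the assumed shape, the adapters add about 4.2 million trainable parameters, a small fraction of the base model.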

Ethical Considerations and Limitations

Llama 2, like most LLMs, comes with inherent risks. While testing has been primarily in English, it hasn't encompassed every possible scenario. Due to these limitations, and as is the case with all LLMs, it's impossible to foresee all of Llama 2's outputs. In certain situations, the model might generate responses that are incorrect, biased, or otherwise problematic. Hence, developers are advised to conduct thorough safety assessments and adjustments specific to their intended applications of Llama 2 before its deployment.