LLMs Explained

Megatron

Megatron is a large transformer language model and training framework developed by NVIDIA for large-scale natural language processing (NLP). The name comes from the villainous robot in the Transformers franchise and is meant to suggest the model's capacity to scale to vast amounts of data and complex language tasks. By combining GPU hardware with model-parallel and data-parallel software techniques, Megatron can train on very large text corpora and learn diverse linguistic patterns, which yields strong language generation capabilities.


An Overview of Megatron


Scales up to 8.3 billion parameters

Megatron 8.3B contains 8.3 billion parameters, which made it one of the largest language models at the time of its release.

Up to 7x faster training

Megatron can train models up to 7 times faster than T5, allowing for faster experimentation and iteration.

94.5% on the Stanford Question Answering Dataset

The paper reports that Megatron achieved 94.5% accuracy on the SQuAD v1.1 task and an 80.4% score on natural language processing tasks.


- Introduction

- Business Applications

- Model Features

- Model Tasks

- Getting Started

- Fine-tuning

- Benchmarking

- Sample Codes

- Limitations

- Other LLMs

Introduction to Megatron

NVIDIA created Megatron, a high-capacity language model and training framework tailored to large-scale NLP. It takes its name from the villainous robot in the Transformers series, reflecting its ability to scale to immense amounts of data and intricate language tasks. Models trained with Megatron have reported state-of-the-art results on natural language processing benchmarks such as WikiText-103 and LAMBADA. Megatron has also proven to be an effective tool for building large-scale language models in the style of GPT-2 and BERT.

About Model

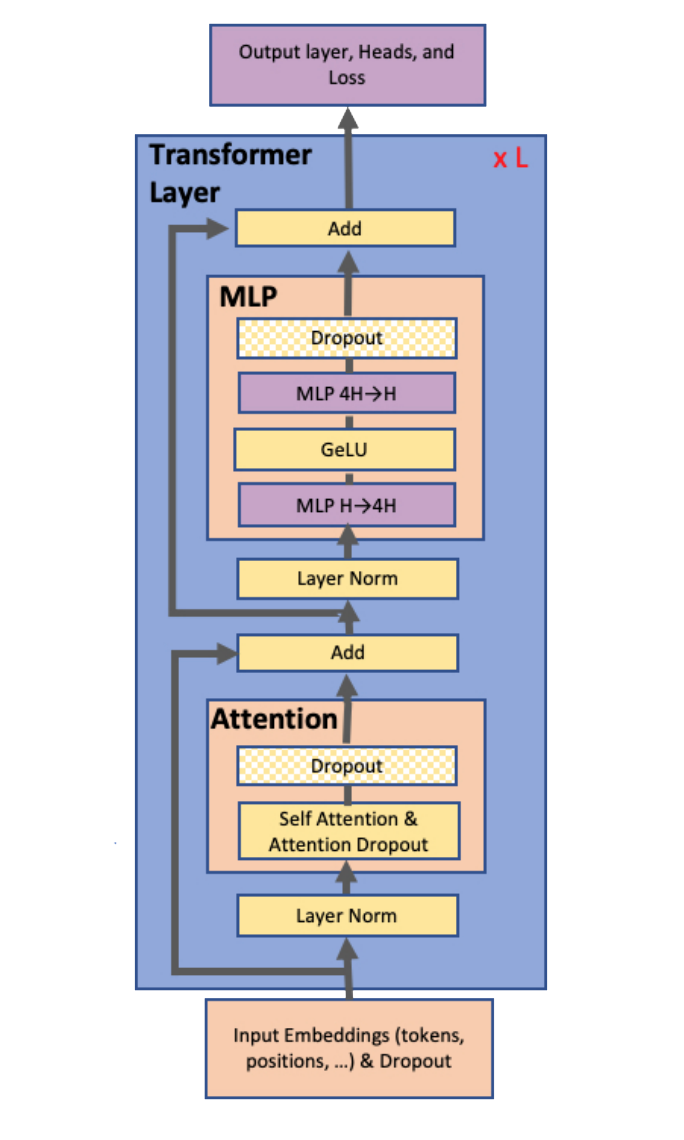

Megatron is built from transformer blocks whose feedforward and normalization layers support model performance and training stability. It is designed to be highly scalable: it can be trained on massive amounts of data by combining two distributed computing techniques. Data parallelism splits the training data across multiple GPUs or machines, with each holding a full copy of the model, whereas model parallelism splits the model itself across multiple GPUs or machines. Megatron can be trained with optimizers such as stochastic gradient descent (SGD), Adam, and Adafactor. Beyond language modeling, Megatron has been used for other NLP tasks such as question answering and machine translation.

Model Type: Deep learning model

Language(s) (NLP): English, Spanish, French, German, Chinese, Arabic, and so on.

License: Apache 2.0

Model highlights

Following are the key highlights of the Megatron language model.

- Megatron enables training transformer models with billions of parameters, which advances the state-of-the-art in Natural Language Processing applications.

- The implementation of Megatron is simple, efficient, and can be fully implemented with the insertion of a few communication operations in native PyTorch.

- Megatron achieves state-of-the-art results on various datasets such as WikiText103 and LAMBADA for GPT-2 model and the RACE dataset for the BERT model.

Training Details

Training data

The training data for Megatron LM was drawn from sources such as web pages, books, and Wikipedia. It totaled 8.3 terabytes and more than 40 billion tokens. The data was tokenized with byte-pair encoding (BPE), which divides words into subword units. The tokenized data was then cut into segments of 512 tokens, which were further broken down to speed up processing.
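The segmentation step can be pictured with a short sketch. It assumes the text has already been converted to a flat list of token ids; the function name `split_into_segments` and the fake token stream are illustrative and are not taken from the Megatron code base.

```python
from typing import List


def split_into_segments(token_ids: List[int], segment_len: int = 512) -> List[List[int]]:
    """Cut a flat token stream into consecutive segments of at most `segment_len` tokens."""
    return [token_ids[i:i + segment_len] for i in range(0, len(token_ids), segment_len)]


# Stand-in for BPE output: 1,300 made-up token ids.
tokens = list(range(1300))
segments = split_into_segments(tokens)
print([len(s) for s in segments])  # [512, 512, 276]
```

The last segment is shorter than 512 tokens; a real pipeline would typically pad it or drop it, a choice this sketch leaves open.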

Training Procedure

The research paper details the training process for Megatron LM. PyTorch and NCCL were used for parallel training, combining model parallelism and data parallelism. Training consisted of unsupervised pretraining followed by supervised fine-tuning, and it used mixed-precision arithmetic. Gradient checkpointing and gradient accumulation were also used to manage memory usage.

Training dataset size

The training dataset used for Megatron LM was approximately 8.3 terabytes in size and contained over 40 billion tokens. A dataset of this scale exposes Megatron to a wide range of linguistic contexts, which helps it capture diverse syntactic and semantic structures as well as world knowledge.

Training time and resources

The research paper doesn't provide a specific training time for Megatron LM. However, the model was trained on 1,024 GPUs using a combination of model parallelism and data parallelism. Mixed-precision arithmetic, gradient checkpointing, and gradient accumulation were used to manage memory usage.

Model Types

The Megatron language model has several architecture variations with varying numbers of parameters. Here's a brief explanation of each of them:

| Model | Parameters | Highlights |
| --- | --- | --- |
| Megatron-LM | 3.6 billion | Trained on a dataset of over 8 million web pages. |
| Megatron-XL | 5.8 billion | Trained on over 40 GB of text data. |
| Megatron-11B | 11 billion | Trained on a massive dataset of over 800 billion tokens. |

Business Applications

Megatron LM can be used in various business applications that require natural language processing (NLP) capabilities, such as chatbots, virtual assistants, and sentiment analysis.

| Language Modelling | Reading Comprehension | Question Answering |
| --- | --- | --- |
| Text generation for content creation | Chatbots for customer support | Customer service chatbots to answer frequently asked questions |
| Predictive text and autocorrect in messaging apps | Automated news summarization and article extraction | Automated customer surveys to gather feedback |
| Sentiment analysis for customer feedback and social media monitoring | Intelligent personal assistants for scheduling and information retrieval | Search engine optimization for improving search results |

Model Features

Megatron is a highly modular and flexible language model architecture with various technical features to optimize training performance and model quality. Here are some key technical features of Megatron:

Transformer Architecture

Megatron uses the standard transformer architecture, as found in GPT-2 and BERT, rather than a specialized variant. To allow greater parallelism and scalability, it partitions the computation inside each transformer layer across multiple GPUs, an approach known as intra-layer (or tensor) model parallelism. Each GPU holds a slice of the layer's weights and the slices are processed concurrently, allowing for more efficient use of computing resources and faster training times.

Large-Scale Model Sizes

Megatron is designed to scale up to very large model sizes, with up to billions of parameters. This allows Megatron to achieve state-of-the-art results on various language modeling tasks, such as text generation and machine translation.

Data Parallelism

Megatron uses data parallelism to split the training data across multiple devices and train the model in parallel. This speeds up the training process and enables larger batch sizes.
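As a rough illustration of why data parallelism gives the same result as training on the full batch, the sketch below shards a batch across simulated workers, computes a local gradient on each shard, and averages the results. The averaging stands in for the all-reduce step a real multi-GPU setup would perform. The toy one-parameter model and all function names are assumptions made for this example, not Megatron code.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (input x, target y)


def grad_mse(w: float, batch: List[Sample]) -> float:
    """Gradient of mean squared error for the one-parameter model y_hat = w * x."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def data_parallel_grad(w: float, batch: List[Sample], num_workers: int) -> float:
    """Shard the batch, compute local gradients, then average them (the all-reduce step)."""
    shard = len(batch) // num_workers
    assert shard * num_workers == len(batch), "batch must divide evenly across workers"
    local = [grad_mse(w, batch[r * shard:(r + 1) * shard]) for r in range(num_workers)]
    return sum(local) / num_workers


batch = [(float(i), 3.0 * i) for i in range(1, 9)]  # 8 samples of y = 3x
full = grad_mse(0.5, batch)
sharded = data_parallel_grad(0.5, batch, num_workers=4)
print(full, sharded)  # the two gradients agree
```

Because every worker sees an equal share of the batch, the average of the local gradients equals the gradient over the whole batch, so each worker can apply the same update to its copy of the model.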

Model Parallelism

Megatron uses model parallelism to split the model across multiple devices to reduce the memory requirements of each device. This allows larger models to be trained on devices with limited memory.
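One way to picture model parallelism inside a single layer is the feedforward split described in the Megatron-LM paper: the first weight matrix is divided by columns and the second by rows, so each device can apply the GeLU nonlinearity to its own slice and only one sum across devices is needed at the end. The sketch below simulates this in plain Python on one process. Each loop iteration stands in for one GPU, and the helper names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of a (n x k) and b (k x m)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def gelu(m: Matrix) -> Matrix:
    """Exact GeLU applied elementwise."""
    return [[0.5 * v * (1.0 + math.erf(v / math.sqrt(2.0))) for v in row] for row in m]


def tensor_parallel_mlp(x: Matrix, a: Matrix, b: Matrix, world_size: int) -> Matrix:
    """Compute GeLU(x @ a) @ b with a split by columns and b split by rows."""
    hidden = len(b)
    assert hidden % world_size == 0, "hidden size must divide evenly across ranks"
    shard = hidden // world_size
    total = [[0.0] * len(b[0]) for _ in x]
    for rank in range(world_size):  # each iteration stands in for one GPU
        lo, hi = rank * shard, (rank + 1) * shard
        a_cols = [row[lo:hi] for row in a]  # this rank's columns of the first matrix
        b_rows = b[lo:hi]                   # this rank's rows of the second matrix
        partial = matmul(gelu(matmul(x, a_cols)), b_rows)
        # Summing the partial outputs plays the role of the all-reduce.
        total = [[t + p for t, p in zip(tr, pr)] for tr, pr in zip(total, partial)]
    return total


x = [[1.0, -2.0, 0.5], [0.0, 1.5, -1.0]]
a = [[0.2, -0.1, 0.4, 0.3], [0.5, 0.1, -0.3, 0.2], [-0.4, 0.6, 0.1, -0.2]]
b = [[0.3, -0.2], [0.1, 0.4], [-0.5, 0.2], [0.6, 0.1]]

reference = matmul(gelu(matmul(x, a)), b)
sharded = tensor_parallel_mlp(x, a, b, world_size=2)
print(reference)
print(sharded)  # matches the reference up to floating-point rounding
```

Each simulated rank stores only half of each weight matrix, which is the memory saving the paragraph above refers to.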

Pipeline Parallelism

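Megatron uses pipeline parallelism to split the model's layers into sequential stages that run on different devices, with each training batch divided into micro-batches that flow through the stages in a pipeline fashion. This technique allows even larger models to be trained across devices that each have limited memory.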
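A pipeline schedule can be sketched in a few lines. The example below covers the forward pass only and uses a simple fill-and-drain schedule in which stage `s` handles micro-batch `t - s` at time step `t`. This is an illustrative simplification, not the schedule Megatron itself implements, and the function names are hypothetical.

```python
from typing import List, Optional


def forward_schedule(num_stages: int, num_microbatches: int) -> List[List[Optional[int]]]:
    """Return, per time step, which micro-batch each stage processes (None means idle)."""
    steps = num_stages + num_microbatches - 1
    schedule = []
    for t in range(steps):
        row = []
        for stage in range(num_stages):
            mb = t - stage  # stage s starts s steps after stage 0
            row.append(mb if 0 <= mb < num_microbatches else None)
        schedule.append(row)
    return schedule


def bubble_fraction(num_stages: int, num_microbatches: int) -> float:
    """Share of stage-time slots that sit idle while the pipeline fills and drains."""
    return (num_stages - 1) / (num_microbatches + num_stages - 1)


schedule = forward_schedule(num_stages=4, num_microbatches=8)
for t, row in enumerate(schedule):
    print(t, row)
print(bubble_fraction(4, 8))
```

With 4 stages and 8 micro-batches the pipeline needs 11 time steps, and 3/11 of the slots are idle. Using more micro-batches per batch shrinks that idle share.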

Mixed-Precision Training

Megatron uses mixed-precision training to speed up the training process by using half-precision floating-point numbers instead of single-precision numbers. This reduces the memory requirements of the model and speeds up computations.
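The trade-off behind mixed precision can be seen without a GPU. The sketch below uses Python's `struct` module to round values to IEEE half precision. It shows a tiny gradient underflowing to zero and a small weight update being lost when the weight is stored only in half precision, which is why mixed-precision setups usually keep a higher-precision master copy of the weights. Here a Python float (double precision) stands in for the single-precision master copy, which is an assumption of this example.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


# A very small gradient is not representable in half precision.
print(to_fp16(1e-8))  # 0.0

update = 1e-4
w16 = 1.0      # weight stored only in half precision
master = 1.0   # higher-precision master copy (stand-in for fp32)
for _ in range(10):
    w16 = to_fp16(w16 + update)  # rounds back to 1.0 on every step
    master += update             # accumulates the small updates

print(w16)              # 1.0: all ten updates were lost
print(to_fp16(master))  # greater than 1.0: the accumulated change survives the cast
```

The underflow in the first line is the problem that loss scaling addresses, while the lost updates motivate keeping master weights in higher precision.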

Gradient Accumulation

Megatron uses gradient accumulation to simulate larger batch sizes during training without increasing the memory needed per step. Gradients from several smaller micro-batches are summed before a single optimizer update, so the effective batch size grows while each forward and backward pass still fits in device memory.
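A minimal sketch of gradient accumulation, using a toy one-parameter model and plain SGD (both assumptions made for illustration): the batch is processed two samples at a time, the gradients are accumulated, and a single update is applied at the end. The result matches one update computed on the whole batch at once.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (input x, target y)


def mean_grad(w: float, batch: List[Sample]) -> float:
    """Mean-squared-error gradient for the toy model y_hat = w * x."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def step_with_accumulation(w: float, batch: List[Sample],
                           micro_batch_size: int, lr: float) -> float:
    """Process the batch in micro-batches, then apply one optimizer update."""
    accumulated = 0.0
    for start in range(0, len(batch), micro_batch_size):
        micro = batch[start:start + micro_batch_size]
        # Weight each micro-batch by its share of the full batch.
        accumulated += mean_grad(w, micro) * len(micro) / len(batch)
    return w - lr * accumulated  # single SGD update


batch = [(float(i), 2.0 * i) for i in range(1, 9)]  # 8 samples of y = 2x
w_large = 1.0 - 0.01 * mean_grad(1.0, batch)        # one pass over all 8 samples
w_accum = step_with_accumulation(1.0, batch, micro_batch_size=2, lr=0.01)
print(w_large, w_accum)  # the two updated weights agree
```

Only two samples are held in memory at a time in the accumulated version, yet the update is the same as for the batch of eight.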

Dynamic Loss Scaling

Megatron uses dynamic loss scaling to prevent underflow or overflow of the model's gradients during training. This technique adjusts the loss scale dynamically to maintain numerical stability and prevent training instabilities.
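The adjustment rule can be sketched as a small class. This is a generic illustration of dynamic loss scaling, not Megatron's implementation: the class name, the default values, and the parameter names (`growth_factor`, `backoff_factor`, `growth_interval`) are assumptions chosen for the example. The scale is halved whenever a gradient overflows, and doubled after a fixed number of consecutive clean steps.

```python
import math
from typing import List


class DynamicLossScaler:
    """Toy loss scaler: back off on overflow, grow after a run of clean steps."""

    def __init__(self, init_scale: float = 2.0 ** 16, growth_factor: float = 2.0,
                 backoff_factor: float = 0.5, growth_interval: int = 1000) -> None:
        self.scale = init_scale
        self.growth_factor = growth_factor
        self.backoff_factor = backoff_factor
        self.growth_interval = growth_interval
        self.good_steps = 0

    def update(self, grads: List[float]) -> bool:
        """Return True if the optimizer step should be applied, False if skipped."""
        if any(math.isinf(g) or math.isnan(g) for g in grads):
            self.scale *= self.backoff_factor  # overflow: shrink the scale, skip the step
            self.good_steps = 0
            return False
        self.good_steps += 1
        if self.good_steps >= self.growth_interval:
            self.scale *= self.growth_factor   # stable for a while: try a larger scale
            self.good_steps = 0
        return True


scaler = DynamicLossScaler(init_scale=2.0 ** 16, growth_interval=2)
applied = [scaler.update(g) for g in ([float("inf")], [0.1], [0.2])]
print(applied, scaler.scale)  # [False, True, True] 65536.0
```

In a training loop the loss is multiplied by `scaler.scale` before the backward pass and the gradients are divided by it before the optimizer step, which keeps small half-precision gradients from underflowing.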

Licensing

Megatron is an open-source project and is released under Apache License 2.0, which is a permissive open-source software license that allows for commercial use, modification, and distribution of the code. Therefore, Megatron can be used by anyone for commercial or non-commercial purposes.

Level of customization

Megatron is highly customizable, and users can modify the code to fit their specific use cases. It is written in Python using PyTorch and supports distributed training on multi-GPU and multi-node systems.

Available pre-trained model checkpoints

Several pre-trained checkpoints are available for Megatron. These checkpoints are trained on large datasets such as WebText, BooksCorpus, and WikiText-103 and can be used to fine-tune specific tasks. The pre-trained checkpoints are available for different model sizes, including Megatron-LM (3.6 billion parameters), Megatron-XL (5.8 billion parameters), and Megatron-11B (11 billion parameters). The checkpoints are available for download from the Megatron GitHub repository. Users can also use the pre-trained checkpoints to generate text using the model's autoregressive language generation capabilities.

Model Tasks

Megatron is a general-purpose language model architecture that can be used for a wide range of natural language processing (NLP) tasks. Here are some tasks that Megatron can perform:

Language Modeling

Megatron can be trained to predict the probability distribution of the next word in a sequence of text, which is the task of language modeling. This is typically done by training the model on a large corpus of text data and then using it to generate new text that is coherent and consistent with the input data.
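The idea of a probability distribution over the next word can be made concrete with a softmax over model scores. The four-word vocabulary and the scores below are made up for illustration; a real model would produce one score per entry in a vocabulary of tens of thousands of tokens.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


vocab = ["the", "cat", "sat", "mat"]
logits = [2.0, 0.5, 0.1, -1.0]  # made-up scores for the next word
probs = dict(zip(vocab, softmax(logits)))
next_word = max(probs, key=probs.get)

print(probs)
print(next_word)  # the
```

Picking the most probable word at every step is only one decoding strategy; sampling from the distribution instead produces more varied text.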

Text Generation

Megatron can be used for text generation tasks, such as machine translation, summarization, and dialogue generation. This involves training the model to generate coherent and natural-sounding text in response to a given prompt or input.

Question Answering

Megatron can be trained to answer questions based on a given context, which is the task of question answering. This involves training the model to extract relevant information from the input text and generate a natural-language answer to a given question.

Sentiment Analysis

Megatron can be used for sentiment analysis tasks, such as the classification of text into positive, negative, or neutral categories. This involves training the model to recognize patterns in the input text indicative of different sentiments.

Named Entity Recognition

Megatron can be trained to identify and extract named entities from text, such as people, places, and organizations. This involves training the model to recognize patterns in the input text indicative of named entities.

Text Classification

Megatron can be used for text classification tasks, such as spam detection or topic classification. This involves training the model to recognize patterns in the input text indicative of different categories.

Getting Started

Megatron LM is a state-of-the-art language modeling framework developed by NVIDIA that can train multi-billion parameter language models. It is based on the PyTorch deep learning framework and uses several advanced techniques to optimize training performance and memory usage.

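    git clone https://github.com/NVIDIA/Megatron-LM.git
    cd Megatron-LM
    pip install -r requirements.txt
    bash scripts/setup_env.sh
    python pretrain.py --config-file configs/pretrain/gpt2_345m.json

The script and configuration paths above are as given in this walkthrough and may not match the current repository layout. Consult the repository README for the exact entry points, such as pretrain_gpt.py.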

To install Megatron, follow these general steps:

- Ensure that Python 3.6 or later is installed on your system.

- Install PyTorch 1.7.1 or later.

- Install the required dependencies using pip.

- Clone the Megatron repository from GitHub.

- Change to the Megatron directory.

- Install Megatron using pip.

- Test the installation by running a pre-training script for the GPT-2 model.

It is important to note that the specific installation steps may vary depending on your system configuration and requirements. The Megatron documentation provides detailed instructions on installation and usage, along with troubleshooting tips. Once installed, Megatron can be used for various natural language processing tasks, such as language modeling, text generation, and sentiment analysis.
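Before cloning the repository, the first two prerequisites in the list above can be checked with a short script. The sketch below is an illustrative helper, not part of Megatron: the function names are hypothetical, and the minimum versions are simply those stated in the steps (Python 3.6 and PyTorch 1.7.1).

```python
import importlib.util
import re
import sys
from typing import Dict, Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn a version string such as '1.7.1+cu110' into (1, 7, 1)."""
    numbers = []
    for piece in text.split("+")[0].split(".")[:3]:
        match = re.match(r"\d+", piece)
        numbers.append(int(match.group()) if match else 0)
    return tuple(numbers)


def check_environment(min_python: Tuple[int, int] = (3, 6),
                      min_torch: Tuple[int, ...] = (1, 7, 1)) -> Dict[str, bool]:
    """Report whether Python and PyTorch meet the stated minimum versions."""
    report = {"python_ok": sys.version_info[:2] >= min_python}
    if importlib.util.find_spec("torch") is None:
        report["torch_ok"] = False  # PyTorch is not installed
        return report
    import torch
    report["torch_ok"] = parse_version(torch.__version__) >= min_torch
    return report


print(check_environment())
```

A result with `torch_ok` set to False means PyTorch is either missing or older than the stated minimum.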

Fine-tuning

There are several methods for fine-tuning Megatron language models, depending on the task and dataset. Here are a few common methods:

Supervised fine-tuning

This is the most common method for fine-tuning Megatron language models. In supervised fine-tuning, you train the model on a labeled dataset specific to your task (e.g., sentiment analysis, question answering, language translation). The goal is to fine-tune the pre-trained model to fit the specific task better and improve performance.

Unsupervised fine-tuning

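In unsupervised fine-tuning, the pre-trained model continues training on unlabeled text, typically drawn from the target domain, using the same language modeling objective as in pretraining. This adapts the model to domain-specific vocabulary and style when labeled data is unavailable.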

Transfer learning

Transfer learning involves fine-tuning a Megatron model on a related task or dataset and then transferring the knowledge gained to a different but related task or dataset. This can be particularly useful when you have limited labeled data for a specific task but have access to larger labeled datasets for related tasks.

Multi-task learning

Multi-task learning involves simultaneously fine-tuning a Megatron model on multiple related tasks. This can be useful when the tasks share common features or representations and can improve performance on all tasks by jointly learning across them.

Benchmarking

Benchmarking is an important process to evaluate the performance of any language model, including Megatron.

| Model | Trained Tokens Ratio | MNLI m/mm Accuracy (Dev Set) | QQP Accuracy (Dev Set) | SQuAD 1.1 F1 / EM (Dev Set) | SQuAD 2.0 F1 / EM (Dev Set) | RACE Accuracy (Test Set) |
| --- | --- | --- | --- | --- | --- | --- |
| RoBERTa | 2 | 90.2 / 90.2 | 92.2 | 94.6 / 88.9 | 89.4 / 86.5 | 83.2 (86.5 / 81.8) |
| ALBERT | 3 | 90.8 | 92.2 | 94.8 / 89.3 | 90.2 / 87.4 | 86.5 (89.0 / 85.5) |
| XLNet | 2 | 90.8 / 90.8 | 92.3 | 95.1 / 89.7 | 90.6 / 87.9 | 85.4 (88.6 / 84.0) |
| Megatron-336M | 1 | 89.7 / 90.0 | 92.3 | 94.2 / 88.0 | 88.1 / 84.8 | 83.0 (86.9 / 81.5) |
| Megatron-1.3B | 1 | 90.9 / 91.0 | 92.6 | 94.9 / 89.1 | 90.2 / 87.1 | 87.3 (90.4 / 86.1) |
| Megatron-3.9B | 1 | 91.4 / 91.4 | 92.7 | 95.5 / 90.0 | 91.2 / 88.5 | 89.5 (91.8 / 88.6) |
| ALBERT ensemble | - | - | - | 95.5 / 90.1 | 91.4 / 88.9 | 89.4 (91.2 / 88.6) |
| Megatron-3.9B ensemble | - | - | - | 95.8 / 90.5 | 91.7 / 89.0 | 90.9 (93.1 / 90.0) |
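The F1 / EM columns above report token-overlap F1 and exact match between a predicted answer and the reference answer. The sketch below shows the idea with a simplified normalization (lowercasing and whitespace splitting only). The official SQuAD evaluation script also strips punctuation and articles, so scores from this sketch are not directly comparable to the table.

```python
from collections import Counter


def exact_match(prediction: str, reference: str) -> float:
    """1.0 if the answers are identical after simple normalization, else 0.0."""
    return float(prediction.strip().lower() == reference.strip().lower())


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token-level precision and recall."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    common = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if common == 0:
        return 0.0
    precision = common / len(pred_tokens)
    recall = common / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


print(exact_match("Paris", "paris"))       # 1.0
print(token_f1("the cat sat", "the cat"))  # approximately 0.8
```

A benchmark score is the average of these per-question values over the whole evaluation set, expressed as a percentage.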

| Model | ARC-Challenge | ARC-Easy | RACE - middle | RACE - high | Winogrande | RTE | BoolQA | HellaSwag | PiQA |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Megatron-GPT 20B | 0.4403 | 0.6141 | 0.5188 | 0.4277 | 0.659 | 0.5704 | 0.6954 | 0.721 | 0.7688 |
| Megatron-GPT 1.3B | 0.3012 | 0.4596 | 0.459 | 0.3797 | 0.5343 | 0.5451 | 0.5979 | 0.4443 | 0.6934 |
| Megatron-GPT 5B | 0.3976 | 0.5566 | 0.5007 | 0.4171 | 0.6133 | 0.5812 | 0.6356 | 0.6298 | 0.7492 |

Sample Code 1

Running the model on a CPU

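    import torch
    import megatron

    # Set device to CPU
    device = torch.device("cpu")

    # Define the model configuration (a small, GPT-2-sized example)
    model_config = {
        "num_layers": 12,
        "hidden_size": 768,
        "num_attention_heads": 12,
        "max_position_embeddings": 512,
        "type_vocab_size": 2,
        "vocab_size": 50257,
    }

    # Initialize the model.
    # NOTE: `megatron.initialize_model` is kept from the original example and is an
    # assumed helper; check your installed Megatron-LM version for the actual entry point.
    model, _, _, _ = megatron.initialize_model(model_config)

    # Move the model to the CPU and switch to inference mode
    model.to(device)
    model.eval()

    # Define some example input data: one sequence of 512 random token ids
    input_ids = torch.randint(low=0, high=model_config["vocab_size"], size=(1, 512)).to(device)

    # Run the model on the input data without tracking gradients
    with torch.no_grad():
        output = model(input_ids)

    # Print the output
    print(output)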

Sample Code 2

Running the model on a GPU

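    import torch
    import megatron

    # Set device to GPU (requires a CUDA-capable GPU)
    device = torch.device("cuda")

    # Define the model configuration (a small, GPT-2-sized example)
    model_config = {
        "num_layers": 12,
        "hidden_size": 768,
        "num_attention_heads": 12,
        "max_position_embeddings": 512,
        "type_vocab_size": 2,
        "vocab_size": 50257,
    }

    # Initialize the model.
    # NOTE: `megatron.initialize_model` is kept from the original example and is an
    # assumed helper; check your installed Megatron-LM version for the actual entry point.
    model, _, _, _ = megatron.initialize_model(model_config)

    # Move the model to the GPU and switch to inference mode
    model.to(device)
    model.eval()

    # Define some example input data: one sequence of 512 random token ids
    input_ids = torch.randint(low=0, high=model_config["vocab_size"], size=(1, 512)).to(device)

    # Run the model on the input data without tracking gradients
    with torch.no_grad():
        output = model(input_ids)

    # Print the output
    print(output)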

Sample Code 3

Running the model on a GPU using different precisions - FP16

    import torch
    import megatron

    # Set device to GPU (requires a CUDA-capable GPU)
    device = torch.device("cuda")

    # Define the model configuration (a small, GPT-2-sized example)
    model_config = {
        "num_layers": 12,
        "hidden_size": 768,
        "num_attention_heads": 12,
        "max_position_embeddings": 512,
        "type_vocab_size": 2,
        "vocab_size": 50257,
    }

    # Define the precision
    precision = "fp16"  # Can be "fp32" or "bf16" as well

    # Initialize the model with the specified precision.
    # NOTE: `megatron.initialize_model` and its `fp16` / `bf16` arguments are kept from
    # the original example and are assumed; check your installed Megatron-LM version.
    model, _, _, _ = megatron.initialize_model(
        model_config,
        fp16=(precision == "fp16"),
        bf16=(precision == "bf16"),
    )

    # Move the model to the GPU and switch to inference mode
    model.to(device)
    model.eval()

    # Define some example input data: one sequence of 512 random token ids
    input_ids = torch.randint(low=0, high=model_config["vocab_size"], size=(1, 512)).to(device)

    # Run the model on the input data without tracking gradients
    with torch.no_grad():
        output = model(input_ids)

    # Print the output
    print(output)

Limitations

While the Megatron language model is powerful and versatile, it has limitations. Here are a few of them:

Computational resources

The Megatron language model requires significant computational resources, including powerful GPUs and large amounts of memory. This can make it difficult or expensive for some users to train or fine-tune the model.

Data requirements

The Megatron language model is trained on massive amounts of data, which can make it difficult to fine-tune on smaller or more specialized datasets. Additionally, some tasks may require specialized or domain-specific data that is not well represented in large pre-training datasets.

Interpretability

Like other deep learning models, the Megatron language model can be difficult to interpret. This makes it challenging to understand how the model arrives at its predictions or to diagnose errors and other issues.

Bias

As with any language model, the Megatron language model can reflect biases present in the data it is trained on or introduced by how it is fine-tuned. It is important to carefully consider potential sources of bias and take steps to mitigate them.

Fine-tuning process

Fine-tuning the Megatron language model can require careful tuning of hyperparameters, such as learning rate and batch size, and may require multiple iterations to achieve optimal performance. Additionally, the fine-tuning process may be time-consuming and require significant computational resources.